Key Takeaways

- Autonomous agents require a foundational ethical framework that spans from design to deployment. Reactive compliance is insufficient; proactive ethical alignment is essential for fostering trust and sustainability.

- Every agentic decision should be explainable and traceable. Organizations must define clear responsibility hierarchies and implement audit trails to ensure human accountability remains intact.

- Training agents on historical or unbalanced datasets can perpetuate existing biases and discrimination. Ethical deployment demands fairness audits, diverse training sets, and bias mitigation at every model iteration.

- Agents that handle user data must adhere to privacy-by-design principles, ensure informed consent, and implement mechanisms for user control, anonymization, and transparency.

- Autonomy should never mean unchecked control. Human-in-the-loop (HITL) and human-on-the-loop (HOTL) safeguards, autonomy boundaries, and override capabilities are vital to ensure agents augment human judgment rather than replace it.

The rise of autonomous agents is transforming the way modern enterprises operate. From automating decisions in procurement and finance to enhancing interactions in customer service and healthcare, these agents are more than just tools—they are actors in the decision-making loop. But with increasing autonomy comes a greater ethical responsibility.

When a digital agent takes an action—be it approving a loan, scheduling a surgery, or flagging a security risk—it does so independently. The complexity and opacity of such systems create a dilemma: How do we ensure these decisions are just, fair, and accountable?

This article examines the ethical implications of deploying autonomous agents, providing insights and frameworks to navigate these challenges responsibly.


What Are Autonomous Agents?

Autonomous agents are AI-driven software systems capable of performing tasks and making decisions without direct human input. They operate on predefined goals, sense their environment (via APIs, data feeds, and sensors), and adapt behavior based on changing inputs.

Unlike traditional rule-based bots that require human-defined instructions for every scenario, autonomous agents can:

- Learn from data.

- Interact with users and systems.

- Plan and prioritize actions dynamically.

- Collaborate with other agents in multi-agent systems.

These agents are now deployed in areas like:

- Insurance claims adjudication

- Intelligent procurement approvals

- Logistics optimization

- Real-time fraud detection

While these agents boost productivity, their decisions are often made beyond human visibility, which raises ethical concerns.

Why Does Ethics Matter in Autonomous Agent Deployment?

The shift from automation to autonomy transforms not only workflows but also power dynamics. Machines now possess the agency to influence outcomes with long-term impacts.

Consider these examples:

- A healthcare agent erroneously flags a patient as high-risk, delaying treatment.

- A credit-scoring agent denies a loan based on outdated or biased data.

- A customer service bot escalates complaints ineffectively, damaging brand trust.

The costs of failing at ethical oversight include:

- Regulatory fines (GDPR, HIPAA, FCRA violations)

- Loss of trust among customers and employees

- Brand erosion due to bad publicity or lawsuits

- AI model degradation due to feedback loops and bias amplification

In short, ethical deployment isn’t optional—it is mission-critical for enterprises relying on AI-driven decision-making.

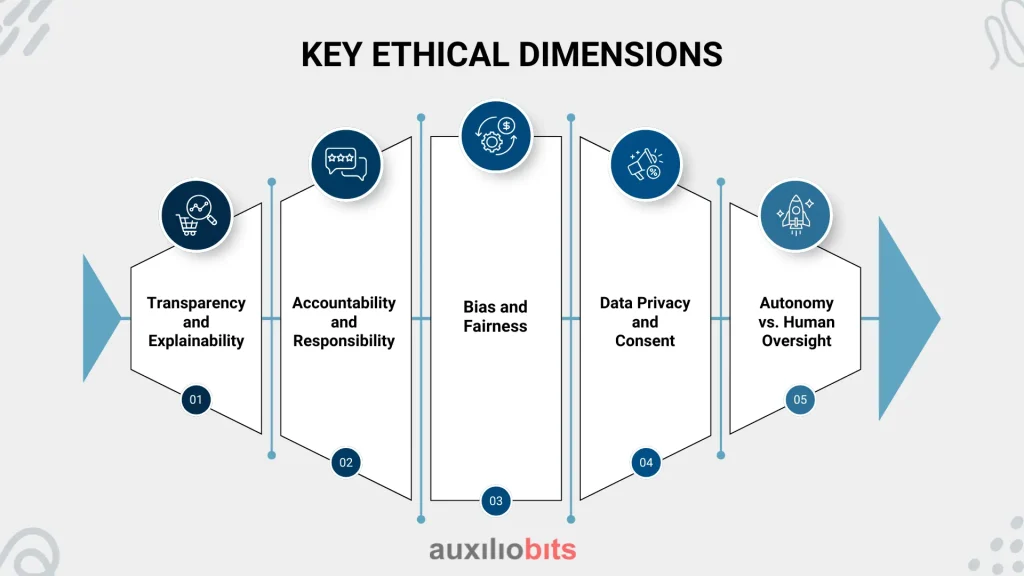

Key Ethical Dimensions

The key ethical dimensions are as follows:

1. Transparency and Explainability

One of the most pressing ethical concerns is the lack of interpretability in how agents make decisions. This is especially true for agents built using deep neural networks or transformer-based models.

- Users may feel alienated when outcomes cannot be explained.

- Developers struggle to troubleshoot or improve opaque systems.

- Regulators may reject agent-based decisions lacking rationale.

Ethical design approach:

- Use explainable AI (XAI) techniques such as SHAP, LIME, or counterfactual explanations.

- Create decision traceability logs that record how an agent arrived at its conclusion.

- Provide users with simplified decision summaries in high-impact scenarios (e.g., medical or financial).
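To make counterfactual explanations concrete, here is a minimal sketch assuming a hypothetical linear credit-scoring agent (the `WEIGHTS`, `BIAS`, `THRESHOLD`, and feature names are illustrative, not taken from any real system). For a linear score, the single-feature change that flips a rejection can be solved in closed form, and the result doubles as a simplified, user-facing decision summary.

```python
# Hypothetical linear credit-scoring agent: score = BIAS + sum(weight * feature).
# Weights, bias, threshold, and feature names are illustrative assumptions.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.2}
BIAS = -1.0
THRESHOLD = 0.0  # the agent approves when score >= THRESHOLD


def score(applicant: dict[str, float]) -> float:
    """Linear decision score for one applicant."""
    return BIAS + sum(w * applicant[name] for name, w in WEIGHTS.items())


def counterfactuals(applicant: dict[str, float]) -> dict[str, float]:
    """For each feature alone (others held fixed), the value at which the
    decision flips. Solving w * delta = gap gives delta = gap / w."""
    gap = THRESHOLD - score(applicant)
    return {name: applicant[name] + gap / w for name, w in WEIGHTS.items() if w != 0}


def summary(applicant: dict[str, float]) -> str:
    """Simplified, user-facing explanation of the decision."""
    if score(applicant) >= THRESHOLD:
        return "Approved."
    options = [
        f"{name} were {value:.2f} (currently {applicant[name]:.2f})"
        for name, value in counterfactuals(applicant).items()
    ]
    return "Not approved. The outcome would change if " + ", or if ".join(options) + "."


applicant = {"income_k": 40.0, "debt_ratio": 0.5, "years_employed": 2.0}
print(summary(applicant))
```

For nonlinear models, counterfactual search is usually an optimization problem, and practical tools also restrict suggestions to changes a user can actually make (years of employment, for example, cannot change on demand).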

2. Accountability and Responsibility

A common trap is the “responsibility vacuum”: when a system fails, no one clearly owns the outcome.

For instance, who is accountable:

- The data scientist who trained the model?

- The product manager who defined the goal?

- Or the enterprise that deployed it?

Best practice:

- Maintain a transparent chain of responsibility with defined roles and escalation paths.

- Set up AI Ethics Boards within organizations to review and approve agent deployment pipelines.

- Conduct periodic agent audits to evaluate post-deployment behavior and anomalies.
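One way to make the chain of responsibility enforceable is to register an accountable owner and escalation path for every agent before it runs. The sketch below assumes a hypothetical in-memory `REGISTRY`, agent ID, and role names; in practice this mapping would live in a service catalog or governance tool.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class ResponsibilityChain:
    """Accountable owners for one agent, ordered from first responder upward."""
    agent_id: str
    escalation_path: list[str]

    def owners_for(self, severity: Severity) -> list[str]:
        # Each severity level pulls in one more level of the chain.
        depth = min(int(severity), len(self.escalation_path))
        return self.escalation_path[:depth]


# Hypothetical registry; agent IDs and role names are illustrative.
REGISTRY: dict[str, ResponsibilityChain] = {
    "loan-agent-v2": ResponsibilityChain(
        agent_id="loan-agent-v2",
        escalation_path=["model owner", "product manager", "AI ethics board"],
    ),
}


def route_incident(agent_id: str, severity: Severity) -> list[str]:
    """Return who must respond to an incident. An agent with no registered owner
    raises instead of going unrouted, because that gap is the responsibility vacuum."""
    chain = REGISTRY.get(agent_id)
    if chain is None or not chain.escalation_path:
        raise LookupError(f"No accountable owner registered for {agent_id!r}")
    return chain.owners_for(severity)


print(route_incident("loan-agent-v2", Severity.MEDIUM))
```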

3. Bias and Fairness

Agents trained on historical or unbalanced datasets tend to inherit the biases within them. This is especially dangerous when decisions relate to:

- Gender (e.g., underwriting, hiring)

- Race or ethnicity (e.g., facial recognition)

- Geography (e.g., access to credit or subsidies)

Real-world fallout:

In one well-documented case, an AI hiring tool favored male candidates because the training data had more male applicants from past hiring decisions.

Mitigation:

- Use bias detection metrics such as the disparate impact ratio, statistical parity difference, or equal opportunity difference.

- Diversify training data across relevant demographic groups.

- Employ fairness-aware ML algorithms that correct or down-weight biased signals.
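The three metrics named above can be computed directly from an agent's logged decisions. This is a minimal, dependency-free sketch assuming binary outcomes (1 = favorable, such as an approval) and a single protected attribute with one designated privileged group; libraries such as Fairlearn and AIF360 provide tested implementations of these and related metrics.

```python
def _rate(values: list[int]) -> float:
    """Share of 1s in a list of 0/1 outcomes (NaN if the list is empty)."""
    return sum(values) / len(values) if values else float("nan")


def fairness_report(
    y_true: list[int], y_pred: list[int], group: list[str], privileged: str
) -> dict[str, float]:
    """Group fairness metrics for binary decisions (1 = favorable outcome).
    Every group other than `privileged` is pooled as the unprivileged group."""
    priv = [i for i, g in enumerate(group) if g == privileged]
    unpriv = [i for i, g in enumerate(group) if g != privileged]

    # Selection rate: P(favorable decision | group)
    sel_priv = _rate([y_pred[i] for i in priv])
    sel_unpriv = _rate([y_pred[i] for i in unpriv])

    # True positive rate: P(favorable decision | truly qualified, group)
    tpr_priv = _rate([y_pred[i] for i in priv if y_true[i] == 1])
    tpr_unpriv = _rate([y_pred[i] for i in unpriv if y_true[i] == 1])

    return {
        "statistical_parity_difference": sel_unpriv - sel_priv,
        "disparate_impact_ratio": sel_unpriv / sel_priv if sel_priv else float("nan"),
        "equal_opportunity_difference": tpr_unpriv - tpr_priv,
    }


report = fairness_report(
    y_true=[1, 1, 0, 0, 1, 1, 0, 0],
    y_pred=[1, 1, 1, 0, 1, 0, 0, 0],
    group=["A"] * 4 + ["B"] * 4,
    privileged="A",
)
# Selection rates are 0.75 (A) and 0.25 (B); qualified approvals are 1.0 (A) and 0.5 (B).
print(report)
```

A disparate impact ratio below 0.8 is commonly flagged under the "four-fifths rule" from US employment guidance, while the two difference metrics should ideally sit close to zero.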

4. Data Privacy and Consent

Autonomous agents thrive on data, but the rights to that data are often unclear or violated.

For example:

- Agents pulling user data from connected systems without renewed consent.

- Chatbots capturing sensitive personally identifiable information (PII) during conversations.

- Supply chain bots accessing proprietary vendor data without authorization.

Ethical imperatives:

- Use differential privacy, encryption, and data minimization techniques.

- Obtain explicit and purpose-bound consent before data ingestion.

- Make data usage auditable and user-reversible.
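Purpose-bound consent and data minimization can both be enforced at the point of ingestion. The sketch below assumes a hypothetical `ALLOWED_FIELDS` policy that maps each processing purpose to the only fields it needs, plus an in-memory consent registry whose log records grants, data use, and revocations so they can be audited.

```python
from dataclasses import dataclass, field

# Hypothetical policy: each processing purpose may read only these fields.
ALLOWED_FIELDS: dict[str, set[str]] = {
    "order_support": {"order_id", "email"},
    "fraud_check": {"order_id", "ip_address", "amount"},
}


@dataclass
class ConsentRegistry:
    """Explicit, purpose-bound consent per user, with an audit trail."""
    grants: dict[str, set[str]] = field(default_factory=dict)
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def grant(self, user_id: str, purpose: str) -> None:
        self.grants.setdefault(user_id, set()).add(purpose)
        self.audit_log.append((user_id, purpose, "granted"))

    def revoke(self, user_id: str, purpose: str) -> None:
        self.grants.get(user_id, set()).discard(purpose)
        self.audit_log.append((user_id, purpose, "revoked"))

    def allows(self, user_id: str, purpose: str) -> bool:
        return purpose in self.grants.get(user_id, set())


def ingest(record: dict, user_id: str, purpose: str, consents: ConsentRegistry) -> dict:
    """Admit data only with consent for this purpose, keeping only the fields it needs."""
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"Unknown purpose: {purpose!r}")
    if not consents.allows(user_id, purpose):
        raise PermissionError(f"No consent from {user_id!r} for {purpose!r}")
    consents.audit_log.append((user_id, purpose, "ingested"))
    # Data minimization: drop every field the purpose does not require.
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS[purpose]}
```

Because revocation removes the grant, any later ingestion for that purpose fails; erasing data that was already collected would need a separate deletion step.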

5. Autonomy vs. Human Oversight

Autonomous agents must know when to defer to humans.

Without human-in-the-loop (HITL) or human-on-the-loop (HOTL) safeguards:

- Misjudgments go unchecked.

- Novel scenarios cause unintended behavior.

- Overreliance reduces human skill and situational awareness.

Recommendation:

- Define autonomy boundaries: what the agent can and cannot decide.

- Implement override mechanisms that empower users to interrupt or roll back actions.

- Continuously monitor agent behavior for drift or unexpected patterns.
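Autonomy boundaries and override mechanisms can be expressed as a thin supervisory layer around the agent. This sketch assumes a hypothetical procurement agent whose actions carry an amount and a confidence score; `MAX_AUTO_AMOUNT` and `MIN_CONFIDENCE` are placeholder thresholds an organization would set for itself. Actions outside the boundary go to a human review queue (HITL), and actions inside it can still be rolled back by a human afterward (HOTL).

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical autonomy boundary for a procurement-approval agent.
MAX_AUTO_AMOUNT = 10_000.0  # above this amount, a human must approve
MIN_CONFIDENCE = 0.90       # below this confidence, a human must approve


@dataclass
class Action:
    action_id: str
    amount: float
    confidence: float
    undo: Callable[[], None]  # how to reverse the action if a human overrides it


@dataclass
class Supervisor:
    executed: dict[str, Action] = field(default_factory=dict)
    review_queue: list[Action] = field(default_factory=list)

    def submit(self, action: Action, execute: Callable[[], None]) -> str:
        """Execute inside the boundary; otherwise defer to a human reviewer."""
        if action.amount > MAX_AUTO_AMOUNT or action.confidence < MIN_CONFIDENCE:
            self.review_queue.append(action)
            return "escalated"
        execute()
        self.executed[action.action_id] = action
        return "executed"

    def override(self, action_id: str) -> None:
        """Human override: roll back an action the agent already executed."""
        action = self.executed.pop(action_id)
        action.undo()
```

Requiring every action to carry its own `undo` makes reversibility a precondition of autonomy: an action that cannot be undone is a natural candidate for always requiring human approval.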

Sector-Specific Ethical Risks

Healthcare

- Agents analyzing Electronic Health Records (EHRs) must comply with HIPAA.

- Misdiagnosis or prescription errors can cause direct harm.

- Explainability is crucial—physicians must understand recommendations.

Finance

- Algorithmic trading agents can trigger flash crashes or exploit micro-patterns.

- Credit scoring and underwriting agents may inadvertently reproduce redlining practices.

- Depending on jurisdiction, agents must comply with laws such as the FCRA and ECOA (US) and the PSD2 directive (EU).

Government and Public Services

- Predictive policing or welfare eligibility agents risk algorithmic discrimination.

- Citizens often don’t have visibility into agentic decisions.

- These agents demand public accountability and open auditing mechanisms.

Governance and Regulatory Landscape

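- EU AI Act: Takes a risk-based approach, classifying AI systems used in sensitive areas (such as credit scoring, hiring, and access to public services) as “high-risk” and mandating documentation, transparency, and human oversight for them.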

- OECD AI Principles: Stress robustness, safety, and accountability.

- Blueprint for an AI Bill of Rights (US): Offers non-binding principles covering privacy, safety, and non-discrimination.

- India’s Digital Personal Data Protection (DPDP) Act, 2023: Focuses on user consent, purpose limitation, and legal recourse for data violations.

Challenge: Regulation lags behind technology. Therefore, businesses must self-regulate responsibly until standardized global norms catch up.

Designing Ethically-Aligned Autonomous Agents

Ethical agent design requires collaboration between engineering and governance. Here’s how to bake ethics into design:

| Ethical Pillar | Design Strategy |
| --- | --- |
| Fairness | Regularly test for demographic parity in decisions |
| Explainability | Integrate visual explanation tools (e.g., explainer dashboards) |
| Privacy | Deploy zero-trust architectures and anonymized data flows |
| Accountability | Record every decision with agent ID and confidence score |
| Control | Set escalation triggers, rollback functions, and user overrides |
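The accountability row can be implemented as an append-only log in which each entry records the agent ID and confidence score and is chained to the previous entry by a SHA-256 hash. This is a minimal sketch with hypothetical field names. Hash chaining makes edits to earlier entries detectable, but anyone able to rewrite the whole log could recompute the chain, so production systems typically also keep entries or periodic hashes in write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class DecisionLog:
    """Append-only decision log; each entry stores the previous entry's hash,
    so editing or deleting an earlier entry breaks verification."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, agent_id: str, decision: str, confidence: float, inputs: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "agent_id": agent_id,
            "decision": decision,
            "confidence": confidence,
            "inputs": inputs,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, in a stable key order.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True
```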

Additionally, enterprises can use Ethical Impact Assessments (EIAs)—similar to environmental impact studies—for every critical agent deployment.

Case Study: Ethical Failure in Agentic Deployment

The LoanBot Debacle

In 2023, a prominent fintech launched “LoanBot” to autonomously assess micro-loan applications. It was trained on internal historical approval data.

What went wrong?

- Rejected women and minority applicants at twice the rate of other applicants.

- Lacked explainability: Users received “not approved” messages without reasons.

- No appeals process existed.

Consequences:

- Consumer backlash on social media.

- Lawsuits under anti-discrimination laws.

- A regulatory audit forced a shutdown.

Root Cause:

- No bias audits.

- Absence of an ethics review board.

- Overdependence on historical (biased) data.

Key Learning: Always include ethics as a deployment stage, not a post-launch reaction.

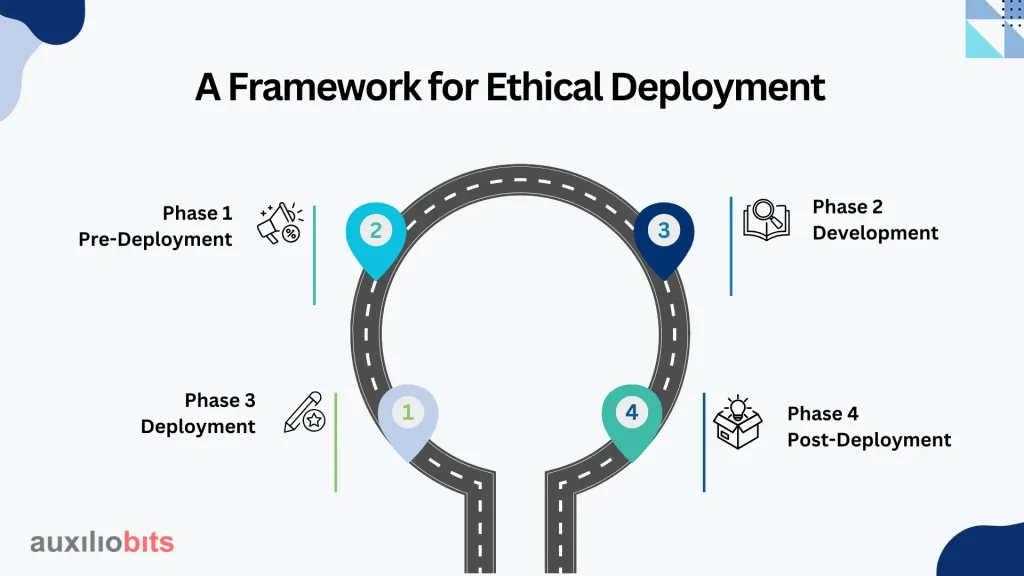

A Framework for Ethical Deployment

Deploying autonomous agents ethically requires a repeatable framework:

Phase 1: Pre-Deployment

- Conduct ethical risk assessments early.

- Identify stakeholders affected (users, customers, regulators).

- Set boundaries for agent autonomy.

Phase 2: Development

- Integrate ethics into data pipelines and model selection.

- Implement audit logs, explainability modules, and bias checks.

- Form interdisciplinary development teams.
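Audit logs, explainability modules, and bias checks are easiest to enforce when they become a release gate in the build pipeline. The sketch below is hypothetical: the `ReleaseCandidate` fields and the 0.8 disparate-impact floor are assumptions that an ethics board would replace with its own criteria.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReleaseCandidate:
    """Hypothetical evidence attached to an agent build before it is promoted."""
    agent_id: str
    disparate_impact_ratio: float
    audit_logging_enabled: bool
    explanation_module: bool
    accountable_owner: Optional[str]


def release_gate(rc: ReleaseCandidate, min_dir: float = 0.8) -> list[str]:
    """Return blocking issues; an empty list means the build may move to a sandbox pilot."""
    issues = []
    # A NaN ratio (e.g. no favorable outcomes in the reference group) also blocks.
    if math.isnan(rc.disparate_impact_ratio) or rc.disparate_impact_ratio < min_dir:
        issues.append(f"disparate impact ratio {rc.disparate_impact_ratio:.2f} below {min_dir}")
    if not rc.audit_logging_enabled:
        issues.append("audit logging disabled")
    if not rc.explanation_module:
        issues.append("no explainability module")
    if not rc.accountable_owner:
        issues.append("no accountable owner")
    return issues
```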

Phase 3: Deployment

- Pilot in sandbox environments.

- Enable real-time monitoring dashboards.

- Provide user feedback loops and support channels to ensure seamless communication.
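A monitoring dashboard needs signals that show when live inputs stop resembling the data an agent was validated on. One widely used drift signal is the Population Stability Index (PSI): the sum over bins of (a − e) × ln(a / e), where e and a are the baseline and live proportions in each bin. This sketch assumes equal-width bins over the baseline's range; a commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, though thresholds should be tuned per feature.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a live sample.
    Bins are equal-width over the baseline's range; live values outside that
    range are clamped into the edge bins."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]
live = [0.5 + i / 200 for i in range(100)]  # live inputs shifted upward
print(round(psi(baseline, live), 2))
```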

Phase 4: Post-Deployment

- Audit decisions periodically.

- Conduct ethical retrospectives every 3–6 months.

- Measure trust scores via customer sentiment or Net Promoter Score (NPS).
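Net Promoter Score is a simple calculation: the percentage of promoters (ratings of 9 or 10 on a 0 to 10 scale) minus the percentage of detractors (ratings of 0 to 6). The sketch below also compares agent-handled with human-handled interactions; that segmentation and the `trust_gap` helper are illustrative assumptions, not a standard metric.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """NPS from 0-10 survey ratings: % promoters (9-10) minus % detractors (0-6)."""
    if not ratings:
        raise ValueError("no ratings")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(r >= 9 for r in ratings)
    detractors = sum(r <= 6 for r in ratings)
    return 100.0 * (promoters - detractors) / len(ratings)


def trust_gap(agent_ratings: list[int], human_ratings: list[int]) -> float:
    """Hypothetical helper: negative values mean customers rate
    agent-handled interactions lower than human-handled ones."""
    return net_promoter_score(agent_ratings) - net_promoter_score(human_ratings)
```

Tracking the gap across each 3 to 6 month retrospective shows whether trust in the agent is improving relative to the human baseline, not just in absolute terms.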

Final Thoughts

The march toward Agentic AI is inevitable, but responsible AI is not automatic.

Ethics must be a proactive design principle, not a last-minute compliance checkbox. Enterprises that embrace ethical deployment now will be better positioned to:

- Earn long-term stakeholder trust.

- Avoid legal and regulatory blowback.

- Innovate responsibly and inclusively.

Agentic systems are not just tech assets—they are digital actors representing your brand, culture, and values. Make sure they stand for the right things.