Key Takeaways

- RPA programmes that ground discovery in process‑ and task‑mining data achieve up to 55 % higher year‑one ROI because they automate the right workstreams and eliminate hidden exceptions early.

- Combining process mining (macro flow), task mining (micro actions), and automated business insights (textual context) uncovers both systemic bottlenecks and human‑centric pain points, far beyond what interviews reveal.

- Metrics like activity frequency, 95th‑percentile handle time, rework ratio, and the Automation Suitability Index (ASI) translate technical findings into CFO‑friendly numbers, ensuring stakeholder buy‑in and realistic business cases.

- Role‑based data masking, audit‑trail versioning, and strict data‑residency controls keep discovery compliant while building trust with IT, security, and legal teams.

- Treat your discovery outputs as code—versioned, continuously refreshed, and API‑accessible—so process models evolve with the business and your bots stay aligned with real‑world work.

Robotic Process Automation (RPA) promises rapid efficiency gains, yet even the most sophisticated bot underperforms when pointed at a poorly understood workflow. Process discovery—the disciplined effort to map, measure, and prioritise how work is done—forms the critical foundation.

Too many teams treat discovery as a brief workshop: interview a few subject‑matter experts (SMEs), sketch a swim‑lane diagram, and call it done. Six months later, the bot stalls because an “edge case” that nobody mentioned surfaces every Tuesday. This post delivers a battle‑tested playbook that blends data‑first process mining, task‑level observation, and behavioural analytics.

Whether you’re a Centre of Excellence leader or a business analyst drafting your first automation charter, the guide below will help you uncover hidden variants, quantify automation value, and de‑risk delivery before a single line of bot code is written.


Why Process Discovery Matters More Than Design

- Risk Compression – Early identification of exception paths prevents costly rework late in the lifecycle.

- ROI Fidelity – Quantitative discovery (e.g., activity frequency, cycle time) transforms back‑of‑envelope savings into CFO‑grade business cases.

- Change Empathy – Mapping human touchpoints clarifies whose daily tasks will shift, enabling smoother adoption.

- Compliance Hygiene – Event logs expose undocumented “quick fixes” that violate policy long before auditors do.

- Design Acceleration – Clean, validated requirements shave weeks off bot sprints and slash debugging hours.

Real‑world stat: A 2024 McKinsey survey showed that RPA programmes with formal discovery practices achieved 55 % higher year‑one ROI than those without.

Common Pitfalls That Derail RPA Projects

Even mature organisations stumble. Watch for these traps and the subtle ways they manifest:

| Pitfall | How It Shows Up | Preventive Action |
| --- | --- | --- |
| Happy‑Path Bias | SMEs describe the ideal flow; logs reveal 27 variants. | Validate interview findings with process‑mining heatmaps. |
| Workflow Myopia | Automating AP ignores that Purchasing feeds dirty data. | Expand discovery boundaries upstream and downstream. |
| Shadow‑IT Blind Spots | VBA macros silently transform data before the bot sees it. | Run desktop scans for hidden scripts; document every workaround. |
| Sample‑Size Fallacy | Observing two clerks for a day misses quarter‑end spikes. | Capture at least one full fiscal quarter of logs (e.g., 13 weeks). |
| Documentation Lag | Visio diagrams are stale within a month of sign‑off. | Store mined models in a versioned repository and refresh quarterly. |

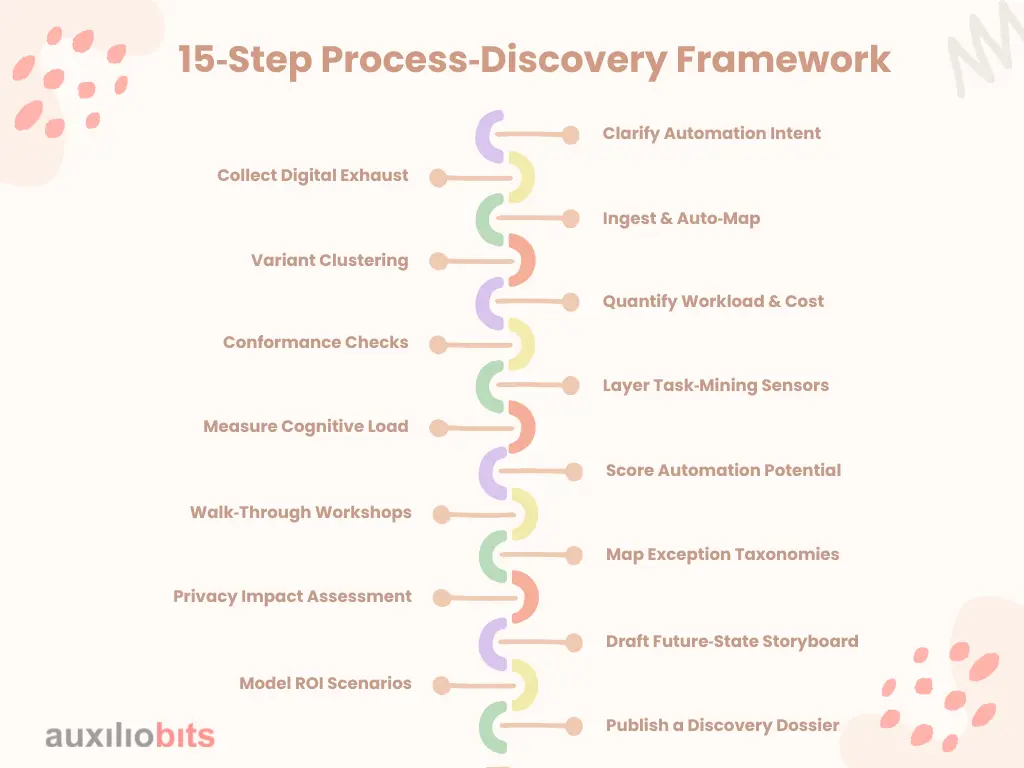

15‑Step Process‑Discovery Framework

Below is the step‑by‑step checklist we use on enterprise engagements. Each step carries a “why it matters” annotation so stakeholders grasp the payoff.

- Clarify Automation Intent – Align on the problem, success metrics, and constraints. Why? Prevents scope creep and unrealistic ROI promises.

- Collect Digital Exhaust – Export system logs, ERP event tables, and audit trails. Why? Logs tell the unvarnished truth, unlike interviews.

- Ingest & Auto‑Map – Feed data into a process‑mining engine to generate the “as‑is” model. Why? Produces a defensible visual starting point.

- Variant Clustering – Group similar but not identical paths using frequency filters. Why? Highlights hidden complexity that bleeds maintenance budgets.

- Quantify Workload & Cost – Attach handle time and FTE cost to each variant. Why? Converts tech insights into finance language.

- Conformance Checks – Compare mined flows to SOPs to reveal policy drift. Why? Flags governance risks early.

- Layer Task‑Mining Sensors – Capture keystrokes and screen actions on high‑variance steps. Why? Surfaces micro‑tasks that slow bots, especially in Citrix environments.

- Measure Cognitive Load – Track window switches, scroll depth, and mouse‑travel distance. Why? High‑friction steps are prime automation targets.

- Score Automation Potential – Use a weighted model (frequency × rule‑basedness × system stability). Why? Prioritises quick wins versus deep refactors.

- Walk‑Through Workshops – Play mined recordings to SMEs, capturing context and tacit knowledge. Why? Validates findings and builds stakeholder buy‑in.

- Map Exception Taxonomies – Categorise data errors, approvals, and external dependencies. Why? Each category demands a distinct automation approach.

- Privacy Impact Assessment – Identify PII in task‑mining footage and plan masking. Why? Avoids legal headaches, especially under GDPR/CCPA.

- Draft Future‑State Storyboard – Show hand‑offs between bots, humans, and AI services. Why? Makes the automation vision tangible and fosters alignment.

- Model ROI Scenarios – Build base, conservative, and aggressive projections, including rework avoidance. Why? Equips leadership with decision‑ready options.

- Publish a Discovery Dossier – Store in a knowledge repo with version control. Why? Creates a single source of truth for dev, ops, and auditors.
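Steps 2 to 4 (collect, auto‑map, cluster) rest on a simple idea: group events by case, order them in time, and count the distinct activity sequences. The Python sketch below assumes a tiny hypothetical AP log with case ID, activity, and timestamp columns; `extract_variants` and `filter_variants` are illustrative names, not any vendor's API. It counts exact variants and then applies a frequency filter, whereas commercial mining engines also cluster near‑identical paths.

```python
from collections import Counter, defaultdict

# Hypothetical AP event log: (case_id, activity, ISO-8601 timestamp) rows,
# the minimum columns most process-mining tools expect.
EVENTS = [
    ("C1", "Receive Invoice", "2024-01-02T09:00"),
    ("C1", "Match PO", "2024-01-02T09:30"),
    ("C1", "Post Invoice", "2024-01-02T10:00"),
    ("C2", "Receive Invoice", "2024-01-02T09:05"),
    ("C2", "Match PO", "2024-01-02T09:40"),
    ("C2", "Post Invoice", "2024-01-02T10:10"),
    ("C3", "Receive Invoice", "2024-01-03T11:00"),
    ("C3", "Match PO", "2024-01-03T11:20"),
    ("C3", "Request Approval", "2024-01-03T12:00"),
    ("C3", "Post Invoice", "2024-01-03T15:00"),
]


def extract_variants(events):
    """Order each case's events by time and count identical activity sequences."""
    cases = defaultdict(list)
    for case_id, activity, ts in events:
        cases[case_id].append((ts, activity))
    variants = Counter()
    for steps in cases.values():
        steps.sort()  # same-format ISO-8601 strings sort chronologically
        variants[tuple(activity for _, activity in steps)] += 1
    return variants


def filter_variants(variants, min_share):
    """Frequency filter: keep variants that cover at least `min_share` of all cases."""
    total = sum(variants.values())
    return {v: n for v, n in variants.items() if n / total >= min_share}


if __name__ == "__main__":
    variants = extract_variants(EVENTS)
    for path, n in variants.most_common():
        print(n, " -> ".join(path))
    print(filter_variants(variants, min_share=0.5))
```

Here two cases follow the three‑step happy path and one takes an approval detour; a 50 % share filter keeps only the happy path, which is exactly why low‑frequency variants deserve a separate look before they are filtered away.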

Advanced Techniques the Industry Rarely Talks About

Digital Twin A/B Replay

- Spin up a virtual copy of production systems, replay six months of logs, and insert hypothetical bot actions.

- Outcome: Predict where bottlenecks migrate when 80 % of cycle time disappears from Step 3.
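A full digital twin replays logs through a simulated system, which is well beyond a blog snippet. The underlying question can still be approximated with a capacity calculation: in a serial flow, the step with the lowest hourly capacity is the bottleneck. The sketch below uses hypothetical handle times and staffing and ignores queueing, variability, and rework, so treat it as a back‑of‑envelope check rather than a replay.

```python
# Hypothetical serial process: step -> (handle minutes per case, staff assigned).
STEPS = {
    "Step 1": (6.0, 2),
    "Step 2": (9.0, 3),
    "Step 3": (20.0, 3),
    "Step 4": (8.0, 2),
}


def capacity_per_hour(handle_min, staff):
    """Cases per hour a step can clear with its current staffing."""
    return staff * 60.0 / handle_min


def bottleneck(steps):
    """In a serial flow, the step with the lowest hourly capacity caps throughput."""
    return min(steps, key=lambda name: capacity_per_hour(*steps[name]))


def with_bot(steps, step, time_saved):
    """Copy of `steps` where `step` loses `time_saved` (a 0-1 fraction) of its handle time."""
    handle, staff = steps[step]
    changed = dict(steps)
    changed[step] = (handle * (1 - time_saved), staff)
    return changed


if __name__ == "__main__":
    print("Before:", bottleneck(STEPS))
    print("After:", bottleneck(with_bot(STEPS, "Step 3", 0.80)))
```

With these illustrative numbers, Step 3 (9 cases per hour) is the bottleneck; cutting its handle time by 80 % lifts it to 45 cases per hour and shifts the constraint to Step 4 (15 cases per hour).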

Neuro‑Ergonomic Task Scoring

- Use eye‑tracking during task mining to grade visual clutter.

- Insight: Steps with high saccade counts often merit UI automation instead of brittle image recognition.
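Saccade counts are usually derived from raw gaze samples with a velocity‑threshold (I‑VT) classifier: any eye movement faster than a threshold is treated as a saccade. A minimal Python sketch, assuming gaze coordinates already converted to degrees of visual angle and an assumed 30 degrees per second threshold (real studies tune this per device and sampling rate):

```python
import math


def count_saccades(samples, threshold_deg_per_s=30.0):
    """Count saccades with a velocity-threshold (I-VT style) rule.

    samples: time-ordered (t_seconds, x_deg, y_deg) gaze points, in degrees of visual angle.
    Consecutive above-threshold intervals are merged into a single saccade.
    """
    saccades = 0
    in_saccade = False
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        velocity = math.hypot(x1 - x0, y1 - y0) / dt  # degrees per second
        if velocity > threshold_deg_per_s:
            if not in_saccade:
                saccades += 1
            in_saccade = True
        else:
            in_saccade = False
    return saccades


# Hypothetical 100 Hz recording: three fixations separated by two rapid jumps.
GAZE = [
    (0.00, 0.00, 0.0),
    (0.01, 0.05, 0.0),
    (0.02, 2.05, 0.0),
    (0.03, 2.06, 0.0),
    (0.04, 2.07, 0.0),
    (0.05, 5.00, 1.0),
    (0.06, 5.01, 1.0),
]

if __name__ == "__main__":
    print(count_saccades(GAZE))
```

Summing saccades per task step, normalised by time on step, gives a rough clutter score to compare screens.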

Process Graph Embeddings

- Encode activity sequences as graph embeddings, enabling similarity search across thousands of processes.

- Payoff: Rapidly spot reusable automation patterns—and avoid reinventing the wheel.
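Learned graph embeddings need a trained model, so the sketch below swaps in a much simpler stand‑in to illustrate the similarity‑search idea: each process is reduced to its set of directly‑follows edges and ranked by Jaccard overlap with a query trace. The catalogue entries are hypothetical; an embedding approach would replace `directly_follows` and `jaccard` with learned vectors and a nearest‑neighbour index.

```python
def directly_follows(trace):
    """Set of (a, b) edges where activity b immediately follows activity a."""
    return set(zip(trace, trace[1:]))


def jaccard(a, b):
    """Overlap of two edge sets: |A ∩ B| / |A ∪ B|, or 0.0 when both are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def most_similar(query, catalogue, top_k=3):
    """Rank catalogued processes by directly-follows overlap with a query trace."""
    q = directly_follows(query)
    scored = [(name, jaccard(q, directly_follows(t))) for name, t in catalogue.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:top_k]


# Hypothetical catalogue of previously automated processes.
CATALOGUE = {
    "AP invoice": ["Receive", "Validate", "Match PO", "Approve", "Post"],
    "AR credit memo": ["Receive", "Validate", "Approve", "Post"],
    "Vendor onboarding": ["Request", "KYC Check", "Create Vendor"],
}

if __name__ == "__main__":
    print(most_similar(["Receive", "Validate", "Match PO", "Post"], CATALOGUE))
```

For the sample query, the AP invoice process shares two of five distinct edges and ranks first, pointing to an existing bot worth reusing.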

Automated Narrative Generation

- Large language models convert mined metrics into stakeholder‑friendly prose.

- Benefit: Saves analysts from PowerPoint purgatory and accelerates executive sign‑off.

Exception Heat‑Mapping

- Overlay frequency and business‑value data on variant graphs.

- Result: Visual “red zones” direct the team to the costliest pain points first.
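A heat map is ultimately a ranking by cost exposure. The sketch below multiplies each exception variant's monthly frequency by an assumed cost per case, then tags variants by their share of total exception cost; the variant names, figures, and the 25 % and 7 % zone cut‑offs are all hypothetical.

```python
# Hypothetical exception variants: name -> (cases per month, cost per case).
EXCEPTIONS = {
    "Price mismatch": (400, 10),
    "Missing PO": (150, 30),
    "Tax code error": (90, 8),
    "Duplicate invoice": (30, 20),
}


def heat_map(variants, red=0.25, amber=0.07):
    """Rank variants by monthly cost and tag each by its share of total exception cost."""
    costs = {name: freq * cost for name, (freq, cost) in variants.items()}
    total = sum(costs.values())
    if total == 0:
        return []
    rows = []
    for name, cost in sorted(costs.items(), key=lambda kv: kv[1], reverse=True):
        share = cost / total
        zone = "red" if share >= red else "amber" if share >= amber else "green"
        rows.append((name, cost, zone))
    return rows


if __name__ == "__main__":
    for name, cost, zone in heat_map(EXCEPTIONS):
        print(f"{zone:>5}  {cost:>6}  {name}")
```

Note that the rarer "Missing PO" variant outranks the more frequent "Price mismatch" once value is overlaid, which is the point of combining the two data sets.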

Tooling Landscape: Process Mining vs. Task Mining vs. ABI

| Tooling Layer | Primary Data | Granularity | Ideal Use Case | Popular Vendors |
| --- | --- | --- | --- | --- |
| Process Mining | ERP/CRM event logs | End‑to‑end workflow | Long processes with clear case IDs | Celonis, UiPath, SAP Signavio |
| Task Mining | Desktop interactions | Keystroke/screen | High‑variance, user‑driven tasks | Microsoft Power Automate, UiPath Task Mining |
| Automated Business Insights (ABI) | Unstructured docs, email threads | Semantic/textual | Knowledge‑work processes | Microsoft 365 Copilot, Skan.ai |

Integration Tip: Pipe process‑mining results into task‑mining tooling via APIs to maintain a continuous view from macro flow to micro task.

Data & Metrics: What to Capture and How to Interpret It

For process discovery to deliver actionable insights and set the stage for high‑impact RPA, go beyond surface‑level observation and capture hard data alongside nuanced behavioural indicators. This section outlines the key quantitative metrics and qualitative signals to capture, and how to interpret them, so you can prioritise automation opportunities, anticipate edge cases, and design bots that hold up in real‑world conditions.

Core Quantitative Metrics

- Activity Frequency & Std Dev – Reveals seasonal spikes and helps capacity‑plan bot infrastructure.

- Median vs. 95th‑Percentile Handle Time – Exposes long‑tail delays; bots must be designed for the worst case, not the average.

- Rework Ratio – Cases that revisit a prior step; high ratios often indicate data‑quality issues rather than process flaws.

- Automation Suitability Index (ASI) – Composite score based on rules density, data quality, and system stability. ASI > 70 is a green flag.
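The four core metrics can be computed from an event log with the standard library alone. In the sketch below, the handle times and traces are made up, and the ASI weights (4:3:3 across rules density, data quality, and system stability) are an assumption, since the framework names the inputs but not their weighting. Each sub‑score is assumed to sit on a 0 to 100 scale so the result lines up with the ASI > 70 rule of thumb.

```python
from statistics import median, quantiles


def handle_time_profile(minutes):
    """Return (median, 95th percentile) handle time; needs at least two observations."""
    cuts = quantiles(minutes, n=20, method="inclusive")  # 19 cut points: 5th, 10th, ... 95th
    return median(minutes), cuts[-1]


def rework_ratio(traces):
    """Share of cases where some activity occurs more than once (a revisit of a prior step)."""
    reworked = sum(1 for trace in traces if len(set(trace)) < len(trace))
    return reworked / len(traces)


# Assumed weights: the framework names ASI's inputs but not how they are combined.
ASI_WEIGHTS = {"rules_density": 4, "data_quality": 3, "system_stability": 3}


def automation_suitability_index(scores):
    """Weighted average of 0-100 sub-scores, so the result stays on a 0-100 scale."""
    total_weight = sum(ASI_WEIGHTS.values())
    return sum(w * scores[k] for k, w in ASI_WEIGHTS.items()) / total_weight


if __name__ == "__main__":
    times = [4, 5, 5, 6, 6, 7, 7, 8, 9, 30]  # hypothetical minutes per case
    print(handle_time_profile(times))
    traces = [
        ["Receive", "Validate", "Post"],
        ["Receive", "Validate", "Correct", "Validate", "Post"],
        ["Receive", "Validate", "Post"],
        ["Receive", "Post", "Receive", "Post"],
    ]
    print(rework_ratio(traces))
    asi = automation_suitability_index(
        {"rules_density": 85, "data_quality": 70, "system_stability": 60}
    )
    print(asi, "green flag" if asi > 70 else "review")
```

For these sample values the median handle time is 6.5 minutes while the 95th percentile is roughly 20.6 minutes, the long tail a bot must be designed for; half the traces show rework, and the ASI of 73 just clears the green‑flag bar.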

Qualitative Indicators

- Cognitive Friction – Measured via mouse‑travel heatmaps and the frequency of window switches.

- Decision Latency – Gap between task completion and approval action; signals potential for AI‑assisted judgement.

- Sentiment Drift – Apply NLP to chat/email transcripts; rising frustration may reflect hidden manual pain.
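Cognitive friction and decision latency reduce to simple arithmetic once task‑mining events are exported. The sketch below assumes cursor positions as (x, y) pixel pairs, an ordered list of focused‑window titles, and ISO‑8601 timestamps; these input shapes are hypothetical, since each task‑mining tool exports its own schema. Sentiment drift needs an NLP model and is left out.

```python
import math
from datetime import datetime


def mouse_travel(points):
    """Total cursor distance in pixels across consecutive (x, y) positions."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def window_switches(focus_log):
    """Count changes of focus in an ordered list of window titles."""
    return sum(1 for a, b in zip(focus_log, focus_log[1:]) if a != b)


def decision_latency_hours(completed_at, approved_at):
    """Hours between task completion and the approval action (ISO-8601 timestamps)."""
    gap = datetime.fromisoformat(approved_at) - datetime.fromisoformat(completed_at)
    return gap.total_seconds() / 3600


if __name__ == "__main__":
    print(mouse_travel([(0, 0), (3, 4), (3, 10)]))
    print(window_switches(["SAP", "Excel", "Excel", "SAP", "Outlook"]))
    print(decision_latency_hours("2024-03-01T09:00", "2024-03-01T15:30"))
```

Comparing these per‑step figures across users, rather than reading them in isolation, is what separates a genuinely awkward screen from one slow clerk.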

Governance, Compliance, and Change Management

Process discovery isn’t merely technical—it rewires governance and culture:

- Data Residency & Sovereignty – Ensure task‑mining footage never crosses borders without legal approval.

- Role‑Based Masking – Blur sensitive fields before reviewers watch recordings.

- Audit Chains – Check every discovery artefact into Git or SharePoint and record cryptographic hashes so any tampering is detectable.

- Change Champions – Nominate frontline employees to co‑own discovery insights; peer advocacy outperforms top‑down mandates.

- Training & Upskilling – Offer micro‑modules on reading process‑mining dashboards so business users feel empowered, not replaced.
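The hashes behind the Audit Chains item can be as simple as a hash chain, where each entry's SHA‑256 digest covers both the artefact and the previous digest, so editing any earlier record breaks every later link. Git already chains commits by hash; the sketch below shows the same idea for artefacts stored elsewhere, such as SharePoint. The artefact fields are hypothetical, and a hash chain makes tampering evident rather than impossible.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, artefact):
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    payload = json.dumps(artefact, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def chain_artefacts(artefacts):
    """Link artefacts so each entry's hash covers its content and the previous hash."""
    chain, prev = [], GENESIS
    for artefact in artefacts:
        digest = _digest(prev, artefact)
        chain.append({"artefact": artefact, "prev_hash": prev, "hash": digest})
        prev = digest
    return chain


def verify_chain(chain):
    """Recompute every link; an edited artefact invalidates the chain from that point on."""
    prev = GENESIS
    for entry in chain:
        if entry["prev_hash"] != prev or entry["hash"] != _digest(prev, entry["artefact"]):
            return False
        prev = entry["hash"]
    return True


if __name__ == "__main__":
    chain = chain_artefacts([
        {"name": "as-is model", "version": 1},
        {"name": "variant report", "version": 1},
    ])
    print(verify_chain(chain))           # untouched chain verifies
    chain[0]["artefact"]["version"] = 2  # simulate a silent edit
    print(verify_chain(chain))           # the edit is detected
```

Storing the latest hash somewhere reviewers cannot edit (for example, a signed release note) is what turns detection into an audit control.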

Building a Living Process Knowledge Base

Static Visio diagrams age quickly. Replace them with a living repository:

- Graph Database Backbone – Store mined models with unique IDs and semantic tags.

- API Exposure – Provide REST endpoints so dev teams can fetch the latest task parameters at build time.

- QR‑Enabled SOPs – Embed QR codes on procedure docs that open directly to the current video snippet.

- Automated Drift Alerts – Schedule weekly log samples; trigger Slack alerts when variant frequency shifts >10 %.
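Drift alerts compare variant shares between a baseline and the latest weekly sample. The sketch below assumes the 10 % threshold means a relative change in a variant's share of cases (an absolute, percentage‑point reading is equally plausible) and also flags variants absent from the baseline. Variant names and counts are hypothetical; the returned strings could then be posted to a Slack incoming webhook, which accepts a JSON body with a `text` field.

```python
def variant_shares(counts):
    """Convert raw case counts per variant into shares of the sample."""
    total = sum(counts.values())
    return {variant: n / total for variant, n in counts.items()} if total else {}


def drift_alerts(baseline, current, threshold=0.10):
    """Flag new variants and variants whose share changed by more than `threshold` (relative)."""
    base, cur = variant_shares(baseline), variant_shares(current)
    alerts = []
    for variant in sorted(set(base) | set(cur)):
        b, c = base.get(variant, 0.0), cur.get(variant, 0.0)
        if b == 0.0:
            if c > 0.0:
                alerts.append(f"New variant {variant}: {c:.0%} of cases")
        elif abs(c - b) / b > threshold:
            alerts.append(f"Variant {variant} share moved {b:.0%} -> {c:.0%}")
    return alerts


if __name__ == "__main__":
    last_week = {"Standard path": 80, "Manual override": 20}
    this_week = {"Standard path": 70, "Manual override": 25, "Skip approval": 5}
    for line in drift_alerts(last_week, this_week):
        print(line)
```

A relative threshold is sensitive for rare variants (a move from 2 % to 3 % triggers it), so some teams pair it with a minimum case count before alerting.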

Long‑term vision: Treat discovery output as code—versioned, testable, and continuously integrated with the automation pipeline.

One‑Paragraph Spotlight: How Auxiliobits Applies These Practices

At Auxiliobits, we automate around your existing ERP rather than rip and replace. Our consultants feed SAP or Oracle event logs into an Azure‑native process‑mining stack, enrich noisy Citrix screens with DRUID AI OCR, and push variant insights into a Git‑versioned knowledge base. The result: bots that launch with < 1 % exception rate and save clients an average of 4,200 FTE hours annually—without altering a single line of core‑system code.

Key Takeaways & Next Steps

- Start With Data, Not Interviews – Let logs tell the truth.

- Blend Mining Layers – Process + task + ABI yields 360‑degree visibility.

- Quantify With ASI – Prioritise tasks scientifically, not politically.

- Govern from Day 1 – Embed privacy and audit controls inside discovery artefacts.

- Keep It Alive – Refresh models quarterly to match reality.

Immediate Action Plan

- Export three months of event logs from your highest‑volume process.

- Load into a free trial of any major process‑mining tool.

- Invite key SMEs to a one‑hour “model reveal” workshop.

- Draft your first discovery dossier and share it with the CIO within a week.

Frequently Asked Questions (FAQs)

Q1: How long does end‑to‑end discovery take?

Four to six weeks for a medium‑complexity finance process (50k cases/year). Rushing inflates build costs by 3–5× later.

Q2: Do I need specialised software?

Yes. A single Excel worksheet tops out at 1,048,576 rows and cannot render process‑flow heatmaps, so spreadsheets buckle under logs with millions of events. Licensing costs are trivial compared with automating the wrong workflow.

Q3: How do I handle processes that span systems with no shared case ID?

Use fuzzy correlation—string similarity on references, temporal windows, or middleware GUID stamping.
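A minimal sketch of fuzzy correlation, combining string similarity on references with a temporal window. It uses Python's `difflib.SequenceMatcher` ratio as the similarity measure; the 0.85 threshold, the 24‑hour window, and the record layout are assumptions to tune against a labelled sample. The nested loop compares every pair, so production volumes would need blocking (for example, by date or amount) first.

```python
from datetime import datetime, timedelta
from difflib import SequenceMatcher


def correlate(left, right, min_ratio=0.85, window=timedelta(hours=24)):
    """Link records from two systems that share no case ID.

    left, right: lists of (record_id, reference_text, iso_timestamp).
    A candidate must fall inside `window` of the left record's timestamp and reach
    `min_ratio` reference similarity; the most similar candidate wins.
    """
    matches = {}
    for left_id, left_ref, left_ts in left:
        t = datetime.fromisoformat(left_ts)
        best_id, best_ratio = None, min_ratio
        for right_id, right_ref, right_ts in right:
            if abs(datetime.fromisoformat(right_ts) - t) > window:
                continue  # temporal window: too far apart to be the same case
            ratio = SequenceMatcher(None, left_ref.lower(), right_ref.lower()).ratio()
            if ratio >= best_ratio:
                best_id, best_ratio = right_id, ratio
        if best_id is not None:
            matches[left_id] = best_id
    return matches


# Hypothetical ERP invoices and bank payments with free-text references.
INVOICES = [
    ("INV-1", "INV-2024-00017 ACME", "2024-05-02T10:00"),
    ("INV-2", "INV-2024-00018 Globex", "2024-05-02T11:00"),
]
PAYMENTS = [
    ("PAY-9", "inv 2024 00017 acme", "2024-05-02T16:00"),
    ("PAY-7", "INV-2024-00018 Globex", "2024-05-06T09:00"),
]

if __name__ == "__main__":
    print(correlate(INVOICES, PAYMENTS))
    print(correlate(INVOICES, PAYMENTS, window=timedelta(days=7)))
```

Here INV‑1 links to PAY‑9 despite different punctuation and casing, while INV‑2's exact‑match payment is rejected under the default window because it lands almost four days later; widening `window` picks it up, at the cost of more false‑positive candidates.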

Q4: What distinguishes discovery from documentation?

Documentation freezes a “should‑be” flow; discovery continuously surfaces the “is‑now” flow.

Q5: Can discovery itself yield quick wins before automation?

Absolutely. Standardising naming conventions or removing duplicate approvals often cuts cycle time by 10 % even before the first bot is built.

Real‑World Success Metrics

When discovery is executed correctly, numbers speak louder than case studies:

- 98 % of exception paths captured before development, versus ~60 % under interview‑only methods.

- 35 % reduction in bot build effort due to precise requirements.

- 12‑point NPS increase from users whose pain points were exposed and resolved.

- 20 % faster change requests post‑go‑live because the knowledge base already contains variant context.

Next Frontier: Autonomous Process Discovery with GenAI

Large language models (LLMs) can now parse log traces, cluster activities, and draft human‑readable summaries.

How does it work?

- Stream Event Data to an on‑prem LLM fine‑tuned on process graphs.

- Variant Consolidation Suggestions appear live as data arrives.

- Policy Drift Detection triggers Slack alerts when manual overrides spike.

- Interview Scripts auto‑generate based on uncovered gaps, ensuring SMEs address the right anomalies.

Implementation Guardrails

- Human‑in‑the‑Loop – Analysts approve or reject every AI suggestion.

- Prompt Encryption – Sensitive data stays within enterprise boundaries.

- Retraining Cadence – Quarterly fine‑tuning keeps the model aligned with reality.

Early pilots show a 40 % speed‑up in discovery documentation and a 20 % accuracy boost in variant detection. Treat the AI as an analyst amplifier—not a silver bullet—to multiply throughput without sacrificing trust.

Conclusion

Process discovery is the unsung hero of successful RPA. Nail it, and your bots will launch on time, under budget, and with measurable impact. Skip it, and even the flashiest automation platform can’t save you from rework purgatory. Use the best practices above to transform discovery from a checkbox into a strategic moat.

Ready to gain an unfair advantage? Run a discovery sprint next week, share the dossier with your CIO, and watch stakeholder confidence—and automation velocity—skyrocket. Delay costs ROI; start today.