Key Takeaways

- Agentic AI systems must adapt to changing data and environments, ensuring they remain accurate and effective through automated learning processes enabled by MLOps.

- MLOps provides an integrated framework for building, deploying, and maintaining Agentic AI models, automating processes like retraining, validation, and monitoring for optimal performance.

- Without regular monitoring, models can suffer from concept, data, or label drift, leading to inaccurate predictions and poor decisions. This highlights the need for constant vigilance.

- MLOps tools like Evidently AI, Alibi Detect, and Azure Monitor help proactively identify and address model drift, minimizing disruptions and enabling timely updates to the AI system.

- Automated retraining, triggered by performance thresholds or data shifts, allows Agentic AI to stay aligned with evolving patterns and behaviors, reducing manual intervention and optimizing outcomes.

Artificial intelligence has come a long way, from helping customers avoid long waiting lines to enabling them to place orders online. It has worked like magic for companies of all types and helped many industries grow, whether by guiding customers to suitable items or by allowing manufacturers to avoid stockouts.

But a model is only useful while the data it learned from still reflects reality and while it runs smoothly in production. That is why companies need to take model drift detection and continuous learning seriously, and agentic AI raises the stakes further. To stay up to date and function correctly, AI agents must keep learning and refining their knowledge. This is where MLOps, or machine learning operations, comes into play.

MLOps is a rulebook and toolbox for managing the entire lifecycle of artificial intelligence models. From building and training models to deploying, improving, and monitoring them, MLOps plays a central role. For agentic AI, MLOps keeps agents up to the mark and operating reliably. Without MLOps, the models behind AI agents become outdated and tend to make wrong decisions.

What is Agentic AI?

Agentic AI systems are autonomous AI systems that work toward goals. They operate independently, meaning no human needs to direct each step of a task. What sets them apart is that they can:

1. Adapt to different situations.

2. Make data-driven decisions.

3. Learn from interactions.

4. Perceive their environment.

Examples include AI customer service bots, autonomous vehicles, and intelligent automation agents in business processes.

Why is Continuous Learning Crucial for Agentic AI?

Agentic AI systems are designed to function autonomously, making decisions, performing tasks, and learning from their surroundings with minimal human involvement. These systems often operate in dynamic, ever-changing environments, like finance, healthcare, e-commerce, or logistics, where new data and situations emerge regularly. In such settings, static models quickly become outdated. If an Agentic AI cannot adapt to new information, it may begin to make poor decisions, act on incorrect assumptions, or fail to complete tasks effectively. This is why continuous learning is not just beneficial but essential for these systems to remain valuable and accurate over time.

One of the main advantages of continuous learning is adaptability. Agentic AI systems with continuous learning can adjust to new patterns, behaviors, or environmental changes in real time. For instance, an AI agent used in fraud detection can recognize and respond to evolving fraud tactics without needing manual reprogramming. This flexibility allows the AI to stay aligned with real-world conditions, making it more resilient and effective.
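To make the idea concrete, here is a minimal sketch of online learning, assuming a simple logistic-regression fraud scorer that takes one gradient step per labeled transaction. The class and parameter names are hypothetical; real fraud systems use richer models and more safeguards, but the mechanism of adapting without reprogramming is the same.

```python
import math
from typing import Sequence


def _sigmoid(z: float) -> float:
    # Numerically stable logistic function (avoids overflow for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class OnlineLogisticRegression:
    """Hypothetical incremental classifier: one SGD step per labeled example."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x: Sequence[float]) -> float:
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return _sigmoid(z)

    def update(self, x: Sequence[float], y: int) -> None:
        # For log-loss, the gradient with respect to z is (p - y).
        error = self.predict_proba(x) - y
        self.weights = [w - self.learning_rate * error * xi
                        for w, xi in zip(self.weights, x)]
        self.bias -= self.learning_rate * error
```

Because every labeled example moves the weights slightly, a feature that starts to signal fraud gains weight as those examples arrive. The flip side is that the model also learns from mislabeled or manipulated data, which is why validation and evaluation stages matter.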

Another key benefit is improved accuracy. AI models often rely on historical data to make predictions, but if the model is not updated with the latest information, its performance can degrade. Continuous learning allows the AI to incorporate recent data and correct for drift, reducing errors and maintaining high-quality outcomes.

Continuous learning also enhances scalability. As systems grow—whether in terms of users, tasks, or data sources—continuous learning ensures that the AI can handle the increased complexity without needing complete retraining from scratch. This makes it easier to scale intelligent systems across multiple environments or departments.

Finally, continuous learning reduces the need for manual intervention. Traditional machine learning models require data scientists or engineers to retrain them periodically. With continuous learning, much of this process becomes automated: the AI can monitor itself, detect when its performance drops, and update itself accordingly. This saves time and resources and shortens the feedback loop, allowing quicker improvements and more reliable performance.

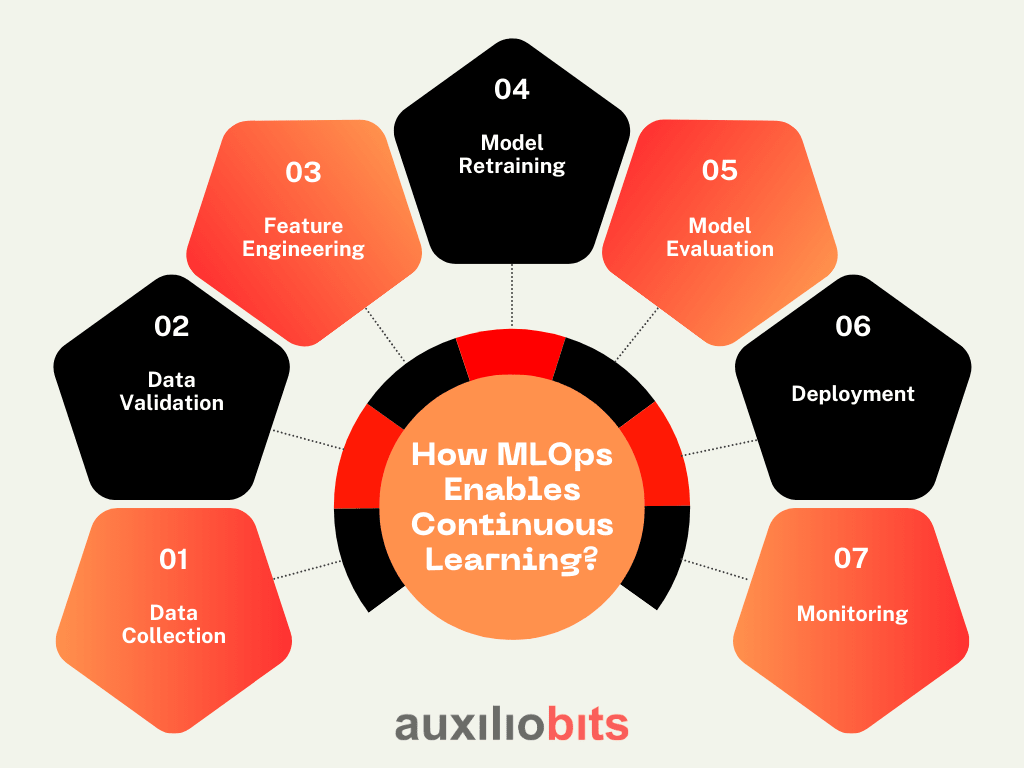

How MLOps Enables Continuous Learning

MLOps, or machine learning operations, enables continuous learning for Agentic AI systems. These systems must constantly adapt to new information, behaviors, and environmental patterns. To do this effectively and reliably, they require well-structured processes that can automate the learning and improvement of their underlying models. This is where MLOps comes in, providing a framework to streamline and automate the entire machine learning lifecycle, from data collection to deployment and monitoring.

A typical continuous learning pipeline powered by MLOps consists of several automated stages:

1. Data Collection:

The process begins with collecting new data from the AI agent’s interactions with its environment, often in real time. This might include user behavior, sensor inputs, transaction records, or conversational logs. This new data is essential for keeping the model relevant and aligned with changing conditions.
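A collection step can be as simple as logging each interaction and handing records off in batches. The sketch below assumes a hypothetical record layout (`InteractionRecord`) and batch size; in production this role is often played by a message queue or a logging pipeline.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class InteractionRecord:
    """One hypothetical interaction captured from the agent's environment."""
    features: Dict[str, Any]
    prediction: Any
    outcome: Any = None  # ground truth may arrive later, or never
    timestamp: float = field(default_factory=time.time)


class InteractionBuffer:
    """Collects records and hands them off in fixed-size batches."""

    def __init__(self, batch_size: int = 1000):
        self.batch_size = batch_size
        self._records: List[InteractionRecord] = []

    def log(self, record: InteractionRecord) -> List[InteractionRecord]:
        """Store a record; return a full batch when one is ready, else []."""
        self._records.append(record)
        if len(self._records) >= self.batch_size:
            batch, self._records = self._records, []
            return batch
        return []
```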

2. Data Validation:

Once the data is collected, it must be validated to ensure quality. This includes checking for missing values, outliers, corrupted entries, and inconsistent formats. Unreliable data can harm the model’s performance, so automated validation steps catch these issues early and keep bad data out of the training process.
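As an illustration, the following sketch checks each record against a hypothetical schema of expected types and plausible ranges, and sets aside failing records together with the reasons they were rejected. The field names and limits are assumptions for the example.

```python
import math
from typing import Any, Dict, List, Tuple

# Hypothetical schema: field name -> (expected type, min, max).
SCHEMA = {
    "amount": (float, 0.0, 50_000.0),
    "items": (int, 1, 500),
}


def validate_record(record: Dict[str, Any]) -> List[str]:
    """Return the problems found in one record (an empty list means valid)."""
    problems = []
    for name, (expected_type, low, high) in SCHEMA.items():
        value = record.get(name)
        if value is None:
            problems.append(f"{name}: missing")
        elif not isinstance(value, expected_type):
            problems.append(f"{name}: expected {expected_type.__name__}")
        elif isinstance(value, float) and math.isnan(value):
            problems.append(f"{name}: NaN")
        elif not low <= value <= high:
            problems.append(f"{name}: out of range")
    return problems


def split_valid(records: List[Dict[str, Any]]) -> Tuple[list, list]:
    """Separate clean records from rejected ones (kept with their reasons)."""
    clean, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            clean.append(record)
    return clean, rejected
```

Keeping rejected records with their reasons, rather than silently dropping them, makes it possible to spot an upstream change (for example, a new data format) that is itself a sign of drift.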

3. Feature Engineering:

As the nature of data changes, features—i.e., the inputs used by the model—may also need updating. MLOps pipelines can automatically engineer or re-engineer features to better capture patterns in the new data, improving the model’s ability to learn and generalize.
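One common form of re-engineering is refitting feature transforms on the latest data window. The sketch below assumes a hypothetical transaction with one numeric column (`amount`) and one categorical column (`channel`): scaling statistics are recomputed, and newly seen categories get their own one-hot column.

```python
import statistics
from typing import List, Sequence


class FeatureTransformer:
    """Hypothetical transformer that is re-fit on each new data window."""

    def fit(self, amounts: Sequence[float], channels: Sequence[str]) -> "FeatureTransformer":
        # Numeric column: standardize with the current window's statistics.
        self.mean = statistics.fmean(amounts)
        self.std = statistics.pstdev(amounts) or 1.0  # avoid division by zero
        # Categorical column: one-hot over the categories seen in this window,
        # so a new value (e.g. a new sales channel) becomes its own feature.
        self.categories = sorted(set(channels))
        return self

    def transform(self, amount: float, channel: str) -> List[float]:
        scaled = (amount - self.mean) / self.std
        one_hot = [1.0 if channel == c else 0.0 for c in self.categories]
        return [scaled] + one_hot
```

A new category changes the length of the feature vector, so a refit transformer has to be paired with a retrained model, and the two should be versioned together.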

4. Model Retraining:

In this stage, the model is retrained using old and new data. Retraining ensures the model learns from recent trends while retaining valuable past knowledge. MLOps automates this process, triggering retraining when specific conditions are met, such as performance degradation or a particular volume of new data.
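The two trigger conditions and the mix of old and new data can be sketched as follows. The thresholds (a five-point accuracy drop, 10,000 new samples) and the sampling ratio are illustrative assumptions, not recommended values.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def should_retrain(current_accuracy: float, baseline_accuracy: float,
                   new_samples: int, max_drop: float = 0.05,
                   min_new_samples: int = 10_000) -> bool:
    """Hypothetical trigger: retrain on performance degradation OR enough new data."""
    degraded = (baseline_accuracy - current_accuracy) > max_drop
    return degraded or new_samples >= min_new_samples


def build_training_set(historical: Sequence[T], recent: Sequence[T],
                       historical_per_recent: float = 0.5,
                       seed: int = 0) -> List[T]:
    """All recent examples plus a random sample of historical ones.

    The recent window teaches current trends; the historical sample helps the
    model keep useful past knowledge instead of fitting only the latest data.
    """
    rng = random.Random(seed)
    k = min(len(historical), int(len(recent) * historical_per_recent))
    return list(recent) + rng.sample(list(historical), k)
```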

5. Model Evaluation:

After retraining, the model undergoes evaluation to verify its accuracy and performance. MLOps systems compare the new model against the previous version to ensure improvement. If the updated model performs better, it moves to the next stage.
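A minimal champion/challenger check might look like this, assuming models are plain callables and both are scored on the same held-out set. The `min_gain` margin is a hypothetical safeguard so that a noise-level difference does not trigger a deployment.

```python
from typing import Callable, Sequence, Tuple


def accuracy(model: Callable, data: Sequence[Tuple[object, int]]) -> float:
    """Fraction of (input, label) pairs the model predicts correctly."""
    correct = sum(1 for x, y in data if model(x) == y)
    return correct / len(data)


def promote_challenger(champion: Callable, challenger: Callable,
                       holdout: Sequence[Tuple[object, int]],
                       min_gain: float = 0.01) -> bool:
    """Promote the retrained model only if it beats the current one on the
    same holdout set by at least `min_gain`."""
    return accuracy(challenger, holdout) >= accuracy(champion, holdout) + min_gain
```

Scoring both models on the same data matters: comparing a new model's score on fresh data with an old score on different data can make drift look like a model improvement or regression.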

6. Deployment:

The validated model is then automatically deployed to production. MLOps enables seamless rollout without disrupting ongoing operations, sometimes using strategies like canary or blue-green deployments for added safety.
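For a canary rollout, one common approach is to route a small, stable fraction of traffic to the new model. This sketch hashes a request or user ID into 100 buckets; the function name, version labels, and percentages are assumptions for illustration.

```python
import hashlib


def route_request(request_id: str, canary_percent: int) -> str:
    """Return which model version should serve this request.

    Hashing the ID (rather than picking randomly per request) keeps routing
    deterministic, so a given user consistently hits the same version.
    "candidate" and "stable" are hypothetical version labels.
    """
    bucket = int(hashlib.sha256(request_id.encode("utf-8")).hexdigest(), 16) % 100
    return "candidate" if bucket < canary_percent else "stable"
```

Gradually raising `canary_percent` (for example 1, 10, 50, 100) while watching the monitoring metrics gives a controlled rollout, and setting it back to 0 acts as an immediate rollback.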

7. Monitoring:

The model is continuously monitored post-deployment to track key metrics such as accuracy, response time, and error rates. MLOps tools alert teams to potential issues like model drift, triggering the cycle to restart when necessary.
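A monitoring check over a rolling window could look like the sketch below. It assumes each prediction's correctness eventually becomes known; in many domains ground truth arrives late or not at all, and input-distribution tests are used instead. The window size and thresholds are hypothetical.

```python
from collections import deque
from typing import List


class RollingMonitor:
    """Track recent error rate and latency; report which thresholds are breached."""

    def __init__(self, window: int = 500, max_error_rate: float = 0.1,
                 max_p95_latency_ms: float = 200.0):
        self.errors = deque(maxlen=window)     # 1 = wrong prediction, 0 = right
        self.latencies = deque(maxlen=window)  # response time per request, in ms
        self.max_error_rate = max_error_rate
        self.max_p95_latency_ms = max_p95_latency_ms

    def record(self, correct: bool, latency_ms: float) -> None:
        self.errors.append(0 if correct else 1)
        self.latencies.append(latency_ms)

    def alerts(self) -> List[str]:
        if not self.errors:
            return []
        found = []
        error_rate = sum(self.errors) / len(self.errors)
        if error_rate > self.max_error_rate:
            found.append(f"error rate {error_rate:.2f} above {self.max_error_rate}")
        ordered = sorted(self.latencies)
        # Approximate 95th percentile (nearest-rank style).
        p95 = ordered[min(len(ordered) - 1, int(0.95 * len(ordered)))]
        if p95 > self.max_p95_latency_ms:
            found.append(f"p95 latency {p95:.0f} ms above {self.max_p95_latency_ms} ms")
        return found
```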

By automating these steps, MLOps ensures that Agentic AI systems can continuously learn, evolve, and perform reliably with minimal human oversight.

Model Drift: The Hidden Threat

Model drift is a silent but serious challenge in machine learning, especially for Agentic AI systems that rely on accurate, real-time decision-making. It occurs when a model’s performance declines over time, not because of bugs or errors in the system, but because the data it was trained on no longer reflects the current reality. This misalignment can lead to wrong predictions, poor decisions, and a loss of trust in AI systems.

There are several types of model drift. One common type is concept drift, which happens when the relationship between the input data and the expected output changes. For example, an AI model that detects fraudulent transactions might become less effective if fraud tactics evolve. The same inputs might no longer signal fraud, or new patterns may emerge that the model doesn’t recognize.

Another type is data drift, where the distribution or characteristics of the input data change. For instance, if a chatbot is trained on formal English but starts receiving more slang or regional phrases, its understanding may weaken, even if the task remains the same.

Label drift occurs when the meaning or distribution of output labels changes. This might happen in customer sentiment analysis, where phrases once considered negative may now read as neutral or positive because of cultural shifts or new usage patterns.
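Shifts in the label distribution can be quantified with the Population Stability Index (PSI), swapped in here as one common choice of measure. The sketch compares class proportions in a reference window and a recent window. It detects changes in how often each label occurs, not changes in what a label means, which still call for reviewing freshly labeled examples. A frequently quoted rule of thumb treats PSI above about 0.1 as a moderate shift and above 0.25 as a major one, but those cutoffs are conventions rather than guarantees.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def label_psi(reference: Sequence[Hashable], current: Sequence[Hashable],
              eps: float = 1e-4) -> float:
    """Population Stability Index between two label distributions.

    PSI = sum over labels of (actual - expected) * ln(actual / expected).
    `eps` floors proportions so a label absent from one window
    does not cause a division by zero or log(0).
    """
    ref_counts, cur_counts = Counter(reference), Counter(current)
    psi = 0.0
    for label in set(ref_counts) | set(cur_counts):
        expected = max(ref_counts[label] / len(reference), eps)
        actual = max(cur_counts[label] / len(current), eps)
        psi += (actual - expected) * math.log(actual / expected)
    return psi
```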

Many factors can cause model drift. Seasonal changes may influence shopping behavior, affecting recommendation systems. User behavior changes can alter how people interact with websites or apps. Market trends may shift what customers value or expect. Even system updates, such as a new app layout, can change how users interact with the platform.

Identifying and addressing model drift early is critical. Continuous monitoring and retraining through MLOps help detect these shifts and update models accordingly, ensuring Agentic AI remains accurate, relevant, and effective.

Detecting and Managing Model Drift with MLOps

In the lifecycle of machine learning models, managing model drift is one of the most critical tasks, especially for Agentic AI systems that operate autonomously in dynamic environments. Drift can reduce accuracy, cause faulty decision-making, and degrade user experiences. MLOps plays a vital role in detecting and managing model drift by providing the infrastructure and tools to continuously monitor, analyze, and adapt models.

One of the core strategies in MLOps is monitoring key performance metrics. By constantly tracking metrics such as accuracy, precision, recall, F1-score, and prediction confidence, teams can identify when a model starts underperforming. Sudden drops or gradual declines in these metrics may signal that model drift is occurring. This real-time monitoring helps catch issues early before they affect business outcomes.
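The metrics themselves are straightforward to compute from labeled predictions. This sketch assumes binary labels (1 = positive class) and flags any metric that falls more than a hypothetical tolerance below a stored baseline.

```python
from typing import Dict, List, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


def degraded_metrics(current: Dict[str, float], baseline: Dict[str, float],
                     tolerance: float = 0.05) -> List[str]:
    """Names of metrics that fell more than `tolerance` below the baseline."""
    return [name for name, value in current.items()
            if baseline[name] - value > tolerance]
```

Tracking several metrics side by side helps because they can move differently: in a fraud setting, accuracy may barely change while recall on the rare positive class drops sharply.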

Another critical approach involves statistical tests to detect changes in the data distribution. For example, the Kolmogorov-Smirnov test can compare the distribution of incoming live data with historical training data. If a significant difference is found, it indicates that the model is encountering unfamiliar data and may no longer be valid. These tests can be run on input features, output predictions, or both, offering a robust way to spot drift even before performance metrics drop.
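Here is a from-scratch sketch of the two-sample Kolmogorov-Smirnov test for a single numeric feature. In practice a library routine such as `scipy.stats.ks_2samp` is the usual choice; this version uses the standard large-sample critical value, so it is an approximation best suited to reasonably large samples. The function names and default significance level are assumptions.

```python
import bisect
import math
from typing import Sequence


def ks_statistic(reference: Sequence[float], live: Sequence[float]) -> float:
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(live)
    d = 0.0
    for x in ref + cur:  # the maximum gap occurs at one of the observed points
        cdf_ref = bisect.bisect_right(ref, x) / len(ref)
        cdf_cur = bisect.bisect_right(cur, x) / len(cur)
        d = max(d, abs(cdf_ref - cdf_cur))
    return d


def drift_detected(reference: Sequence[float], live: Sequence[float],
                   alpha: float = 0.05) -> bool:
    """Reject 'same distribution' when D exceeds the large-sample critical value
    c(alpha) * sqrt((n + m) / (n * m)), with c(alpha) = sqrt(-ln(alpha / 2) / 2)."""
    n, m = len(reference), len(live)
    critical = math.sqrt(-math.log(alpha / 2) / 2) * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, live) > critical
```

With many features and frequent checks, some tests will fire by chance, so teams often adjust the significance level for multiple comparisons or require drift to persist across several windows before acting.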

MLOps also supports drift detection tools designed specifically for this purpose. Tools like Evidently AI, Alibi Detect, and Azure Monitor provide visualizations, automated alerts, and statistical analyses to help detect concept and data drift. These tools can be integrated into the MLOps pipeline to create a proactive monitoring system that responds to changes as they happen.

Once drift is detected, MLOps enables automated retraining. Based on predefined thresholds or rules, the pipeline can trigger retraining workflows automatically. This includes gathering fresh data, validating it, retraining the model, evaluating the new version, and deploying it without human intervention. Automated retraining ensures that models align with current data patterns and user behavior.
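The trigger-and-run logic can be sketched as a short loop over the stages. The stage names mirror the workflow described here; the callables, drift score, and threshold are hypothetical stand-ins for whatever a real pipeline (for example, an orchestrator's job graph) would provide.

```python
from typing import Callable, Dict, List

STAGES = ("gather", "validate", "retrain", "evaluate", "deploy")


def run_retraining_cycle(drift_score: float, drift_threshold: float,
                         steps: Dict[str, Callable[[], bool]]) -> List[str]:
    """Run the retraining workflow if drift exceeds the threshold.

    `steps` maps each stage name to a hypothetical callable that returns
    True to continue or False to stop the cycle. Returns the stages that
    ran, which is useful for logging and auditing.
    """
    if drift_score <= drift_threshold:
        return []
    ran = []
    for stage in STAGES:
        ran.append(stage)
        if not steps[stage]():
            break  # e.g. validation failed or the new model did not win
    return ran
```

Stopping at the first failed stage is the key design choice: a failed validation or a losing evaluation leaves the current production model in place instead of deploying a worse one.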

Final Words

Agentic AI has the potential to revolutionize how we automate and scale intelligent tasks. However, these systems can fail silently without continuous learning and effective drift detection. MLOps offers a robust framework to keep Agentic AI systems reliable, adaptive, and high-performing.

By investing in MLOps best practices, organizations can ensure that their AI agents continue to learn, grow, and deliver value over time.