Key Takeaways

- Explainable AI helps detect hidden bias in credit models, supporting fair treatment across demographic groups and reducing discrimination in financial decision-making.

- Laws like ECOA demand clear explanations for credit denials; explainable AI enables institutions to provide specific, legally compliant reasoning.

- When people understand why they were approved or denied, they’re more likely to trust the system and engage positively with financial institutions.

- Techniques like SHAP and LIME make complex models transparent, helping stakeholders understand, validate, and improve AI-driven credit decisions.

- Using ethical data, testing for fairness, choosing suitable explainability methods, and keeping humans in the loop help keep AI systems fair, accurate, and explainable.

With so many people applying for loans and credit cards, financial institutions are using artificial intelligence to decide who should be approved. These AI systems analyze applicants’ data to assess whether they qualify for a loan and to predict whether they are likely to repay on time. This allows lenders to make sound decisions quickly.

Nevertheless, many AI models are considered black boxes: they produce results without explaining how or why they reached a specific decision. This makes it hard to tell whether a decision was appropriate, and customers may be confused if they do not know why their loan was not approved. This is why explainable AI (XAI) is so important. It opens up the black box by stating clear reasons behind each decision, making the outcome easier for everyone to understand. In this way, financial institutions can build trust with their customers.


What is Credit Risk Assessment?

Credit risk assessment is the process lenders use to determine how likely someone is to repay the borrowed money. This could be a person asking for a credit card or a business applying for a loan. The goal is to ensure that the lender does not lose money by lending to someone who cannot repay.

In the past, this process was relatively simple. Lenders made their decision based on a few key factors. They checked the person’s credit score, which shows how well they have managed loans and credit in the past. They also examined the person’s income to see if they earned enough money to repay the loan. Employment history helped show how stable their job was. Past loan behavior, such as whether they missed payments, was also an important factor.

Today, things have changed. With the rise of AI (Artificial Intelligence) and machine learning, lenders use many more data types to make decisions. They may look at a person’s spending habits, such as how much they spend each month and what they buy. Some lenders even use digital footprints, like online activity or social media behavior, to better understand a person’s habits and financial behavior.

These new AI models are powerful. They can quickly process vast amounts of data and find patterns that humans might miss. This helps lenders make faster and often more accurate decisions, and it allows them to process more loan applications in less time.

But there is a downside. These models are often very complex. Even the experts who build them may not fully understand how every decision is made. This makes them hard to explain and sometimes hard to trust. If a person is denied a loan, they might not be told why. This can feel unfair and confusing.

That’s why transparency in credit risk assessment is becoming more critical. People want to know how decisions are made and whether they are treated fairly. Lenders also need to follow rules and be able to prove that their systems are not biased or discriminatory.

As AI grows in finance, making clear and understandable credit decisions is key to keeping the process fair and responsible.

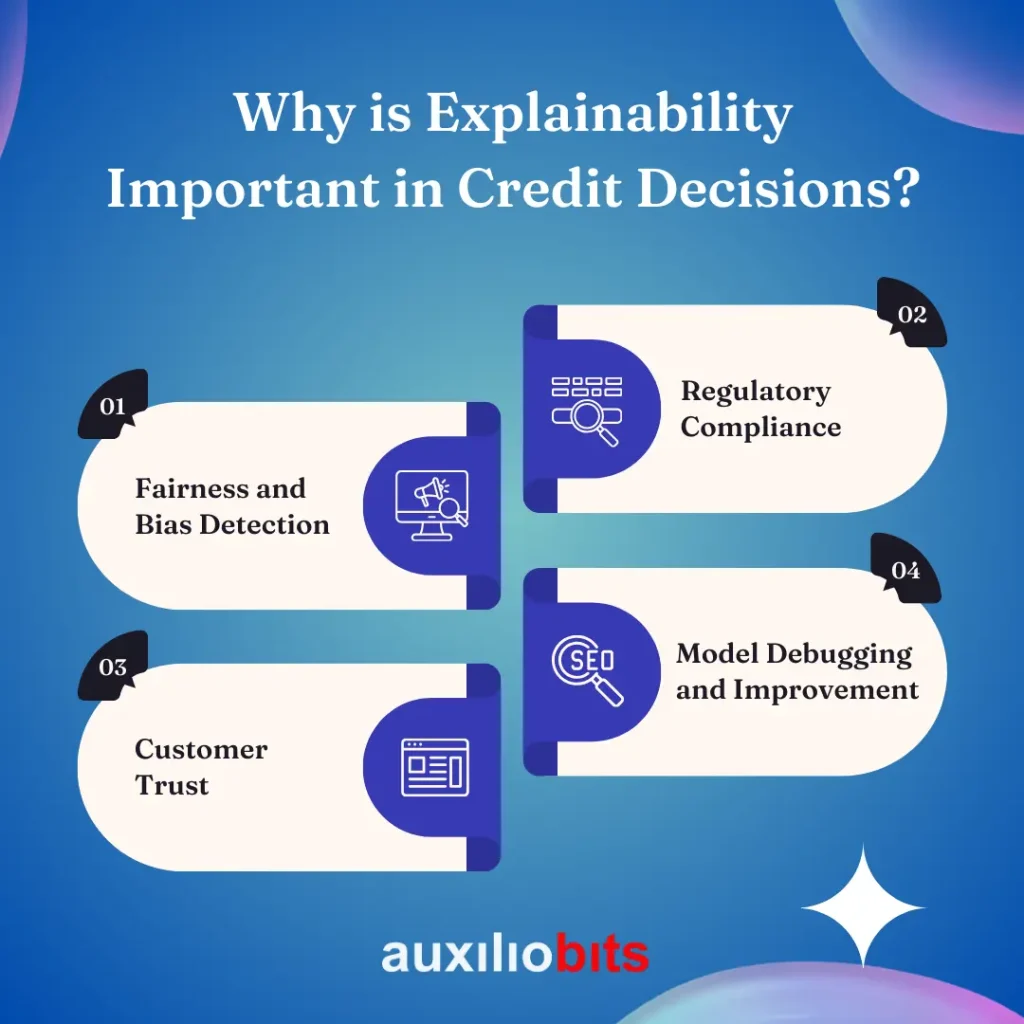

Why is Explainability Important in Credit Decisions?

1. Fairness and Bias Detection

AI models learn from data. If the data used to train a model contains bias, the model can learn and repeat those biases. For example, if a dataset includes historical decisions that were unfair to specific groups, such as people of a certain race, ethnicity, or gender, or residents of certain zip codes, the AI model might also make unfair decisions. These biases are not always obvious, especially in complex models with many inputs and layers.

Explainability helps uncover these hidden patterns. By showing why a model made a specific prediction, XAI tools can highlight potential discrimination. This allows data scientists to check whether the model relies on sensitive or inappropriate features. If it does, the model can be retrained or adjusted to make it fairer. Without explainability, these issues could go unnoticed and continue to harm people unfairly.
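As a rough illustration of how explanations can surface this kind of reliance, the sketch below assumes you already have per-applicant feature attributions (for example, SHAP values computed elsewhere) and flags any feature your team treats as sensitive or as a likely proxy when it ranks among the most influential. The function name, the `top_n` cutoff, and the example numbers are all hypothetical.

```python
from typing import Dict, List, Set


def flag_sensitive_reliance(
    attributions: List[Dict[str, float]],
    sensitive: Set[str],
    top_n: int = 3,
) -> List[str]:
    """Return sensitive features ranked among the top_n by mean absolute attribution.

    `attributions` holds one dict per applicant mapping feature -> contribution
    (hypothetical input, e.g. per-applicant SHAP values computed elsewhere).
    """
    features = list(attributions[0])
    mean_abs = {
        f: sum(abs(a[f]) for a in attributions) / len(attributions) for f in features
    }
    # Rank features by how strongly, on average, they move individual predictions.
    ranked = sorted(features, key=lambda f: mean_abs[f], reverse=True)
    return [f for f in ranked[:top_n] if f in sensitive]


# Made-up attributions for two applicants, for illustration only.
example = [
    {"income": -0.8, "zip_code": 0.6, "credit_score": 0.5, "age": 0.05},
    {"income": 0.7, "zip_code": -0.5, "credit_score": -0.4, "age": -0.02},
]
flags = flag_sensitive_reliance(example, sensitive={"zip_code", "age"})
```

Here `flags` would contain `"zip_code"`. A flag is a prompt for investigation, not proof of discrimination, but it points the team to exactly the feature worth examining before retraining.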

2. Regulatory Compliance

In many countries, credit decisions must follow strict legal rules. In the United States, for example, the Equal Credit Opportunity Act (ECOA) requires lenders to give clear and specific reasons when someone is denied credit. A vague statement such as “your credit score is too low” is not enough, especially when the decision was based on many complex factors.

XAI helps financial institutions meet these regulatory requirements. It allows them to trace and explain which factors influenced their decision and how much each mattered. This transparency is essential for meeting legal standards and avoiding lawsuits, penalties, and damage to reputation.
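One common way to trace which factors mattered is to measure each input’s contribution relative to a reference applicant and report the inputs that lowered the score the most. The sketch below assumes a simple linear points-based scorecard; the weights, the reference profile, and the reason wording are hypothetical, and this is an illustration of the idea rather than a legal template for adverse action notices.

```python
from typing import Dict, List

# Hypothetical linear scorecard: points per unit of each input.
WEIGHTS: Dict[str, float] = {
    "credit_score": 0.05,
    "monthly_income": 0.002,
    "debt_to_income": -40.0,
    "missed_payments": -15.0,
}
# Hypothetical reference applicant against which contributions are measured.
BASELINE: Dict[str, float] = {
    "credit_score": 700,
    "monthly_income": 4000,
    "debt_to_income": 0.30,
    "missed_payments": 0,
}
# Hypothetical plain-language reason text for each input.
REASONS: Dict[str, str] = {
    "credit_score": "Credit score lower than the reference profile",
    "monthly_income": "Income insufficient for the amount requested",
    "debt_to_income": "Debt obligations too high relative to income",
    "missed_payments": "Recent history of missed payments",
}


def principal_reasons(applicant: Dict[str, float], top_k: int = 2) -> List[str]:
    """Return reason text for the top_k inputs that lowered the score the most."""
    # Each input's contribution relative to the reference applicant.
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name]) for name in WEIGHTS
    }
    # Keep only inputs that pushed the score down, most negative first.
    negatives = sorted((c, name) for name, c in contributions.items() if c < 0)
    return [REASONS[name] for _, name in negatives[:top_k]]


applicant = {
    "credit_score": 640,
    "monthly_income": 3500,
    "debt_to_income": 0.55,
    "missed_payments": 2,
}
reasons = principal_reasons(applicant)
```

For this made-up applicant, missed payments (−30 points) and debt-to-income (−10 points) outweigh the credit score (−3) and income (−1), so those two become the stated reasons.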

3. Customer Trust

People want to know why they were approved or denied credit. If they receive a clear and fair explanation, they are more likely to accept the decision, even if it is not in their favor. But if the decision seems random or unclear, it can lead to confusion, frustration, and distrust.

Explainable AI builds customer confidence. It shows that decisions are made using fair and logical rules, not hidden or mysterious processes. This is especially important when customers challenge decisions or ask for reviews. When they see the reasoning, they may also understand what they can do to improve their creditworthiness in the future.

4. Model Debugging and Improvement

AI models are not perfect. They can behave in unexpected ways, especially when working with large and changing datasets. Without explainability, it can be hard for data scientists and risk managers to understand what went wrong or how to fix it.

Explainability tools help by showing which features the model focused on and how those features led to the outcome. This helps teams debug problems, improve model accuracy, and reduce risks. It also supports better collaboration between technical and business teams since everyone understands the model’s behavior more clearly.
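One simple, model-agnostic way to see which features a model actually relies on is permutation feature importance: shuffle one feature’s values and measure how much accuracy drops. This technique is swapped in here as an illustration; the model, data, and feature names below are hypothetical.

```python
import random
from typing import Callable, Dict, List

Row = Dict[str, float]


def model(row: Row) -> int:
    """Hypothetical stand-in for a trained model (1 = approve).

    It approves when debt-to-income is below 0.4 and there are no missed
    payments; it never looks at zip_digit.
    """
    return int(row["debt_to_income"] < 0.4 and row["missed_payments"] == 0)


def accuracy(predict: Callable[[Row], int], rows: List[Row], labels: List[int]) -> float:
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(
    predict: Callable[[Row], int],
    rows: List[Row],
    labels: List[int],
    n_repeats: int = 30,
    seed: int = 0,
) -> Dict[str, float]:
    """Average drop in accuracy when each feature's column is shuffled."""
    rng = random.Random(seed)
    base = accuracy(predict, rows, labels)
    importances: Dict[str, float] = {}
    for feature in rows[0]:
        drops = []
        for _ in range(n_repeats):
            column = [r[feature] for r in rows]
            rng.shuffle(column)  # break the link between this feature and the label
            shuffled = [{**r, feature: v} for r, v in zip(rows, column)]
            drops.append(base - accuracy(predict, shuffled, labels))
        importances[feature] = sum(drops) / n_repeats
    return importances


# Made-up validation data for illustration.
rows = [
    {"debt_to_income": 0.20, "missed_payments": 0, "zip_digit": 1},
    {"debt_to_income": 0.35, "missed_payments": 0, "zip_digit": 2},
    {"debt_to_income": 0.25, "missed_payments": 1, "zip_digit": 3},
    {"debt_to_income": 0.50, "missed_payments": 0, "zip_digit": 1},
    {"debt_to_income": 0.60, "missed_payments": 2, "zip_digit": 2},
    {"debt_to_income": 0.30, "missed_payments": 0, "zip_digit": 3},
]
labels = [1, 1, 0, 0, 0, 1]
importances = permutation_importance(model, rows, labels)
```

Because the model ignores `zip_digit`, shuffling it never changes a prediction and its importance is exactly zero, while the two features the model uses show a positive drop. An unexpected non-zero value for a feature the team believed was unused is a useful debugging signal.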

Balancing Performance and Transparency

Here’s the challenge: the most accurate AI models are often the least explainable.

1. Complex models like deep neural networks or gradient boosting machines (e.g., XGBoost) are powerful but difficult to interpret.

2. Simple models like decision trees or logistic regression are easy to understand but might be less accurate.

So, how do we get the best of both worlds?

Several approaches to explainable AI (XAI) are used in credit risk assessment to make AI decisions more transparent. These methods help explain how models work and why a specific decision was made. The two main approaches are using interpretable models and applying post-hoc explainability techniques.

1. Use of Interpretable Models

One of the simplest ways to ensure explainability is to use interpretable models. These models are easy to understand and explain without extra tools. Examples include:

- Logistic Regression: This model predicts the probability of repayment based on features like income or credit score. Each feature has a weight that shows its impact.

- Decision Trees: These models follow a simple tree structure, asking yes/no questions at each step, making the decision path easy to trace.

- Rule-Based Systems: These use “if-then” rules to decide, such as “If credit score > 700 and income > $3000, approve loan.”

While these models are transparent, they may not capture complex patterns in large datasets as well as more advanced AI models can.
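To show why these models explain themselves, here is a minimal sketch of a logistic regression scorer alongside the “if-then” rule from the list above. The coefficients and the use of standardized inputs are hypothetical; a real model would learn its weights from historical repayment data.

```python
import math
from typing import Dict

# Hypothetical coefficients on standardized inputs (z-scores).
INTERCEPT = 0.5
COEFFICIENTS: Dict[str, float] = {
    "credit_score_z": 1.2,
    "income_z": 0.8,
    "debt_to_income_z": -1.5,
}


def repayment_probability(x: Dict[str, float]) -> float:
    """Logistic regression: sigmoid of a weighted sum of the inputs."""
    log_odds = INTERCEPT + sum(w * x[name] for name, w in COEFFICIENTS.items())
    return 1.0 / (1.0 + math.exp(-log_odds))


def log_odds_contributions(x: Dict[str, float]) -> Dict[str, float]:
    """Each input's additive effect on the log-odds is simply weight * value."""
    return {name: w * x[name] for name, w in COEFFICIENTS.items()}


def rule_approves(credit_score: float, monthly_income: float) -> bool:
    """The example rule from the text: approve if score > 700 and income > $3000."""
    return credit_score > 700 and monthly_income > 3000


applicant = {"credit_score_z": 1.0, "income_z": -0.5, "debt_to_income_z": 2.0}
p = repayment_probability(applicant)
parts = log_odds_contributions(applicant)
```

Because the log-odds is a plain sum, the intercept plus the per-feature contributions (+1.2, −0.4, −3.0 here) reproduce the model’s output exactly, so the high debt-to-income ratio is visibly what drags this applicant’s probability down.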

2. Post-Hoc Explainability Techniques

When lenders use more complex models like neural networks or ensemble methods for better accuracy, they often add explainability after the model is built. Key techniques include:

- LIME (Local Interpretable Model-Agnostic Explanations): LIME builds simple models around individual predictions. It shows which features had the most influence on that specific decision.

- SHAP (SHapley Additive exPlanations): SHAP assigns a value to each feature showing how much it contributed to a prediction. It can explain both individual and overall model behavior.

- Counterfactual Explanations: These show what changes would lead to a different result. For example: “If your monthly income were $500 higher, your loan would be approved.”

- Feature Importance Plots: These plots highlight which features most influenced decisions across many predictions, helping stakeholders understand model behavior at a high level.

Together, these tools make complex models more transparent and easier to trust.
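To make the idea behind SHAP concrete, the sketch below computes exact Shapley values by brute force for a tiny hypothetical score. This is not how the SHAP library works internally (it uses faster estimators and typically averages over a background dataset); here, features “left out” of a coalition are simply set to a single baseline point, which is a simplifying assumption.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet

Point = Dict[str, float]


def shapley_values(model: Callable[[Point], float], x: Point, baseline: Point) -> Dict[str, float]:
    """Exact Shapley values by enumerating every coalition of features.

    Features outside a coalition take their baseline value (an assumption).
    The cost grows as 2**n, so this only suits a handful of features.
    """
    features = list(x)
    n = len(features)

    def value(coalition: FrozenSet[str]) -> float:
        point = {f: x[f] if f in coalition else baseline[f] for f in features}
        return model(point)

    phi: Dict[str, float] = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude f.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


def toy_score(p: Point) -> float:
    # Hypothetical score with an interaction term between the two inputs.
    return 2.0 * p["income"] + 3.0 * p["history"] + p["income"] * p["history"]


applicant = {"income": 1.0, "history": 1.0}
reference = {"income": 0.0, "history": 0.0}
phi = shapley_values(toy_score, applicant, reference)
```

The result is 2.5 for `income` and 3.5 for `history`: the interaction term is split evenly, and the two values add up to the gap between the applicant’s score and the baseline score (6.0), which is the additivity property SHAP explanations rely on.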

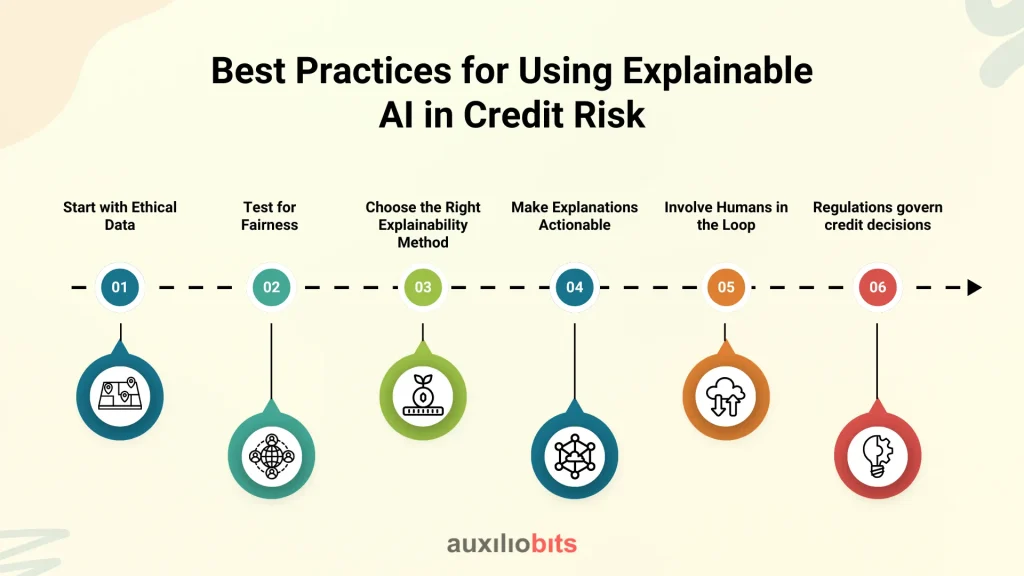

Best Practices for Using Explainable AI in Credit Risk

As AI becomes more central to credit risk assessment, it is essential not just to make accurate predictions but to make them fairly, transparently, and responsibly. Explainable AI (XAI) plays a key role in achieving this, but it must be implemented thoughtfully to be effective. Here are some best practices for using XAI in credit risk decisions:

1. Start with Ethical Data

Explainability starts with the data. AI models learn from historical data, and if that data is biased, the model will be biased too. It is essential to use clean, well-prepared data that is as free of bias as possible. This means removing or correcting data that reflects past discrimination or unfair practices. For example, if previous lending decisions unfairly favored certain groups, the model may learn that bias. Starting with ethical data provides a fair foundation for the AI system.

2. Test for Fairness

After building the model, regularly test it for fairness. This includes checking how the model performs for different groups based on race, gender, age, or income level. Are certain groups more likely to be denied even when they have similar financial profiles? These audits help uncover hidden bias and confirm that decisions are fair across all segments. Tools and metrics like disparate impact analysis can support this effort.
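A basic disparate impact check compares each group’s approval rate with a reference group’s rate. In the sketch below, the group labels and decisions are made up, and the 0.8 cutoff is the “four-fifths” rule of thumb from US employment-selection guidelines, borrowed here only as an illustrative screening threshold. Note that this simple ratio does not control for applicants’ financial profiles, so it answers a coarser question than the one posed above.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

FOUR_FIFTHS = 0.8  # rule-of-thumb threshold, used here only as a screening flag


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approvals: Dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def disparate_impact(decisions: Iterable[Tuple[str, bool]], reference_group: str) -> Dict[str, float]:
    """Each group's approval rate divided by the reference group's rate."""
    rates = approval_rates(decisions)
    ref = rates[reference_group]
    if ref == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return {g: r / ref for g, r in rates.items() if g != reference_group}


def flagged_groups(ratios: Dict[str, float], threshold: float = FOUR_FIFTHS) -> List[str]:
    return sorted(g for g, r in ratios.items() if r < threshold)


# Made-up decisions: group A approved 6 of 10, group B approved 3 of 10.
decisions = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7
ratios = disparate_impact(decisions, reference_group="A")
```

Group B’s ratio is 0.3 / 0.6 = 0.5, well below 0.8, so `flagged_groups(ratios)` returns `["B"]` and the audit would dig further, for example by comparing applicants with similar incomes and credit histories.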

3. Choose the Right Explainability Method

Not every situation requires the same approach. When possible, use interpretable models like decision trees or logistic regression, especially for high-stakes decisions. For more complex models, apply post-hoc explainability tools like SHAP or LIME to provide clear and accurate explanations. Choosing the right method helps balance accuracy and transparency.
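The core idea behind LIME can be sketched from scratch: perturb the input around one applicant, weight each perturbed sample by how close it is, and fit a weighted linear model whose slopes act as the local explanation. This is a simplified, standard-library-only sketch; the `lime` package itself adds feature discretization, feature selection, and a regularized fit, none of which are reproduced here. The `black_box` function and all parameter values are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the small linear system a @ w = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def local_surrogate(
    predict: Callable[[Sequence[float]], float],
    x: Sequence[float],
    n_samples: int = 500,
    scale: float = 1.0,
    kernel_width: float = 1.0,
    seed: int = 0,
) -> Tuple[float, List[float]]:
    """Fit a proximity-weighted linear model to `predict` around the point x.

    Returns (intercept, slopes); the slopes approximate each feature's local effect.
    """
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the weighted normal equations (X^T W X) w = X^T W y,
    # where each design row is [1, z_1, ..., z_d].
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]  # perturb around x
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)  # closer samples count more
        row = [1.0] + z
        y = predict(z)
        for i in range(d + 1):
            xty[i] += weight * row[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * row[i] * row[j]
    coef = solve(xtx, xty)
    return coef[0], coef[1:]


def black_box(z: Sequence[float]) -> float:
    # Hypothetical nonlinear model returning a probability-like score.
    return 1.0 / (1.0 + math.exp(-(1.5 * z[0] - 2.0 * z[1] + 0.5 * z[0] * z[1])))


intercept, slopes = local_surrogate(black_box, [0.2, -0.1], scale=0.3)
```

Near this applicant, the surrogate’s first slope comes out positive and its second negative, which is the kind of “this feature pushed the score up, that one pushed it down” summary LIME reports. As a sanity check, when the black box is itself linear, the surrogate recovers its coefficients.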

4. Make Explanations Actionable

Explanations should tell users what happened and guide them on how to improve. For example, telling someone “your loan was denied because of low income” should also come with a suggestion like “increasing your income by $500 per month could change the result.” Actionable insights help customers make informed decisions and improve their financial outcomes.
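A suggestion like “$500 more per month” is a counterfactual explanation. The sketch below searches a single feature, monthly income, in fixed steps until a hypothetical logistic model crosses the approval threshold, holding everything else fixed. The model coefficients, the step size, and the search limit are assumptions; practical counterfactual methods search over several features and restrict themselves to changes a customer can realistically make.

```python
import math
from typing import Dict, Optional

Applicant = Dict[str, float]


def approval_probability(a: Applicant) -> float:
    """Hypothetical logistic model; the coefficients are illustrative only."""
    log_odds = (
        -8.0
        + 0.002 * a["monthly_income"]
        - 5.0 * a["debt_to_income"]
        + 0.004 * a["credit_score"]
    )
    return 1.0 / (1.0 + math.exp(-log_odds))


def income_counterfactual(
    applicant: Applicant,
    threshold: float = 0.5,
    step: float = 100.0,
    max_increase: float = 5000.0,
) -> Optional[float]:
    """Smallest monthly-income increase (a multiple of step) that reaches the
    approval threshold while every other input stays fixed; None if no
    increase up to max_increase is enough."""
    if approval_probability(applicant) >= threshold:
        return 0.0  # already approved, no change needed
    increase = step
    while increase <= max_increase:
        candidate = {**applicant, "monthly_income": applicant["monthly_income"] + increase}
        if approval_probability(candidate) >= threshold:
            return increase
        increase += step
    return None


applicant = {"monthly_income": 3000.0, "debt_to_income": 0.30, "credit_score": 650.0}
needed = income_counterfactual(applicant)  # 500.0 under these made-up coefficients
```

Returning `None` when no income change is enough matters: in that case the honest, actionable message points to a different factor (such as existing debt) rather than to income.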

5. Involve Humans in the Loop

AI should support human judgment, not replace it. For borderline or complex cases, it is best to combine AI recommendations with human expertise. Loan officers or credit analysts can review the AI’s decisions and provide additional context, especially when the AI’s prediction is unclear or questionable.
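One simple way to put this into practice is to route applications by the model’s estimated probability of default: clear-cut cases are handled automatically, and the borderline band goes to a person. The thresholds and route names below are hypothetical; in practice they would be set by the lender’s credit policy.

```python
def route_application(
    p_default: float,
    approve_below: float = 0.10,
    decline_above: float = 0.40,
) -> str:
    """Route an application based on a model's estimated probability of default.

    Clear-cut cases are handled automatically; the borderline band between
    the two thresholds is sent to a loan officer or credit analyst.
    """
    if not 0.0 <= p_default <= 1.0:
        raise ValueError("p_default must be a probability between 0 and 1")
    if p_default < approve_below:
        return "auto_approve"
    if p_default > decline_above:
        return "decline_with_reasons"
    return "human_review"
```

Widening the band between the two thresholds sends more cases to reviewers, trading throughput for human oversight.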

6. Ensure Regulatory Compliance

Regulations govern credit decisions, so make sure all explanations are understandable, legally compliant, and well-documented. This protects both the customer and the organization and builds long-term trust in AI-powered systems.

Following these best practices helps ensure that explainable AI in credit risk is used ethically, fairly, and effectively.

Conclusion

Explainable AI reshapes credit risk assessment by making advanced models more transparent and fair. Balancing performance with clarity presents challenges, but the ethical and practical benefits make it a worthy pursuit for any financial institution.

We can create more innovative, intelligent, and human-centered systems by focusing on explainability.