Key Takeaways

- Training your AI on your sector’s workflows, language, and policies ensures context-aware assistance that is accurate, efficient, and aligned with your business’s goals and customer expectations.

- Without domain adaptation and security controls, AI models may leak sensitive data, fabricate facts, or violate regulations like HIPAA or GDPR, putting trust, reputation, and legality at risk.

- Retrieval-Augmented Generation (RAG) keeps sensitive data outside the model weights while enabling real-time access to trusted knowledge, improving safety, flexibility, and regulatory alignment.

- Use vector stores like Pinecone or FAISS with document embeddings to allow precise, secure, and real-time knowledge retrieval, powering accurate, dynamic agent responses without retraining.

- Setting clear agent roles, constraints, and examples through prompts improves consistency, reduces hallucinations, and ensures your AI responds professionally and only from authorized knowledge sources.

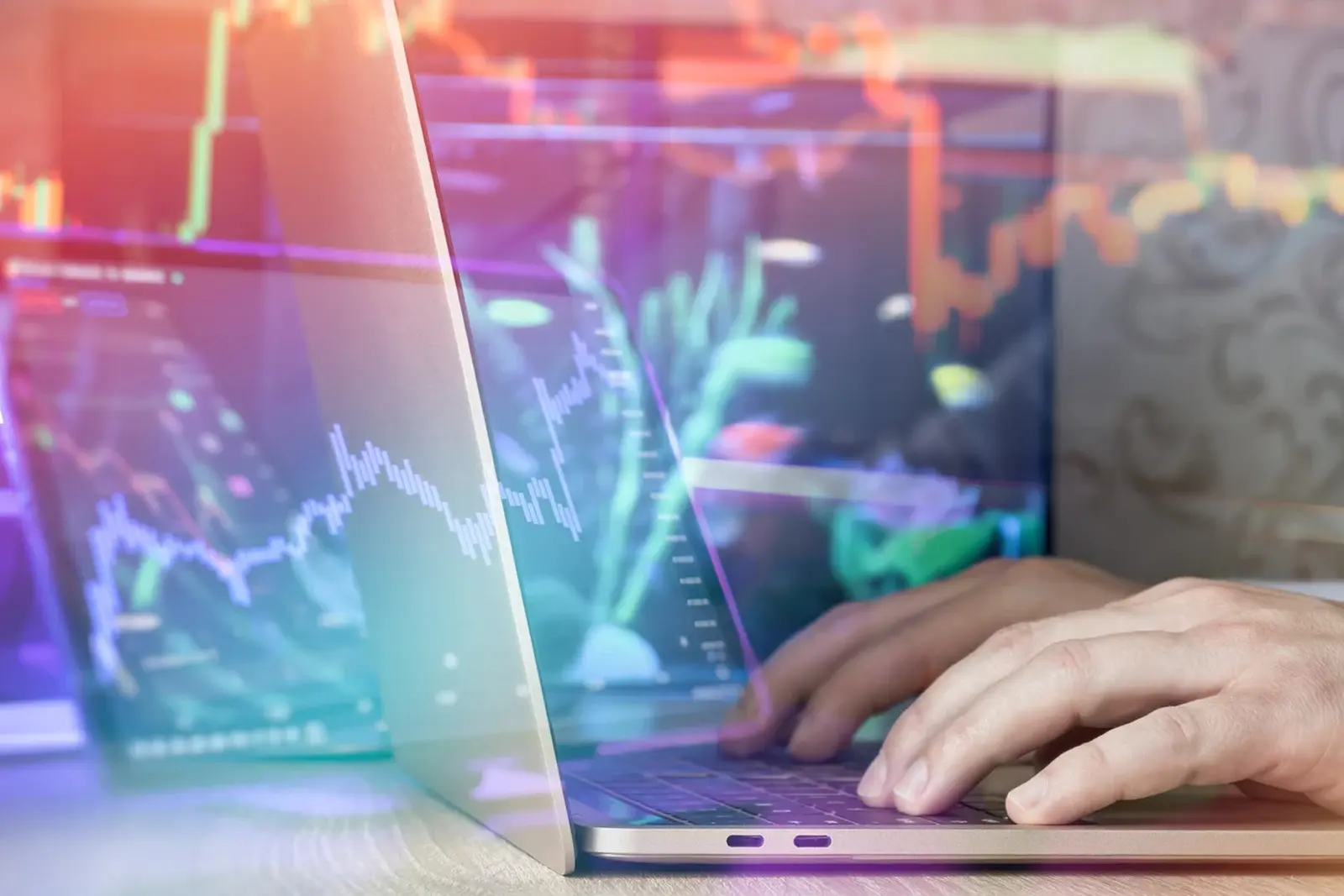

AI chat agents have gained immense popularity in how firms interact with their users. This has helped them provide smart, real-time assistance to several sectors. From virtual medical assistants in healthcare that help patients schedule appointments to customer service bots in financial services that respond to queries, AI chat agents are evolving into indispensable tools. They are best known for growing their skills to understand domain-specific knowledge, internal workflows, and customer behavior patterns. As firms have continued to utilize these agents often, domain-relevant data becomes paramount. Nevertheless, this comes with a significant challenge: teaching an AI chat agent about a particular sector’s procedure and data without hampering performance and security.

Feeding sensitive information to AI models—especially those deployed in cloud or hybrid environments—poses significant risks. Proprietary business data, personally identifiable information (PII), and compliance-sensitive content cannot be handled carelessly. Moreover, poorly designed training pipelines can result in degraded model performance, hallucinations, or the leakage of private data through unintended outputs.

To address these challenges, organizations must adopt strategies that prioritize secure fine-tuning or retrieval-augmented generation (RAG), strict data governance, robust access control, and model monitoring. The goal is to strike a balance between enabling the AI agent to deliver accurate, helpful, and context-aware responses while ensuring compliance with data protection laws and preserving the integrity of business-critical information.

This blog will explore the best practices for safely training AI chat agents on domain-specific knowledge. We’ll look at different approaches—from secure embedding-based retrieval systems to guardrail-enforced prompting—and provide practical tips that help ensure your agent is intelligent and trustworthy. The principles apply universally in finance, healthcare, retail, or manufacturing.

Also read: Explainable AI in Credit Risk Assessment: Balancing Performance and Transparency

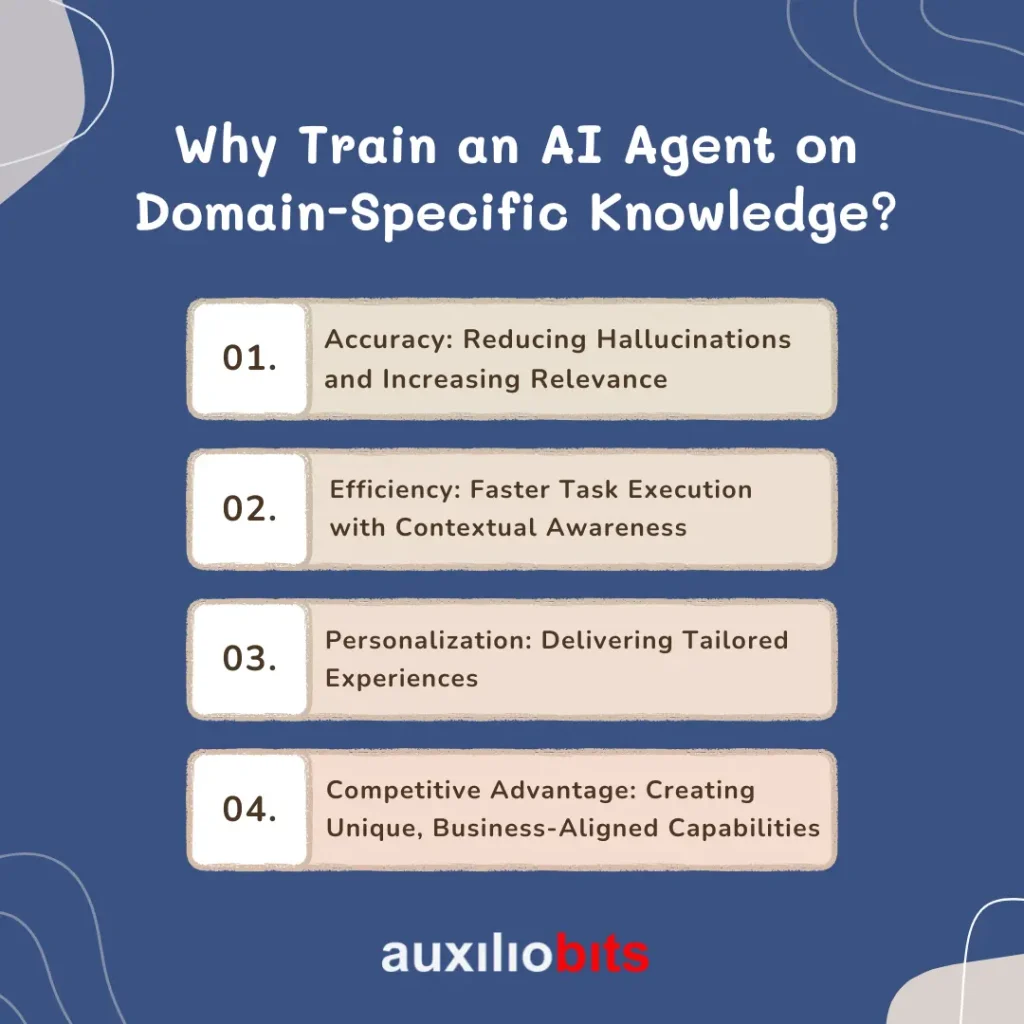

Why Train an AI Agent on Domain-Specific Knowledge?

Generic AI chatbots help answer surface-level queries, such as providing store hours, tracking shipments, or resetting passwords. But in many real-world scenarios, businesses need more than just a helpful assistant—they need a domain expert. Whether offering tailored financial advice, guiding a patient through pre-operative instructions, or handling internal queries about HR policies, your AI chat agent must understand the nuanced language, rules, and workflows unique to your industry. This is where domain-specific training becomes essential.

1. Accuracy: Reducing Hallucinations and Increasing Relevance

One of the most critical issues with general-purpose language models is hallucination, when the model generates responses that sound plausible but are factually incorrect. Training your AI agent on domain-specific data dramatically reduces the chances of such errors. When the model is equipped with the correct terminology, standard procedures, and up-to-date compliance requirements relevant to your field, its responses become more grounded in reality and significantly more helpful to users.

2. Efficiency: Faster Task Execution with Contextual Awareness

An AI agent trained in your domain can handle more complex tasks faster and with fewer clarifications. It understands the context, abbreviations, and workflow steps unique to your business. For example, a healthcare AI assistant trained on clinical documentation and hospital protocols can schedule appointments, retrieve patient histories, and explain treatment options precisely, without requiring constant human oversight.

3. Personalization: Delivering Tailored Experiences

Domain-aware agents can adapt responses based on the user’s role, intent, or historical interactions. In a banking scenario, an AI that understands account types, interest rates, and loan application workflows can provide advice tailored to individual customers, enhancing engagement and satisfaction. Personalization at this level is nearly impossible with a one-size-fits-all model.

4. Competitive Advantage: Creating Unique, Business-Aligned Capabilities

Training your AI on proprietary processes, product catalogs, compliance guidelines, and customer service protocols transforms it into a strategic asset. It becomes an extension of your brand voice and an intelligent service interface. This streamlines operations and creates a differentiated user experience that competitors using generic chatbots can’t replicate.

In short, domain-specific training enables your AI agent to go beyond being helpful—it becomes knowledgeable, reliable, and aligned with your business goals. It’s not just an enhancement; it’s a necessity for serious enterprise-grade AI deployment.

Risks of Improper Training

Before exploring how to train AI chat agents safely and effectively, it’s crucial to understand what can go wrong. Improper training of AI agents—especially when dealing with proprietary or sensitive data—can lead to serious technical, ethical, and legal consequences. Without a disciplined approach, organizations risk undermining trust, exposing confidential data, and degrading the quality of user interactions.

1. Data Leaks: Exposing Sensitive or Customer Data

One of the most critical risks during AI training is the unintended exposure of sensitive information. If personal data, proprietary business content, or internal communications are used without proper anonymization or security controls, they could become part of the model’s responses. This is especially dangerous when using models in customer-facing scenarios. Imagine an AI agent inadvertently revealing a client’s private health records or internal strategy documents—such incidents can lead to reputational damage and legal liabilities.

2. Overfitting: A Brittle Model That Can’t Generalize

Overfitting occurs when an AI model is trained too specifically on a narrow dataset, causing it to memorize rather than understand. While domain-specificity is desirable, overfitting makes the agent inflexible and unable to respond to variations or edge cases on which it wasn’t explicitly trained. In practical terms, this might result in the chatbot failing when users phrase questions differently or when a new policy is introduced.

3. Hallucinations: Generating Inaccurate or Fabricated Responses

AI agents rely heavily on the clarity and completeness of their training data. If the domain-specific knowledge is fragmented, outdated, or poorly structured, the model may “hallucinate”—confidently presenting false or misleading information. This is particularly dangerous in regulated industries like finance, healthcare, or law, where factual accuracy is non-negotiable.

4. Bias: Inheriting Unwanted Assumptions from Source Content

If your internal documentation contains biases—intentional or not—your AI agent can amplify them. This could include gendered language, regional favoritism, or cultural insensitivities. In customer service, for example, biased behavior can alienate users or lead to discriminatory outcomes, potentially violating fairness or diversity commitments.

5. Compliance Issues: Violating Regulations like HIPAA or GDPR

Different regions have strict data privacy regulations, and using personal or regulated data without proper handling can lead to legal action. For instance, using health data in AI training without following HIPAA guidelines can result in heavy penalties. Similarly, the GDPR mandates that personal data in AI applications be processed transparently and securely.

Understanding these risks is the first step toward building safe, compliant, and effective AI agents.

Step-by-Step Guide to Train Your AI Chat Agent Safely

While training your AI chat agent, make sure to follow the steps below to ensure safety:

1. Define the Scope and Use Case

- Identify what tasks the agent should handle (e.g., onboarding, technical support, claims processing).

- Define boundaries: What should the agent not do?

- This reduces unnecessary data exposure and makes training more targeted.

2. Choose the Right Architecture

You have two main options:

- Retrieval-Augmented Generation (RAG): The AI pulls knowledge from external sources like databases or documents at runtime.

- Fine-tuning: You modify the AI’s internal model weights with your domain data.

Tip: RAG is safer and more flexible than full model fine-tuning for most enterprise scenarios.

3. Prepare and Sanitize Your Knowledge Base

Before feeding anything into the AI, clean your data:

- Remove personal or confidential information (PII, PHI).

- Standardize formats (e.g., product manuals, policy PDFs).

- Use document chunking for large files (split into 300–1000 token chunks).

- Add metadata tags like department, document type, date, etc.

Tools: LangChain, LlamaIndex, Azure Cognitive Search, Pinecone

4. Use Embeddings and Vector Databases

Store your domain documents as embeddings (numerical representations) in a vector database. During a conversation, the agent fetches relevant content via a similarity search.

Popular tools:

- Vector Stores: FAISS, Pinecone, Weaviate, Azure AI Search

- Embeddings Models: OpenAI, Hugging Face, Azure OpenAI, Cohere

This allows the AI to access your domain content without storing it in its core model.

5. Implement Role-Based Access and Security Layers

Protect your data and user interactions:

- Use Authentication & Authorization to limit access to certain documents.

- Mask or redact sensitive fields in responses.

- Encrypt data at rest and in transit.

- Set up usage monitoring and logging.

Integrate with identity providers like Azure AD, Okta, or Auth0 for enterprise use.

6. Test with Red Teaming and Guardrails

Before going live:

- Perform adversarial testing to check for data leaks, hallucinations, and unsafe behavior.

- Set up guardrails using tools like Microsoft Presidio, Guardrails.ai, or OpenAI’s function calling and safety flags.

- Validate answers with subject-matter experts (SMEs).

7. Continuously Monitor and Improve

AI isn’t “set and forget.” Monitor:

- Accuracy rates

- Escalation volumes

- User feedback

- Failure modes (e.g., unhelpful answers)

Use this feedback loop to refine your content base and retrain as needed.

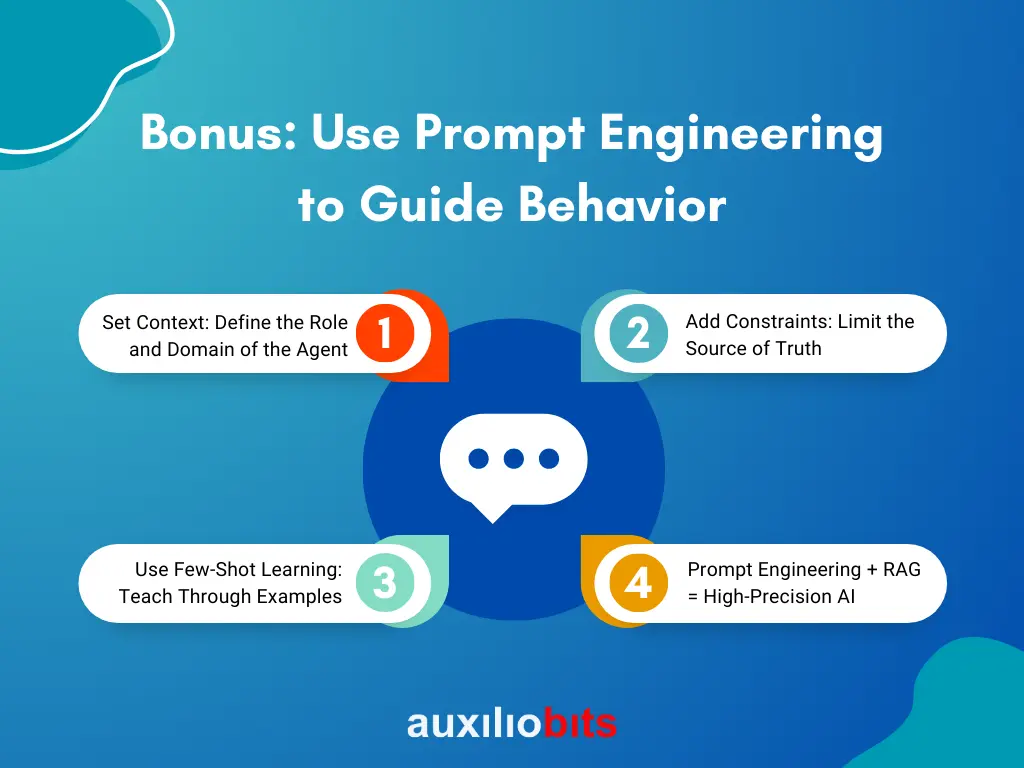

Bonus: Use Prompt Engineering to Guide Behavior

While model training and retrieval-augmented generation (RAG) form the technical backbone of intelligent AI agents, prompt engineering is an equally powerful yet often underutilized tool for guiding agent behavior. Think of prompts as the instructions or cues that tell your AI how to think and what role to play in a given interaction. When designed effectively, prompts can significantly improve the quality, consistency, and safety of AI-generated responses, especially in domain-specific use cases.

1. Set Context: Define the Role and Domain of the Agent

A generic AI model can behave like a jack-of-all-trades, but if you want specialized performance, you must provide clear context.

For example, “You are a compliance assistant for a healthcare provider. Your job is to help staff understand internal policies related to HIPAA regulations.”

You help the model focus its behavior and tone by explicitly assigning a role. This reduces irrelevant outputs and steers the AI to act within the boundaries of the expected domain. Such role-setting is critical to maintaining professionalism and precision in finance, law, or medicine.

2. Add Constraints: Limit the Source of Truth

AI models are probabilistic by nature—they try to predict the most likely next word based on patterns. Without constraints, they might invent information or rely on general internet knowledge, which may be outdated or inaccurate. A simple but powerful prompt constraint might be, “Only answer questions based on official company documentation. If the information is unavailable, respond with ‘I don’t know’.”

This approach limits hallucinations and reinforces data governance policies, making AI more trustworthy in regulated environments. In the context of RAG-based systems, you can even go further by pointing to specific document types or repositories.

3. Use Few-Shot Learning: Teach Through Examples

Few-shot prompting involves giving the model a handful of input-output examples before asking it to complete a new task. This is incredibly effective for fine-tuning response style, formatting, and reasoning.

For instance:

Input: “What should I do if a patient requests their medical records?”

Output: “You should follow HIPAA guidelines and initiate the patient record release form. Contact the compliance officer for assistance.”

Providing two or three examples helps the model internalize your preferred answer structure and accuracy level. This technique is instrumental in customer support, HR, and IT, where consistency matters.

4. Prompt Engineering + RAG = High-Precision AI

When prompt engineering is combined with retrieval-augmented generation (RAG), the benefits compound, RAG systems fetch relevant documents from a knowledge base in real time and feed them into the AI model as part of the prompt.

Your prompt can then say, “Answer using only the retrieved documents. Do not rely on prior knowledge. Summarize only if explicitly asked.”

This ensures responses are grounded in real, authoritative data—whether internal policies, legal texts, or product manuals—while benefiting from the model’s language capabilities.

In short, prompt engineering acts as a behavioral control layer for AI agents. It’s lightweight, flexible, and highly effective in aligning large language models with business needs. When used wisely, it enhances safety, compliance, and user trust without requiring complete model retraining.

Final Thoughts

Training your AI chat agent on domain-specific knowledge safely isn’t just about feeding it documents. It’s about:

- Choosing the right architecture

- Preparing high-quality, sanitized data

- Protecting sensitive information

- Validating outputs

- Improving continuously

When done right, your AI chat agent becomes a trusted domain expert, helping users, employees, and customers with real, contextual value.