Key Takeaways

- Encrypt PHI at rest and in transit to prevent unauthorized access, using strong standards such as AES-256 and TLS 1.2 or later.

- Use access control measures such as role-based permissions and multi-factor authentication to limit PHI access to only those who genuinely need it.

- Minimize data usage by collecting only the PHI you need, retaining it only as long as necessary, and de-identifying it when full details aren’t essential for AI tasks.

- Continuously monitor AI systems handling PHI for unusual behavior, logging all access and changes to detect and respond to potential threats quickly.

- When developing AI agents for healthcare, follow regulatory frameworks like HIPAA and GDPR closely to ensure legal compliance and build patient trust.

Artificial intelligence (AI) agents are becoming valuable in many industries, especially healthcare. They can automate tasks, analyze large amounts of data, and help doctors and staff provide better care. However, when these agents handle Protected Health Information (PHI), that is, sensitive patient data, security becomes a top priority.

In this blog, we will explore how to build AI agents that safely handle PHI, protect patient privacy, and follow important laws.


What Is Protected Health Information (PHI)?

Protected Health Information, commonly called PHI, is any information about a person’s health that can be used to identify them. This information is very private and sensitive because it tells a story about a person’s medical history, treatments, and even payments related to healthcare. Because of its sensitive nature, healthcare providers and anyone else who handles this data must keep PHI safe and confidential.

PHI includes many types of information. Some examples are:

- Name, address, or date of birth: Basic details that can identify a person.

- Medical records and test results: Information about diagnoses, treatments, lab tests, X-rays, or prescriptions.

- Insurance information: Details about a person’s health insurance coverage.

- Billing and payment data: Records related to costs, payments, and claims for medical services.

These details can reveal a lot about a person’s health status and history.

PHI is protected under laws like the Health Insurance Portability and Accountability Act (HIPAA) in the United States. These laws ensure that healthcare providers, hospitals, insurance companies, and other organizations keep this information private and secure. They must follow strict rules about storing, sharing, and accessing PHI.

For example, a doctor’s office must protect patient records so only authorized staff can see them. If PHI is shared, it should only be with people who need the information to provide care or manage payments. It can’t be shared with others without the patient’s permission, except in certain legal situations.

If PHI is lost, stolen, or shared improperly, it can cause serious problems for patients, such as identity theft or discrimination. That’s why protecting PHI is so important.

In healthcare, PHI is everywhere—from paper charts to electronic health records and emails. With modern technology, it’s crucial to use strong security measures like encryption, passwords, and secure networks to keep this information safe.

Why Do Secure AI Agents Matter in Healthcare?

AI agents in healthcare are innovative software programs that can perform tasks automatically. They help with scheduling appointments, managing medical records, assisting in diagnoses, sending reminders, and even supporting billing. These AI agents can save time, reduce errors, and improve patient care. However, when they work with Protected Health Information (PHI), they also carry serious responsibilities.

PHI includes sensitive details like a patient’s name, medical history, test results, and insurance information. If AI agents are not properly secured, this data could be exposed to hackers or unauthorized users. That’s why patient privacy is a top concern. Patients trust healthcare providers to protect their information; any breach can lead to serious consequences.

Strict laws also govern the use of PHI. In the United States, the Health Insurance Portability and Accountability Act (HIPAA) requires healthcare organizations and their technology partners to follow strong security and privacy rules. Failure to meet these requirements can result in heavy fines and damage to the organization’s reputation.

A data breach involving AI can lead to identity theft, fraud, or patient harm. That’s why it is essential to design AI agents that handle PHI securely from the ground up, with privacy and compliance in mind.

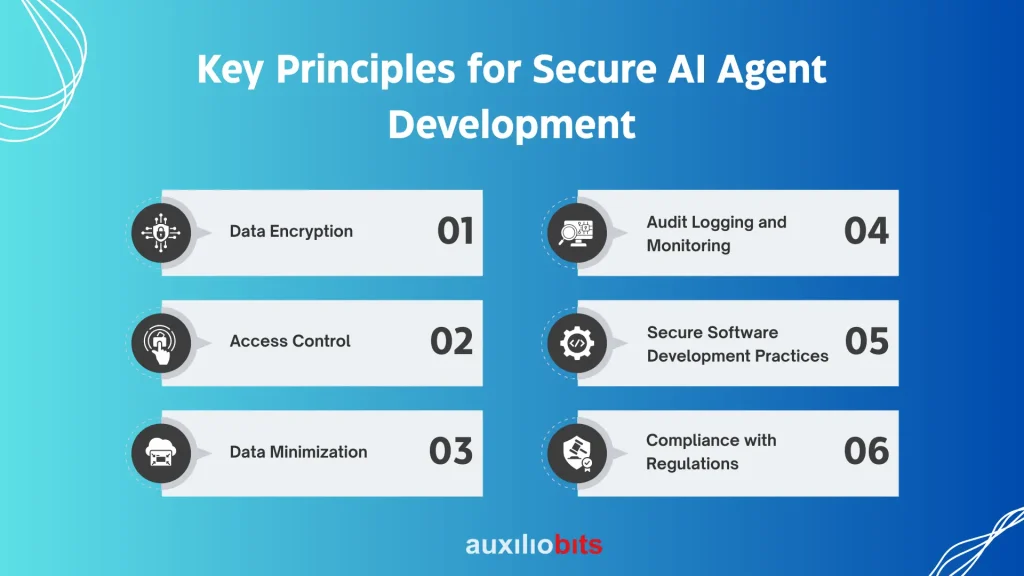

Key Principles for Secure AI Agent Development

Security must be a top priority when building AI agents for healthcare, especially those that handle Protected Health Information (PHI). PHI includes sensitive medical records, patient names, test results, and insurance data. If this data is leaked or accessed by the wrong person, it can lead to serious harm, including identity theft and loss of trust. To keep AI agents secure, developers must follow certain key principles. Let’s break them down simply and clearly.

1. Data Encryption

Encryption is like putting sensitive data in a locked box. Even if someone tries to steal the data, they can’t read it unless they have the key.

- Encrypt PHI at rest: The data is protected when saved on a server, database, or storage device.

- Encrypt PHI in transit: Data is protected while being sent across the internet or internal systems.

- Use strong encryption: AES-256 is a widely trusted standard that makes it hard for hackers to break in.

- Secure communication: When sending data over a network, always use TLS (Transport Layer Security), version 1.2 or later. Its predecessor, SSL (Secure Sockets Layer), is deprecated and should be disabled.

Encryption ensures that even if data is intercepted or stolen, it remains unreadable without the correct decryption key.
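As a minimal sketch of encryption in transit, the Python snippet below uses only the standard `ssl` module to build a client-side TLS context that verifies server certificates and refuses anything older than TLS 1.2. The function name `make_phi_tls_context` is hypothetical. Encryption at rest is not shown here; it is usually handled by the database or storage layer, or by a vetted library such as the `cryptography` package's `AESGCM` class, rather than by hand-written cryptography.

```python
import ssl


def make_phi_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context for sending PHI to another service (sketch)."""
    # create_default_context() loads trusted CA certificates and enables
    # certificate verification plus hostname checking
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse legacy protocol versions (SSL, TLS 1.0, TLS 1.1)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Already the defaults, but stated explicitly so a reviewer can see them
    ctx.verify_mode = ssl.CERT_REQUIRED
    ctx.check_hostname = True
    return ctx
```

The resulting context can be passed to standard clients, for example `urllib.request.urlopen(url, context=ctx)`, where `url` would be your own (hypothetical) EHR endpoint.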

2. Access Control

Only the right people, systems, or AI agents should be allowed to access PHI.

- Authentication: Verify user identity with passwords, multi-factor authentication (MFA), or even biometrics (like fingerprints).

- Role-Based Access Control (RBAC): Give people access based on their job role. For example, a receptionist doesn’t need access to complete medical histories, but a doctor might.

- Least privilege: Each person or system should have access only to the data needed to do their job, nothing more.

Proper access control prevents unauthorized people or AI programs from viewing or modifying sensitive patient data.
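Role-based access control and least privilege can be sketched as a deny-by-default lookup from role to permitted fields. The roles, field names, and the `view_record` helper below are hypothetical examples, not a standard schema. Note that the AI agent itself is given a role, just like a human user.

```python
from typing import Any

# Hypothetical role-to-field policy; a real system would load this from managed config
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "receptionist": frozenset({"name", "phone", "appointment_time"}),
    "billing": frozenset({"name", "insurance_id", "billing_codes"}),
    "physician": frozenset({"name", "phone", "appointment_time",
                            "diagnoses", "lab_results", "medications"}),
    "scheduling_agent": frozenset({"name", "appointment_time"}),  # the AI agent's own role
}


class AccessDenied(Exception):
    pass


def view_record(role: str, record: dict[str, Any], requested: set[str]) -> dict[str, Any]:
    """Return only the requested fields, and only if the role may see all of them."""
    allowed = ROLE_PERMISSIONS.get(role)
    if allowed is None:
        # Unknown roles get nothing: deny by default
        raise AccessDenied(f"unknown role: {role!r}")
    forbidden = requested - allowed
    if forbidden:
        raise AccessDenied(f"role {role!r} may not read {sorted(forbidden)}")
    return {field: record[field] for field in requested if field in record}
```

Failing closed (raising rather than silently dropping forbidden fields) makes over-broad requests visible, which also gives the audit log something concrete to record.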

3. Data Minimization

Don’t collect or store more data than necessary.

- Only use what’s needed: If an AI agent only requires a patient’s age and symptoms to perform a task, don’t collect their full medical history.

- Avoid long-term storage: Don’t keep PHI longer than necessary. Delete it securely when it’s no longer needed.

- Use anonymization: Remove or mask personal identifiers like names or addresses to protect privacy during processing.

Minimizing the amount of PHI being handled reduces the risk of data being lost or compromised.
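Data minimization and masking can be illustrated with a small transformation that keeps only what a hypothetical triage task needs (age and symptoms) and replaces the patient ID with a keyed hash. The function names and record fields are assumptions for illustration. Keyed hashing is pseudonymization, not full anonymization: whoever holds the key can recompute the hash for a known ID and re-link records, so the output should still be treated as sensitive, and the key belongs in a secrets manager rather than in source code.

```python
import hashlib
import hmac
from datetime import date
from typing import Any


def pseudonymize(patient_id: str, key: bytes) -> str:
    """Replace a patient ID with a keyed hash so records can be linked without exposing the ID."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def age_on(dob: date, today: date) -> int:
    """Whole years between date of birth and today."""
    had_birthday = (today.month, today.day) >= (dob.month, dob.day)
    return today.year - dob.year - (0 if had_birthday else 1)


def minimize_for_triage(record: dict[str, Any], key: bytes, today: date) -> dict[str, Any]:
    """Keep only what a hypothetical symptom-triage task needs; drop name, address, full DOB."""
    return {
        "pid": pseudonymize(record["patient_id"], key),
        "age": age_on(record["date_of_birth"], today),
        "symptoms": list(record["symptoms"]),
    }
```

Because the output is built from an explicit allow-list of fields, any new field added to the source record is excluded by default instead of leaking through.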

4. Audit Logging and Monitoring

Keep track of everything the AI agent does with PHI.

- Logging: Record who accessed what data, when, and what actions they took. This is helpful for investigations if something goes wrong.

- Real-time monitoring: Watch the system for strange activity, such as unusual access patterns or attempts to steal data.

- Alerts: Set up alerts for suspicious actions, such as someone trying to access data they shouldn’t.

Logs are critical for accountability and help you quickly detect and respond to security incidents.
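A minimal sketch of structured audit logging with Python's standard `logging` and `json` modules follows. The field names, the `phi.audit` logger name, and the `audit` helper are assumptions. Denied attempts are logged at WARNING level so an alerting rule can match on them, and each entry records a reference to the resource rather than the PHI itself, so the audit trail does not become another copy of sensitive data.

```python
import json
import logging
from datetime import datetime, timezone

audit_logger = logging.getLogger("phi.audit")  # hypothetical logger name


def audit(actor: str, action: str, resource: str, allowed: bool) -> dict:
    """Write one structured audit record and return it."""
    entry = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "actor": actor,        # user or AI agent identity
        "action": action,      # e.g. "read", "update"
        "resource": resource,  # record reference only, never the PHI itself
        "allowed": allowed,
    }
    # Denied attempts are raised to WARNING so alerting can key on the level
    level = logging.INFO if allowed else logging.WARNING
    audit_logger.log(level, json.dumps(entry))
    return entry
```

In production these records would typically be shipped to append-only, access-restricted storage so they cannot be quietly altered after the fact.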

5. Secure Software Development Practices

Build security into the AI agent from day one.

- Secure coding: Use coding practices that avoid common bugs and weaknesses (e.g., never hardcode passwords, and use parameterized queries to prevent SQL injection).

- Code reviews: Have other developers regularly check the code for issues.

- Security testing: Use tools to scan for vulnerabilities and fix them early.

- Update regularly: Patch known software bugs and keep all dependencies current.

Writing secure software is the foundation of protecting PHI handled by AI agents.
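The two secure-coding examples above, avoiding hardcoded passwords and preventing SQL injection, might look like this in Python. This is a sketch: `PHI_DB_PASSWORD` is a hypothetical environment variable name, and `sqlite3` stands in for whatever database driver you actually use. The key point is the `?` placeholder, which passes the value to the driver separately from the SQL text; other drivers may use a different placeholder style, such as `%s`.

```python
import os
import sqlite3


def get_db_password() -> str:
    """Read the credential from the environment (or a secrets manager), never from source code."""
    password = os.environ.get("PHI_DB_PASSWORD")  # hypothetical variable name
    if not password:
        raise RuntimeError("PHI_DB_PASSWORD is not set")
    return password


def find_patient(conn: sqlite3.Connection, mrn: str) -> list[tuple]:
    """Look up a patient by MRN using a parameterized query."""
    # The ? placeholder makes the driver treat mrn strictly as data, never as SQL
    cur = conn.execute("SELECT mrn, name FROM patients WHERE mrn = ?", (mrn,))
    return cur.fetchall()
```

With this pattern, an input such as `' OR '1'='1` is simply compared as a literal MRN and matches nothing, instead of rewriting the query.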

6. Compliance with Regulations

Always follow the privacy laws and regulations for your location and your users.

- HIPAA (USA): Requires administrative, physical, and technical safeguards for protected health information.

- GDPR (EU): Protects personal data and gives individuals control over their data.

- Other local laws: Many countries have their own healthcare data protection rules.

Staying compliant not only protects patients but also protects your organization from legal trouble and fines.

Building secure AI agents in healthcare is not just about innovative technology—it’s about trust, responsibility, and safety. By following these six principles—encryption, access control, data minimization, logging, secure development, and regulatory compliance—you can ensure your AI systems handle PHI securely and ethically.

Steps to Develop a Secure AI Agent for PHI

Developing an AI agent that handles Protected Health Information (PHI) requires careful planning and security at every step. PHI includes sensitive data like patient names, medical records, lab results, and insurance information. If this data is exposed or misused, it can harm patients and lead to legal consequences. Below are simple and essential steps to develop a secure AI agent for healthcare.

Step 1: Define Use Case and PHI Data Involved

Start by clearly identifying what your AI agent is supposed to do. Ask yourself:

- Will it help schedule doctor appointments?

- Will it analyze lab results?

- Will it manage medical billing?

Next, determine what types of PHI the AI agent needs to perform its job. This could include:

- Patient names and contact details

- Medical history and diagnoses

- Lab results and prescriptions

- Insurance or billing information

Knowing which PHI is involved helps you plan how to protect it adequately.
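One lightweight way to record the answers to Step 1 is a declarative inventory that lists, for each use case, the only PHI fields it is approved to use; requests outside that list can then be rejected or flagged for review. The use-case names, fields, and the `undeclared_fields` helper below are hypothetical, mirroring the three example questions above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    phi_fields: frozenset[str]  # the only PHI this use case is approved to touch


# Hypothetical inventory for the three example use cases
INVENTORY: dict[str, UseCase] = {
    "scheduling": UseCase("scheduling", frozenset({"name", "phone", "email"})),
    "lab_analysis": UseCase("lab_analysis", frozenset({"patient_id", "lab_results", "medications"})),
    "billing": UseCase("billing", frozenset({"name", "insurance_id", "billing_codes"})),
}


def undeclared_fields(use_case: str, fields: set[str]) -> set[str]:
    """Return any requested fields that the use case never declared."""
    return set(fields) - INVENTORY[use_case].phi_fields
```

Keeping the inventory as data means it can be reviewed alongside the code and reused later by the access-control and minimization checks.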

Step 2: Design Data Flow with Security in Mind

After defining the use case, map out how data will move through your system. This step is like drawing a map showing the journey of PHI from one point to another.

Ask these questions:

- Where does the data come from?

- Who uses it?

- Where is it stored?

- Where does it go next?

Mark critical points in the data flow where security must be applied. These are moments when data is:

- Sent over the internet

- Stored in databases

- Accessed by the AI agent or other systems

At each of these points, apply encryption, access control, and logging to keep the data safe.
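The data-flow map from Step 2 can also be written down as data and checked automatically. In this sketch, each hop records whether encryption, access control, and logging are in place, and `missing_controls` reports the gaps. The `Hop` structure and the component names in the usage note are hypothetical, and a real architecture may need more controls than these three.

```python
from dataclasses import dataclass

# Maps each Hop attribute to a readable control name for reports
CONTROLS = {"encrypted": "encryption", "access_controlled": "access control", "logged": "logging"}


@dataclass
class Hop:
    source: str
    destination: str
    encrypted: bool
    access_controlled: bool
    logged: bool


def missing_controls(flow: list[Hop]) -> list[str]:
    """List every hop in the PHI data flow that lacks one of the required controls."""
    problems = []
    for hop in flow:
        for attr, label in CONTROLS.items():
            if not getattr(hop, attr):
                problems.append(f"{hop.source} -> {hop.destination}: missing {label}")
    return problems
```

For example, a flow where the hop from a hypothetical "API gateway" to the "AI agent" has no logging would produce the single finding `"API gateway -> AI agent: missing logging"`. Running such a check in CI keeps the map and the controls from drifting apart.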

Step 3: Build Secure APIs and Interfaces

Many AI agents connect to other tools, like electronic health record (EHR) systems or cloud services, usually through APIs (Application Programming Interfaces).

To keep PHI safe:

- Use secure APIs that only work if the request is authenticated.

- Apply authentication methods like API keys or tokens.

- Use TLS encryption to protect data as it travels between systems.

- Validate all data that enters or leaves your system to block harmful inputs.

APIs and user interfaces should be designed so only authorized users and systems can access PHI.
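As a sketch of Step 3, the function below checks a bearer token with a constant-time comparison and then validates the patient identifier against a strict pattern before any PHI is touched. The `MRN-` format, header handling, and function names are assumptions for illustration; production systems would normally rely on an established scheme such as OAuth 2.0 with short-lived tokens rather than a single shared secret.

```python
import hmac
import re

# Hypothetical medical record number format: "MRN-" followed by 6 to 10 digits
MRN_PATTERN = re.compile(r"MRN-\d{6,10}")


class RequestRejected(Exception):
    pass


def authorize_and_validate(headers: dict[str, str], params: dict[str, str],
                           expected_token: str) -> str:
    """Authenticate the caller, then validate the input; return the clean MRN."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    # compare_digest takes time independent of where the inputs differ,
    # which avoids leaking the token through response timing
    if scheme != "Bearer" or not hmac.compare_digest(token.encode(), expected_token.encode()):
        raise RequestRejected("401: invalid or missing token")
    mrn = params.get("mrn", "")
    # fullmatch rejects any extra characters, such as injected SQL or path segments
    if not MRN_PATTERN.fullmatch(mrn):
        raise RequestRejected("400: malformed MRN")
    return mrn
```

Authentication runs before validation so that unauthenticated callers learn nothing about which identifiers are well formed.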

Step 4: Train AI Models Carefully

If your AI agent uses machine learning or natural language processing, be extra careful during training.

- Use de-identified or anonymized data whenever possible. This means removing names, IDs, or anything that could link data back to a person.

- If real PHI must be used, ensure the environment is secure and access is limited.

- Regularly audit your training data and process to ensure no sensitive data leaks.

Also, check that the AI doesn’t unintentionally memorize or repeat sensitive information in its responses.
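For Step 4, a first-pass redaction of training text might replace recognizable identifiers with typed placeholders. The patterns and the `redact` helper below are illustrative assumptions, not a complete de-identification method: HIPAA's Safe Harbor method covers 18 identifier types, and free-text names need more than regular expressions, for example a dedicated de-identification tool plus manual review.

```python
import re

# Illustrative patterns only; real de-identification needs far broader coverage
PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("PHONE", re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")),
    ("MRN", re.compile(r"\bMRN-\d{6,10}\b")),  # hypothetical record-number format
]


def redact(text: str) -> str:
    """Replace matched identifiers with typed placeholders such as [EMAIL]."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `redact("Jane (MRN-0012345, jane.doe@example.com, 555-123-4567, SSN 123-45-6789) reports chest pain.")` returns `"Jane ([MRN], [EMAIL], [PHONE], SSN [SSN]) reports chest pain."`. The name "Jane" survives, which is exactly why pattern-based redaction should be only one layer of protection.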

Step 5: Implement Continuous Monitoring and Updates

Even after the AI agent is built and deployed, the work doesn’t stop.

- Set up real-time monitoring to track who accesses PHI and when.

- Watch for suspicious activity, such as unexpected data access or system behavior.

- Keep all software components up to date with the latest security patches.

- Regularly test your system for vulnerabilities and fix any weaknesses quickly.

This ongoing monitoring helps detect and respond to threats before they cause damage.
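One simple form of the real-time monitoring in Step 5 is a sliding-window check that flags an actor, human or AI agent, who opens more distinct patient records than expected in a short period. The class name, threshold, and window below are hypothetical; real deployments would tune these per role and send alerts to a security monitoring system rather than just returning a boolean.

```python
from collections import defaultdict, deque


class AccessRateMonitor:
    """Flag an actor who reads too many distinct patient records within a time window."""

    def __init__(self, max_records: int, window_seconds: float):
        self.max_records = max_records
        self.window = window_seconds
        # actor -> deque of (timestamp, record_id), oldest first
        self._events: dict[str, deque] = defaultdict(deque)

    def record_access(self, actor: str, record_id: str, ts: float) -> bool:
        """Register one access and return True if the actor now exceeds the threshold."""
        events = self._events[actor]
        events.append((ts, record_id))
        # Drop events that have fallen out of the sliding window
        while events and events[0][0] <= ts - self.window:
            events.popleft()
        distinct = {rid for _, rid in events}
        return len(distinct) > self.max_records
```

For example, with `AccessRateMonitor(max_records=3, window_seconds=60)`, the fourth distinct record an agent opens within one minute returns `True`, while the same four accesses spread 30 seconds apart never do.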

Conclusion

Building AI agents that process Protected Health Information securely is vital to protect patient privacy and comply with healthcare laws. By following best practices like encryption, access control, data minimization, and continuous monitoring, organizations can safely leverage AI to improve healthcare outcomes without risking sensitive data.

Secure AI agent development is not just a technical challenge. It is a responsibility to maintain patients’ trust while providing better, safer healthcare.