Key Takeaways

- LLMs can replace manual chart review by reliably parsing unstructured clinical documents and extracting structured insights.

- Prompt engineering is critical to guide LLMs in extracting clinically valid and codified data.

- Validation pipelines built on ontologies and rules help ensure that data reaching downstream systems is accurate, compliant, and reliable.

- Integration with EHRs enables real-time updates, audit trails, and a seamless feedback loop.

- LLMs are not just extractors—they’re collaborators, capable of powering next-gen AI co-pilots in clinical workflows.

Healthcare produces enormous amounts of clinical data, primarily unstructured, siloed, and hard to leverage. Manual chart review is still the norm in most environments, causing delays, mistakes, and burnout.

Large Language Models (LLMs) such as GPT-4 and domain-specific models (like Med-PaLM and GatorTron) offer a scalable alternative. They apply contextual knowledge and reasoning to clinical narratives, making it possible to extract and validate data from patient charts in near real time. This guide explains how to build a production-ready LLM pipeline for structured, validated chart intelligence.


Why Do LLMs Matter in Patient Data Extraction?

Patient records are filled with rich but unstructured information: SOAP notes, discharge summaries, physician dictations, and scanned forms. Conventional NLP models often miss context or clinical nuance, especially across formats. LLMs, pretrained on vast text corpora and in some cases fine-tuned on clinical data, generalize better and capture more of that context.

Core Capabilities:

1. Automating Clinical Documentation Review

LLMs can analyze and summarize complex clinical narratives, e.g., extracting “Type II Diabetes Mellitus, uncontrolled” from a multi-paragraph assessment and plan.

2. Handling Diverse Unstructured Data Formats

LLMs can read free-text notes, scanned documents (after OCR), and PDFs, and they cope with abbreviations, spelling errors, and nested clinical reasoning.

3. Contextual Understanding of Clinical Language

LLMs grasp implied meaning, e.g., inferring that a medication was discontinued from “Patient no longer on metformin due to GI issues.”
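To make this concrete, the structured output for the metformin example might look like the sketch below. The `MedStatus` values, the `MedicationMention` fields, and the JSON shape are hypothetical choices for illustration, not a standard schema; the model call itself is omitted and its output is hard-coded.

```python
import json
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class MedStatus(Enum):
    """Hypothetical status vocabulary for a medication mention."""
    ACTIVE = "active"
    STOPPED = "stopped"
    PLANNED = "planned"


@dataclass
class MedicationMention:
    name: str
    status: MedStatus
    reason: Optional[str] = None


def parse_medication(raw_json: str) -> MedicationMention:
    """Parse one medication object from model output; raises ValueError on an unknown status."""
    data = json.loads(raw_json)
    return MedicationMention(
        name=data["name"].strip().lower(),
        status=MedStatus(data["status"]),  # enum lookup by value rejects unexpected statuses
        reason=data.get("reason"),
    )


# Illustrative (hard-coded) model output for: "Patient no longer on metformin due to GI issues."
llm_output = '{"name": "Metformin", "status": "stopped", "reason": "GI issues"}'
med = parse_medication(llm_output)
print(med.name, med.status.value, med.reason)  # metformin stopped GI issues
```

Constraining the status to a small closed set means the model's contextual inference ("no longer on" implies stopped) lands in a field downstream code can act on, and anything outside that set fails loudly instead of slipping through.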

4. Integration with Downstream Clinical Systems

LLM outputs can be piped into HL7 FHIR APIs or EHR systems like Epic or Cerner, enabling real-time chart updates or pre-coding for billing.

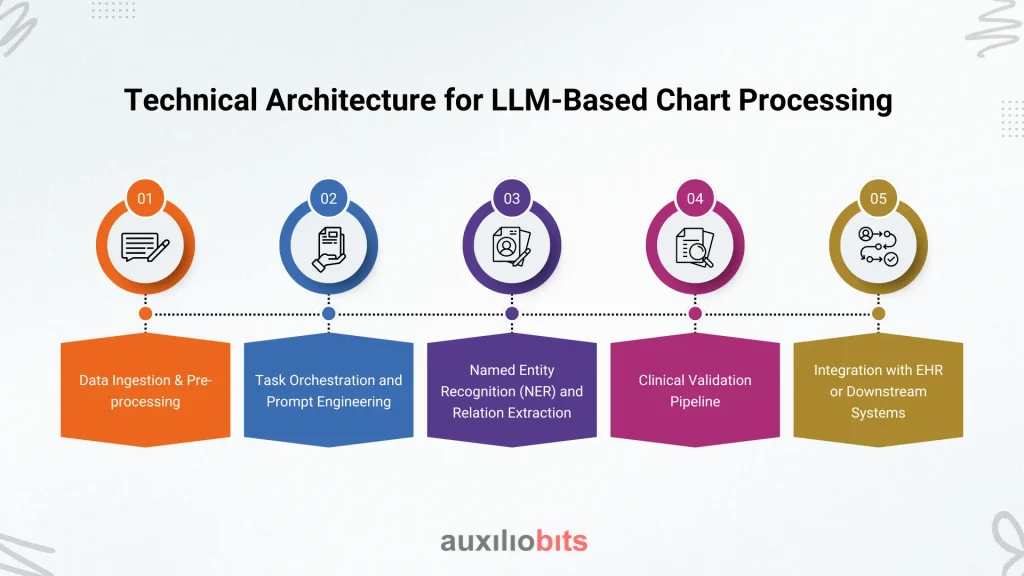

Technical Architecture for LLM-Based Chart Processing

An enterprise-grade chart extraction and validation system combines several components:

1. Data Ingestion & Pre-processing

- Scanned PDFs, HL7 messages, or raw text from EHR systems are ingested.

- OCR (e.g., Tesseract or Amazon Textract) converts scanned documents into machine-readable text.

- A preprocessing pipeline performs de-identification, section segmentation, and format normalization.
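The preprocessing steps can be sketched with the standard library alone, assuming OCR has already produced plain text. The `SECTION_HEADERS` list and the `PHI_PATTERNS` regexes are hypothetical and deliberately simplistic; production de-identification needs a validated tool, not a handful of regexes.

```python
import re

# Hypothetical section headers; real notes vary by institution and template.
SECTION_HEADERS = ["CHIEF COMPLAINT", "HISTORY OF PRESENT ILLNESS", "MEDICATIONS",
                   "ASSESSMENT AND PLAN"]

# Toy PHI patterns for illustration only; NOT a complete de-identification method.
PHI_PATTERNS = {
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:#]?\s*\d+\b", re.IGNORECASE),
}


def normalize(text: str) -> str:
    """Unify line endings and collapse runs of spaces/tabs."""
    text = text.replace("\r\n", "\n").replace("\r", "\n")
    return re.sub(r"[ \t]+", " ", text).strip()


def deidentify(text: str) -> str:
    """Replace every match of each PHI pattern with a bracketed tag."""
    for tag, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[{tag}]", text)
    return text


def segment(text: str) -> dict[str, str]:
    """Split a note into sections keyed by header; text before the first header is dropped."""
    header_re = re.compile(
        r"^(" + "|".join(map(re.escape, SECTION_HEADERS)) + r"):", re.MULTILINE)
    matches = list(header_re.finditer(text))
    sections = {}
    for i, m in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        sections[m.group(1)] = text[m.end():end].strip()
    return sections


note = """MRN: 483920
CHIEF COMPLAINT: Follow-up on 03/14/2024.
MEDICATIONS: Metformin 500 mg BID. Call 555-123-4567 with questions.
ASSESSMENT AND PLAN: Type II Diabetes Mellitus, uncontrolled."""

sections = segment(deidentify(normalize(note)))
# sections["MEDICATIONS"] == "Metformin 500 mg BID. Call [PHONE] with questions."
```

De-identifying before segmentation means no identifier can leak into a section that is later sent to a model, and per-section text lets later prompts target, say, only the assessment and plan.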

2. Task Orchestration and Prompt Engineering

- Well-crafted prompts direct the LLM to extract structured, codified values (e.g., diagnoses as ICD-10 codes, medications as RxNorm concepts).

- Multi-turn prompting and step-by-step reasoning improve output consistency.

- Orchestration frameworks such as LangChain or LlamaIndex handle prompt chaining, tool calls, and memory persistence.
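A minimal sketch of the prompt-and-parse step follows, assuming the model is asked to reply with JSON. The prompt wording, the schema, and `REQUIRED_KEYS` are hypothetical; the actual model call (direct API or an orchestrator such as LangChain) is omitted, and a hard-coded reply stands in for it. Codes are left as `null` rather than invented.

```python
import json

# Hypothetical prompt wording and output schema, for illustration only.
PROMPT_TEMPLATE = """You are a clinical data abstractor.
Extract every diagnosis and medication from the note below.
Return only JSON shaped like:
{{"diagnoses": [{{"text": "...", "icd10": "..."}}],
 "medications": [{{"text": "...", "rxnorm": "..."}}]}}
Use null for any code you are not confident about.

NOTE:
{note}"""

# Keys each extracted item must carry before it moves on to validation.
REQUIRED_KEYS = {"diagnoses": {"text", "icd10"}, "medications": {"text", "rxnorm"}}


def build_prompt(note: str) -> str:
    """Insert the (de-identified) note into the extraction prompt."""
    return PROMPT_TEMPLATE.format(note=note)


def parse_response(raw: str) -> dict:
    """Parse the model's JSON reply and reject items missing required keys."""
    data = json.loads(raw)
    for section, keys in REQUIRED_KEYS.items():
        items = data.get(section, [])
        if not isinstance(items, list):
            raise ValueError(f"{section} must be a list")
        for item in items:
            if not isinstance(item, dict):
                raise ValueError(f"{section} items must be JSON objects")
            missing = keys - set(item)
            if missing:
                raise ValueError(f"{section} item missing {sorted(missing)}")
    return data


prompt = build_prompt("A/P: Type II DM, uncontrolled. Continue metformin 500 mg BID.")

# The model call is omitted; this reply is a hard-coded stand-in for its output.
reply = ('{"diagnoses": [{"text": "Type II Diabetes Mellitus, uncontrolled", "icd10": null}],'
         ' "medications": [{"text": "metformin 500 mg BID", "rxnorm": null}]}')
result = parse_response(reply)
```

Failing fast on malformed output gives an orchestrator a clear signal to retry or ask a follow-up question in a multi-turn exchange, rather than passing half-formed records downstream.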

3. Named Entity Recognition (NER) and Relation Extraction

- Use LLMs or hybrid models (LLM + spaCy/Stanza) to extract entities:

  - Diagnoses, medications, labs, dosages, vitals

- Establish relationships:

  - Medication–Dosage–Frequency

  - Diagnosis–Onset–Severity
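As an illustration of the Medication–Dosage–Frequency relation, the sketch below links a drug mention to the dose, unit, and frequency that follow it. This is a swapped-in rule-based technique, not an LLM: the pattern, the `FREQ` vocabulary, and `MedRelation` are hypothetical, and in a hybrid setup rules like this would typically cross-check LLM or spaCy/Stanza output rather than replace it. Diagnosis–Onset–Severity is not covered here.

```python
import re
from dataclasses import dataclass

# Hypothetical frequency vocabulary; real sigs are far more varied.
FREQ = r"(?:daily|BID|TID|QID|q\d+h|once daily|twice daily)"

# drug name, numeric dose, unit, then frequency, in that order
MED_DOSE_FREQ = re.compile(
    rf"(?P<drug>[A-Za-z][A-Za-z-]+)\s+(?P<dose>\d+(?:\.\d+)?)\s*(?P<unit>mg|mcg|g|units?)"
    rf"\s+(?P<freq>{FREQ})\b",
    re.IGNORECASE,
)


@dataclass
class MedRelation:
    drug: str
    dose: float
    unit: str
    frequency: str


def extract_med_relations(text: str) -> list[MedRelation]:
    """Return one relation per drug mention immediately followed by dose, unit, and frequency."""
    return [
        MedRelation(m["drug"].lower(), float(m["dose"]), m["unit"].lower(), m["freq"].upper())
        for m in MED_DOSE_FREQ.finditer(text)
    ]


rels = extract_med_relations("Continue metformin 500 mg BID. Start lisinopril 10 mg daily.")
# [MedRelation('metformin', 500.0, 'mg', 'BID'), MedRelation('lisinopril', 10.0, 'mg', 'DAILY')]
```

Rules like this are precise but brittle (they miss "500mg PO twice a day with meals"), which is exactly the gap an LLM fills; agreement between the two is a cheap confidence signal.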

4. Clinical Validation Pipeline

- Extracted data is cross-checked against reference data and related fields (e.g., lab reference ranges, drug–drug interactions).

- Rule-based validators or secondary LLM prompts confirm internal consistency.

- Entity mappings are validated against medical ontologies (e.g., UMLS, SNOMED CT).
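A rule-based validator might look like the following sketch. The reference ranges are illustrative only (real ranges are lab- and population-specific), `ICD10_FORMAT` is a loose structural check rather than proof a code exists, and `KNOWN_CODES` is a hypothetical stand-in for a real ontology or terminology-server lookup.

```python
import re
from dataclasses import dataclass

# Illustrative adult reference ranges; real ranges are lab- and population-specific.
REFERENCE_RANGES = {
    "potassium": (3.5, 5.0, "mmol/L"),
    "sodium": (135.0, 145.0, "mmol/L"),
}

# Loose structural check for ICD-10-CM: letter, digit, alphanumeric, optional ".xxxx".
ICD10_FORMAT = re.compile(r"^[A-Z][0-9][0-9A-Z](?:\.[0-9A-Z]{1,4})?$")

# Hypothetical stand-in for an ontology lookup (e.g., a UMLS or terminology-server query).
KNOWN_CODES = {"E11.9": "Type 2 diabetes mellitus without complications"}


@dataclass
class Issue:
    field: str
    message: str


def validate(record: dict) -> list[Issue]:
    """Return issues for human review; an empty list means every check passed."""
    issues = []
    for lab in record.get("labs", []):
        name, value, unit = lab["name"].lower(), lab["value"], lab["unit"]
        if name not in REFERENCE_RANGES:
            issues.append(Issue(name, "no reference range configured"))
            continue
        low, high, expected_unit = REFERENCE_RANGES[name]
        if unit != expected_unit:
            issues.append(Issue(name, f"unexpected unit {unit!r}"))
        elif not low <= value <= high:
            # Abnormal is not the same as wrong: flag it so a reviewer confirms the value.
            issues.append(Issue(name, f"{value} outside {low}-{high} {unit}"))
    for dx in record.get("diagnoses", []):
        code = dx.get("icd10")
        if code is None or not ICD10_FORMAT.match(code):
            issues.append(Issue(dx["text"], f"invalid ICD-10 format: {code!r}"))
        elif code not in KNOWN_CODES:
            issues.append(Issue(dx["text"], f"code {code} not found in terminology"))
    return issues


record = {
    "labs": [{"name": "Potassium", "value": 6.1, "unit": "mmol/L"},
             {"name": "Sodium", "value": 140, "unit": "mmol/L"}],
    "diagnoses": [{"text": "Type 2 diabetes", "icd10": "E11.9"},
                  {"text": "Hypertension", "icd10": "I10X"}],
}
issues = validate(record)  # flags potassium (out of range) and "I10X" (malformed code)
```

Returning a list of issues instead of raising lets the pipeline route a record to human review with every problem listed at once.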

5. Integration with EHR or Downstream Systems

- Use APIs to push validated data into FHIR-based endpoints or directly update patient records.

- Build audit trails of what was extracted, validated, and modified for compliance.
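The sketch below shows one way to package a validated diagnosis as a minimal FHIR R4 `Condition` resource and record a matching audit entry. The patient and document IDs, the actor label, and the audit-record fields are hypothetical; `verificationStatus` is set to `unconfirmed` on the assumption that machine-extracted data awaits clinician sign-off. Sending the resource (a FHIR create is a `POST` to `[base]/Condition`) is left out.

```python
import hashlib
import json
from datetime import datetime, timezone


def to_fhir_condition(patient_id: str, icd10: str, display: str) -> dict:
    """Build a minimal FHIR R4 Condition resource for a validated diagnosis."""
    return {
        "resourceType": "Condition",
        "clinicalStatus": {"coding": [{
            "system": "http://terminology.hl7.org/CodeSystem/condition-clinical",
            "code": "active"}]},
        "verificationStatus": {"coding": [{
            "system": "http://terminology.hl7.org/CodeSystem/condition-ver-status",
            "code": "unconfirmed"}]},  # assumption: pending clinician review
        "code": {"coding": [{
            "system": "http://hl7.org/fhir/sid/icd-10-cm",
            "code": icd10,
            "display": display}]},
        "subject": {"reference": f"Patient/{patient_id}"},
    }


def audit_entry(action: str, source_doc_id: str, payload: dict, actor: str) -> dict:
    """Record what was done, by whom, from which document, plus a hash of the exact payload."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "actor": actor,
        "source_document": source_doc_id,
        "payload_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
    }


condition = to_fhir_condition("example-123", "E11.9",
                              "Type 2 diabetes mellitus without complications")
entry = audit_entry("extracted+validated", "note-456", condition, actor="llm-pipeline-v1")
```

Hashing a canonical (sorted-key) serialization means an auditor can later prove exactly which payload was sent, without storing a second copy of clinical data in the log.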

Implementing LLMs for clinical data extraction can improve efficiency, accuracy, and compliance across healthcare workflows.

Benefits of Implementing LLMs for Clinical Data Extraction

Some of the benefits of implementing LLMs for clinical data extraction are as follows:

- Zero-Burnout Workflows

LLMs reduce the effort required for manual chart review, freeing physicians, coders, and billers from repetitive abstraction tasks. This makes the work faster and less stressful.

- Clinically Explainable Outputs

LLMs can be prompted to cite the source text and rationale behind each extracted value. Clinical teams can then see how a result was reached and trust the extracted information.

- Audit-Ready Documentation

Organized, validated data supports compliance requirements. Adhering to CMS guidelines and clinical coding standards reduces audit risk and supports accurate billing.

- AI That Learns from Feedback

LLM-based systems can improve over time by incorporating reviewer corrections and feedback. With continued use, the system becomes more accurate and trustworthy.
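One simple check behind "explainable" outputs: if the prompt asks the model to quote its evidence for each value, code can confirm that the quote really appears in the note. The `evidence` and `grounded` field names are hypothetical. A verbatim match only shows the citation is real; it does not prove the extracted value is clinically correct.

```python
import re


def normalize_ws(text: str) -> str:
    """Lowercase and collapse whitespace so line breaks in the note don't break matching."""
    return re.sub(r"\s+", " ", text).strip().lower()


def check_evidence(note: str, extractions: list[dict]) -> list[dict]:
    """Mark each extraction as grounded if its quoted evidence appears verbatim in the note."""
    haystack = normalize_ws(note)
    results = []
    for item in extractions:
        quote = normalize_ws(item.get("evidence", ""))
        results.append({**item, "grounded": bool(quote) and quote in haystack})
    return results


note = ("Assessment: Type II Diabetes Mellitus, uncontrolled.\n"
        "Patient no longer on metformin due to GI issues.")
extractions = [
    {"value": "metformin: stopped", "evidence": "no longer on  metformin due to GI issues"},
    {"value": "insulin: active", "evidence": "started on insulin glargine"},
]
checked = check_evidence(note, extractions)  # grounded flags: [True, False]
```

Ungrounded items are good candidates for automatic rejection or reviewer attention, since the model has cited text that does not exist in the chart.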

Future Directions: From Extraction to Agentic Co-Pilots

The next step goes beyond data extraction to collaboration.

LLM-powered agents can:

- Create clinical summaries from the patient chart history

- Suggest next steps in diagnosis or treatment

- Identify inconsistencies (e.g., missing conditions, abnormal vitals)

- Collaborate with staff in a loop: observe, act, learn, and improve.
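The "learn and improve" part of that loop does not have to mean retraining. A minimal sketch, assuming reviewer corrections are captured as text, is to keep the most recent corrections and reuse them as few-shot examples in the next prompt. `FeedbackStore` and its methods are hypothetical names; periodic fine-tuning on accumulated corrections is an alternative.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Correction:
    snippet: str       # source text the model read
    model_output: str  # what the model extracted
    corrected: str     # what the reviewer changed it to


class FeedbackStore:
    """Keep the most recent reviewer corrections and render them as few-shot examples."""

    def __init__(self, max_examples: int = 3):
        self._items = deque(maxlen=max_examples)  # oldest corrections drop off first

    def record(self, snippet: str, model_output: str, corrected: str) -> None:
        if model_output != corrected:  # only keep genuine corrections
            self._items.append(Correction(snippet, model_output, corrected))

    def few_shot_block(self) -> str:
        return "\n\n".join(
            f"Text: {c.snippet}\nCorrect extraction: {c.corrected}" for c in self._items)


store = FeedbackStore(max_examples=2)
store.record("Pt d/c'd lisinopril (cough).", "lisinopril: active", "lisinopril: stopped")
store.record("BP 128/82.", "BP: 128/82", "BP: 128/82")  # ignored: reviewer made no change
store.record("Hx of MI 2019.", "MI: active", "MI: history")
store.record("No longer on statin.", "statin: active", "statin: stopped")
prompt_prefix = store.few_shot_block()  # holds the two most recent corrections
```

Capping the number of examples keeps prompts short and biases the model toward recent reviewer judgments; a real system would likely select examples by similarity to the current note instead of recency.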

These capabilities are already in development and early experimentation, and they point toward the future of AI-supported healthcare workflows.

Conclusion

LLMs are no longer just prototypes; they are ready for production use. By transforming messy, unstructured patient charts into clean, validated data, they help healthcare organizations work faster, remain compliant, and make better-informed decisions.

Auxiliobits develops end-to-end LLM solutions for healthcare providers—unifying AI reasoning, validation pipelines, medical ontologies, and EHR integration to support more intelligent, scalable clinical automation.