Key Takeaways

- Any AI tool handling patient data must meet strict privacy, security, and accountability standards to protect PHI and avoid legal penalties.

- From clinical note-taking to patient communication and billing, GPT-4o automates tedious tasks, enabling providers to focus more on care.

- PHI exposure, unauthorized access, and “shadow IT” use of AI tools can create major compliance violations if not proactively managed.

- Ensure that any AI partner, including OpenAI, agrees to a HIPAA-compliant BAA and follows security protocols for data handling and encryption.

- With technology evolving quickly, healthcare organizations must continuously train staff, audit systems, and update processes to stay compliant.

In healthcare today, introducing new technologies like Artificial Intelligence (AI) is no longer just an idea for the future; it’s happening right now. Among these new tools, GPT-4o, OpenAI’s latest model, stands out. It can handle various types of information, and it appears poised to transform many aspects of how healthcare operates. However, for any AI used in healthcare, one essential requirement is adherence to the rules of the Health Insurance Portability and Accountability Act (HIPAA).

This guide explains the vital connection between HIPAA-compliant automation and the use of GPT-4o. It’s written for healthcare workers, administrators, and anyone involved with technology, giving them the key information they need to handle this complex yet beneficial area. We’ll examine the significant benefits GPT-4o can offer in healthcare, the stringent rules of HIPAA, the challenges of ensuring AI adheres to these rules, the best ways to implement it safely, and what the future holds for this technology.

How Does GPT-4o Help in Healthcare?

GPT-4o goes beyond earlier text-only approaches, and that means many new things for healthcare. It can create text that sounds like it was written by a human, understand spoken words, and even make sense of visual information. This creates numerous opportunities to enhance efficiency, better support patients, and streamline office tasks. Here is how GPT-4o helps in healthcare:

1. Making Clinical Notes: One of the most time-consuming responsibilities of healthcare staff is writing detailed notes for patient care. GPT-4o can change this by:

* Automating Doctor’s Notes: It can record doctor-patient conversations as they occur, accurately capturing important notes, diagnoses, and treatment plans. This lets doctors spend more time with patients.

* Creating SOAP Notes: It can automatically organize subjective, objective, assessment, and plan notes from recorded meetings, saving a lot of time.

* Summarizing Patient Information: It can quickly create concise summaries of lengthy patient histories, discharge papers, and consultation notes, facilitating faster and more informed decision-making.

2. Better Patient Communication and Support: Intelligent automation can enhance patient communication by delivering timely answers, reminders, and personalized health education with clarity and care. This happens through:

* Smart Chatbots: These can help patients around the clock by answering common questions, scheduling appointments, and providing personalized health information in an easy-to-understand format.

* Before and After Visit Messages: It can automatically send reminders, follow-up steps, and general health tips, helping patients stick to their plans and get better results.

* Personalized Health Education: It can create simple explanations of medical conditions, treatment options, and medication instructions tailored to each patient’s reading level.

3. Helping with Diagnosis and Treatment Choices: AI-powered tools can assist clinicians by rapidly analyzing research, suggesting possible diagnoses, and supporting the interpretation of medical data to improve decision-making.

* Reviewing Medical Papers: It can quickly sift through vast amounts of research, medical guidelines, and journals to provide facts for diagnosis and treatment.

* Helping with Different Diagnoses: It can suggest possible diagnoses based on patient symptoms, health history, and test results, helping doctors think about more options.

* Support for Image Reading: Although it’s not a standalone diagnostic tool, GPT-4o’s ability to handle various types of information can aid in describing medical images (such as X-rays and MRIs) for educational purposes or initial assessments when used in conjunction with specialized medical imaging AI.

4. Enhancing Office Operations: Streamline administrative tasks with AI-driven automation that boosts efficiency in billing, referrals, and HR, allowing staff to focus on patient care.

* Managing Money Flow (Revenue Cycle): It can automate tasks such as sending claims, handling denied claims, and obtaining approvals in advance by reviewing documents and identifying errors.

* Handling Referrals: It can make the referral process smoother by quickly finding the right specialists and writing referral letters.

* HR and New Employee Setup: It can help with HR questions, explaining policies, and getting new employees set up by giving instant access to needed information.

The potential of GPT-4o to change healthcare is clear. However, to make this happen, we must handle patient data privacy and security with the utmost care, as mandated by HIPAA.

Understanding HIPAA: The Core of Patient Data Privacy

The Health Insurance Portability and Accountability Act (HIPAA) of 1996 is a federal law. It establishes national standards for safeguarding protected health information (PHI) from being disclosed without the patient’s consent or knowledge. It is the foundation of how patients’ trust and privacy are kept safe in the US healthcare system.

Main Parts of HIPAA:

- Privacy Rule: This rule governs the use and disclosure of PHI. It establishes national standards for safeguarding medical records and other sensitive personal health information. It also gives patients rights over their health information, including the ability to view and obtain a copy of their records and request corrections.

- Security Rule: This rule outlines the administrative, physical, and technical safeguards that healthcare organizations and their business associates must implement to protect electronic Protected Health Information (ePHI).

- Administrative Protections: These are policies and procedures that manage how security measures are chosen and carried out, including staff training, risk assessment, and workforce security.

- Physical Protections: These measures safeguard electronic information systems, their buildings, and equipment against natural hazards, environmental threats, and unauthorized access.

- Technical Protections: These refer to the technology and rules governing its use, which protect ePHI and control access to it. This includes features such as encryption, access controls, audit controls, and integrity controls.

- Breach Notification Rule: This rule says that covered entities must tell affected people, the Secretary of Health and Human Services, and sometimes the media if unsecured PHI is exposed. Business associates, in turn, must notify the covered entity they work for.

Who Must Follow HIPAA?

- Covered Entities: These include healthcare providers (such as hospitals, clinics, and doctors’ offices), health plans (like insurance companies), and healthcare clearinghouses.

- Business Associates (BAs): These are people or groups that do work for a covered entity that involves using or sharing PHI. This is particularly important for AI companies, as any AI solution that handles PHI will typically be considered a Business Associate. A Business Associate Agreement (BAA) is a legal contract between a covered entity and a business associate. It explains how the BA will protect PHI.

Failing to comply with HIPAA can result in severe penalties, including substantial fines and even criminal charges, as well as significant damage to a healthcare organization’s reputation. Therefore, truly understanding and being committed to HIPAA compliance is essential for any group seeking to utilize AI automation in healthcare.

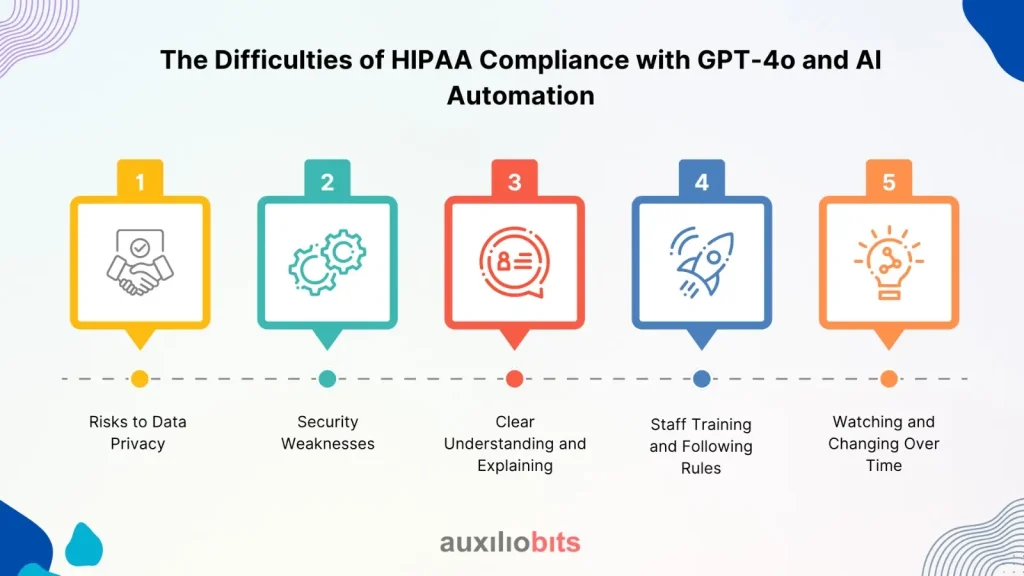

The Difficulties of HIPAA Compliance with GPT-4o and AI Automation

Using advanced tools like GPT-4o presents unique challenges for maintaining HIPAA compliance. The way large language models (LLMs) operate and the data they process can clash with HIPAA’s strict privacy and security rules if not managed carefully.

1. Risks to Data Privacy:

* Making Data Anonymous vs. Being Able to Identify It Again: While AI can help make data anonymous, there’s a risk that advanced AI models, because they can process huge and varied amounts of data, might be able to identify individuals from data that was supposed to be anonymous. This is a significant concern for the “safe harbor” method of anonymizing data under HIPAA.

* Prompts and PHI Leaks: If users accidentally include PHI in their prompts to GPT-4o, and the model isn’t set up with HIPAA-friendly protections, this information could be processed and possibly stored in a manner that doesn’t comply with regulations.

* Rules for Keeping Data: LLMs often learn from the data they process. It’s crucial to ensure that PHI isn’t used to train the model or retained longer than necessary, as required by HIPAA. For example, OpenAI has promised not to train models on business data by default for business users; however, this needs to be confirmed and handled through specific agreements.

2. Security Weaknesses:

* Managing Outside Vendors: Most healthcare groups will use GPT-4o through an API from OpenAI or another vendor. It is essential to ensure that these vendors also comply with HIPAA and sign robust Business Associate Agreements (BAAs). The vendor’s security directly affects the healthcare group’s compliance.

* API Security: The ways AI models interact with healthcare systems (APIs) can be weak spots for cyberattacks if they lack strong login checks, encryption, and access controls.

* Cloud Security: If GPT-4o or related data processing happens in cloud systems, it’s essential that the cloud provider meets HIPAA security rules and has a BAA in place.

3. Transparency and Explainability (The “Black Box” Issue):

* Bias in Algorithms: AI models can exhibit biases that were present in the data on which they were trained. This could lead to unfair or wrong results for specific patient groups. While not a direct HIPAA violation, it can have ethical and legal consequences that connect to fair care, which HIPAA indirectly supports.

* Lack of Clarity: It can be challenging to understand how an AI model arrives at a particular answer. In healthcare, especially when making decisions about patient care, being able to explain why an AI suggested something is significant for being responsible and building trust. This “black box” nature can make compliance checks and investigations harder.

4. Staff Training and Following Rules:

* Human Error: Even with strong technical protections, human error remains a significant risk. Healthcare staff must be fully trained on how to use AI tools in a manner that adheres to HIPAA guidelines, including understanding what information can and cannot be shared, as well as recognizing any potential warning signs.

* Shadow IT: Because powerful GPT-4o tools are easily accessible, employees sometimes use these tools without proper oversight. This increases the chance of breaking HIPAA rules.

5. Monitoring and Adapting Over Time:

* Changing Technology: AI technology is advancing rapidly. What’s considered a good way to do things today might be old news tomorrow. Healthcare groups must establish mechanisms to continually monitor and adapt their processes to stay current with emerging technologies and evolving interpretations of HIPAA rules.

* Handling Problems: Strong plans for handling problems are vital to quickly find, stop, and fix any data breaches or security issues involving AI systems.

Good Ways to Use GPT-4o and Follow HIPAA Rules

To use GPT-4o effectively while complying with HIPAA regulations, a comprehensive approach is necessary that encompasses technical, administrative, and physical security measures.

1. Choosing a Secure Vendor and Business Associate Agreements (BAAs):

* Careful Checks: Fully check any AI vendor, including OpenAI (for their business-level assurances), to ensure they understand and follow through on their promise to adhere to HIPAA rules.

* Strong BAAs: A detailed BAA is a must. This agreement must clearly state what each party is responsible for in protecting PHI, list the uses and sharing that are allowed, explain the security measures, and include provisions regarding notification of breaches and audit rights. For OpenAI, their business services often include BAA support, which is key.

2. Making Data Anonymous and Unidentifiable:

* Make Anonymous First: Before putting any patient data into GPT-4o for training or processing (where allowed and needed), use strong methods to de-identify it. This means removing or concealing the 18 types of identifiers listed under HIPAA’s Safe Harbor method, such as names, Social Security numbers, and phone numbers.

* Pseudonymization and Tokenization: Use methods such as pseudonymization (replacing identifiers with reversible codes) or tokenization (replacing sensitive data with non-sensitive substitutes) before the AI processes the data. This allows the AI to perform its task without directly handling raw PHI.

* Secure Data Paths: Design secure methods for data movement that ensure PHI is de-identified as early as possible and only re-identified when necessary, under strict controls.
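The tokenization and re-identification steps above could be sketched roughly as follows. This is a minimal illustration, not a complete de-identification tool: the `TokenVault` class and the regex patterns are hypothetical, and they cover only a handful of identifier types rather than all 18. The idea is that the text sent to the AI contains only random tokens, while the mapping back to real values stays inside the organization.

```python
import re
import secrets

# Hypothetical patterns for illustration only. A real deployment would need
# to cover every HIPAA identifier type, including names and addresses.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d{6,10}\b"),
}


class TokenVault:
    """Maps identifiers to random tokens and back. The vault never leaves the organization."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, kind, value):
        # Reuse the same token for a repeated value so the text stays consistent.
        if value not in self._value_to_token:
            token = f"[{kind}_{secrets.token_hex(4)}]"
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]

    def deidentify(self, text):
        # Replace every matched identifier with its token before the AI sees the text.
        for kind, pattern in PATTERNS.items():
            text = pattern.sub(lambda m, k=kind: self.tokenize(k, m.group(0)), text)
        return text

    def reidentify(self, text):
        # Swap tokens back to real values; this step should sit behind strict access controls.
        for token, value in self._token_to_value.items():
            text = text.replace(token, value)
        return text


vault = TokenVault()
note = "Patient MRN: 00123456, SSN 123-45-6789, call 555-123-4567."
safe_note = vault.deidentify(note)   # this version is what would be sent to the AI
restored = vault.reidentify(safe_note)
```

Pattern matching alone tends to miss free-text identifiers such as names, so a sketch like this would normally be one layer among several rather than the only safeguard.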

3. Strong Technical Protections:

* Full Encryption: All PHI, whether stored or transmitted, must be encrypted using strong, industry-standard encryption methods. This applies to data sent to and from GPT-4o’s API.

* Access Controls and Role-Based Access (RBAC): Implement strict controls for accessing AI systems and the data they handle. Only authorized people who genuinely need to know should be able to access PHI or AI outputs that contain PHI. RBAC ensures that users have only the minimum access required for their work.

* Tracking and Logging: Keep thorough records of all access to PHI and AI system actions. These logs are essential for identifying unusual activity, investigating problems, and demonstrating compliance during audits.

* Secure API Connections: When connecting GPT-4o through APIs, ensure that you use secure methods for authentication (such as API keys or OAuth), secure communication protocols (HTTPS/TLS), and limit the frequency of requests to prevent misuse.

* Self-Hosted or Private Cloud Use (if possible): For groups with sufficient resources and stringent control requirements, running AI models on their own servers or in private clouds can provide greater control over data. However, this also means more work and cost to manage.
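As a rough illustration of how role-based access and logging can work together, the sketch below checks each request against a role-to-permission map and records every decision, allowed or denied, in an append-only log. The role names, permission names, and the `AuditLog` class are all hypothetical. Each log entry stores the hash of the previous entry, so a later edit to an earlier record can be detected.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical role-to-permission map; real roles would come from the
# organization's identity provider.
ROLE_PERMISSIONS = {
    "physician": {"read_phi", "write_note", "use_ai_summary"},
    "billing": {"read_claims", "use_ai_coding"},
    "front_desk": {"schedule"},
}


def _digest(body):
    # Hash a log entry in a stable key order so verification is repeatable.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log in which each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    def record(self, user, role, action, allowed):
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
            "prev_hash": prev_hash,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        # Recompute every hash; any edited or reordered entry breaks the chain.
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


def authorize(user, role, action, log):
    # Deny by default: unknown roles get an empty permission set.
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user, role, action, allowed)
    return allowed
```

Logging denied attempts as well as successful ones is a deliberate choice here, since repeated denials are often the first sign of misuse.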

4. Administrative Rules and Procedures:

* Data Management Plan: Establish clear rules and steps for collecting, using, storing, sharing, and disposing of PHI within AI workflows. This includes saying who owns the data, who is responsible, and who is accountable.

* Risk Checks and Management: Conduct regular, complete HIPAA risk assessments to identify, assess, and mitigate potential vulnerabilities and risks to ePHI when utilizing AI. This is a continuous process that should change as AI technology develops.

* Plan for Handling Problems: Create and regularly test a detailed plan for addressing data breaches or security issues specifically related to AI. This plan should outline steps for identifying, addressing, resolving, recovering from, and learning from the problem.

* “Minimum Necessary” Rule: Design AI applications and workflows to follow the HIPAA “minimum necessary” rule. This means that the AI should access, use, or share the absolute minimum amount of PHI necessary for a specific task.
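One simple way to approach the “minimum necessary” rule in an AI workflow is a per-task allowlist of fields, applied before any record reaches the model. The sketch below assumes hypothetical task names and field names; the point is only that a task with no defined allowlist fails rather than silently passing the full record through.

```python
# Hypothetical task-to-field allowlist. Each AI task sees only the fields
# it needs, and nothing else from the patient record.
TASK_FIELDS = {
    "appointment_reminder": {"first_name", "appointment_time", "clinic_phone"},
    "claim_review": {"claim_id", "procedure_codes", "diagnosis_codes", "payer"},
}


def minimum_necessary(record, task):
    """Return only the fields of `record` that the given task is allowed to use."""
    allowed = TASK_FIELDS.get(task)
    if allowed is None:
        # Fail closed: an unknown task gets no data at all.
        raise ValueError(f"No field allowlist defined for task: {task}")
    return {key: value for key, value in record.items() if key in allowed}


record = {
    "first_name": "Jane",
    "ssn": "123-45-6789",
    "diagnosis_codes": ["E11.9"],
    "appointment_time": "2025-03-04 09:30",
    "clinic_phone": "555-010-0000",
}
reminder_input = minimum_necessary(record, "appointment_reminder")
```

In this example the reminder task never receives the SSN or diagnosis codes, even though they are present in the source record.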

5. Thorough Staff Training and Awareness:

* Required HIPAA Training: All staff who use AI tools or handle PHI must take required and regular HIPAA training, specifically covering the details of AI in healthcare.

* AI-Specific Guidelines: Establish clear guidelines for staff on how to use AI tools in a way that follows the rules. This includes the types of information that can and cannot be input into the AI, as well as how to handle AI-generated outputs that contain PHI.

* Security and Privacy Culture: Establish a strong culture within the organization that prioritizes data security and patient privacy, enabling employees to identify and report potential compliance issues.

6. Constant Monitoring and Auditing:

* Regular Audits: Conduct regular internal and external assessments of AI systems to confirm they continue to meet HIPAA requirements.

* Performance Monitoring: Continuously monitor how AI systems are performing to identify any unusual behavior that may indicate security breaches or unintended data sharing.

* Stay Up-to-Date: Keep informed about the latest HIPAA rules, guidance from the Office for Civil Rights (OCR), and emerging best practices for AI security and privacy.
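The monitoring described above could start with something as simple as comparing each user’s PHI access volume against what is typical for the group. The sketch below is an assumption-heavy illustration: the event format, the `read_phi` action name, and the thresholds are all hypothetical, and a real system would likely use per-role baselines and time windows.

```python
from collections import Counter
from statistics import median


def flag_unusual_access(events, multiplier=3.0, min_count=10):
    """Return users whose PHI read count is far above the group median.

    `events` is a list of dicts with "user" and "action" keys (hypothetical format).
    """
    counts = Counter(e["user"] for e in events if e["action"] == "read_phi")
    if not counts:
        return []
    typical = median(counts.values())
    # Flag only users who are both high in absolute terms and well above typical.
    return sorted(
        user
        for user, n in counts.items()
        if n >= min_count and n > multiplier * typical
    )


events = (
    [{"user": "alice", "action": "read_phi"}] * 5
    + [{"user": "bob", "action": "read_phi"}] * 6
    + [{"user": "carol", "action": "read_phi"}] * 4
    + [{"user": "mallory", "action": "read_phi"}] * 40
)
flagged = flag_unusual_access(events)
```

A flag from a check like this is a prompt for human review, not proof of a violation, since some roles legitimately access far more records than others.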

What’s Next for HIPAA-Compliant Automation with GPT-4o?

Bringing together powerful AI, such as GPT-4o, and the strict demands of HIPAA compliance isn’t just a hurdle; it’s an opportunity to build a healthcare system that’s more effective, patient-focused, and safe. The future of healthcare automation with GPT-4o will likely see:

- More Specialized HIPAA-Friendly AI Platforms: We can expect to see more companies offering AI solutions specifically designed for healthcare, with HIPAA compliance already built into their design. These platforms will utilize GPT-4 (or later versions) but will incorporate additional layers of security, methods to anonymize data, and guidelines for data management.

- “Guardrail” AI for PHI: Better “guardrail” systems will be developed and utilized with LLMs to automatically identify and remove PHI from both user queries and AI responses, thereby preventing accidental disclosure.

- Federated Learning and Differential Privacy: These privacy-preserving methods are becoming more widely used. Federated learning enables models to be trained on different datasets without the data ever leaving its source. Differential privacy adds carefully calibrated noise to data or results to protect individual privacy while still allowing for overall analysis. Both significantly reduce the risk of exposure during AI training.

- Better AI Explanation Tools: Continued focus on making AI models easier to understand will give doctors more insight into how AI-driven suggestions are made, building accountability and responsibility.

- Standard Rules for AI Management: Common rules and certifications for AI in healthcare, building on HIPAA, will likely emerge, making it more straightforward for groups to follow the rules.

- AI and Patient Rights: Automation, when implemented correctly, can benefit patients by providing them with faster access to their health information, more personalized care, and improved communication methods, all while safeguarding their privacy rights.
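The privacy technique in the list above that modifies data while still allowing overall analysis is commonly implemented as differential privacy. A minimal sketch of one standard building block, the Laplace mechanism applied to a count, is shown below. The function names are hypothetical, and the choice of `epsilon` (the privacy budget) is a policy decision that this sketch does not address.

```python
import random


def laplace_noise(scale, rng=random):
    # The difference of two independent exponential samples with rate 1/scale
    # follows a Laplace distribution centered at 0 with the given scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count, epsilon, sensitivity=1.0, rng=random):
    """Return a noisy count using the Laplace mechanism.

    sensitivity is how much one patient can change the count (1 for a simple count).
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Example: report how many patients have a condition without exposing the exact number.
noisy = private_count(true_count=128, epsilon=0.5)
```

Individual results vary from run to run, but averaged over many queries the noise centers on zero, which is why aggregate analysis remains useful.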

Fully utilizing GPT-4o in healthcare while maintaining compliance is an ongoing journey. It requires constant care, dedication to good practices, and teamwork among technology creators, healthcare providers, and government agencies.

Conclusion

Automation that adheres to HIPAA rules, powered by new AI models like GPT-4o, promises to transform the delivery of healthcare, streamline processes, and enhance patient outcomes. From changing how clinical notes are written and how patients are supported to making office work smoother and helping with treatment choices, the possibilities are enormous.

However, this great power comes with a serious responsibility: keeping private patient health information safe. Following HIPAA isn’t just a task to check off; it’s a fundamental principle that should be built into every step of using AI in healthcare. By understanding HIPAA’s detailed rules, actively addressing the privacy and security challenges of AI data, and strictly adhering to good practices, healthcare groups can harness the remarkable capabilities of GPT-4o while safeguarding the trust and privacy of their patients.

The future of healthcare is bright, automated, and, most importantly, safe. Using automation that adheres to HIPAA with GPT-4o isn’t just an upgrade in technology; it’s a vital step forward to a healthier, more effective, and more trusted healthcare system.