Key Takeaways

- Voice AI is only as strong as its integrations. A speech agent that can’t connect to CRM, billing, or authentication systems quickly becomes just another IVR with a friendlier tone.

- Escalation design is as critical as recognition accuracy. Successful deployments hinge on knowing when the machine should stop and a human should step in.

- Cost savings are real but rarely straightforward. Automation trims handling time for routine tasks, but integration, compliance, and customer-experience investments eat into the raw numbers.

- Human workforce dynamics change. Reps shift from handling repetitive logistics to managing escalations, and leadership has to rethink performance metrics around empathy and resolution rather than speed alone.

- Azure’s advantage lies in the ecosystem, not just the tech. The speech models are impressive, but the seamless tie-in with Microsoft’s CRM, automation, and compliance stack is what wins enterprise adoption.

Most enterprises know the frustration of scaling customer support. More channels open, call volumes spike, and suddenly the carefully trained team of live agents spends half the day apologizing for wait times rather than solving problems. Voice automation has been pitched as a cure for decades—think IVR menus from the 1990s—but those systems never really felt like talking to a person. They were rigid, brittle, and rarely left a caller satisfied.

That landscape has shifted. Microsoft’s Azure Cognitive Speech Services (since rebranded as Azure AI Speech) have matured enough that voice agents can carry out customer-facing tasks that previously demanded a human. Still, the story isn’t as simple as “plug in AI, replace call center.” It’s a messy, nuanced evolution in which integration, operations, and culture matter as much as the speech models themselves.

Why Azure’s Speech Stack Matters

Plenty of cloud vendors promise conversational AI. The reason many enterprises quietly prefer Azure isn’t just the speech recognition accuracy—it’s the full ecosystem around it. A contact center rarely needs “just transcription.” It needs:

- High-accuracy automatic speech recognition (ASR) across multiple dialects and noisy backgrounds. Callers don’t all speak into studio microphones; they’re in cars, busy shops, or on low-quality VoIP.

- Real-time speech translation when a support queue suddenly has to handle Spanish-speaking customers in Phoenix or Mandarin speakers in Vancouver.

- Neural text-to-speech (TTS) voices that sound natural enough not to break the illusion after the third exchange.

But the real draw is how all of this plugs into Microsoft’s broader stack: Power Virtual Agents (now Microsoft Copilot Studio), Dynamics 365 Customer Service, and Azure Bot Service. Enterprises that already run their CRM and workforce platforms on Microsoft aren’t buying speech as a point solution; they’re buying a set of interoperable gears that drop into their existing workflows.

The Practicalities of Voice Agents in Service

It’s easy to get wowed by a demo where an agent books a flight or resets a password in seconds. The harder question is, what does it take to operationalize that across tens of thousands of daily calls?

1. Integration with Core Systems

Speech services don’t exist in a vacuum. A customer doesn’t want a pleasant robotic voice that simply says, “Please wait while I connect you.” They want a refund processed, a shipping address updated, or an account unlocked. That requires APIs into ERP, CRM, billing, and authentication systems.

This is where many deployments stumble. The speech layer is polished, but the back-end connectivity is brittle. When the agent cannot execute, it becomes a glorified IVR again.
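To make that failure mode concrete, here is a minimal Python sketch of the execution layer behind a voice agent. It assumes the speech side has already produced an intent name and a single entity; the intent names, `HANDLERS`, `BackendError`, and the stub functions are hypothetical stand-ins for real CRM, billing, and identity integrations, not an Azure API. The point is the fallback: when no integration exists or a call fails, the agent escalates with context instead of stalling.

```python
from typing import Callable, Dict


class BackendError(Exception):
    """Raised when a downstream system (CRM, billing, identity) cannot complete a request."""


# Hypothetical back-end calls. In production these would wrap real CRM,
# billing, or identity APIs; here they are stubs so the sketch runs alone.
def lookup_refund_status(order_id: str) -> str:
    if not order_id:
        raise BackendError("missing order id")
    return f"Refund for order {order_id} has been issued."


def unlock_account(user_id: str) -> str:
    # Simulates a brittle integration that times out.
    raise BackendError("identity service timed out")


HANDLERS: Dict[str, Callable[[str], str]] = {
    "refund_status": lookup_refund_status,
    "unlock_account": unlock_account,
}


def handle_intent(intent: str, entity: str) -> Dict[str, str]:
    """Execute a recognized intent, or escalate with context if the back end can't."""
    handler = HANDLERS.get(intent)
    if handler is None:
        return {"action": "escalate", "reason": f"no integration for '{intent}'"}
    try:
        return {"action": "respond", "text": handler(entity)}
    except BackendError as err:
        # Pass the failure reason to the human agent instead of looping
        # the caller back through menus like a legacy IVR.
        return {"action": "escalate", "reason": str(err)}
```

Calling `handle_intent("unlock_account", "u-42")` returns an escalation carrying the reason "identity service timed out", which a human agent can act on immediately.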

2. Escalation and Handover

Every enterprise support leader will say the same thing: you cannot automate empathy. Customers want to know they’ve been heard, especially when money is at stake. A voice agent that knows when to quit, handing over gracefully to a human, is far more valuable than one that stubbornly keeps trying past the limits of what it can resolve.

The nuance lies in escalation cues. It’s not just when the caller says “agent.” It’s when sentiment analysis (which in Azure’s stack scores text, such as the live transcript) picks up rising frustration, or when repeated rephrasings still fail to match an intent. Azure’s AI stack has sentiment analysis built in, but tuning the escalation thresholds is more art than science.
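One way to encode those cues is a small per-call policy object. This is a sketch under stated assumptions: each turn arrives with transcript text, a sentiment score normalized to [0, 1] (how you derive it from a sentiment service’s output is up to you), and a flag saying whether intent matching succeeded. The thresholds, keyword list, and class name are hypothetical placeholders; the tuning the paragraph describes is exactly the work of replacing them with values validated on real calls.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Phrases that count as an explicit request for a person (illustrative list).
HUMAN_REQUEST = re.compile(r"\b(agent|representative|human|real person)\b", re.IGNORECASE)


@dataclass
class EscalationPolicy:
    # Placeholder thresholds; real values come from tuning against recorded calls.
    sentiment_floor: float = 0.3   # escalate immediately below this score
    watch_level: float = 0.5       # a falling trend only counts below this score
    max_failed_matches: int = 2    # consecutive turns with no intent match
    failed_matches: int = 0
    history: List[float] = field(default_factory=list)

    def should_escalate(self, text: str, sentiment: float, intent_matched: bool) -> bool:
        """Return True if this caller turn should trigger a handover to a human.

        `sentiment` is assumed to be a score in [0, 1] where lower is more negative.
        """
        self.history.append(sentiment)
        # Count consecutive misses; any successful match resets the streak.
        self.failed_matches = 0 if intent_matched else self.failed_matches + 1
        last3 = self.history[-3:]
        falling = len(last3) == 3 and last3[0] > last3[1] > last3[2]
        return bool(
            HUMAN_REQUEST.search(text)
            or sentiment < self.sentiment_floor
            or self.failed_matches >= self.max_failed_matches
            or (falling and sentiment < self.watch_level)
        )
```

Keeping the policy per call means the rephrasing count and sentiment trend reset naturally for each new caller.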

3. Compliance and Recording

For industries like healthcare or banking, support calls aren’t just conversations; they’re legal records. Enterprises need transcripts, encrypted audio storage, and sometimes real-time redaction of sensitive data. Microsoft has leaned into this with features like “conversation redaction” for numbers and identifiers, but implementation requires tight alignment with internal policy.
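Microsoft’s own redaction features are the natural choice on Azure, but the underlying idea is easy to show in plain Python. The following sketch is a regex stand-in, not Microsoft’s implementation: it masks card numbers, US Social Security numbers, and email addresses in a transcript before storage. The patterns are illustrative and only catch digits that transcription has already normalized into numerals, which is one reason redaction needs the policy alignment mentioned above.

```python
import re

# Illustrative patterns only. Production redaction should rely on vetted,
# locale-aware detectors (or a managed PII service), not a handful of regexes.
PATTERNS = {
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),     # 13-16 digit card numbers
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),          # US Social Security numbers
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
}


def redact(transcript: str) -> str:
    """Replace each match with a bracketed label before the transcript is stored."""
    for label, pattern in PATTERNS.items():
        transcript = pattern.sub(f"[{label}]", transcript)
    return transcript
```

The card pattern starts and ends on a digit so that surrounding spaces and punctuation survive redaction intact.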

Case Examples: What Works, What Doesn’t

Example 1: Retail Returns

A global apparel brand adopted Azure speech agents to handle post-holiday return spikes. The scenario looked perfect for automation: most customers just wanted a return label or refund status.

- What worked: Agents could instantly pull up order history via Dynamics 365, verify details, and email a label—all in under a minute. Call abandonment dropped.

- What didn’t: Customers calling about defective merchandise often vented frustration, used sarcasm, or described items in unexpected ways. The voice agents struggled, escalations spiked, and human staff still absorbed the emotional load.

The takeaway? Automate repetitive logistics, but don’t expect voice AI to soothe angry customers about a ripped jacket.

Example 2: Healthcare Scheduling

A regional hospital chain experimented with Azure voice scheduling for outpatient visits. Patients called a central line, stated symptoms, and received available slots.

- What worked: Elderly patients appreciated not having to press keypad options endlessly. The speech interface was simpler.

- What didn’t: HIPAA compliance required scrubbing sensitive details, and cross-system scheduling APIs were fragile. IT overhead ballooned, and the rollout slowed.

In other words, usability was there, but integration debt became the bottleneck.

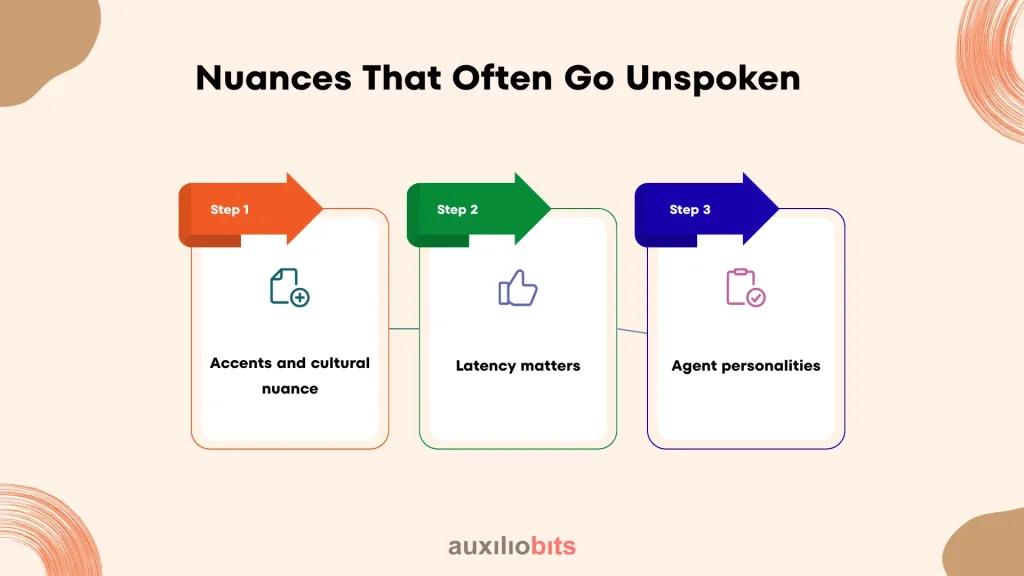

Nuances That Often Go Unspoken

Three of them come up again and again:

- Accents and cultural nuance. Azure’s speech models are robust, but enterprises serving immigrant communities still report occasional mistranscriptions. A heavy Punjabi accent or strong Appalachian drawl can still trip the models. Humans adapt; machines less so.

- Latency matters. Even a 300-millisecond delay in response feels robotic. Customers notice. Engineers know this, but business leaders often underestimate how much edge optimization and caching matter.

- Agent personalities. A voice agent that always says “thank you for your patience” can sound polite once but grating the tenth time. Enterprises are experimenting with micro-variations in phrasing to avoid the uncanny valley.
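A response delay is the sum of several stages, which is why edge placement and caching matter. The sketch below totals one turn against the 300 ms figure above and ranks stages from slowest to fastest, so you can see which one to attack first. The stage names and timings are hypothetical; in practice they would come from your own tracing.

```python
from typing import Dict, List, Tuple


def latency_report(stage_ms: Dict[str, float], budget_ms: float = 300.0) -> Tuple[float, bool, List[str]]:
    """Total one turn's response delay and rank stages from slowest to fastest.

    The 300 ms default mirrors the threshold discussed above; stage names
    and timings are hypothetical and should come from your own tracing.
    """
    total = sum(stage_ms.values())
    ranked = sorted(stage_ms, key=stage_ms.get, reverse=True)
    return total, total < budget_ms, ranked


# Example turn: the CRM lookup dominates, so caching it or moving it closer
# to the speech endpoint would help more than tuning text-to-speech.
turn = {"speech_to_text_final": 120, "intent_match": 40, "crm_lookup": 180, "tts_first_audio": 90}
total, within_budget, slowest_first = latency_report(turn)
```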

These aren’t failures of Azure itself—they’re signs of where human tuning still matters.

The Cost Question

CFOs inevitably ask: Does this save us money? The answer is complicated.

- For high-volume, low-complexity queries (password resets, shipping status, policy renewals), yes, the ROI can be swift.

- For emotionally charged or complex interactions (fraud disputes, medical consultations), automation may reduce agent minutes but increase customer dissatisfaction if pushed too far.

Some organizations have saved 25–30% on call-handling costs, only to spend part of that on improving escalation workflows, customer education, and quality monitoring. The “savings” story is less linear than vendors suggest.
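The arithmetic behind that caveat is simple but worth making explicit. This sketch uses hypothetical figures (a $2M annual handling cost, the low end of the 25–30% range, and $200k reinvested); none of them come from a real deployment.

```python
def net_savings(baseline_cost: float, gross_rate: float, reinvestment: float) -> dict:
    """Net effect of automation after money goes back into escalation, education, and QA."""
    gross = baseline_cost * gross_rate
    net = gross - reinvestment
    return {"gross": gross, "net": net, "net_rate": net / baseline_cost}


# Hypothetical: $2M annual handling cost, 25% gross savings, $200k reinvested.
result = net_savings(2_000_000, 0.25, 200_000)
```

Under those assumptions, a 25% headline saving nets out to 15% of the baseline.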

The Cultural Layer Inside Contact Centers

Technology conversations often ignore the human workforce, yet deploying voice agents shifts team morale. Some reps feel displaced; others are relieved that the repetitive drudgery is gone. Supervisors find themselves spending more time coaching empathy than call-handling speed.

A subtle but important shift: metrics evolve. Instead of “average handling time,” leaders start caring about “first-time resolution,” “handover accuracy,” and “customer sentiment recovery.” The cultural transformation sometimes takes longer than the technical one.
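Those metrics are straightforward to compute once calls are logged with the right fields. The `CallRecord` fields and the 0–1 sentiment scale below are assumptions for illustration, and “sentiment recovery” is read here as end-of-call minus start-of-call sentiment, which is one reasonable definition rather than a standard one.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CallRecord:
    resolved_first_contact: bool   # no repeat call needed for the same issue
    escalated: bool                # handed to a human at some point
    routed_correctly: bool         # escalation reached the right queue (ignored if not escalated)
    start_sentiment: float         # assumed 0..1 scale, lower = more negative
    end_sentiment: float


def service_metrics(calls: List[CallRecord]) -> dict:
    if not calls:
        raise ValueError("need at least one call")
    n = len(calls)
    escalated = [c for c in calls if c.escalated]
    # Handover accuracy is only defined when something was actually escalated.
    handover: Optional[float] = (
        sum(c.routed_correctly for c in escalated) / len(escalated) if escalated else None
    )
    return {
        "first_time_resolution": sum(c.resolved_first_contact for c in calls) / n,
        "handover_accuracy": handover,
        "avg_sentiment_recovery": sum(c.end_sentiment - c.start_sentiment for c in calls) / n,
    }
```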

Where Azure Has an Edge—and Where It Doesn’t

Strengths:

- Tight integration with Microsoft’s enterprise ecosystem.

- A mature global cloud footprint, which matters for low-latency and compliance requirements.

- Ongoing investment in multilingual support and neural voice synthesis.

Limitations:

- Pricing models can get tricky at scale. Usage-based charges (speech-to-text is metered by audio time, neural TTS by characters synthesized) plus add-ons such as translation and analytics often surprise finance teams.

- Innovation sometimes lags behind nimbler startups, especially in rapid iteration on new languages or experimental features.

- Vendor lock-in is real: once your CRM, speech, and automation all run on Azure, migrating out is painful.
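To see how add-ons stack up, the following sketch breaks a monthly bill into line items. Speech-to-text and translation are modeled per audio hour and neural TTS per million characters, but every rate in `RATES` is a made-up placeholder, not an Azure price, and the call volumes are hypothetical.

```python
# Placeholder unit prices for illustration only. These are NOT Azure list
# prices; substitute the current rates for your region and tier.
RATES = {
    "stt_per_audio_hour": 1.00,
    "tts_per_million_chars": 15.00,
    "translation_per_audio_hour": 2.50,
}


def monthly_speech_cost(calls: int, avg_minutes: float, tts_chars_per_call: int,
                        translated_share: float, rates: dict = RATES) -> dict:
    """Break a month's speech bill into its usage-based line items."""
    audio_hours = calls * avg_minutes / 60
    stt = audio_hours * rates["stt_per_audio_hour"]
    tts = calls * tts_chars_per_call / 1_000_000 * rates["tts_per_million_chars"]
    translation = audio_hours * translated_share * rates["translation_per_audio_hour"]
    return {"stt": stt, "tts": tts, "translation": translation,
            "total": stt + tts + translation}


# Hypothetical month: 300k calls averaging 4 minutes, 1,500 characters of
# synthesized speech per call, and 10% of calls needing translation.
bill = monthly_speech_cost(300_000, 4, 1_500, 0.10)
```

Even with invented rates, TTS and translation together come to more than a third of the total in this example, which is the kind of line item that catches finance teams off guard.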

Opinions That Should Not Be Missed

Some leaders still treat voice agents as an “IVR replacement project.” That mindset undersells the potential. What Azure Cognitive Speech Services really enable is an orchestrated, multichannel service, with voice as one channel in a unified fabric. Customers might start by speaking, switch to an SMS confirmation, and later get an email follow-up, all tied to the same case. That’s when the investment pays dividends.
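The “same case” idea is mostly a data-modeling decision: every interaction, whatever the channel, hangs off one case record. Here is a minimal sketch with hypothetical `Case` and `Interaction` types; Dynamics 365 has its own case entity, and nothing below reflects its schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class Interaction:
    channel: str        # e.g. "voice", "sms", "email"
    summary: str
    at: datetime


@dataclass
class Case:
    case_id: str
    customer_id: str
    interactions: List[Interaction] = field(default_factory=list)

    def log(self, channel: str, summary: str) -> None:
        """Attach an interaction from any channel to this single case."""
        self.interactions.append(Interaction(channel, summary, datetime.now(timezone.utc)))

    def channels_used(self) -> List[str]:
        """Channels touched so far, in first-use order."""
        return list(dict.fromkeys(i.channel for i in self.interactions))
```

A voice call that ends with an SMS confirmation and an emailed label produces three interactions on one case, so whoever picks it up next sees the full history regardless of channel.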

Another opinion, slightly unpopular: too many enterprises chase “human-like” voices when customers mostly want efficient and accurate service. Yes, tone matters. But a voice that solves a problem in 60 seconds will win over a silky-smooth but unhelpful one.

What Peers Should Watch For

If you’re leading a customer service transformation, a few pragmatic checks go a long way:

- Pilot on narrow, repeatable tasks. Returns, password resets, and appointment confirmations. Don’t start with fraud disputes or medical triage.

- Track escalation metrics, not just containment. A high containment rate that frustrates customers is worse than a lower rate with smooth handovers.

- Involve compliance early. Especially in healthcare, finance, or government services. Retrofitting security and redaction is painful.

- Expect to invest in voice design. Script variations, sentiment handling, and response pacing aren’t minor polish—they’re the difference between “robot” and “assistant.”
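A quick way to see why containment alone misleads: an abandoned call never reaches a human, so a naive containment rate counts it as a success. The sketch below, using hypothetical outcome labels, reports both a raw and a stricter “effective” containment rate alongside the escalation rate.

```python
from collections import Counter
from typing import Dict, List

OUTCOMES = {"resolved", "abandoned", "escalated"}


def containment_metrics(outcomes: List[str]) -> Dict[str, float]:
    """Compare raw containment with a stricter version that ignores abandoned calls.

    Each outcome is one of the hypothetical labels in OUTCOMES.
    """
    unknown = set(outcomes) - OUTCOMES
    if unknown or not outcomes:
        raise ValueError(f"need a non-empty list of known outcomes, got {sorted(unknown)}")
    counts = Counter(outcomes)
    total = len(outcomes)
    return {
        # Raw: anything that never reached a human counts as "contained".
        "raw_containment": (counts["resolved"] + counts["abandoned"]) / total,
        # Effective: only calls the voice agent actually resolved.
        "effective_containment": counts["resolved"] / total,
        "escalation_rate": counts["escalated"] / total,
    }
```

With six resolved, two abandoned, and two escalated calls, raw containment looks like 80% while the agent actually resolved 60%.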

Closing Reflection

Customer-service voice agents aren’t replacing human empathy anytime soon. But they are reshaping the operational fabric of call centers, carving out space where human staff focus on judgment and care while automation handles routine logistics. Azure Cognitive Speech Services provide one of the most enterprise-ready toolkits for this shift.

The organizations that succeed aren’t the ones who blindly automate every call. They’re the ones who treat speech AI as a strategic layer—integrated, tuned, and constantly evaluated against real human behavior.

And if IVR systems and early chatbots have taught us anything, it’s this: customers have long memories of bad experiences. Deploying voice AI isn’t about “being first.” It’s about being good enough that customers forget they were speaking with a machine in the first place.