Key Takeaways

- Hybrid agentic systems complement human analysts, offering richer investigation threads rather than replacing teams.

- Success depends on integrating structured and unstructured data, including transaction streams, customer interactions, and historical patterns.

- Agents can rethink conclusions and adapt mid-investigation, but this introduces reasoning drift, noise, and auditability challenges.

- Simple pattern-based fraud rarely benefits; agentic enrichment shines in ambiguous cases like social engineering, account takeovers, and synthetic identities.

- Regulators require traceable logic and auditable outcomes, necessitating explainability features, prompt co-design, and human oversight at every stage.

Fraud detection in most large companies is rarely as clean or efficient as it looks in theory. Some unusual cases go unnoticed, while false alarms frustrate genuine customers. Even worse, alerts often come in too late—hours after a fraud attempt has already caused damage. Anyone who’s worked in fraud operations knows that “accurate, real-time risk detection” is hard to achieve without making big sacrifices in cost or system complexity.

Streaming data changed the problem. Once transactions could be analyzed in near real time, fraud detection started to depend not just on spotting patterns but on how fast systems could respond. But as companies built these real-time data pipelines, the number and variety of fraud cases grew dramatically, and so did the creativity of fraudsters. Each new machine learning model was soon met with spoofed or fabricated data designed to trick it.

That’s where agentic architectures come in—systems that combine live data streams with language-based reasoning. In these setups, the lines between fixed rules, machine learning models, and human analysis start to blur. If you’re exploring this space expecting an easy connection between real-time data and large language models (LLMs), you’ll quickly find it’s more complex—but also far more flexible and responsive.


Operational Complexity: The Arrival of LLM-Augmented Agents

The move to connect LLMs with real-time event analysis didn’t come from theory—it came from frustration with slow manual reviews and rigid, model-heavy systems. Take card-not-present (CNP) fraud in global payments. Old setups mostly used gradient-boosted trees and regex-based validation flows—functional, but easy targets for skilled attackers.

A fintech team in Europe started testing agentic models that watched transaction streams, metadata, customer chat logs, and even support tickets. When an anomaly score crossed a set limit, an LLM-driven agent stepped in to review the sequence, combine related clues (“Does this match past behavior?”), and build a clear investigation thread for fraud analysts—no more jumping between disconnected systems or raw logs.
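The escalation step can be sketched as a simple gate. This is a minimal illustration, not the fintech team's implementation: the `ESCALATION_THRESHOLD` value, the `Event` fields, and the `route_event` function are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical cutoff; a real deployment would tune this against review capacity.
ESCALATION_THRESHOLD = 0.8


@dataclass
class Event:
    transaction_id: str
    anomaly_score: float  # assumed to come from an upstream model, in [0, 1]


def route_event(event: Event, threshold: float = ESCALATION_THRESHOLD) -> str:
    """Send the event to the agent only when its score crosses the threshold."""
    if event.anomaly_score >= threshold:
        return "agent_review"
    return "standard_flow"
```

Keeping the gate this cheap matters: most events should never reach the LLM at all.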

It wasn’t a magic fix. Early versions produced strange contradictions: sometimes the LLM flagged harmless actions (“late-night purchases abroad”) that the statistical models had let pass, and sometimes it let pass activity that the models had flagged. Context proved critical, and the agentic method, while more dynamic, forced teams to handle that uncertainty as it happened.

How Agentic Analysis Actually Works

Forget the idea that LLMs only “read text.” In agentic fraud detection, they act more like digital investigators—reacting to live triggers and probing multiple, diverse data sources to understand what’s really happening. Unlike static models or rigid rule engines, these agents can interpret sequences of events, connect the dots across different systems, and adjust their approach as new information arrives.

Streaming triggers fire whenever a risk event occurs—unusual transactions, logins from unfamiliar IPs, or device fingerprint mismatches. Each trigger sends data to the agent, often in the form of a JSON payload or, in more complex setups, a concatenated text sequence created by upstream sensors. This is just the starting point. The agent doesn’t simply score or classify events; it synthesizes them into a situational narrative, building context that a human analyst could otherwise only reconstruct by jumping between fragmented logs and dashboards.
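As a rough sketch of that first step, the snippet below turns raw JSON trigger payloads into a time-ordered text summary that could be handed to an agent as context. The field names (`timestamp`, `type`, `detail`) and the `build_narrative` function are assumptions for illustration; real payload schemas will differ.

```python
import json


def build_narrative(payloads: list[str]) -> str:
    """Turn raw JSON trigger payloads into a time-ordered plain-text summary."""
    events = [json.loads(p) for p in payloads]
    # Assumes ISO 8601 timestamps in a single timezone, which sort correctly as strings.
    events.sort(key=lambda e: e["timestamp"])
    lines = []
    for e in events:
        detail = e.get("detail", "no detail")
        lines.append(f'{e["timestamp"]}: {e["type"]} ({detail})')
    return "\n".join(lines)
```

For example, a login payload and a later transaction payload arriving out of order would still be presented to the agent in chronological order.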

When needed, the agent can pull in additional external data: historical transaction patterns, recent support interactions, or even relevant threat intelligence from public sources. This allows the agent to explore hypotheses, confirm anomalies, and highlight inconsistencies that rigid rule-based systems or standard machine learning classifiers would likely miss.

The key difference is adaptability. Rule engines blindly follow predefined logic, no matter how adversaries evolve their tactics. ML models, meanwhile, can fail when patterns shift or attackers deliberately manipulate inputs. An agentic system, by contrast, can reconsider conclusions mid-investigation, drop unproductive leads, or flag gaps in the evidence. This flexibility is powerful, though not without controversy. Some analysts worry that such malleability might introduce confusion or inconsistency. Yet in complex, high-volume fraud environments, the ability to reason dynamically—adjusting decisions in real time as new signals arrive—is increasingly essential for staying ahead of sophisticated adversaries.
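One way to picture "reconsidering conclusions mid-investigation" is a small state object that tracks competing hypotheses and revises its leading one as signals arrive. This is a toy sketch with hypothetical additive scoring; the `Investigation` class, its weights, and the `drop_below` cutoff are illustrative assumptions, not how any particular agent framework works.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Investigation:
    # Hypothesis name -> running support score (hypothetical additive scoring).
    support: dict = field(default_factory=dict)
    drop_below: float = -2.0  # assumed cutoff for abandoning a lead

    def add_signal(self, hypothesis: str, weight: float) -> None:
        """Positive weight supports the hypothesis; negative weight contradicts it."""
        self.support[hypothesis] = self.support.get(hypothesis, 0.0) + weight
        if self.support[hypothesis] < self.drop_below:
            del self.support[hypothesis]  # drop an unproductive lead

    def leading(self) -> Optional[str]:
        """Current best-supported hypothesis, or None if no leads remain."""
        if not self.support:
            return None
        return max(self.support, key=self.support.get)
```

Usage might look like this: an early signal favors account takeover, later evidence shifts the lead to legitimate travel, and strong contradicting evidence removes the takeover hypothesis altogether.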

Imperfections and Shortcomings

Agentic fraud systems aren’t perfect. Language models often chase unusual edge cases while missing obvious risks. In one notable example, an e-commerce merchant set up a pipeline that sent every “midnight refund request” to an LLM for analysis. The LLM generated multi-step explanations referencing support ticket details (“Customer seems anxious,” “Extra punctuation in message”), but completely ignored the merchant’s policy flagging midnight refunds for certain SKUs as high risk. This wasn’t a machine error—it was a mismatch in context. The streaming pipeline was solid, the LLM capable, but without close alignment between business rules and agentic automation, decisions became inconsistent.
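One possible guard against this kind of mismatch is to evaluate business rules deterministically and treat them as a floor on the final risk level, so the agent can raise risk but never argue its way below policy. The sketch below assumes a hypothetical SKU list, assumes "midnight" means 00:00 to 04:59 local time, and uses invented function names; the merchant's actual policy is not described in that detail.

```python
from datetime import datetime

# Hypothetical policy data: SKUs whose overnight refunds count as high risk.
HIGH_RISK_REFUND_SKUS = {"SKU-1001", "SKU-2002"}


def policy_flags(refund: dict) -> list:
    """Evaluate deterministic business rules before any LLM reasoning happens."""
    flags = []
    hour = datetime.fromisoformat(refund["requested_at"]).hour
    # Assumption: "midnight" covers 00:00 to 04:59 local time.
    if hour < 5 and refund["sku"] in HIGH_RISK_REFUND_SKUS:
        flags.append("policy:midnight_refund_high_risk_sku")
    return flags


def final_risk(agent_risk: str, flags: list) -> str:
    """Policy flags set a floor: any flag forces 'high' regardless of the agent's view."""
    return "high" if flags else agent_risk
```

The same flags can also be passed into the agent's context, so its explanation references the policy instead of ignoring it.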

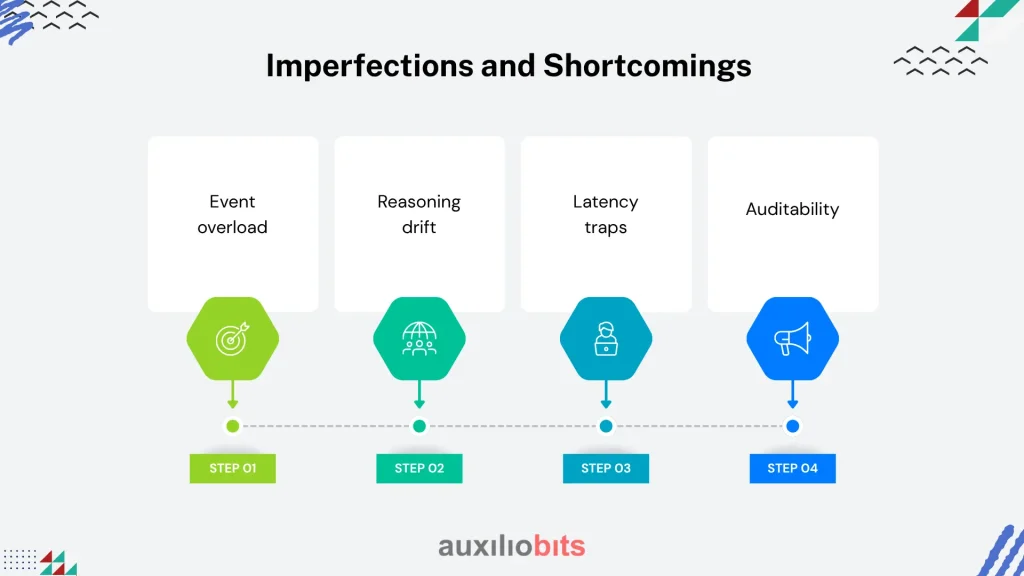

Other challenges include:

- Event overload: Too many triggers can make narratives noisy. Filtering becomes as critical as detection itself.

- Reasoning drift: Over long investigations, LLMs may link unrelated events (“Is this Russian IP tied to the earlier Irish login?”), producing speculative connections that frustrate analysts.

- Latency traps: Real-time analysis can lag. Decisions meant to occur in milliseconds may stall while waiting for LLM responses.

- Auditability: LLMs often produce multi-step rationales. Tracing the exact logic for auditors can be extremely difficult.
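Of these challenges, the latency trap is the easiest to sketch in code. A common mitigation is to give the agent a time budget and fall back to the existing ML score when it is exceeded. The example below uses Python's `asyncio.wait_for`; the `slow_agent_review` stand-in, the `ml_score` field, and the 0.8 fallback cutoff are all illustrative assumptions.

```python
import asyncio


async def slow_agent_review(event: dict) -> str:
    """Stand-in for an LLM call; the sleep simulates a slow response."""
    await asyncio.sleep(0.5)
    return "agent:review_complete"


async def decide(event: dict, budget_seconds: float) -> str:
    """Use the agent's answer if it arrives in time, else fall back to the ML score."""
    try:
        return await asyncio.wait_for(slow_agent_review(event), timeout=budget_seconds)
    except asyncio.TimeoutError:
        # Fallback cutoff of 0.8 is an illustrative assumption.
        return "fallback:block" if event["ml_score"] >= 0.8 else "fallback:allow"
```

With this shape, the real-time decision never waits longer than the budget, and the agent's fuller analysis can still be attached to the case afterwards.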

Bridging Humans and Machines

Let’s not overrate the agents. Strong fraud teams treat agentic output as one input—not the final word. In the European fintech example, overnight surges in small-value cross-border transactions triggered multiple alerts. Streaming data caught the spikes; the LLM built context from user history and support logs; and a human analyst, usually after a cup of coffee, made the final call.

A few observations from real deployment:

- Fatigue management: LLM-driven agents can prioritize cases automatically, helping reduce analyst burnout.

- Feedback: Analysts often correct the chat-style explanations from agents. This feedback guides retraining and fine-tunes prompts.

- Divergent thinking: Running agentic narratives alongside ML risk scores gives a “second opinion” for tricky cases. Sometimes the LLM is off; other times, it highlights subtle patterns missed by traditional models.
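The "second opinion" idea can be reduced to a small disagreement check: surface a case to an analyst when the ML score and the agent's verdict point in opposite directions. The verdict labels and the `high` and `low` cutoffs below are hypothetical values chosen for illustration.

```python
def needs_second_look(ml_score: float, agent_verdict: str,
                      high: float = 0.7, low: float = 0.3) -> bool:
    """Flag cases where the ML score and the agent's verdict disagree."""
    if agent_verdict == "suspicious" and ml_score <= low:
        return True  # agent sees something the model scored as safe
    if agent_verdict == "benign" and ml_score >= high:
        return True  # model is alarmed but the agent found an innocent explanation
    return False
```

Disagreements in either direction are worth an analyst's time, since the article's point is that either side can be the one that is off.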

The main advantage is interaction—a fraud defense system works best when human investigators can actively engage with digital agents, asking questions, challenging reasoning, and refining outcomes. This dialogue makes the overall process more flexible and responsive to evolving threats.

Integration Is Messy, But Necessary

Connecting real-time streams to LLM-powered agents isn’t just about network speed; it’s about linking structured and unstructured information. Every pipeline has bottlenecks: delays in serialization, mismatched schemas, or missing data errors. The best teams build custom middle layers—a mix of event brokers, feature stores, and real-time scoring services.

The challenge is context. LLMs need it, and fraud systems generate it in countless forms. That means integrating not only transaction data but also customer service text, call logs, and voice-to-text transcripts. Agentic setups rarely work out of the box.

Engineers often spend more time fixing data formats than tuning models. Real-time enrichment can stall when the LLM asks for info (“Which device was last used?”) that isn’t available. Some streaming events are fleeting—missing one can permanently weaken an investigation.
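One way to keep enrichment from stalling is to answer every data request from the agent immediately, returning an explicit "unavailable" marker when the field is missing so the agent can note the gap and move on. This is a minimal sketch; the `answer_agent_request` function and the response shape are assumptions, not part of any specific agent framework.

```python
from typing import Any


def answer_agent_request(field: str, context: dict) -> dict:
    """Answer a data request from the agent without blocking on missing fields."""
    value: Any = context.get(field)
    if value is not None:
        return {"status": "ok", "field": field, "value": value}
    # Explicit marker so the gap shows up in the investigation instead of a stall.
    return {"status": "unavailable", "field": field}
```

For instance, a request for `last_device` against a context where that value was never captured returns the marker instead of hanging the investigation.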

Caution is needed. Not every scenario justifies this complexity. For simple, low-volume pipelines, adding LLM-driven agents can be overkill and may create more overhead than benefit.

What Works Well—And Why

Agentic systems shine when detail matters more than sheer volume. Simple, pattern-based frauds, like duplicate transactions or signature attacks, gain little from them. Agentic systems are most valuable in ambiguous cases: layered social engineering, account takeovers, or synthetic identity schemes. The hybrid method supports investigation rather than just scoring, letting agents weigh evidence for or against risk in flexible, context-rich ways.

Take cross-border payment fraud. Traditional ML often misses local nuances, but a streaming pipeline paired with an agent can factor linguistic hints, time zone differences, and social network signals into its analysis. The combination of real-time events and language-driven reasoning produces deeper insights, provided teams can manage the extra complexity and noise.

What Sometimes Fails—And When

Despite the promise, integrating LLM reasoning isn’t a panacea. In particular:

- Data quality bottlenecks: Corrupted payloads, missing logs, and duplicated streams all throw agents off their game.

- Contextual overfitting: LLM-powered agents sometimes learn quirks from analyst feedback that reflect individual (not systemic) risk postures.

- Fragmented governance: Who takes responsibility for agentic decisions? If the agent makes a call that leads to wrongful account suspension, the fallout isn’t just technical.
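The data quality item is the most mechanical of the three, and a basic defense can be sketched in a few lines: skip payloads that fail to parse and drop events whose ID has already been seen. The `event_id` field and the `clean_stream` function are assumptions for illustration.

```python
import json


def clean_stream(raw_messages):
    """Yield parsed events, skipping corrupted payloads and duplicate event IDs."""
    seen = set()  # unbounded here; a long-running pipeline would need expiry
    for raw in raw_messages:
        try:
            event = json.loads(raw)
        except json.JSONDecodeError:
            continue  # corrupted payload; a real pipeline might route it to a dead-letter queue
        if not isinstance(event, dict):
            continue
        event_id = event.get("event_id")
        if event_id is None or event_id in seen:
            continue  # missing ID or duplicate delivery
        seen.add(event_id)
        yield event
```

This does nothing for missing logs, which have to be detected upstream, but it keeps the two cheapest failure modes away from the agent.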

One prominent bank quietly rolled back its agentic fraud pilot after a rash of wrongful risk flags on VIP accounts. The LLM “learned” that high net-worth individuals with multiple addresses matched synthetic identity profiles; not only was this a misfire, it nearly cost the bank several lucrative business relationships.

Industry Nuance: Regulatory and Compliance Hurdles

Agentic systems fundamentally challenge legacy audit paradigms. Regulators, especially in the EU and APAC, now ask for transparent logic chains that explain not only risk scores but also the rationale behind investigatory threads. LLMs, for all their power, don’t naturally produce neat, stepwise explanations; transaction narratives can drift, and key steps may get lost in summary.

As a result:

- Enterprises must invest in traceability features, surfacing intermediate agent actions and data requests.

- Compliance teams increasingly co-design prompt logic to guarantee auditable outcomes.

- Some vendors now offer “explainability wrappers,” translating agentic reasoning into risk-appropriate summaries suitable for audit review.
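A traceability feature of the kind described above can start as an append-only record of each intermediate agent action. The sketch below is one hypothetical shape for such a trail; the `AuditTrail` class, its field names, and the action labels are illustrative and do not correspond to any vendor's product.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class AuditTrail:
    case_id: str
    steps: list = field(default_factory=list)

    def record(self, action: str, detail: dict) -> None:
        """Append one intermediate agent action (data request, inference, verdict)."""
        self.steps.append({"seq": len(self.steps) + 1, "action": action, "detail": detail})

    def export(self) -> str:
        """Serialize the ordered trail as JSON for audit review."""
        return json.dumps(asdict(self), sort_keys=True)
```

Recording the data requests alongside the verdict is what lets an auditor see which evidence the agent actually consulted, not just what it concluded.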

Yet this is still emerging. The best fraud teams work with compliance—and sometimes despite it—to ensure agentic decisions hold up under scrutiny.

What should fraud teams keep in mind about agentic intelligence?

- Monitor event volume dynamically, not with fixed thresholds. Streaming flows are never uniform.

- Invest in human-agent interaction features; feedback loops matter more than sheer automation.

- Track reasoning drift longitudinally. The best agents aren’t the most verbose, but those that return to first principles when context changes.

- Build explainability into every stage—from streaming triggers to agentic narratives, not as an afterthought.

- Don’t expect universal accuracy; some fraud scenarios stubbornly resist any real-time narrative.

- Reserve agentic enrichment for high-value, high-complexity cases. Simple trigger-based frauds don’t justify the extra latency.
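The first item in this list, dynamic volume monitoring, can be illustrated with a rolling baseline: instead of a fixed limit, compare each interval's event count against the mean and standard deviation of recent intervals. The `VolumeMonitor` class, the window size, and the multiplier `k` are assumptions for the sketch; production systems often use more robust statistics.

```python
from collections import deque
from statistics import mean, pstdev


class VolumeMonitor:
    """Flag a per-interval event count that is unusual relative to recent history."""

    def __init__(self, window: int = 60, k: float = 3.0, min_history: int = 5):
        self.history = deque(maxlen=window)  # most recent interval counts
        self.k = k
        self.min_history = min_history

    def observe(self, count: int) -> bool:
        """Return True if count exceeds mean + k * std of the recent window."""
        is_spike = False
        if len(self.history) >= self.min_history:
            threshold = mean(self.history) + self.k * pstdev(self.history)
            is_spike = count > threshold
        self.history.append(count)
        return is_spike
```

Because the baseline moves with the stream, a count that is normal during peak hours does not trip the alarm, while the same count at a quiet hour might.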

The Last Word

The truth? Hybrid agentic systems will not replace the fraud analyst, at least not soon. What they do offer is a less monotonous, far richer investigative workbench. Streaming data provides speed; agentic reasoning injects depth. Neither survives contact with real incidents unscathed.

A decade into streaming analytics, and just a few years past the LLM surge, it’s clear that the only unifying principle here is constant adaptation. Fraud morphs, pipelines fracture, agents meander—and practitioners improvise. Perhaps that’s the real allure: in this domain, uncertainty is the norm, and only those who embrace a bit of chaos keep up.