Key Takeaways

- LangGraph requires a memory-aware, stateful deployment architecture, unlike traditional stateless APIs. Azure services must be thoughtfully composed to support agent orchestration and persistence.

- Azure Container Apps with Dapr and Cosmos DB is a strong baseline stack for mid-to-large LangGraph deployments, balancing event-driven execution, scalability, and shared memory coordination.

- Agent memory management is not trivial—without scoped context and memory summarization strategies, performance and correctness degrade quickly, especially under concurrent execution.

- Monitoring must go beyond infrastructure metrics. You need to track agent reasoning paths, hallucination rates, and semantic drift using OpenTelemetry and custom dashboards.

- The biggest mistakes are architectural, not technical—treating LangGraph as stateless, skipping secrets management, or building monolithic agents will cripple scalability and observability.

LangGraph has quietly emerged as one of the more compelling frameworks for orchestrating AI agents in enterprise settings. Where many agent libraries offer vague abstractions or overly academic tooling, LangGraph is practical. Workflows are explicit graphs, and execution can be made deterministic when you need it. Asynchronous workflows are still supported. Those traits map well to the messiness of real-world enterprise environments. But none of that matters unless it can be deployed and scaled reliably. That’s where Azure comes in.

Now, deploying LangGraph agents is not just a matter of “standing up some containers.” We’re not talking about yet another bot on a VM. We’re dealing with long-running, memory-aware, multi-agent orchestration systems that need to respond to triggers across enterprise data pipelines, third-party APIs, and internal systems like Dynamics 365, ServiceNow, or even homegrown legacy apps.

So how do you architect a robust, secure, and scalable environment on Azure to host these agents? The answer lies in combining LangGraph’s architectural constraints and opportunities with Azure-native services: Azure Functions (including Durable Functions), Azure Kubernetes Service, Event Grid, and Azure Container Apps.


What Makes LangGraph Different and Enterprise-Ready?

LangGraph isn’t just another library stitching together OpenAI functions with a chat loop. It’s about stateful, reactive, and coordinated workflows using multiple agents. Each agent can be:

- An LLM-backed cognitive module

- A rule-based procedural executor

- An API-wrapper agent

- A data-trigger listener or responder

This flexibility brings power but also complexity—especially when you consider:

- Persistent state management across agents

- Dependency sequencing (some agents can’t run until others finish)

- External triggers (human-in-the-loop approvals, API responses)

- Edge cases: retries, error handling, versioning of agent logic

So any deployment platform must support not just compute, but coordination.
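To make dependency sequencing concrete, here is a minimal sketch that uses Python’s standard-library `graphlib` to group agents into execution waves. Agents in the same wave can run in parallel, and each wave waits for the previous one to finish. The agent names and the dependency map are hypothetical; `compliance_checker` in particular is invented for illustration. LangGraph expresses this kind of ordering through the edges of its graph, so treat this as a way to reason about a flow, not as a replacement for it.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each agent lists the agents it must wait for.
DEPENDENCIES: dict[str, set[str]] = {
    "document_extractor": set(),
    "risk_assessor": {"document_extractor"},
    "compliance_checker": {"document_extractor"},
    "reviewer_notifier": {"risk_assessor", "compliance_checker"},
}


def execution_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group agents into waves that can run in parallel; each wave waits for the previous one."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError (a ValueError) on circular dependencies
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only to make the output stable
        waves.append(ready)
        sorter.done(*ready)  # in a real runner, call done() as each agent finishes
    return waves
```

For the map above, `execution_waves(DEPENDENCIES)` returns `[['document_extractor'], ['compliance_checker', 'risk_assessor'], ['reviewer_notifier']]`. A cycle raises `graphlib.CycleError` at `prepare()`. That makes this a cheap sanity check to run in CI before a new agent topology ships.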

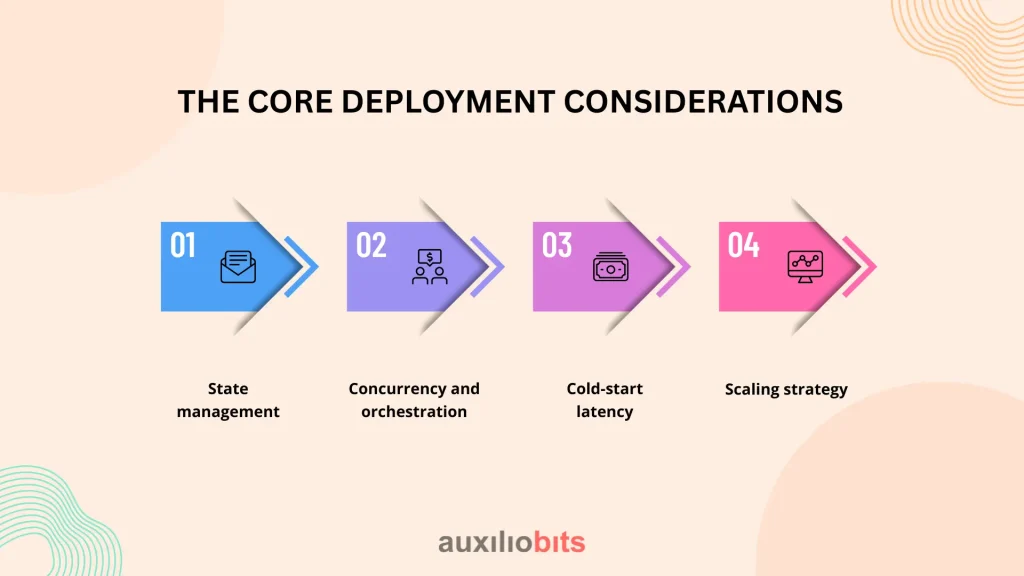

The Core Deployment Considerations

Before drawing any diagrams, these are the key decisions to lock in:

- State management: Will you store agent memory in Azure Cosmos DB, Redis, or a custom Postgres store?

- Concurrency and orchestration: Can the system support parallel agent execution without breaking deterministic flows?

- Cold-start latency: Are you okay with occasional function cold starts, or do you need warmed containers?

- Scaling strategy: Horizontal scaling is fine—but what about memory consistency and shared context?

You’d be surprised how many POCs break simply because they treated LangGraph as if it were a stateless REST API backend. It’s not.

Key Architectural Components on Azure

Here’s how you compose a scalable and secure LangGraph hosting architecture:

a. Azure Kubernetes Service (AKS)

- Best suited for large-scale, persistent agent graphs.

- Offers fine-grained control over pods, memory, and inter-agent communication.

- Works well with Dapr sidecars for stateful agent-to-agent coordination.

b. Azure Container Apps

- Ideal for mid-sized, event-driven agent orchestration.

- Native support for scale-to-zero, which saves costs during idle phases.

- Integrated with Dapr, which simplifies binding to state stores, pub-sub, and secrets.
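As an illustration of what binding to a state store through Dapr looks like from the agent’s side, the sketch below builds a request against the Dapr sidecar’s state API (`POST /v1.0/state/<store>`). The store name used in the usage note, `agent-memory`, is a hypothetical Dapr component, for example one backed by Cosmos DB. The helper function is our own. If you pass the ETag you read along with `concurrency: first-write`, Dapr rejects the write when another agent has changed the key in the meantime.

```python
import json
import os
import urllib.request
from typing import Any, Optional

# DAPR_HTTP_PORT is used if set; 3500 is the sidecar's default HTTP port.
DAPR_PORT = os.environ.get("DAPR_HTTP_PORT", "3500")


def build_save_state_request(
    store: str, key: str, value: Any, etag: Optional[str] = None
) -> urllib.request.Request:
    """Build (but do not send) a save request for the Dapr state API."""
    item: dict[str, Any] = {"key": key, "value": value}
    if etag is not None:
        # Optimistic concurrency: fail instead of overwriting if the stored ETag changed.
        item["etag"] = etag
        item["options"] = {"concurrency": "first-write", "consistency": "strong"}
    return urllib.request.Request(
        url=f"http://localhost:{DAPR_PORT}/v1.0/state/{store}",
        data=json.dumps([item]).encode("utf-8"),  # the API takes a JSON array of items
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Inside a container with Dapr enabled, `urllib.request.urlopen(build_save_state_request("agent-memory", "case-42", state, etag))` sends the request. If the sidecar rejects the write, re-read the key, merge the changes, and retry rather than overwriting blindly.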

c. Azure Durable Functions

- Good for lightweight, event-chained LangGraph flows.

- Can model agent lifecycles using function orchestrators.

- Drawbacks: Cold starts, limited LLM hosting, and poor debugging at scale.

d. Azure Cosmos DB (NoSQL or PostgreSQL APIs)

- Acts as the brain behind the graph.

- Stores agent memory, token histories, and state transitions.

- Choose PostgreSQL if relational queries are needed across agents.
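Here is a sketch of how agent memory might be laid out as items in Azure Cosmos DB for NoSQL. It assumes a container partitioned on `/threadId`, so every read and write for one workflow run stays in a single logical partition. Apart from `id` and `ttl`, the field names are our own choices. The optional `ttl` (in seconds) only takes effect if time-to-live is enabled on the container. This is one way to get automatic cleanup of short-lived workflow state.

```python
from typing import Any, Optional


def memory_item(
    thread_id: str,
    agent: str,
    step: int,
    state: dict[str, Any],
    ttl_seconds: Optional[int] = None,
) -> dict[str, Any]:
    """Build one agent-memory document for a Cosmos DB for NoSQL container.

    Assumes the container's partition key path is /threadId.
    """
    item: dict[str, Any] = {
        # `id` only has to be unique within a logical partition (one thread here).
        "id": f"{agent}-{step:06d}",
        "threadId": thread_id,
        "agent": agent,
        "step": step,
        "state": state,
    }
    if ttl_seconds is not None:
        # Per-item TTL in seconds; ignored unless TTL is enabled on the container.
        item["ttl"] = ttl_seconds
    return item
```

With the `azure-cosmos` SDK, the resulting dict can be passed to `container.upsert_item(...)`.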

e. Azure Event Grid

- Enables LangGraph agents to respond to real-world triggers: document uploads, CRM events, etc.

- Avoids polling loops by pushing events directly to LangGraph entry points.
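The push model can be sketched as a small webhook handler. It assumes events arrive in the Event Grid schema and handles two cases:

- It answers the one-time subscription validation handshake.
- It passes each `Microsoft.Storage.BlobCreated` event to a hypothetical `start_run` callback. In a real deployment, that callback would start a LangGraph run at the Document Extractor entry point.

The HTTP framework wiring is deliberately left out.

```python
import json
from typing import Callable, Optional

VALIDATION_EVENT = "Microsoft.EventGrid.SubscriptionValidationEvent"
BLOB_CREATED = "Microsoft.Storage.BlobCreated"


def handle_event_grid_batch(
    body: str, start_run: Callable[[str, dict], None]
) -> Optional[dict]:
    """Handle one Event Grid webhook delivery (Event Grid schema).

    Returns a response body for the validation handshake, or None when
    events were dispatched (reply with HTTP 200 and no body).
    """
    events = json.loads(body)  # Event Grid delivers events as a JSON array
    for event in events:
        event_type = event.get("eventType")
        if event_type == VALIDATION_EVENT:
            # Subscription handshake: echo the code back so Event Grid starts delivering.
            return {"validationResponse": event["data"]["validationCode"]}
        if event_type == BLOB_CREATED:
            # Delivery is at-least-once, so pass the event id along for de-duplication.
            start_run(
                "document_extractor",
                {"blob_url": event["data"]["url"], "event_id": event["id"]},
            )
        # Other event types are ignored in this sketch.
    return None
```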

Multi-Agent Coordination and Memory Management

One of the hidden challenges is memory. LangGraph enables agents to remember context—but how is that memory shared, persisted, or scoped?

In practice:

- Short-term memory is stored in Redis-backed caches.

- Long-term memory resides in Cosmos DB documents per agent.

- Shared memory (cross-agent) requires careful structuring: avoid race conditions, and use versioned checkpoints.
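The scoping and versioning rules above can be illustrated with a minimal, in-process sketch. Keys are namespaced by scope (per-agent versus shared). Every write must name the version it was based on, so a stale writer gets a conflict instead of silently overwriting another agent’s update. The class and key format are hypothetical stand-ins. Against Redis or Cosmos DB, you would get the same compare-and-set behavior from their own optimistic-concurrency features: WATCH/MULTI in Redis, ETags in Cosmos DB.

```python
import threading
from typing import Any


class VersionConflict(Exception):
    """Raised when a writer's expected version is out of date."""


class ScopedMemory:
    """In-process stand-in for a shared store with scoped keys and versioned writes."""

    def __init__(self) -> None:
        self._data: dict[str, tuple[int, Any]] = {}
        self._lock = threading.Lock()

    @staticmethod
    def key(scope: str, owner: str, name: str) -> str:
        # e.g. "agent:risk_assessor:notes" (private) vs "shared:case-42:decision"
        return f"{scope}:{owner}:{name}"

    def read(self, key: str) -> tuple[int, Any]:
        """Return (version, value); version 0 means the key was never written."""
        with self._lock:
            return self._data.get(key, (0, None))

    def write(self, key: str, value: Any, expected_version: int) -> int:
        """Compare-and-set: succeed only if nobody wrote since `expected_version`."""
        with self._lock:
            current, _ = self._data.get(key, (0, None))
            if current != expected_version:
                raise VersionConflict(
                    f"{key}: expected v{expected_version}, found v{current}"
                )
            self._data[key] = (current + 1, value)
            return current + 1
```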

And don’t underestimate the problem of memory bloat. If you serialize every agent’s full context after each hop, your I/O latency balloons. Architect for summarization or token window trimming in high-churn systems.
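Here is a sketch of token-window trimming with summarization. It keeps the most recent messages that fit a token budget and folds everything older into a single summary message. Two assumptions to flag:

- The 4-characters-per-token estimate is a rough heuristic, not a real tokenizer.
- `summarize` is a hypothetical callback that would typically be a cheap LLM call.

```python
from typing import Callable


def approx_tokens(text: str) -> int:
    """Rough token estimate (~4 characters per token); not a real tokenizer."""
    return max(1, len(text) // 4)


def trim_context(
    messages: list[dict],
    budget: int,
    summarize: Callable[[list[dict]], str],
) -> list[dict]:
    """Keep the newest messages that fit in `budget` tokens and fold the rest
    into one summary message. The summary itself is not counted against
    `budget`, so leave headroom for it."""
    kept: list[dict] = []
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = approx_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    dropped = messages[: len(messages) - len(kept)]
    if not dropped:
        return kept
    summary = {
        "role": "system",
        "content": "Summary of earlier context: " + summarize(dropped),
    }
    return [summary] + kept
```

Persisting the trimmed list rather than the full history keeps per-hop serialization roughly bounded by the budget.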

Authentication, Secrets, and Policy Enforcement

Running LangGraph in a regulated enterprise means obeying corporate IAM policies, even when agents are abstracting actions across systems.

Recommendations:

- Use Managed Identities for all compute services to access Azure Key Vault.

- Centralize secret rotation in Key Vault: use rotation policies for keys, and near-expiry events (delivered through Event Grid) to trigger rotation for secrets.

- Enforce data egress restrictions using Private Endpoints and Azure Policy.

Most LangGraph examples in the wild hardcode keys or assume implicit trust between components. That’s fine in dev. It’ll get flagged—and killed—in production security reviews.
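The following sketch illustrates the managed-identity pattern without hard-coded keys. It wraps any secret lookup in a small cache that re-fetches after a TTL, so rotated values are picked up without a redeploy. The cache class is our own. The commented lines show how it could be wired to Key Vault using the `azure-identity` and `azure-keyvault-secrets` packages. When running in Azure, `DefaultAzureCredential` resolves to the managed identity. The vault URL is a placeholder.

```python
import time
from typing import Callable


class SecretCache:
    """Resolve secrets at runtime and re-fetch them after `ttl_seconds`.

    `fetch` is any function mapping a secret name to its current value.
    """

    def __init__(
        self,
        fetch: Callable[[str], str],
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so tests can control time
        self._cache: dict[str, tuple[float, str]] = {}

    def get(self, name: str) -> str:
        now = self._clock()
        hit = self._cache.get(name)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # still fresh
        value = self._fetch(name)  # first use, or the cached value expired
        self._cache[name] = (now, value)
        return value


# Wiring to Key Vault (requires azure-identity and azure-keyvault-secrets):
# from azure.identity import DefaultAzureCredential
# from azure.keyvault.secrets import SecretClient
# client = SecretClient(
#     vault_url="https://<your-vault>.vault.azure.net",
#     credential=DefaultAzureCredential(),
# )
# secrets = SecretCache(lambda name: client.get_secret(name).value)
```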

Monitoring, Observability, and Agent Behavior Tracing

LangGraph agents don’t just fail; they get confused. You’ll need to monitor not just crashes or timeouts, but semantic drift, hallucination rates, and abnormal call chains.

Here’s a layered approach:

- Azure Monitor + Log Analytics: For infrastructure health, latency, and error spikes.

- OpenTelemetry instrumentation: To trace agent hops, LLM calls, and memory reads.

- Custom dashboards: To visualize agent decisions, token use, and fallback patterns.
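Here is a stdlib-only sketch of hop-level tracing. Each agent hop is recorded with a run ID, an agent name, a duration, and free-form attributes such as token counts or model name. A simple check flags agents that are revisited too often in one run, which is a common signature of an abnormal call chain. In production, these records would be OpenTelemetry spans (for example, via `tracer.start_as_current_span(...)` from `opentelemetry-api`) exported to Azure Monitor. The `HopTracer` class and the visit threshold are hypothetical.

```python
import time
from collections import Counter
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Iterator


@dataclass
class Hop:
    run_id: str
    agent: str
    started: float
    duration: float = 0.0
    attributes: dict[str, Any] = field(default_factory=dict)


class HopTracer:
    """Collects one record per agent hop; a stand-in for OpenTelemetry spans."""

    def __init__(self) -> None:
        self.hops: list[Hop] = []

    @contextmanager
    def hop(self, run_id: str, agent: str, **attributes: Any) -> Iterator[Hop]:
        record = Hop(run_id, agent, time.perf_counter(), attributes=dict(attributes))
        try:
            yield record  # the caller can add attributes, e.g. token usage
        finally:
            # Recorded even if the hop raises, so failed hops stay visible.
            record.duration = time.perf_counter() - record.started
            self.hops.append(record)

    def chain(self, run_id: str) -> list[str]:
        """Agent names for one run, in the order the hops started."""
        run = sorted((h for h in self.hops if h.run_id == run_id), key=lambda h: h.started)
        return [h.agent for h in run]

    def looping_agents(self, run_id: str, max_visits: int = 3) -> dict[str, int]:
        """Agents visited more than `max_visits` times in one run."""
        counts = Counter(h.agent for h in self.hops if h.run_id == run_id)
        return {agent: n for agent, n in counts.items() if n > max_visits}
```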

And yes—build a “black box recorder.” Something that logs the full sequence of prompts, decisions, and memory state. Not for debugging. For accountability.
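One way to make such a recorder trustworthy is to hash-chain its entries. Each entry is hashed together with its predecessor’s hash, so editing or removing an earlier entry breaks the chain. In the sketch below, the class name and fields are hypothetical, and records are kept in memory for simplicity. In production, entries would go to append-only storage (for example, Azure Blob Storage with an immutability policy) rather than a Python list.

```python
import hashlib
import json
import time
from typing import Any

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class BlackBoxRecorder:
    """Append-only, hash-chained log of prompts, decisions, and memory snapshots."""

    def __init__(self) -> None:
        self.records: list[dict[str, Any]] = []

    @staticmethod
    def _digest(entry: dict[str, Any]) -> str:
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, run_id: str, agent: str, prompt: str, decision: str, memory: dict) -> dict:
        entry = {
            "run_id": run_id,
            "agent": agent,
            "prompt": prompt,
            "decision": decision,
            "memory": json.loads(json.dumps(memory)),  # snapshot, not a live reference
            "ts": time.time(),
            "prev_hash": self.records[-1]["hash"] if self.records else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.records.append(entry)
        return entry

    def verify(self) -> bool:
        """False if any entry was edited or the chain was broken."""
        prev = GENESIS
        for entry in self.records:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```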

Scalability Patterns: From Prototype to Production

Scaling LangGraph deployments isn’t as easy as “throwing more CPU at it.” You’re scaling state, dependencies, memory context, and external API quotas.

Some patterns that work:

- Sharded agent pools: Assign each agent pool to a specific subgraph (a “micrograph”) so that pools don’t compete for the same state. This reduces contention.

- Event-driven activation: Use Event Grid + Durable Functions to wake agents only when relevant.

- Checkpointing: Periodically store graph state snapshots. Enables fault recovery and A/B testing of agent variants.
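For sharded agent pools, a stable mapping from a workflow key (a case ID, say) to a pool keeps each micrograph’s state in one place. This sketch uses rendezvous (highest-random-weight) hashing, which is our choice rather than something the pattern prescribes. When a pool is added, only the keys that the new pool wins are moved. A naive `hash(key) % n` would instead reshuffle most keys. Pool names are hypothetical.

```python
import hashlib


def pool_for(workflow_key: str, pools: list[str]) -> str:
    """Pick the agent pool for a workflow with rendezvous hashing.

    Each pool gets a pseudo-random score for the key; the highest score wins.
    SHA-256 is used instead of Python's hash(), which is salted per process
    and would route the same key differently after a restart.
    """
    if not pools:
        raise ValueError("at least one pool is required")

    def score(pool: str) -> int:
        digest = hashlib.sha256(f"{pool}|{workflow_key}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    return max(pools, key=score)
```

Because the existing pools’ scores don’t change when a pool is added, every key either stays where it was or moves to the new pool.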

Avoid premature multi-tenancy. You’ll end up debugging 30 concurrent agent failures in different customer contexts—without knowing which prompt triggered which issue.

Common Pitfalls and Architectural Anti-Patterns

Let’s call them out:

- Stateless LLM functions: Agents with no memory lead to brittle flows and repetition loops.

- Monolithic agent design: Trying to do too much in one agent reduces testability and auditability.

- Implicit dependencies: Agents calling services without defined contracts (e.g., calling SharePoint APIs directly).

- Overuse of open-ended prompts: Leads to hallucinations and inconsistent outputs across runs.

- Treating LangGraph as a black box: You need transparency and traceability at every hop.
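A contract can be as light as a typed interface that the agent depends on. In this sketch, all names are hypothetical. The Document Extractor only knows about a `DocumentSource` protocol. A SharePoint adapter and an in-memory test double can both satisfy it, which keeps the dependency explicit, testable, and auditable. The extraction logic is a placeholder.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str


class DocumentSource(Protocol):
    """The contract: the only way the extractor is allowed to get documents."""

    def fetch(self, doc_id: str) -> Document: ...


class DocumentExtractor:
    def __init__(self, source: DocumentSource) -> None:
        self._source = source  # injected, never constructed from a hard-coded API client

    def run(self, doc_id: str) -> dict:
        doc = self._source.fetch(doc_id)
        # Placeholder "extraction"; a real agent would call an LLM or parser here.
        return {"doc_id": doc.doc_id, "word_count": len(doc.text.split())}


class InMemorySource:
    """Test double that satisfies DocumentSource; a SharePoint adapter would too."""

    def __init__(self, docs: dict[str, str]) -> None:
        self._docs = docs

    def fetch(self, doc_id: str) -> Document:
        return Document(doc_id, self._docs[doc_id])
```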

Real-World Architecture Example

Let’s say a financial services firm wants LangGraph agents to automate underwriting approvals.

They want:

- Multiple agents (Document Extractor, Risk Assessor, Human Reviewer Notifier)

- Integration with SharePoint, Teams, Dynamics 365

- Support for human-in-the-loop handoffs

Architecture:

- Azure Container Apps hosts LangGraph graph execution logic.

- Each agent is a sidecar container communicating via Dapr.

- Azure Event Grid listens to document uploads, triggering the Document Extractor agent.

- Memory is shared via Cosmos DB with TTL-based cleanup.

- Azure API Management is used to expose webhook endpoints securely.

- All logs flow into Azure Log Analytics with KQL dashboards to track agent outcomes.

Did it work? Mostly. What broke first? Memory management: agents began overwriting each other’s context in shared memory. The fix involved adding stricter memory scopes and introducing a Gatekeeper agent to coordinate handoffs.
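The write-up doesn’t say how the Gatekeeper agent was implemented, so the following is only one possible design, with hypothetical names. It does three things:

- Tracks which agent currently owns a case’s shared memory.
- Lets ownership pass only along declared handoffs.
- Lets storage code refuse writes from any agent that isn’t the current owner.

```python
import threading
from typing import Optional

# Hypothetical handoff rules: which agent may take over from the current owner
# (None means the case has no owner yet).
ALLOWED_HANDOFFS: dict[Optional[str], set[str]] = {
    None: {"document_extractor"},
    "document_extractor": {"risk_assessor"},
    "risk_assessor": {"reviewer_notifier"},
    "reviewer_notifier": set(),
}


class Gatekeeper:
    """Tracks which agent owns each case and enforces the allowed handoffs."""

    def __init__(self, rules: dict[Optional[str], set[str]]) -> None:
        self._rules = rules
        self._owner: dict[str, Optional[str]] = {}
        self._lock = threading.Lock()  # callers may run on several worker threads

    def acquire(self, case_id: str, agent: str) -> bool:
        """Transfer ownership to `agent` if the handoff is allowed."""
        with self._lock:
            current = self._owner.get(case_id)
            if agent not in self._rules.get(current, set()):
                return False
            self._owner[case_id] = agent
            return True

    def can_write(self, case_id: str, agent: str) -> bool:
        """Storage code calls this before writing shared memory for a case."""
        with self._lock:
            return self._owner.get(case_id) == agent
```

In this design, a slow agent that is still running after its handoff can no longer write to the case. That is exactly the failure mode described above.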

Closing Thoughts

LangGraph is one of the few frameworks that feels like it was designed for enterprise-grade agent systems. But that doesn’t make it plug-and-play. If you treat it like a stateless API layer, it’ll fight you—subtly, then loudly.

Azure gives you the scaffolding: scalable compute, robust eventing, strong IAM, and first-class observability. But the real work? It’s in mapping your agents’ cognitive responsibilities to actual services, managing memory without bloating cost, and debugging behaviors that are 80% correct but 20% wrong in costly ways.

And if your team is still measuring performance by how many prompts are processed per second? You’ve missed the point.

LangGraph on Azure is not about speed. It’s about composability, traceability, and aligned autonomy across agents. If you get that right, the rest can be tuned. But that architecture—the real architecture—is the strategy.