Key Takeaways

- In distributed AI environments, intent recognition acts as the semantic backbone that aligns agents toward a shared goal—without it, orchestration collapses into fragmented execution.

- Modern orchestrators don’t just pass tasks; they propagate evolving intents that guide agents dynamically, ensuring adaptive behavior as context and objectives change.

- Maintaining consistent intent across agents demands structural mechanisms like central intent stores, schema-based communication, and temporal versioning—not merely better prompts.

- In enterprise automation, intent drift or ambiguity can trigger incorrect API calls, approvals, or escalations, making explicit intent modeling critical for safety and governance.

- The future of orchestration lies in systems that don’t just “know” but negotiate intent—clarifying ambiguities, learning from corrections, and continuously refining shared understanding.

When you strip away the technical language, every AI system—no matter how layered or distributed—is trying to do one thing: understand what the user really wants. That’s the essence of intent recognition. In multi-agent orchestration, where dozens of autonomous entities collaborate to achieve a goal, that understanding becomes the backbone of coordination. Without it, even the most sophisticated agent ecosystem turns into a noisy room full of well-meaning but confused machines.


The Real Problem: Misaligned Understanding

A human analogy helps. Think of a cross-functional project team (say, marketing, sales, and operations) working on a product launch. Everyone hears the same directive: “Increase adoption.” But to marketing, that means optimizing campaigns; to sales, it’s outreach incentives; to ops, it’s supply planning. Unless there’s a shared interpretation of intent, collaboration unravels.

Agentic systems suffer from the same problem, only at machine speed. Each agent may be excellent at its local task, whether classifying, retrieving, or recommending, but if the agents’ understanding of the user’s or the system’s intent diverges, the overall orchestration falters. That’s why intent recognition isn’t a “nice-to-have” NLP feature. It’s the semantic glue that allows distributed intelligence to act coherently.

Why Intent Is Harder Than It Sounds

At a glance, “intent” seems straightforward: just figure out what the user said or wants. But orchestration environments—especially those involving LLM-based agents—rarely deal with clean inputs. Intents emerge in layers:

- Explicit Intent—A direct instruction, like “generate a report on Q3 sales.”

- Implicit Intent—The contextual or unspoken purpose, e.g., “I want to compare Q3 to Q2 to justify budget expansion.”

- Evolving Intent—The kind that changes mid-interaction: “Actually, focus on the European market only.”

Traditional bots could only manage the first type. Agentic architectures, on the other hand, must dynamically reinterpret the second and third. It’s not just about “slot-filling” anymore. It’s about maintaining a theory of shared purpose—across agents, across time.

Intent as the Coordination Primitive

In multi-agent systems, orchestration is usually handled through some form of planning or negotiation. But both rely on a common substrate: a shared model of what’s being pursued. Intent becomes that substrate.

You could think of it like this:

- Goal abstraction: Intent acts as a high-level contract that can be decomposed into subtasks.

- Context continuity: It preserves purpose as agents hand off control or data.

- Conflict resolution: Competing agents can reconcile priorities by referring back to intent.

In a practical deployment, the orchestration layer (sometimes called the meta-agent) constantly infers, updates, and rebroadcasts a “working intent” to sub-agents. For example:

- The retrieval agent understands that the goal is to “generate insight,” not to “fetch everything.”

- The summarization agent knows it’s synthesizing evidence for a financial report, not producing marketing copy.

- The reasoning agent adjusts its explanation depth based on inferred audience expertise.

All this happens not through rigid workflow logic but through intent propagation—a subtle but powerful shift from static task routing to goal-aware collaboration.
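To make intent propagation concrete, here is a minimal sketch. Every name in it (`WorkingIntent`, `Orchestrator`, the three agent classes, the `on_intent` method) is hypothetical and invented for illustration; real agents would call models and tools rather than return strings.

```python
from dataclasses import dataclass, field, replace
from typing import Optional


@dataclass(frozen=True)
class WorkingIntent:
    """Hypothetical shared record the orchestrator rebroadcasts to sub-agents."""
    goal: str       # e.g. "generate insight"
    domain: str     # e.g. "financial report"
    audience: str   # e.g. "executive" or "analyst"
    version: int = 1


class RetrievalAgent:
    def on_intent(self, intent: WorkingIntent) -> str:
        # Narrow the search when the goal is insight rather than a bulk fetch.
        scope = "top-ranked sources" if intent.goal == "generate insight" else "all sources"
        return f"retrieve {scope} for the {intent.domain}"


class SummarizationAgent:
    def on_intent(self, intent: WorkingIntent) -> str:
        return f"synthesize evidence for a {intent.domain}"


class ReasoningAgent:
    def on_intent(self, intent: WorkingIntent) -> str:
        # Explanation depth follows the inferred audience.
        depth = "brief" if intent.audience == "executive" else "detailed"
        return f"explain at {depth} depth"


@dataclass
class Orchestrator:
    agents: list = field(default_factory=list)
    intent: Optional[WorkingIntent] = None

    def update_intent(self, **changes) -> list:
        """Revise the working intent, bump its version, and rebroadcast it."""
        if self.intent is None:
            self.intent = WorkingIntent(**changes)
        else:
            self.intent = replace(self.intent, version=self.intent.version + 1, **changes)
        return [agent.on_intent(self.intent) for agent in self.agents]


orchestrator = Orchestrator(agents=[RetrievalAgent(), SummarizationAgent(), ReasoningAgent()])
print(orchestrator.update_intent(goal="generate insight", domain="financial report", audience="executive"))
print(orchestrator.update_intent(audience="analyst"))  # only the audience changed
```

Note that the second call changes only the audience, yet every agent receives the full, re-versioned intent. That is the difference from static task routing: agents react to the shared purpose, not to a fixed step in a workflow.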

Case Example: Knowledge-Worker AI Suites

Consider how enterprise-grade AI assistants like Microsoft Copilot or Google Duet (since rebranded as Gemini for Google Workspace) operate. Beneath the surface, these products can be understood as orchestrations of multiple agents: document parsers, semantic retrievers, summarizers, and model adapters. When a user types, “Summarize client sentiment across last month’s support tickets,” the system must disambiguate:

- Are we summarizing textual sentiment or numerical metrics?

- Which client?

- What constitutes “last month” in calendar terms?

The orchestration layer uses intent inference to route the query correctly—calling a classifier to detect sentiment analysis mode, triggering connectors to fetch data from CRM, and finally invoking an LLM to summarize.

If that same system later receives “And how does that compare to Q2?”, the intent shifts. The system must preserve context (the same client), detect comparative framing, and engage analytical agents rather than linguistic ones.

That kind of fluid adaptability isn’t possible without a continuous understanding of intent. Otherwise, every message would start from zero, like working with an intern who forgets what you said five minutes ago.
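A rough sketch of that carry-over, under stated assumptions: the `QueryIntent` structure, the keyword cues, and the client name “Contoso” are all placeholders, and a production system would use a model rather than substring matching to detect comparative framing.

```python
from dataclasses import dataclass, replace
from typing import Optional

# Hypothetical cue words that signal a comparative follow-up.
COMPARATIVE_CUES = ("compare", "versus", "relative to")
QUARTERS = ("q1", "q2", "q3", "q4")


@dataclass(frozen=True)
class QueryIntent:
    task: str                       # "summarize" or "compare"
    client: Optional[str]
    period: Optional[str]
    baseline: Optional[str] = None  # period to compare against, if any


def interpret_follow_up(previous: QueryIntent, message: str) -> QueryIntent:
    """Reuse the prior intent's context and change only what the follow-up implies."""
    text = message.lower()
    if any(cue in text for cue in COMPARATIVE_CUES):
        quarter = next((q for q in QUARTERS if q in text), None)
        return replace(previous, task="compare", baseline=quarter.upper() if quarter else None)
    return previous  # nothing recognized: keep the existing intent


first = QueryIntent(task="summarize", client="Contoso", period="last month")
follow_up = interpret_follow_up(first, "And how does that compare to Q2?")
print(follow_up)
```

The client and period survive untouched while the task flips to a comparison, which is what lets the orchestrator hand the request to analytical agents without asking the user to repeat themselves.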

When Intent Recognition Fails

It’s tempting to believe more data solves everything. Feed a large language model enough context, and it will “understand” the intent, right? Not quite.

Failure modes are everywhere:

- Ambiguity collapse: When the model overcommits to one interpretation despite uncertainty.

- Intent drift: When conversational context shifts but earlier assumptions persist.

- Mis-specified coordination: When agents interpret shared intent differently due to missing metadata or ontology mismatches.

In enterprise systems, these failures aren’t academic—they have operational costs. A misread intent in a workflow automation agent might result in a false escalation, an incorrect approval, or an unwanted API trigger.

That’s why intent recognition must be explicitly represented, not just “implied” through prompt engineering. The orchestration logic should have a memory of the current intent state, complete with confidence scores and history.
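One way such an explicit intent state might look is sketched below. `IntentState` and `IntentSnapshot` are illustrative names, not part of any particular framework, and the labels and confidence values in the usage lines are made up.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class IntentSnapshot:
    label: str            # interpreted intent, e.g. "approve_invoice"
    confidence: float     # 0.0 to 1.0
    source: str           # which agent or user produced this interpretation
    recorded_at: datetime


@dataclass
class IntentState:
    """Keeps the current intent plus every earlier interpretation."""
    history: list = field(default_factory=list)

    @property
    def current(self):
        return self.history[-1] if self.history else None

    def record(self, label: str, confidence: float, source: str) -> IntentSnapshot:
        if not 0.0 <= confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")
        snapshot = IntentSnapshot(label, confidence, source, datetime.now(timezone.utc))
        self.history.append(snapshot)
        return snapshot

    def label_changed(self) -> bool:
        """True when the latest interpretation differs from the one before it."""
        return len(self.history) >= 2 and self.history[-1].label != self.history[-2].label


state = IntentState()
state.record("approve_invoice", 0.62, source="classifier")
state.record("escalate_invoice", 0.81, source="user_correction")
print(state.current.label, state.label_changed())
```

Because the history is kept rather than overwritten, a later audit can see that the system first leaned toward an approval and why it changed course.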

Designing for Intent Fidelity

How do you build a system that not only detects but also preserves intent across multiple agents? In practice, teams apply a mix of architectural and linguistic strategies:

- Central Intent Store: Maintain an intent registry as part of the orchestration graph. Each agent reads and updates this shared structure, similar to how distributed systems use a blackboard model.

- Schema-Based Communication: Instead of passing free-form text, agents exchange structured messages (e.g., JSON with fields like intent_id, goal_type, and context_scope).

- Confidence and Ambiguity Handling: Allow agents to flag uncertain intent interpretations and request clarification. A “semantic feedback loop” can be more reliable than silent guessing.

- Temporal Awareness: Intent isn’t static; timestamping and versioning help track evolution across a session or project.

- Human-in-the-Loop Recovery: In critical systems (finance, healthcare), surface intent conflicts to a human overseer rather than auto-resolving them.
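Several of these strategies can be combined in a few dozen lines. The sketch below is an assumption-laden illustration: the fields intent_id, goal_type, and context_scope come from the list above, while `version`, `confidence`, the `IntentStore` class, and the 0.6 review threshold are invented for the example.

```python
import json
from dataclasses import dataclass

# Expected type(s) for each field of the hypothetical message schema.
SCHEMA = {
    "intent_id": str,
    "goal_type": str,
    "context_scope": str,
    "version": int,             # assumed field, used for temporal versioning
    "confidence": (int, float), # assumed field, used for ambiguity handling
}


@dataclass(frozen=True)
class IntentMessage:
    intent_id: str
    goal_type: str
    context_scope: str
    version: int
    confidence: float


def parse_message(raw: str) -> IntentMessage:
    """Validate a JSON payload against the schema instead of trusting free text."""
    payload = json.loads(raw)
    for name, expected in SCHEMA.items():
        if name not in payload:
            raise ValueError(f"missing field: {name}")
        if not isinstance(payload[name], expected):
            raise ValueError(f"wrong type for field: {name}")
    return IntentMessage(**{name: payload[name] for name in SCHEMA})


class IntentStore:
    """Central registry: rejects stale versions, queues doubtful updates for a human."""

    def __init__(self, review_threshold: float = 0.6):
        self._latest = {}
        self.review_queue = []
        self.review_threshold = review_threshold

    def publish(self, message: IntentMessage) -> bool:
        current = self._latest.get(message.intent_id)
        if current is not None and message.version <= current.version:
            return False  # stale or duplicate version
        if message.confidence < self.review_threshold:
            self.review_queue.append(message)  # surface to a human, do not auto-apply
            return False
        self._latest[message.intent_id] = message
        return True

    def get(self, intent_id: str):
        return self._latest.get(intent_id)


store = IntentStore()
raw = json.dumps({"intent_id": "i-1", "goal_type": "report",
                  "context_scope": "EU sales, Q3", "version": 1, "confidence": 0.9})
print(store.publish(parse_message(raw)))
```

The design choice worth noting is that a low-confidence update never silently replaces the accepted intent; it waits in `review_queue` until someone resolves it.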

Ironically, good intent recognition systems embrace doubt. They don’t rush to decide what the user meant—they negotiate meaning. That’s a very human trait, and one reason multi-agent design feels as much like organizational psychology as it does computer science.

Understanding What’s Not Said

Under the hood, intent recognition relies on a mix of NLP models—semantic parsers, embeddings, and transformer-based classifiers. But the real differentiator lies in pragmatic understanding: discerning what’s implied.

For example:

- “Can you check if the client’s renewal went through?” might mean “Access the billing API and confirm status.”

- “Remind me what we discussed with Contoso last quarter” implies fetching meeting summaries, not random emails.

This is where context fusion—merging linguistic cues with system metadata—becomes essential. In modern stacks, that often means combining vector similarity (from LLM embeddings) with structured data lookups (from CRM or ERP). The resulting hybrid intent model has situational awareness: it doesn’t just parse words; it interprets them in light of operational context.
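As a rough illustration of context fusion, the sketch below adds a fixed boost to a cosine-similarity score whenever structured metadata supports an intent. The three-dimensional vectors, the intent names, the metadata keys, and the 0.3 boost are all toy assumptions; a real system would use vectors from an embedding model and signals from actual CRM or ERP lookups.

```python
import math

# Toy "embeddings" standing in for vectors produced by an embedding model.
INTENT_VECTORS = {
    "check_billing_status": [0.9, 0.1, 0.0],
    "fetch_meeting_summaries": [0.1, 0.9, 0.1],
}

# Structured signals (e.g. from a CRM lookup) that make an intent more plausible.
METADATA_SIGNALS = {
    "check_billing_status": lambda meta: bool(meta.get("has_open_renewal")),
    "fetch_meeting_summaries": lambda meta: meta.get("recent_meetings", 0) > 0,
}


def cosine_similarity(a, b) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def fuse_intent(query_vector, metadata, boost: float = 0.3):
    """Score each intent by semantic similarity plus a boost from system metadata."""
    scores = {}
    for intent, vector in INTENT_VECTORS.items():
        score = cosine_similarity(query_vector, vector)
        if METADATA_SIGNALS[intent](metadata):
            score += boost
        scores[intent] = score
    best = max(scores, key=scores.get)
    return best, scores


query = [0.6, 0.4, 0.0]  # leans toward billing on wording alone
print(fuse_intent(query, {})[0])
print(fuse_intent(query, {"recent_meetings": 2})[0])
```

With no metadata the wording alone decides; once the system knows there were recent meetings, the same query resolves differently. An additive boost is only one possible fusion rule, and the weight would need tuning against real traffic.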

Multi-Agent Implications: Negotiation and Autonomy

Intent recognition also influences how agents negotiate autonomy. Suppose three agents—Planning, Data, and Validation—are collaborating on a task. If all share the same intent model, they can act semi-independently. But if each infers intent differently, the orchestration layer becomes a bottleneck.

Some emerging designs address this through intent consensus protocols—essentially, mini-negotiations where agents exchange interpretations and converge on a shared goal. It’s not unlike distributed consensus algorithms (like Raft or Paxos), but for semantics instead of state.

That may sound abstract, but it’s already showing up in advanced frameworks like LangGraph, AutoGen, and CrewAI, where agents can “argue” or “propose” next steps. Intent is what keeps those arguments productive. Without it, collaboration devolves into semantic noise.
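The simplest possible version of an intent consensus step is a single round of confidence-weighted voting, sketched below. This is an illustration only: it does not use the APIs of LangGraph, AutoGen, or CrewAI, it is far weaker than Raft or Paxos, and the `intent_consensus` function, the agent names, and the 0.6 quorum are assumptions.

```python
from collections import defaultdict


def intent_consensus(proposals: dict, quorum: float = 0.6):
    """One round of confidence-weighted voting over intent labels.

    proposals maps agent name -> (intent_label, confidence).
    Returns (winning_label_or_None, winner_share_of_total_weight).
    """
    weights = defaultdict(float)
    for label, confidence in proposals.values():
        weights[label] += confidence
    total = sum(weights.values())
    if total == 0:
        return None, 0.0
    winner = max(weights, key=weights.get)
    share = weights[winner] / total
    # Below quorum, no label is adopted; the caller should ask for clarification.
    return (winner if share >= quorum else None), share


proposals = {
    "planning": ("quarterly_report", 0.9),
    "data": ("quarterly_report", 0.7),
    "validation": ("budget_forecast", 0.4),
}
print(intent_consensus(proposals))
```

When no interpretation reaches the quorum, the function returns no winner at all, which gives the orchestration layer an explicit signal to escalate or ask the user rather than proceed on a split reading.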

Cloud Platforms and Intent-Aware Orchestration

Both AWS and Azure have started embedding intent recognition deeper into their AI orchestration stacks.

- Azure OpenAI + Copilot Studio uses orchestration flows that carry “conversation intents” between plugins and connectors.

- Amazon Bedrock Agents manage user requests through action groups, whose schemas describe which actions the agent can trigger.

These aren’t just marketing terms—they reflect a real shift toward intent-centric architectures. Instead of rigid pipelines, we’re seeing orchestrators that dynamically recompose based on the evolving purpose of the interaction.

Still, the tooling remains primitive. Many enterprises today hardcode intents into YAML definitions or prompt templates—a brittle approach. The next step will be self-learning orchestration, where intent models adapt to new phrasing and patterns automatically, just as CRM systems learn customer preferences over time.

The Human Paradox

There’s an irony in all this. The more sophisticated our intent recognition becomes, the more it mimics human uncertainty. Human collaborators don’t always know exactly what the other person means; they infer, clarify, and adjust on the fly. Good multi-agent systems should do the same—gracefully handle misalignment rather than pretend it doesn’t exist.

That requires an acceptance: intent is not a static artifact but a living hypothesis. The orchestration layer must continually test and refine that hypothesis. And perhaps that’s the truest sign of intelligence—not perfect comprehension, but resilient misunderstanding.

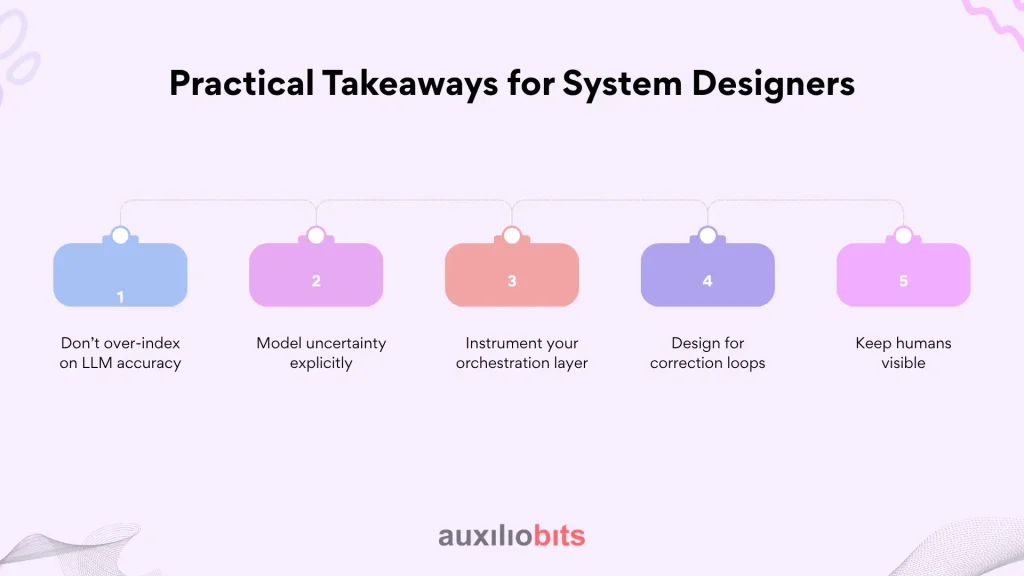

Practical Takeaways for System Designers

For those actually building or deploying multi-agent orchestrations in production, here’s what experience has shown matters most:

- Don’t over-index on LLM accuracy. Intent errors usually come from missing context, not bad language models.

- Model uncertainty explicitly. Keep a probability or score for each candidate intent rather than collapsing to a single hard label.

- Instrument your orchestration layer. Log every intent interpretation and transition—it’s invaluable for debugging.

- Design for correction loops. Allow agents (or users) to clarify and refine intent mid-flow.

- Keep humans visible. Even a simple “did you mean…” checkpoint can prevent automation disasters.
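Three of these points (explicit uncertainty, logging, and a “did you mean…” checkpoint) fit together naturally. The following sketch assumes raw scores from some upstream classifier; the `decide` function, the intent labels, and the 0.6 threshold are hypothetical.

```python
import logging
import math

logger = logging.getLogger("intent")


def softmax(scores: dict) -> dict:
    """Turn raw scores into a probability distribution over candidate intents."""
    peak = max(scores.values())
    exps = {label: math.exp(value - peak) for label, value in scores.items()}
    total = sum(exps.values())
    return {label: value / total for label, value in exps.items()}


def decide(scores: dict, min_confidence: float = 0.6) -> dict:
    """Proceed only when the top intent is confident enough; otherwise ask."""
    probs = softmax(scores)
    ranked = sorted(probs, key=probs.get, reverse=True)
    top = ranked[0]
    # Log every interpretation so intent transitions can be debugged later.
    logger.info("intent=%s p=%.2f candidates=%s", top, probs[top], ranked)
    if probs[top] >= min_confidence or len(ranked) == 1:
        return {"action": "proceed", "intent": top, "probability": probs[top]}
    question = f"Did you mean '{top}' or '{ranked[1]}'?"
    return {"action": "clarify", "question": question, "probability": probs[top]}


logging.basicConfig(level=logging.INFO)
print(decide({"approve_request": 2.0, "escalate_request": 1.8, "reject_request": 0.1}))
print(decide({"approve_request": 4.0, "escalate_request": 1.0}))
```

The first call has two near-tied candidates and returns a clarification question; the second is confident enough to proceed. The threshold is a policy decision, and riskier actions would reasonably warrant a higher one.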

A Shift in How We Think About Automation

Once you view orchestration through the lens of intent, automation stops being about workflow execution and starts looking more like goal alignment. The orchestration layer becomes less of a traffic cop and more of a facilitator of shared understanding.

That’s a profound change. We used to design systems that said, “Tell me exactly what to do.” Now we’re building ones that ask, “What are you really trying to accomplish?”

And while that may sound like a philosophical shift, it’s an architectural one too. Because in a multi-agent world, intelligence isn’t measured by how well each agent performs in isolation—but by how faithfully they interpret the intent that binds them together.