Key Takeaways

- Agent-driven digital twins move beyond static replication by enabling autonomous decision-making, emergent behavior detection, and scenario simulations that traditional twins cannot achieve.

- They improve operational efficiency and resilience by proactively modeling complex production processes, predicting bottlenecks, and suggesting optimal interventions.

- Layered architecture is crucial: Data integration, autonomous agents, simulation engines, and actionable visualization together create an effective decision-support system.

- Challenges like data quality, overfitting, and computational load must be managed, emphasizing phased deployment, continuous validation, and operator trust.

- The future extends beyond production optimization to strategic decision-making, collaborative supply chains, and enterprise-wide AI-driven insights, making agent-driven twins a core enabler of digital transformation.

The term “digital twin” is almost ubiquitous in manufacturing and industrial operations. Yet as the term is commonly used, most tools and processes only scratch the surface, treating the twin as a static, high-fidelity model. The next generation of digital twins goes beyond visualization: it introduces agent-driven simulation that learns, adapts, and even predicts system behavior under variable conditions. This is not just hype; it is a change in how production is understood, controlled, and optimized.

Digital Twins: From Static Models to Dynamic Agents

Traditional digital twins replicate the physical world in digital form, capturing geometry, operational parameters, and environmental context. But here is the catch: most of these twins are passive. They tell you what has happened, sometimes what is happening, but rarely what could happen. Static models can detect anomalies, suggest preventive maintenance, or track production throughput, but they do not autonomously explore alternative scenarios or simulate the cascading effects of operational decisions.

Enter agent-driven digital twins. Instead of a single monolithic model, the system comprises several autonomous agents, each representing a component, a machine, or even a human operator. These agents are not just data containers; they are decision-making entities that interact with each other, react to changing conditions, learn from previous outcomes, and simulate many “what-if” scenarios simultaneously.

Think of a factory floor: conveyor belts, robot arms, quality inspection stations, and supply chain logistics are all interconnected. No element exists in isolation; a disruption at one station ripples through the entire process. Agent-based digital twins capture these dependencies and provide actionable insights that static twins often miss.
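To make the dependency argument concrete, here is a minimal sketch of a serial line in which each station is a small agent. The `StationAgent` class, the `run_line` and `build_line` helpers, the rates, and the outage ticks are all hypothetical and chosen only for illustration; a real twin would rely on calibrated parameters and a proper simulation engine.

```python
from dataclasses import dataclass


@dataclass
class StationAgent:
    """Hypothetical agent for one station on a serial line."""
    name: str
    rate: int                              # units processed per tick when running
    down_ticks: frozenset = frozenset()    # ticks during which the station is stopped
    buffer: int = 0                        # units waiting at this station

    def step(self, tick: int) -> int:
        """Process what capacity allows and return the units passed downstream."""
        if tick in self.down_ticks:
            return 0
        done = min(self.rate, self.buffer)
        self.buffer -= done
        return done


def run_line(stations, ticks, feed_per_tick):
    """Feed raw units into the first station; return units finished by the last."""
    finished = 0
    for tick in range(ticks):
        incoming = feed_per_tick
        for station in stations:
            station.buffer += incoming
            incoming = station.step(tick)
        finished += incoming
    return finished


def build_line(outage=frozenset()):
    """Three balanced stations; the optional outage hits the middle one."""
    return [
        StationAgent("conveyor", rate=5),
        StationAgent("robot_arm", rate=5, down_ticks=outage),
        StationAgent("inspection", rate=5),
    ]


baseline = run_line(build_line(), ticks=10, feed_per_tick=5)
disrupted_line = build_line(outage=frozenset({3, 4}))
disrupted = run_line(disrupted_line, ticks=10, feed_per_tick=5)
print(baseline, disrupted, disrupted_line[1].buffer)
```

In this toy setup a two-tick stop at the middle station lowers finished output and leaves a backlog in front of that station. Because the line has no spare capacity, the backlog is never cleared, which is the kind of knock-on effect a static view of a single machine would not show.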

Why Agent-Driven Simulations Matter

You may wonder: when traditional analytics work quite well, why complicate matters with agent-driven simulation? The answer lies in complexity and adaptability.

- Emergent behavior: Production systems are inherently complex. Bottlenecks are not always linear, and small changes can have ripple effects. Agent-driven models allow these emergent behaviors to surface in simulation before they disrupt reality.

- Proactive adaptation: Instead of reacting to downtime or defects, agent-driven twins simulate several intervention strategies. For example, if a critical machine overheats, agents can predict downstream effects, allowing operations managers to reroute workflows or adjust the schedule in advance.

- Scenario exploration at scale: Consider new product lines, changes in supplier lead times, or unexpected maintenance. Traditional models struggle with combinatorial scenarios. Agents can run parallel simulations, weigh trade-offs, and suggest the best course of action.

- Human-in-the-loop decision making: Agents do not replace humans; they augment decision making. Operators can experiment in a virtual environment, validate hypotheses, and gain confidence before executing a real-world change.
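The scenario-exploration idea can be sketched for the overheating-machine example. Everything below is an assumption made for illustration: the `simulate` function is a toy stand-in for a full agent simulation, and the heat index, trip threshold, and downtime figures are invented numbers, not plant data.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

SHIFT_MINUTES = 480
UNITS_PER_MINUTE = 2      # hypothetical output at 100% speed
HEAT_LIMIT = 60           # hypothetical heat index above which the machine trips
TRIP_DOWNTIME = 120       # hypothetical minutes lost to an unplanned stop


def simulate(scenario):
    """Toy stand-in for a full agent simulation; returns units made in a shift."""
    speed_pct, cooldown_min = scenario
    heat_index = speed_pct - cooldown_min
    unplanned = TRIP_DOWNTIME if heat_index > HEAT_LIMIT else 0
    run_minutes = SHIFT_MINUTES - cooldown_min - unplanned
    return UNITS_PER_MINUTE * speed_pct * run_minutes // 100


def rank_scenarios(speeds, cooldowns):
    """Evaluate every speed/cooldown combination and sort best-first."""
    scenarios = list(product(speeds, cooldowns))
    with ThreadPoolExecutor() as pool:
        outputs = list(pool.map(simulate, scenarios))
    return sorted(zip(scenarios, outputs), key=lambda pair: pair[1], reverse=True)


ranking = rank_scenarios(speeds=(100, 80, 60), cooldowns=(0, 30, 60))
best_scenario, best_output = ranking[0]
print(best_scenario, best_output)
```

The ranked list, rather than a single answer, is what a human-in-the-loop workflow would present: an operator can see how close the alternatives are before committing. Threads are used here only to keep the sketch short; a CPU-heavy simulation would more likely run in separate processes or on a cluster.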

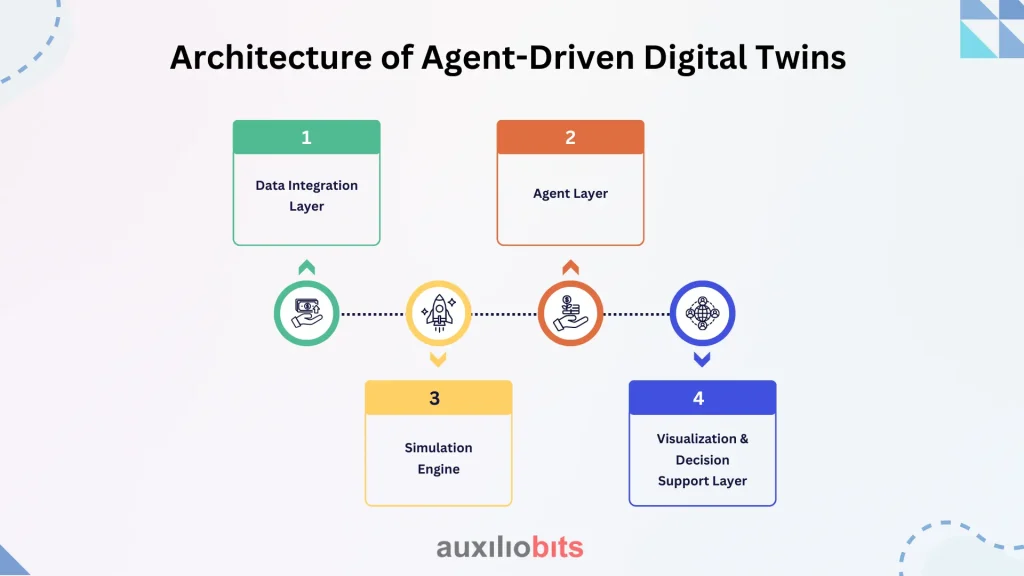

Architecture of Agent-Driven Digital Twins

Building an agent-driven digital twin requires a layered approach:

1. Data Integration Layer:

Real-time sensors, IoT devices, ERP systems, MES (manufacturing execution systems), and external supply chain data feed the digital environment. Accuracy is paramount. "Garbage in, garbage out" still applies, perhaps more so when many agents rely on the same data.

2. Agent Layer:

Each agent encodes the logic, constraints, and objectives of the entity it represents. Some agents optimize for efficiency, others for cost reduction or quality control. Agents interact on the basis of defined rules but can also adapt using techniques such as reinforcement learning.

3. Simulation Engine:

This is the environment in which the agents operate. It must handle concurrent, interdependent, and stochastic events, essentially mimicking the unpredictable nature of real production systems.

4. Visualization & Decision Support Layer:

Insights from the simulation are presented in actionable formats, such as dashboards, alerts, and scenario comparisons, so that managers can decide when and how to intervene.
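The four layers above can be sketched end to end in a few lines. This is a deliberately simplified illustration, not a reference design: the `Reading` type, the function names, the field names `machine` and `temp_c`, and the temperature limits are all hypothetical, and the "simulation engine" here is a single deterministic step rather than a concurrent, stochastic one.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    machine: str
    temperature_c: float


def ingest(raw_rows):
    """Data integration layer: keep rows with a machine name and numeric temperature."""
    readings = []
    for row in raw_rows:
        temp = row.get("temp_c")
        if row.get("machine") and isinstance(temp, (int, float)):
            readings.append(Reading(row["machine"], float(temp)))
    return readings


class MachineAgent:
    """Agent layer: a rule-based agent with one constraint (a temperature limit)."""

    def __init__(self, name, max_temp_c):
        self.name = name
        self.max_temp_c = max_temp_c

    def decide(self, reading):
        return "slow_down" if reading.temperature_c > self.max_temp_c else "run"


def simulate(agents, readings):
    """Simulation engine (greatly simplified): one step in which each agent reacts."""
    latest = {r.machine: r for r in readings}
    return {a.name: a.decide(latest[a.name]) for a in agents if a.name in latest}


def summarize(decisions):
    """Decision support layer: surface only the decisions that need attention."""
    return [
        f"ALERT: {name} -> {action}"
        for name, action in sorted(decisions.items())
        if action != "run"
    ]


raw = [
    {"machine": "press", "temp_c": 61.0},
    {"machine": "oven", "temp_c": 212.5},
    {"machine": "robot", "temp_c": None},   # bad row, dropped at ingestion
]
agents = [MachineAgent("press", 80), MachineAgent("oven", 200), MachineAgent("robot", 70)]
alerts = summarize(simulate(agents, ingest(raw)))
print(alerts)
```

Keeping the layers as separate functions means a bad sensor row is rejected once, at ingestion, instead of being handled differently by every agent that reads it.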

A real-world example: the Siemens Amberg Electronics Plant in Germany uses agent-driven digital twins for its PCB assembly lines. Autonomous agents simulate component flow, machine availability, and workforce allocation. This has reportedly increased overall production efficiency by about 20% while reducing scrap rates.

Challenges and Nuances

Despite their promise, agent-driven digital twins are not a panacea. There are nuances and potential drawbacks:

- Data quality and latency: Agents rely on timely and accurate data. Inconsistent sensor readings or delayed ERP updates can propagate errors through the simulation.

- Overfitting to historical patterns: Learning-based agents can sometimes become too “comfortable” with historical data and miss novel disruptions. This is particularly important in industries prone to sudden shocks in demand or in the supply chain.

- Computational Overhead: Running many interacting agents in a high-fidelity simulation is resource-intensive. Cloud computing and edge computing have reduced some obstacles, but scaling remains a practical concern.

- Interpretability: Agents may suggest counterintuitive strategies. Without an explanation, operators may hesitate to trust the model, which highlights the need for a transparent decision-making framework.

These challenges underline the importance of a phased deployment: starting with high-impact areas, validating agent behavior against known scenarios, and scaling progressively.
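One simple way to validate agent behavior against known scenarios is to replay a period the plant has already lived through and compare simulated with observed output. The sketch below uses mean absolute percentage error as the yardstick; the metric, the function names, the throughput figures, and the 10% tolerance are assumptions chosen for illustration, and the observed values are assumed to be non-zero.

```python
def mean_abs_pct_error(observed, simulated):
    """Mean absolute percentage error between observed and simulated values."""
    if len(observed) != len(simulated) or not observed:
        raise ValueError("need two non-empty series of equal length")
    total = sum(abs(o - s) / o for o, s in zip(observed, simulated))
    return 100 * total / len(observed)


def ready_to_scale(observed, simulated, tolerance_pct):
    """Gate for the next rollout phase: the twin must replay history closely enough."""
    return mean_abs_pct_error(observed, simulated) <= tolerance_pct


# Hypothetical daily throughput for a scenario the plant has already experienced.
observed_units = [100, 120, 80]
simulated_units = [95, 126, 84]

error = mean_abs_pct_error(observed_units, simulated_units)
print(round(error, 2), ready_to_scale(observed_units, simulated_units, tolerance_pct=10))
```

A gate like this is crude, but it gives each deployment phase an explicit, inspectable criterion, which also helps with operator trust.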

Practical Applications in Production Optimization

- Dynamic Scheduling: Agents model production lines and labor allocation. Instead of a static Gantt chart, managers can test schedule shifts, machine maintenance, or supply delays. Agents can recommend the least disruptive schedule changes, balancing throughput with downtime risk.

- Predictive Maintenance: Traditional predictive maintenance models detect likely failures based on historical patterns. Agent-driven twins go further, simulating maintenance interventions and estimating impact on output, energy consumption, and downstream processes.

- Quality Assurance: Defect propagation in a production line is rarely isolated. Agents representing inspection stations, assembly robots, and upstream suppliers can identify likely defect sources, suggest inspection adjustments, and even simulate process tweaks to prevent recurrence.

- Supply Chain Resilience: Agents can represent suppliers, transport logistics, inventory buffers, and demand fluctuations. By running agent-based simulations, manufacturers can identify vulnerabilities and proactively adjust inventory or sourcing strategies.

One notable case is Bosch’s use of agent-based twins in automotive manufacturing. When a key stamping press experiences a minor delay, agents simulate potential bottlenecks across assembly lines and suggest rerouting subassemblies. The result: minimal production loss and better resource utilization.

Designing Effective Agent-Driven Twins

From experience, several design principles distinguish successful implementations:

- Modularity: Agents must be modular, reflecting the separation of the real world into components and processes. This allows easy updates and scalable use.

- Hierarchy of Agents: Not all agents are the same. Some work at the machine level, others at the line level, and some at the plant level. Hierarchical coordination prevents chaos and enables meaningful emergent behavior.

- Continuous Learning: The digital twin must evolve. Feedback loops let agents recalibrate their strategies from real-world outcomes, gradually improving prediction accuracy and decision quality.

- Scenario Diversity: Encourage agents to explore non-intuitive strategies. Sometimes the best optimization is not incremental but disruptive, such as temporarily reallocating resources in a way that humans might resist at first.
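The hierarchy principle from the list above can be illustrated with a small sketch in which machine-level agents report urgency, line-level agents rank their own machines, and a plant-level agent divides a shared maintenance-crew budget. The class names, the 0 to 10 urgency scale, and the greedy allocation rule are all assumptions made for this example, not a description of any particular deployment.

```python
class MachineAgent:
    """Machine level: reports how urgently it needs a maintenance crew (0-10)."""

    def __init__(self, name, urgency):
        self.name = name
        self.urgency = urgency


class LineAgent:
    """Line level: ranks its own machines and speaks for them to the plant."""

    def __init__(self, name, machines):
        self.name = name
        self.ranked = sorted(machines, key=lambda m: m.urgency, reverse=True)

    def next_urgency(self, crews_granted):
        """Urgency of the most urgent machine that is still unserved."""
        if crews_granted < len(self.ranked):
            return self.ranked[crews_granted].urgency
        return 0

    def assign(self, crews):
        return [m.name for m in self.ranked[:crews]]


class PlantAgent:
    """Plant level: splits a shared crew budget across lines, one crew at a time."""

    def __init__(self, lines, total_crews):
        self.lines = lines
        self.total_crews = total_crews

    def plan(self):
        granted = {line.name: 0 for line in self.lines}
        for _ in range(self.total_crews):
            neediest = max(self.lines, key=lambda ln: ln.next_urgency(granted[ln.name]))
            if neediest.next_urgency(granted[neediest.name]) == 0:
                break  # no line needs another crew
            granted[neediest.name] += 1
        return {line.name: line.assign(granted[line.name]) for line in self.lines}


line_a = LineAgent("line_a", [MachineAgent("press", 9), MachineAgent("welder", 4)])
line_b = LineAgent("line_b", [MachineAgent("oven", 7), MachineAgent("robot", 2)])
plan = PlantAgent([line_a, line_b], total_crews=2).plan()
print(plan)
```

The plant agent never inspects individual machines; it only asks each line for its next most urgent need. That separation is what keeps the levels modular and lets a line be updated without touching the plant-level logic.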

Beyond Optimization: Strategic Decision Support

Agent-driven twins are not limited to daily tasks. They can inform strategic decisions:

- Capacity Planning: Before investing in new equipment or plants, agents can simulate various production scenarios, throughput expectations, and bottlenecks.

- Energy Optimization: In energy-intensive industries such as steel or chemical production, agents can model the use of electricity, shift patterns, and cooling requirements to reduce costs and emissions.

- Product Customization: As the demand for mass-customized products increases, agents simulate complex production paths for various configurations, helping managers balance flexibility with efficiency.
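For the capacity-planning case, even a very small what-if model shows why simulating before investing matters. The sketch below assumes a purely serial line whose throughput equals that of its slowest station; the station names and hourly capacities are hypothetical.

```python
def line_throughput(capacity_per_hour):
    """A serial line can go no faster than its slowest station."""
    return min(capacity_per_hour.values())


def evaluate_investments(capacity_per_hour, extra_per_machine):
    """Throughput gain from adding one machine at each station, one at a time."""
    baseline = line_throughput(capacity_per_hour)
    gains = {}
    for station, extra in extra_per_machine.items():
        what_if = dict(capacity_per_hour)
        what_if[station] += extra
        gains[station] = line_throughput(what_if) - baseline
    return gains


# Hypothetical capacities in units per hour.
capacity = {"stamping": 60, "welding": 45, "painting": 50}
extra = {"stamping": 60, "welding": 45, "painting": 50}

gains = evaluate_investments(capacity, extra)
print(gains)
```

In this toy case only the investment at the bottleneck station changes line throughput, and the gain is capped by the next-slowest station. An agent-based twin would replace the `min` rule with a full simulation, but the comparison of what-if variants against a baseline is the same.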

Future Directions

The field is still evolving. Some emerging trends to watch:

- Integration with Agentic AI: Advanced AI agents are being developed that not only simulate physical processes but also understand market signals, regulatory constraints, and supplier negotiations. This expands digital twins from factory optimization to enterprise-wide decisions.

- Hybrid Twins: The combination of physics-based models with data-driven AI models allows for better handling of incomplete data.

- Edge Deployment: Running agent simulations on production hardware reduces latency and allows real-time autonomous decisions.

- Collaborative Twins Across Ecosystems: Imagine many companies in a supply chain connecting their digital twins. Agents can negotiate logistics, predict supply disruptions, and collaboratively optimize multi-entity production.

Lessons from Real Deployments

It is worth noting that the most effective agent-driven twins are not necessarily the most elaborate ones. Siemens, Bosch, and some other early adopters emphasized practicality:

- Start small: automate the most volatile or expensive part of the process first.

- Validate assumptions: Agents are only as good as the rules and data behind them.

- Avoid overconfidence: a twin can provide insight, but humans still need to make the decisions.

- Embrace “imperfect” simulations: Even a model that does not perfectly predict every result can save millions if it exposes trends, bottlenecks, or weaknesses.

Sometimes, a simple agent representing the downtime pattern of a single machine can deliver more ROI than a broad plant-wide model that operators do not fully trust.

Final Thoughts

Agent-driven digital twins are not just an evolution of technology—they’re a shift in mindset. They invite operations managers, engineers, and supply chain planners to think in terms of autonomous interactions, emergent behavior, and scenario-based optimization. The promise is clear: higher efficiency, lower downtime, proactive maintenance, and a new lens for strategic decision-making. Yet, success hinges on careful design, data integrity, operator trust, and iterative learning. Those who master these subtleties will find themselves not just optimizing production, but transforming it.