Key Takeaways

- Agentic automation adoption fails more from cultural resistance than technical complexity—change management bridges that gap.

- Alignment between people, process, and adaptive agents is not sequential but interdependent; each shapes the success of the other.

- Governance, accountability, and transparency are essential to build trust in autonomous systems.

- Over-automation or poor communication around role changes can erode employee confidence and derail adoption.

- Successful enterprises embed continuous learning—not just in their agents, but in their teams and operating culture.

Organizations embarking on agentic automation journeys often underestimate one crucial factor: change management. Technology adoption, especially when it involves autonomous, adaptive agents, isn’t just a question of deployment or licensing. It’s about synchronizing people, processes, and technology in a way that produces tangible value while minimizing disruption. And yet, time and again, enterprises find themselves frustrated—not by the software, but by misaligned expectations, resistance, and process friction.

Understanding the Human Factor in Agentic Automation

Agentic automation (or Agentic Process Automation, APA) extends beyond traditional RPA. It introduces autonomy, decision-making capabilities, and self-learning mechanisms. While this promises efficiency and scalability, it also introduces uncertainty. Employees who have historically executed tasks deterministically now face machines that adapt, negotiate, and sometimes act unpredictably. This is where change management becomes non-negotiable.

A common mistake is assuming that training a team on a new interface or workflow is sufficient. In reality, the challenge is behavioral, cultural, and cognitive. Workers often ask, “Will my job survive this?” or “If the agent makes a decision, who is accountable?” These are not trivial concerns; ignoring them can derail even the most technically sound automation project.

Case in point: A global logistics company implemented an agentic credit assessment bot. Initially, employees resisted because it could autonomously approve certain low-risk clients. Even though it reduced manual errors and sped up approvals by 40%, pushback persisted until management established a “decision validation window,” allowing humans to review agent decisions for the first three months. Simple oversight mechanisms like this can bridge the trust gap.
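A "decision validation window" like the one described above can be reduced to a simple date check. The sketch below is illustrative only: the function name, the go-live date, and the 90-day length (standing in for "three months") are assumptions, not details from the logistics company's system.

```python
from datetime import date, timedelta

# Assumption: "three months" is approximated as 90 days.
VALIDATION_WINDOW = timedelta(days=90)


def needs_human_review(go_live: date, decision_date: date) -> bool:
    """Return True while the agent is still inside its validation window."""
    return go_live <= decision_date < go_live + VALIDATION_WINDOW


go_live = date(2024, 1, 1)  # hypothetical go-live date
print(needs_human_review(go_live, date(2024, 2, 15)))  # inside the window
print(needs_human_review(go_live, date(2024, 6, 1)))   # after the window
```

Keeping the window as a single named constant makes it easy to extend or shorten the review period as trust in the agent changes.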

Process Alignment: The Backbone of Successful Adoption

People alone don’t make automation successful; processes must be equally aligned. Many organizations adopt agentic solutions on top of outdated or fragmented workflows. An agent is only as effective as the process it’s embedded in. Misalignment can produce unintended consequences, such as automating inefficiencies or magnifying bottlenecks.

Processes for agentic adoption differ from traditional RPA. RPA can tolerate procedural rigidity; agents thrive on context, learning, and adaptability. This requires:

- Process transparency: Employees must understand what the agent does, when, and why. Black-box automation is a recipe for mistrust.

- Decision boundaries: Clearly defined limits of autonomy prevent agents from overstepping their intended scope.

- Feedback loops: Real-time monitoring and human feedback are critical. Agents should learn from exceptions and corrections.

- Process modularity: Breaking workflows into discrete, well-defined modules allows agents to adapt without disrupting the entire operation.
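To make the idea of decision boundaries concrete, here is a minimal sketch of how a limit of autonomy might be expressed in code. The class name, the thresholds, and the two routing outcomes are all hypothetical; a real deployment would define its own criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionBoundary:
    """Limits of autonomy for one agent (all values are illustrative)."""
    max_amount: float      # largest amount the agent may approve alone
    min_confidence: float  # below this confidence, the agent must escalate


def route(amount: float, confidence: float, boundary: DecisionBoundary) -> str:
    """Return 'auto' if the decision is inside the boundary, else 'escalate'."""
    if amount <= boundary.max_amount and confidence >= boundary.min_confidence:
        return "auto"
    return "escalate"


boundary = DecisionBoundary(max_amount=5_000.0, min_confidence=0.9)
print(route(1_200.0, 0.95, boundary))   # within both limits
print(route(12_000.0, 0.99, boundary))  # amount exceeds the limit
```

Because the boundary is plain data, employees can read it, which supports the transparency point above, and escalated cases can feed the human feedback loop.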

A practical example comes from a manufacturing firm that introduced agentic supply chain bots. Instead of a single monolithic workflow, the company modularized supplier selection, order prioritization, and shipment tracking. Agents could optimize each module independently, but humans retained control over exceptions and strategic overrides. This modular approach enabled faster adaptation to demand fluctuations while maintaining human oversight.

People-Process-Technology Interdependency

Successful change management recognizes that people, process, and technology are not separate levers—they are interdependent. Adjusting one without considering the others risks suboptimal outcomes.

- Technology without process: Introducing autonomous agents into a poorly defined workflow amplifies inefficiencies. For example, an agent tasked with approving invoices may fail if the underlying approval criteria are inconsistent or subjective. The result? Increased errors and employee frustration.

- Process without people: Even well-designed processes fail if employees resist adoption or lack understanding. Agents can handle routine approvals, but humans still make judgment calls. Ignoring the human element often leads to underutilization of the technology.

- People without technology: In highly dynamic environments, manual execution alone becomes a bottleneck. Skilled employees frustrated by repetitive tasks may disengage, leading to low morale or attrition. Introducing agents can relieve that load, but doing so without proper change management only deepens the disengagement.

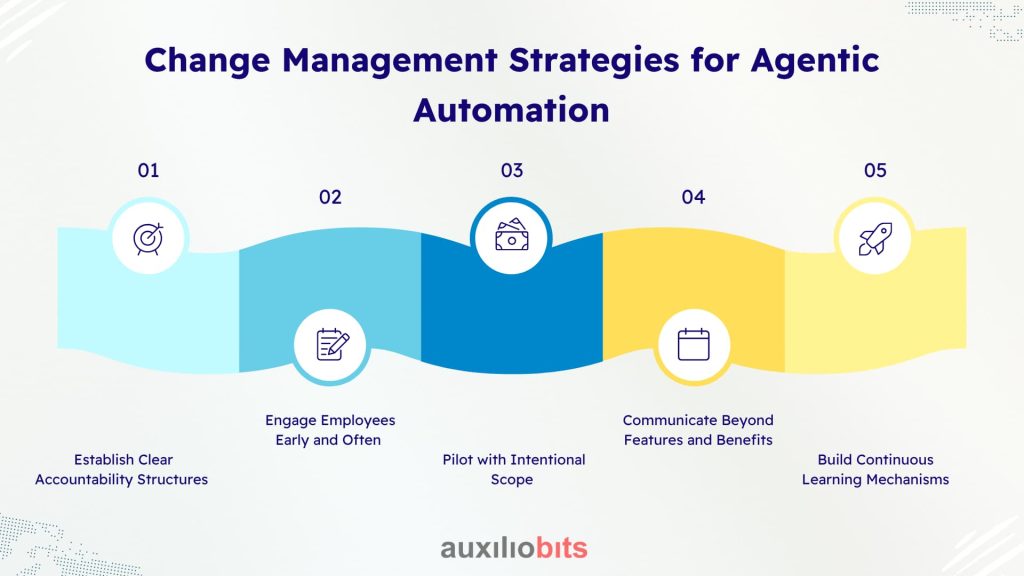

Change Management Strategies for Agentic Automation

Experience shows that adopting agentic solutions requires more than standard training or communication plans. Consider these nuanced strategies:

1. Establish Clear Accountability Structures

One recurring concern with agentic automation is accountability. Employees fear that autonomous decisions may expose them to risk. Organizations that define clear accountability frameworks—who reviews what, how exceptions are handled, and who signs off on outcomes—see smoother adoption. This is not about bureaucracy; it’s about trust.

- Assign ownership for agent oversight.

- Define escalation paths for exceptions.

- Maintain audit logs for transparency and compliance.
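As a rough illustration of the three points above, the sketch below writes one audit-log entry per agent decision, recording the owner and, where relevant, the first step of an escalation path. The field names, role names, and agent identifier are assumptions made for the example.

```python
import json
from datetime import datetime, timezone

# Hypothetical escalation path: first reviewer, then their manager.
ESCALATION_PATH = ["process_owner", "operations_manager"]


def audit_record(agent_id: str, decision: str, owner: str, escalated: bool) -> str:
    """Build one JSON audit-log line for an agent decision."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "decision": decision,
        "owner": owner,  # person accountable for overseeing this agent
        "escalated_to": ESCALATION_PATH[0] if escalated else None,
    }
    return json.dumps(record)


line = audit_record("invoice-agent-1", "approve", owner="ap_team_lead", escalated=False)
print(line)
```

One JSON object per line keeps the log easy to append to and easy to query later for compliance reviews.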

2. Engage Employees Early and Often

People resist change when they feel decisions are imposed. Engaging employees during the design and implementation phases fosters buy-in and provides practical insights into workflow nuances that technology architects may overlook.

- Conduct workshops to map existing pain points.

- Include employees in agent pilot testing.

- Collect iterative feedback and visibly act on it.

Research from the Harvard Business Review suggests that organizations that involve employees in automation pilots report up to 30% higher adoption rates than those that implement agents unilaterally.

3. Pilot with Intentional Scope

Agentic automation pilots often fail when organizations either oversimplify tasks or attempt enterprise-scale deployment too early. The goal should not only be to demonstrate technical capability but also to validate change readiness.

- Start with high-value, low-risk processes.

- Ensure the pilot scope allows observation of agent learning and human interaction.

- Measure both operational and behavioral KPIs (e.g., task accuracy, user satisfaction, speed of adoption).

4. Build Continuous Learning Mechanisms

Unlike RPA bots, agentic systems evolve. This requires embedding continuous learning into both the technology and organizational culture.

- Encourage human reviews to correct misaligned agent decisions.

- Monitor agent performance and fine-tune parameters over time.

- Create “learning sessions” where teams review exceptions and adapt processes accordingly.

It’s worth noting that continuous learning isn’t a silver bullet. Agents can adapt in ways that conflict with organizational norms if feedback loops are inconsistent or delayed. Effective change management must anticipate and manage these risks.

5. Communicate Beyond Features and Benefits

Most change programs focus on what the technology does and its ROI. In agentic automation adoption, communication must also address existential concerns, such as job security, role evolution, and decision-making authority.

- Highlight new opportunities for skill growth, rather than just efficiency gains.

- Clarify the agent’s role in augmenting—not replacing—human judgment.

- Use storytelling and real examples to show how agents improve outcomes without undermining careers.

Common Pitfalls and Lessons Learned

Even with a solid strategy, organizations stumble. Some recurrent pitfalls include:

- Over-automation: Pushing agents into areas with high ambiguity or political sensitivity can create conflict. Not every decision benefits from autonomy.

- Underestimating cultural resistance: Technological sophistication cannot substitute for leadership and communication. Resistance is often invisible until it manifests in disengagement or workarounds.

- Ignoring exception handling: Agentic systems are adaptive but not infallible. Neglecting exception protocols risks operational disruptions and undermines trust.

- Neglecting governance: Without oversight mechanisms, agents may learn suboptimal behaviors or make decisions misaligned with organizational priorities.

A real-world example is a financial services firm that deployed an agentic claims adjudication system. Initially, efficiency metrics skyrocketed, but complaints from employees about “opaque agent reasoning” grew. Once the organization implemented dashboards for transparency and weekly review meetings, adoption stabilized, and performance gains persisted. The lesson? Governance and transparency are as important as the code itself.

Metrics That Matter

Measuring success is tricky. Traditional KPIs like cost savings or processing time are necessary but insufficient. For agentic adoption, include behavioral and process metrics:

- Employee engagement: Surveys, adoption rates, and participation in feedback loops.

- Process accuracy and exception rates: How often agents make correct decisions and the frequency of escalations.

- Adaptation speed: How quickly agents learn from feedback and adjust workflows.

- Trust indices: Measures, often qualitative, that track employee confidence in agent decisions over time.

Organizations that combine operational and human metrics develop a more realistic picture of change effectiveness. After all, automation that alienates employees rarely sustains long-term ROI.
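Two of the process metrics listed above, accuracy and exception rate, are simple ratios over reviewed decisions. The following sketch assumes a hypothetical `Decision` record with two flags; it is one possible way to compute them, not a standard definition.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    escalated: bool  # the agent handed this case to a human
    correct: bool    # the outcome was judged correct on review


def summarize(decisions: list[Decision]) -> dict[str, float]:
    """Compute accuracy and exception rate; both are 0.0 for an empty list."""
    total = len(decisions)
    if total == 0:
        return {"accuracy": 0.0, "exception_rate": 0.0}
    return {
        "accuracy": sum(d.correct for d in decisions) / total,
        "exception_rate": sum(d.escalated for d in decisions) / total,
    }


sample = [
    Decision(escalated=False, correct=True),
    Decision(escalated=False, correct=True),
    Decision(escalated=True, correct=False),
    Decision(escalated=False, correct=True),
]
print(summarize(sample))  # {'accuracy': 0.75, 'exception_rate': 0.25}
```

Tracking these figures per week or per release would also give a crude view of adaptation speed, since a falling exception rate suggests the agent is learning from corrections.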

Integrating APA into Organizational DNA

True adoption goes beyond project timelines. Agents must eventually become embedded in organizational routines, decision-making culture, and governance frameworks. Achieving this requires:

- Leadership sponsorship: Executives must actively support agents’ integration and model their usage.

- Embedded training: Incorporate agent interaction into standard operating procedures and onboarding programs.

- Iterative governance: Continuously update rules, decision thresholds, and oversight protocols as agents evolve.

- Cultural reinforcement: Celebrate successes, recognize employee-agent collaboration, and normalize iterative improvement.

Final Thoughts

The journey toward agentic automation is as much about human adaptability as technological sophistication. Aligning people, process, and agents is not linear; it’s iterative, messy, and full of small contradictions. Employees may initially resist, yet become champions once trust and transparency are established. Processes may require untangling and modularization before agents can truly optimize them. Agents themselves will evolve, revealing both opportunities and blind spots that demand oversight.

Organizations that succeed are those that respect these nuances. They treat agentic adoption not as a deployment project, but as an ongoing orchestration of technology, human behavior, and operational excellence. And they remember: an agent may learn, but humans teach, guide, and contextualize. Skip that, and efficiency gains may be short-lived, no matter how advanced the automation.