Key Takeaways

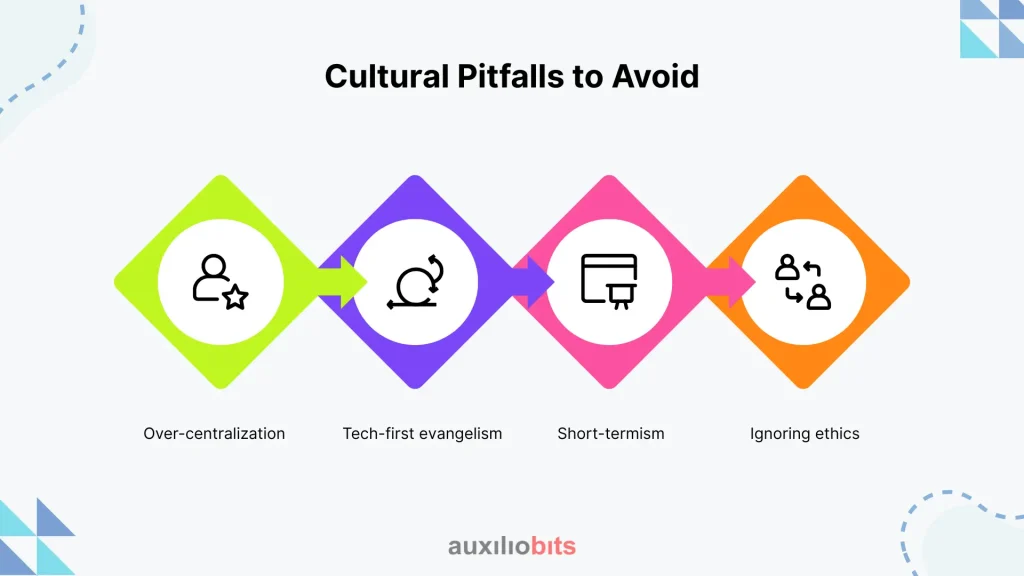

- Over-centralization, tech-first evangelism, short-term thinking, and neglecting ethics are among the most common reasons AI-first cultures fail.

- Employees don’t care about algorithmic accuracy; they care about tools that simplify daily work and make them more effective.

- Embedding AI into routines through literacy campaigns, recognition, and performance alignment works better than big-bang initiatives.

- Even the best AI strategies fail if organizational mindsets remain rooted in legacy ways of working.

- Success means AI is no longer optional; it’s embedded into processes, trusted by default, and reinforced through behavior and leadership.

For decades, the playbook in large enterprises has been clear: refine processes, add incremental technology, and experiment cautiously at the edges. That approach worked for ERP adoption, CRM rollouts, and even cloud migration. But AI is testing the limits of that model. Unlike past technology waves, AI is not just another system bolted onto the existing stack; it reshapes how decisions are made, who makes them, and how quickly organizations move.

And here lies the uncomfortable truth: many traditional enterprises have invested in AI pilots, but very few have actually built an AI-first culture. Instead, AI projects often sit in silos, disconnected from strategy, culture, and day-to-day work.

So, what does it mean to build an AI-first culture? It’s not about filling a slide deck with AI buzzwords. It’s about embedding intelligence into how people think, how teams operate, and how leaders steer organizations.


The Misconception: AI as a Department vs. AI as a Mindset

One of the most common mistakes in boardrooms is treating AI as a “thing” that belongs to a department. CIOs set up an AI Center of Excellence (CoE), recruit data scientists, and buy licenses for machine learning platforms. These are necessary steps, but many organizations stop there.

In practice, that model creates two camps:

- The “AI people,” who experiment with models, dashboards, and proofs of concept.

- The business side, which nods politely but keeps running processes the way it always has.

The result? A showcase demo at the next investor presentation, but no real cultural shift inside the enterprise. AI remains a lab project.

An AI-first culture flips this. Instead of confining AI to specialists, the goal is to make AI part of everyone’s work vocabulary—just as spreadsheets, email, and CRM became second nature over time.

Building Blocks of an AI-First Culture

There’s no universal recipe, but certain elements show up repeatedly in organizations that make the leap:

1. Leadership That Models Curiosity, Not Just Compliance

Executives often talk about “embracing AI” while outsourcing the learning to their teams. That sends the wrong signal. Employees notice when their leaders can’t articulate even the basics of how AI impacts their industry.

- A CFO who can ask informed questions about algorithmic bias builds more trust than one who simply signs off on budgets.

- A plant director who spends time with the analytics team signals that AI isn’t optional—it’s integral.

Curiosity is contagious, and in enterprises, it cascades top-down.

2. Incentive Systems That Don’t Punish Experimentation

Traditional performance reviews often reward predictability and penalize deviations. That mindset suffocates AI adoption. Why would a regional sales manager use a new AI lead-scoring system if missing quota could cost her bonus?

Rewiring incentives matters. Some firms add “AI adoption metrics” to KPIs. Others create safe zones where failure is not career-limiting. Either way, the message is clear: trying AI-driven methods is valued, not risky.

3. Reskilling Beyond Data Scientists

An AI-first culture is not about having a thousand PhDs in machine learning. It’s about equipping everyday professionals with AI literacy.

- Finance teams should know how anomaly detection works in expense monitoring.

- Procurement should grasp how contract analytics can surface risks.

- HR should understand the difference between a deterministic rule engine and a generative model.

None of this requires deep math. But it does require investment in practical, role-specific training that demystifies AI.

4. Embedding AI Into Workflows, Not Dashboards

AI adoption falters when insights live in dashboards that require logging into yet another system. Employees already juggle ERP, CRM, ticketing, and compliance tools. Adding a shiny new portal rarely sticks.

Cultural adoption accelerates when AI shows up inside the tools people already use—whether it’s a recommendation inside SAP, an anomaly alert in ServiceNow, or a suggested email draft in Outlook. AI should feel invisible, not like homework.

The Skepticism Barrier

Let’s be candid: most employees don’t resist AI because they’re lazy or technophobic. They resist because they’ve seen “transformational tools” come and go—each promising to save time but often adding more steps.

In banking, tellers were told that robotic process automation (RPA) bots would reduce their workload. Instead, they had to double-check the bots’ output. In healthcare, clinicians were told that AI would streamline charting. Instead, they found themselves correcting poorly transcribed notes.

An AI-first culture acknowledges this skepticism openly. Leaders admit past failures and explain how this time will be different—not with platitudes, but with proof. Transparency builds credibility; empty cheerleading destroys it.

The Role of Middle Management: Gatekeepers or Enablers

Here’s a dynamic often overlooked. Senior leaders may champion AI, and frontline staff may be curious. But the middle layer of management—those who control budgets, assign tasks, and enforce policies—can make or break adoption.

- Some act as gatekeepers, shielding their teams from disruption because they fear performance dips.

- Others become enablers, translating strategy into pragmatic steps and ensuring teams have breathing room to adapt.

One insurance company invested heavily in AI-driven claims triage. The models worked, but claims supervisors instructed adjusters to “just stick to the old process” because they didn’t want audit headaches. AI stalled not because of the technology but because middle managers weren’t aligned.

Lesson: You can’t build an AI-first culture by focusing only on executives and frontline staff. Middle management needs explicit empowerment, retraining, and reassurance.

Cultural Pitfalls to Avoid

Not every move towards an AI-first culture succeeds. Some patterns of failure repeat across industries:

- Over-centralization: A single “AI task force” controls everything. Business units feel sidelined, and adoption stalls.

- Tech-first evangelism: Talking endlessly about model accuracy while ignoring usability. Employees don’t care about AUC scores; they care whether a tool helps them finish their work faster.

- Short-termism: Expecting quarterly ROI from AI pilots. Culture doesn’t shift in three months; it compounds over years.

- Ignoring ethics: Deploying AI without addressing bias, explainability, and governance. Nothing erodes trust faster than a black-box system that appears unfair.

Practical Ways to Nudge Culture Forward

Culture change sounds abstract, but small actions accumulate. Some practical levers I’ve seen work:

- Redesign town halls: Instead of executives giving monologues, use AI agents to surface real-time employee questions from chat channels, and let leaders address them directly.

- Run AI literacy campaigns: Not as generic workshops, but embedded in actual work scenarios. A procurement team could analyze sample contracts with an AI tool, not sit through slides.

- Celebrate “AI in the wild”: Share stories of employees using AI in unexpected ways, even if small. Recognition signals value more than mandates.

- Adjust performance reviews: Include openness to AI-driven workflows as part of behavioral metrics, not just output metrics.

Why Culture Eats AI Strategy for Breakfast

The phrase “culture eats strategy for breakfast” is overused, but for AI it is painfully true. Strategy documents can outline AI ambitions, but if the culture resists, adoption grinds to a halt.

Think of Kodak, whose engineers invented the digital camera but whose culture couldn’t move beyond film. Or Blockbuster, whose executives saw streaming but remained culturally anchored in late fees. AI poses the same risk: you can have world-class algorithms and still lose if people don’t use them.

A Subtle But Crucial Shift

When enterprises get it right, AI stops being a project and becomes a reflex. A supply chain planner no longer asks, “Should I trust this forecast?”—they simply use it because it’s embedded and normalized. A compliance officer doesn’t debate whether AI should scan contracts; they assume it does.

This doesn’t mean blind trust. Healthy skepticism remains. But the cultural baseline shifts: AI is no longer optional or experimental—it’s part of the organizational fabric.

Final Thoughts

An AI-first culture is not about installing tools. It’s about reprogramming how organizations think and act. That’s messier than technology, but it’s also where the competitive advantage lies.

Enterprises that succeed will be those that treat AI not as a side initiative but as a shift in cultural DNA. And culture, unlike technology, can’t be licensed from a vendor—it has to be built, modeled, and reinforced every day.