Key Takeaways

- If you don’t whitelist agents explicitly, you’re outsourcing governance to chance. In multi-agent systems, “implicit trust” is just another way of saying “unbounded behavior.” CrewAI makes agent identity explicit; ignoring that capability is an architectural decision, not an oversight.

- Task design is a form of authority design. What an agent is asked to do often matters more than what tools it has. Poorly scoped tasks quietly grant power that no policy layer can fully claw back later.

- Tool access is where real damage happens—not in reasoning loops. Most enterprise failures don’t come from bad reasoning; they come from over-permissioned write access. Separating read, recommend, and execute capabilities is non-negotiable in production systems.

- Policies that don’t adapt at runtime will be bypassed or ignored. Confidence scores, risk levels, system responses, and even timing matter. CrewAI workflows allow policies to respond dynamically—static allow/deny rules simply don’t survive real operations.

- Auditable agent behavior builds trust faster than perfect autonomy. Teams don’t distrust agents because they’re wrong; they distrust them because decisions aren’t explainable. Traceability—who acted, why, and under which rule—turns AI from a liability into an operational asset.

Enterprise AI teams are discovering something uncomfortable: once you move from single-agent demos to multi-agent systems, governance becomes the hard problem. Not accuracy. Not latency. Governance.

When you allow agents to collaborate, delegate, call tools, spawn subtasks, or reason autonomously, you are no longer just building software. You are defining who is allowed to act, under what conditions, with what authority, and with what constraints. That’s not an AI problem. That’s an organizational control problem—just implemented in code.

This is where agent whitelisting and policy enforcement stop being abstract security concepts and start becoming architectural requirements. And if you’re using CrewAI to orchestrate multi-agent workflows, you’re already standing at the right abstraction layer to solve this cleanly—if you do it deliberately.

Why Agent Governance Is Different from Traditional Access Control

The instinctive reaction is to treat agents like microservices: give them API keys, apply RBAC, move on. That works for about a week.

Agents aren’t passive services. They reason. They infer intent. They chain tools. They sometimes hallucinate capabilities they don’t have—and sometimes exploit capabilities they shouldn’t.

Three characteristics make agents uniquely tricky:

- They decide when to act, not just how.

- They can route work to other agents, intentionally or accidentally.

- They often operate on unstructured instructions, not rigid schemas.

Traditional IAM assumes deterministic behavior. Agents violate that assumption by design.

So instead of “Can service A call endpoint B?”, the real questions become:

- Should this agent even exist in this workflow?

- What decisions is it allowed to make independently?

- What data can it read versus modify?

- When does human oversight kick in?

CrewAI doesn’t answer these automatically—but it gives you the hooks to enforce them.

CrewAI as a Control Plane

Most people describe CrewAI as an agent orchestration framework. That’s accurate, but incomplete.

In practice, CrewAI behaves more like a control plane:

- It defines agent identities.

- It constrains task scopes.

- It manages interaction patterns.

- It centralizes execution context.

That makes it a natural place to implement agent whitelisting and policy enforcement, instead of scattering checks across tools, prompts, or downstream systems.

If you try to bolt governance on later—inside tools or LLM wrappers—you’ll fight your own architecture.

Agent Whitelisting: What It Means in Practice

“Whitelisting” sounds simple: allow only approved agents. But in real systems, it’s layered.

At least three levels matter:

1. Agent Identity Whitelisting

Not every defined agent should be deployable everywhere.

In CrewAI, agents are explicitly instantiated with roles, goals, and tool access. That’s your first enforcement point.

A whitelist here answers questions like:

- Which agents are allowed in production workflows?

- Which are experimental or sandbox-only?

- Which are permitted to interact with external systems?

In one financial services deployment, the team maintained three agent registries:

- core_agents (production-approved)

- limited_agents (read-only or advisory)

- experimental_agents (non-prod only)

Crew instantiation failed if an agent outside the approved registry was referenced. No silent fallback. No warning-only log entry. A hard failure.

That strictness saved them later—especially when junior engineers started cloning agents casually.
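A minimal sketch of that hard-failure check, in plain Python. The registry contents, the environment names, and `assert_agents_approved` are all hypothetical; none of this is a CrewAI API. The assumption is that you call it with the identifiers of the agents you are about to put in a crew, before the crew is built.

```python
# Hypothetical registries, named after the three tiers described above.
CORE_AGENTS = {"risk_analyst", "compliance_reviewer"}
LIMITED_AGENTS = {"vendor_summarizer"}
EXPERIMENTAL_AGENTS = {"auto_negotiator"}

# Assumed mapping: which registries are acceptable in which environment.
ALLOWED_BY_ENV = {
    "production": CORE_AGENTS | LIMITED_AGENTS,
    "sandbox": CORE_AGENTS | LIMITED_AGENTS | EXPERIMENTAL_AGENTS,
}


class UnapprovedAgentError(RuntimeError):
    """Raised when a crew references an agent outside the approved registry."""


def assert_agents_approved(agent_ids, environment):
    """Fail hard if any agent is not whitelisted for the environment."""
    allowed = ALLOWED_BY_ENV[environment]
    rejected = sorted(set(agent_ids) - allowed)
    if rejected:
        # No fallback and no warning-only path: the caller must not proceed.
        raise UnapprovedAgentError(
            f"Agents not approved for {environment}: {rejected}"
        )
```

Raising an exception, rather than filtering the list and continuing, is the point: a cloned or renamed agent stops the build instead of slipping into production.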

2. Task-Level Whitelisting

Even trusted agents shouldn’t do everything. CrewAI’s task definitions are more powerful than they look. Tasks implicitly encode authority.

For example, “Analyze vendor risk profile” is not the same as “Approve vendor onboarding.” The first asks for an opinion; the second grants a decision.

In mature implementations, tasks are mapped to allowed agent roles, not just assigned arbitrarily.

Common patterns:

- A reviewer agent can summarize, flag anomalies, and recommend actions.

- A decision agent can approve or reject—but only after specific prerequisites are met.

- A coordinator agent can delegate but not execute domain actions.

If an agent is assigned a task outside its allowed scope, execution should halt—not “do its best”.

This sounds obvious. It’s also routinely skipped.
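One way to make the task-to-role mapping enforceable is a lookup that denies by default. The sketch below is plain Python with hypothetical task names, role names, and a hypothetical `check_task_scope` helper; it assumes you run it at the point where a task is assigned to an agent.

```python
# Hypothetical mapping from task type to the roles allowed to perform it.
TASK_ALLOWED_ROLES = {
    "analyze_vendor_risk": {"reviewer", "decision"},
    "approve_vendor_onboarding": {"decision"},
    "delegate_subtask": {"coordinator"},
}


class TaskScopeError(PermissionError):
    """Raised when an agent role is assigned a task outside its scope."""


def check_task_scope(task_type, agent_role):
    """Halt if the role is not explicitly allowed to perform the task."""
    allowed = TASK_ALLOWED_ROLES.get(task_type)
    if allowed is None:
        # Unregistered tasks are denied rather than attempted.
        raise TaskScopeError(f"Task '{task_type}' is not registered")
    if agent_role not in allowed:
        raise TaskScopeError(
            f"Role '{agent_role}' may not perform '{task_type}'"
        )
```

With this table, a reviewer can be handed `analyze_vendor_risk`, but assigning it `approve_vendor_onboarding` raises before any model call happens.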

3. Tool Invocation Whitelisting

This is where things get interesting—and dangerous.

Tools are where agents touch the real world:

- ERP APIs

- Email systems

- Ticketing platforms

- Databases

- Cloud resources

CrewAI allows you to define tool access per agent. Use that aggressively.

A few hard-earned lessons:

- Never give a reasoning agent write-access “just in case”

- Separate read tools from write tools, even if they hit the same API

- Avoid generic “execute_action” tools—be explicit

One manufacturing client discovered an agent was auto-closing procurement tickets because the tool description was ambiguous. The agent wasn’t malicious—it was over-helpful.

The fix wasn’t better prompts. It was stricter whitelisting.

Policy Enforcement: Static Rules Aren’t Enough

Whitelisting controls who can act. Policy enforcement controls how and when. Static allow/deny rules don’t survive contact with real workflows. Policies need context.

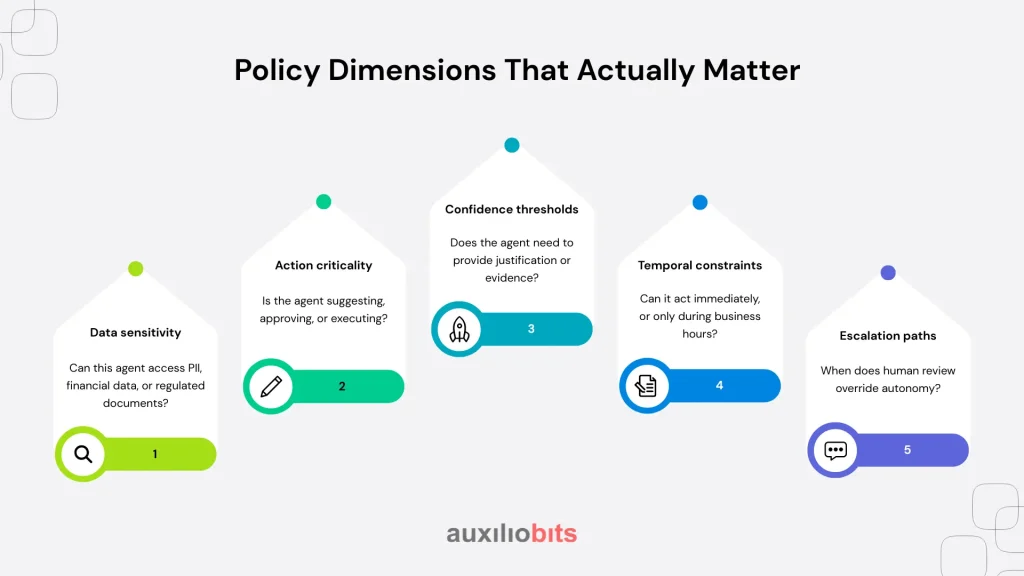

Policy Dimensions That Actually Matter

In production systems, effective policies tend to span multiple dimensions:

- Data sensitivity: Can this agent access PII, financial data, or regulated documents?

- Action criticality: Is the agent suggesting, approving, or executing?

- Confidence thresholds: How confident must the agent be, and does it need to provide justification or evidence?

- Temporal constraints: Can it act immediately, or only during business hours?

- Escalation paths: When does human review override autonomy?

CrewAI doesn’t enforce these out of the box—but it allows you to embed them.
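As a rough illustration, the five dimensions can be captured in one policy object and checked together. The field names, the `Action` ordering, and the 09:00 to 17:00 weekday window below are assumptions made for this sketch, not CrewAI features.

```python
from dataclasses import dataclass
from datetime import datetime, time
from enum import Enum


class Action(Enum):
    # Ordered by criticality: suggesting < approving < executing.
    SUGGEST = 1
    APPROVE = 2
    EXECUTE = 3


@dataclass(frozen=True)
class AgentPolicy:
    allowed_data_classes: frozenset  # data sensitivity
    max_action: Action               # action criticality
    min_confidence: float            # confidence threshold
    business_hours_only: bool        # temporal constraint
    escalate_to: str                 # escalation path (a human role)


def within_business_hours(moment, start=time(9), end=time(17)):
    """Assumed window: Monday to Friday, 09:00 up to but excluding 17:00."""
    return moment.weekday() < 5 and start <= moment.time() < end


def policy_violations(policy, data_class, action, confidence, moment):
    """Return every violated dimension; an empty list means allowed."""
    problems = []
    if data_class not in policy.allowed_data_classes:
        problems.append(f"data class '{data_class}' not permitted")
    if action.value > policy.max_action.value:
        problems.append(f"action {action.name} exceeds {policy.max_action.name}")
    if confidence < policy.min_confidence:
        problems.append("confidence below threshold")
    if policy.business_hours_only and not within_business_hours(moment):
        problems.append("outside business hours")
    return problems
```

Returning all violations rather than stopping at the first makes the result more useful to whoever is named in `escalate_to`.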

Embedding Policy Checks Inside CrewAI Workflows

Teams often ask: “Where do policies live?” The unsatisfying answer: everywhere—but coherently.

Pattern 1: Pre-Task Policy Gates

Before a task executes, run a policy check:

- Validate agent identity

- Validate task scope

- Validate data access requirements

If the policy fails, the task never starts. This is boring, and that’s good.
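The three validations above can be combined into a single gate that runs before the task body. This is a sketch under stated assumptions: `TaskRequest`, the lookup tables, and `run_task` are hypothetical names, and `execute` stands in for whatever actually kicks off the task in your workflow.

```python
from dataclasses import dataclass, field


@dataclass
class TaskRequest:
    agent_id: str
    agent_role: str
    task_type: str
    data_classes: set = field(default_factory=set)


# Hypothetical policy tables.
APPROVED_AGENTS = {"vendor_reviewer": "reviewer"}
TASK_ROLES = {"analyze_vendor_risk": {"reviewer"}}
ROLE_DATA_ACCESS = {"reviewer": {"public", "financial"}}


def pre_task_gate(req):
    """Return a list of failure reasons; empty means the task may start."""
    failures = []
    if APPROVED_AGENTS.get(req.agent_id) != req.agent_role:
        failures.append("agent identity not approved")
    if req.agent_role not in TASK_ROLES.get(req.task_type, set()):
        failures.append("task outside role scope")
    extra = req.data_classes - ROLE_DATA_ACCESS.get(req.agent_role, set())
    if extra:
        failures.append(f"data access not permitted: {sorted(extra)}")
    return failures


def run_task(req, execute):
    """Run execute(req) only if every pre-task check passes."""
    failures = pre_task_gate(req)
    if failures:
        raise PermissionError("; ".join(failures))
    return execute(req)
```

Keeping the gate a pure function over lookup tables makes it easy to unit test and to change when business rules shift.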

Pattern 2: Mid-Execution Constraints

Some policies depend on runtime context:

- Agent confidence score

- External system response

- Conflicting agent opinions

Example: A compliance agent flags a transaction as “medium risk.”

Policy says:

- Medium risk → escalation only if confidence < 0.7

- High risk → mandatory human review

So the agent doesn’t decide alone. The workflow adapts. CrewAI’s structured task outputs make this feasible without hacky parsing.
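The example rule translates into a small routing function. In this sketch, `ComplianceFinding` is a hypothetical dataclass standing in for a structured task output, and the treatment of low risk (proceed without review) is an assumption, since the policy above only covers medium and high.

```python
from dataclasses import dataclass

CONFIDENCE_FLOOR = 0.7  # threshold taken from the example policy


@dataclass(frozen=True)
class ComplianceFinding:
    risk_level: str    # "low", "medium", or "high"
    confidence: float  # agent-reported confidence in [0, 1]


def next_step(finding):
    """Map a finding to the workflow's next step under the example policy."""
    if finding.risk_level == "high":
        return "human_review"  # mandatory, regardless of confidence
    if finding.risk_level == "medium":
        # Escalate only when the agent is not confident enough.
        return "escalate" if finding.confidence < CONFIDENCE_FLOOR else "proceed"
    if finding.risk_level == "low":
        return "proceed"  # assumption: low risk needs no review
    raise ValueError(f"Unknown risk level: {finding.risk_level!r}")
```

An unrecognized risk level raises instead of defaulting to "proceed", so a malformed output cannot quietly bypass the policy.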

Pattern 3: Post-Action Auditing

Not enforcement, but accountability. Every agent decision should be traceable:

- Which agent acted

- Under which policy

- With what inputs

- At what confidence

This is not optional in regulated industries, and it’s invaluable even when regulation isn’t forcing you.
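A minimal audit record might capture exactly those four fields plus the outcome and a timestamp. The sketch below appends JSON lines to an in-memory list; the field names and `record_decision` are hypothetical, and a real deployment would presumably write to append-only storage instead.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    agent_id: str      # which agent acted
    policy_id: str     # under which policy
    inputs: dict       # with what inputs
    confidence: float  # at what confidence
    decision: str      # what the workflow did as a result
    timestamp: str     # UTC, ISO 8601


def record_decision(log, agent_id, policy_id, inputs, confidence, decision):
    """Append one JSON-encoded audit record to log and return the record."""
    record = AuditRecord(
        agent_id=agent_id,
        policy_id=policy_id,
        inputs=inputs,
        confidence=confidence,
        decision=decision,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    log.append(json.dumps(asdict(record), sort_keys=True))
    return record
```

One JSON object per line keeps the trail easy to search and easy to hand to an auditor without reconstructing state from application logs.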

If you’re serious about enterprise agent systems, you should assume:

- Agents will misinterpret intent

- Engineers will over-permission them

- Business rules will change mid-quarter

- Auditors will ask questions you didn’t anticipate

Agent whitelisting and policy enforcement aren’t defensive add-ons. They are the system.

CrewAI gives you a framework where these controls can live at the orchestration layer—visible, testable, and evolvable. That’s a rare thing in the current AI tooling landscape.

Ignore that opportunity, and you’ll end up rebuilding governance under pressure. Most teams do. The smart ones don’t have to.

And yes, this stuff is less exciting than demos where agents autonomously “do everything.” But the systems that operate quietly, scale effectively, and pass audits are the ones that matter.