Key Takeaways

- Context isn’t just “give the LLM all the data”—it’s about precision, layering, and timing.

- Task-level context blends global rules, process state, and local details for each step.

- Overloading the model can be as harmful as under-informing it.

- Orchestration outside the LLM—via controllers, state machines, and shared memory—is essential.

- Compliance and governance are part of context design, not an afterthought.

Some AI assistants sound clever in a demo and then get hopelessly lost in production. The root cause? They treat each task like a blank slate—forgetting that work rarely happens in isolation. Enterprise processes are messy, multi-step, and full of context that matters at the micro-level.

Building LLM-based process assistants that understand task-level context isn’t just a nice-to-have—it’s the difference between a system that politely rereads the manual every time and one that feels like an embedded colleague who “remembers how we do it here.”


What “Task-Level Context” Actually Means

Most people conflate context with memory. They are related but not interchangeable:

- Memory is long-term—past conversations, documents, and customer history.

- Context is situational—what’s relevant right now for this specific step in the workflow.

Task-level context is about narrowing the scope of awareness to what’s necessary for this action while still grounding it in the larger process state.

Imagine onboarding a vendor in an ERP system. There’s:

- The global context, including procurement policy, supplier history, and category rules.

- The process context—which stage the onboarding is at (e.g., contract review).

- The task context, meaning the specific details the assistant must validate in the form it is filling out right now.

If you hand an LLM only the global policy, it might lecture you on procurement ethics when you just asked for a missing tax ID. Give it only the task data, and it may approve a supplier that violates category restrictions. The skill is in blending both, without drowning the model in irrelevant information.
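To make the two failure modes concrete, here is a toy check in Python. It is a minimal sketch, and the category table and field names are hypothetical. The missing tax ID is visible from the task data alone. The category restriction only shows up when the relevant slice of global policy is also in view. The same split applies to what an LLM assistant needs in its prompt.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VendorForm:
    """Task-level data: the form the assistant is filling out right now."""
    name: str
    tax_id: Optional[str]
    category: str
    buying_unit: str


# Global context (hypothetical): supplier categories each buying unit may use.
ALLOWED_CATEGORIES = {
    "facilities": {"cleaning", "maintenance"},
    "it": {"it-hardware", "software"},
}


def review_vendor(form: VendorForm, allowed: dict) -> list:
    """Return issues that need both the task data and the global rules to find."""
    issues = []
    # Visible from the task data alone.
    if not form.tax_id:
        issues.append("missing tax ID")
    # Only visible with the global category rules.
    if form.category not in allowed.get(form.buying_unit, set()):
        issues.append(f"category '{form.category}' not allowed for {form.buying_unit}")
    return issues


form = VendorForm("Acme Supplies", None, "it-hardware", "facilities")
print(review_vendor(form, ALLOWED_CATEGORIES))
# ['missing tax ID', "category 'it-hardware' not allowed for facilities"]
```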

Why It’s Harder Than It Sounds

In principle, you could just “stuff” all the relevant data into the prompt. In practice:

- Token limits exist. Even with 128k-token context windows, careless design can waste much of that space on material the model will never use.

- Noise-to-signal ratio matters. LLMs can get distracted by details that shouldn’t influence the current step.

- Dynamic state changes are tricky. By the time the assistant reaches task 7, some earlier assumptions may no longer hold.

- Real processes are ambiguous. One step’s “output” is often another step’s “guess,” so the assistant has to handle uncertainty.
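One common way to protect the signal-to-noise ratio is to rank candidate context items by relevance to the current step and pack them into a fixed token budget. The sketch below uses a crude four-characters-per-token estimate; in practice you would use your model's real tokenizer. The priority values are hypothetical. This illustrates the idea and is not a recommended budget.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    label: str
    text: str
    priority: int  # lower number = more relevant to the current step


def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly 4 characters per token for English text.
    return max(1, len(text) // 4)


def pack_context(items: list, budget: int) -> list:
    """Greedily keep the most relevant items that still fit within the budget."""
    chosen, used = [], 0
    for item in sorted(items, key=lambda i: i.priority):
        cost = estimate_tokens(item.text)
        if used + cost <= budget:
            chosen.append(item)
            used += cost
    return chosen


items = [
    ContextItem("full ticket history", "x" * 400, priority=3),
    ContextItem("current form fields", "y" * 40, priority=0),
    ContextItem("relevant policy clause", "z" * 80, priority=1),
]
print([i.label for i in pack_context(items, budget=50)])
# ['current form fields', 'relevant policy clause']
```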

Real-World Parallel: The Good Ops Manager

Think of a seasoned operations manager. They don’t recite the company handbook for every decision. They keep a running mental model:

- Where is the process right now?

- Who’s waiting on what?

- What’s urgent versus routine?

- What quirks might the system or the people involved introduce?

A well-designed process assistant should behave the same way—zooming in when a specific decision is needed and zooming out when a broader checkpoint is required.

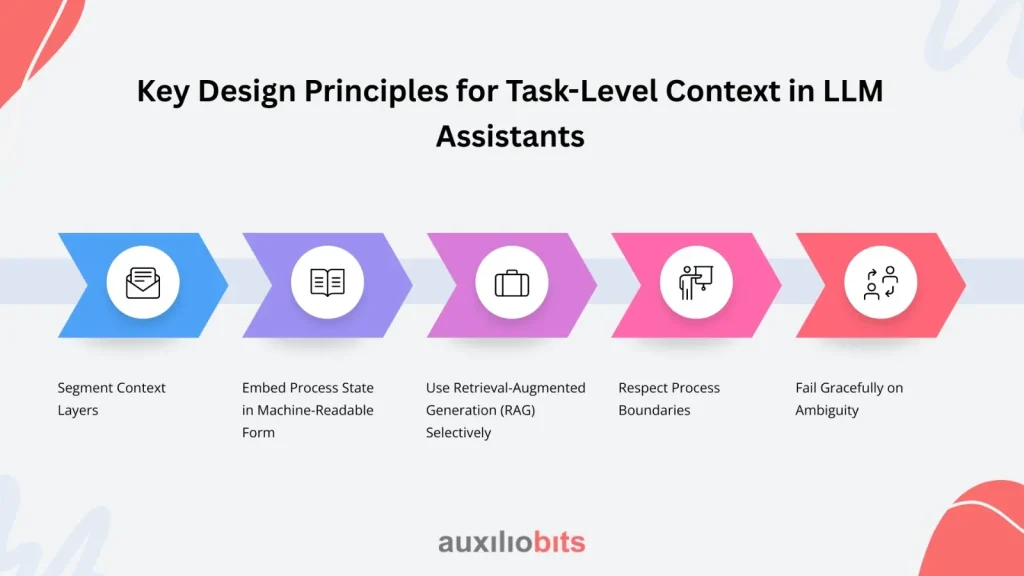

Key Design Principles for Task-Level Context in LLM Assistants

1. Segment Context Layers

Don’t treat “context” as a single blob of information. Maintain layered retrieval:

- Static knowledge—policies, templates, and procedural rules.

- Dynamic process state—which step we’re in, what’s been completed, and outstanding blockers.

- Ephemeral task data—the form fields, the current customer record, and the just-uploaded invoice.

By keeping them separate, you can control which layer gets injected at which stage—rather than overwhelming the LLM with irrelevant historical chatter.
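Here is a minimal Python sketch of layered context. Three dictionaries stand in for the layers, and a hypothetical stage-to-layers table decides what gets injected. A real system would back each layer with its own store, such as a document index, a workflow engine, or session data. The point is only that selection happens per stage, before anything reaches the model.

```python
from dataclasses import dataclass, field


@dataclass
class ContextLayers:
    static: dict = field(default_factory=dict)     # policies, templates, procedural rules
    process: dict = field(default_factory=dict)    # current step, completed steps, blockers
    ephemeral: dict = field(default_factory=dict)  # form fields, current record, uploads


# Hypothetical mapping: which layers each workflow stage is allowed to see.
LAYERS_BY_STAGE = {
    "data_entry": ("ephemeral",),
    "status_update": ("process",),
    "contract_review": ("static", "process", "ephemeral"),
}


def assemble(layers: ContextLayers, stage: str) -> str:
    """Build the prompt context for one stage from only the layers it needs."""
    # Unknown stages fall back to process state plus task data, not the full policy set.
    selected = LAYERS_BY_STAGE.get(stage, ("process", "ephemeral"))
    lines = []
    for name in selected:
        for key, value in getattr(layers, name).items():
            lines.append(f"[{name}] {key}: {value}")
    return "\n".join(lines)


layers = ContextLayers(
    static={"category_rule": "IT hardware requires two quotes"},
    process={"step": "contract_review", "blocker": "NDA unsigned"},
    ephemeral={"tax_id": "missing"},
)
print(assemble(layers, "data_entry"))  # [ephemeral] tax_id: missing
```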

2. Embed Process State in Machine-Readable Form

Free-text notes are friendly for humans but fuzzy for models. When possible, store state in structured fields (JSON, key-value pairs, or even database lookups) and translate only the relevant parts into natural language when passing to the LLM.

This avoids the common trap of passing a “meeting minutes” blob to the assistant and hoping it figures out which decision was final.
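Here is one way that translation step might look in Python. The sketch assumes state is kept as JSON with an explicit `final` flag on each decision; the schema is hypothetical. The renderer passes along only the current step, completed work, final decisions, and blockers. Superseded decisions never reach the model.

```python
import json

# Hypothetical process state stored as structured data rather than free-text notes.
state_json = """{
  "process": "vendor_onboarding",
  "step": "contract_review",
  "completed": ["kyc_check", "bank_details"],
  "decisions": [
    {"topic": "payment_terms", "value": "net 45", "final": false},
    {"topic": "payment_terms", "value": "net 30", "final": true}
  ],
  "blockers": ["signed NDA not received"]
}"""


def render_for_llm(state: dict) -> str:
    """Translate only the parts relevant to the current step into short prose."""
    final = {d["topic"]: d["value"] for d in state["decisions"] if d["final"]}
    lines = [f"Current step: {state['step']}."]
    lines.append("Completed: " + ", ".join(state["completed"]) + ".")
    for topic, value in final.items():
        lines.append(f"Final decision on {topic}: {value}.")
    if state["blockers"]:
        lines.append("Open blockers: " + "; ".join(state["blockers"]) + ".")
    return "\n".join(lines)


print(render_for_llm(json.loads(state_json)))
```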

3. Use Retrieval-Augmented Generation (RAG) Selectively

The temptation is to run every task query through RAG. But not every step needs retrieval—sometimes the best answer comes from the process memory alone.

We’ve seen claims-processing assistants add needless latency by pulling the entire policy manual when the current step only requires checking a customer’s address format.
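Selective retrieval can be as simple as a per-step routing table. This Python sketch uses hypothetical step names and a deliberately simplistic US-style address pattern in place of a real validator. A step with a local check is answered without calling the retriever. Everything else falls through to retrieval.

```python
import re
from typing import Callable, Dict

# Deliberately simple stand-in validator: "..., ST 12345" or "..., ST 12345-6789" at the end.
US_ADDRESS_TAIL = re.compile(r",\s*[A-Z]{2}\s+\d{5}(-\d{4})?$")

# Hypothetical routing table: steps that can be answered from process data alone.
LOCAL_CHECKS: Dict[str, Callable[[str], bool]] = {
    "address_format_check": lambda text: bool(US_ADDRESS_TAIL.search(text.strip())),
}


def handle_step(step: str, payload: str, retrieve: Callable[[str], list]) -> dict:
    check = LOCAL_CHECKS.get(step)
    if check is not None:
        # No retrieval call: skip the latency and the irrelevant policy text.
        return {"step": step, "retrieved": False, "result": check(payload)}
    # Unknown or knowledge-heavy steps fall through to retrieval.
    return {"step": step, "retrieved": True, "result": retrieve(payload)}


def fake_retriever(query: str) -> list:
    # Placeholder for a real RAG call (vector search, keyword search, etc.).
    return [f"policy passage relevant to: {query}"]


print(handle_step("address_format_check", "12 Main St, Springfield, IL 62701", fake_retriever))
# {'step': 'address_format_check', 'retrieved': False, 'result': True}
```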

4. Respect Process Boundaries

A subtle but critical point: Just because an LLM can jump ahead and complete downstream tasks doesn’t mean it should.

In multi-agent orchestration, enforcing step locks ensures that later tasks aren’t executed with incomplete or unverified inputs. Task-level context works best when it’s not contaminated by “future knowledge” the process hasn’t earned yet.
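A step lock can live in the orchestration layer rather than in the prompt. This Python sketch assumes a strictly linear process. Every earlier step must be marked verified, by a human or an automated checker, before a later step may run. The class and method names are hypothetical.

```python
from typing import Callable, List


class StepLockError(RuntimeError):
    """Raised when a step is attempted before its prerequisites are verified."""


class Workflow:
    def __init__(self, steps: List[str]) -> None:
        self.steps = steps
        self.verified = set()

    def prerequisites(self, step: str) -> List[str]:
        # Linear process assumption: every earlier step is a prerequisite.
        return self.steps[: self.steps.index(step)]

    def verify(self, step: str) -> None:
        # Called by a human reviewer or a checker once a step's output is confirmed.
        self.verified.add(step)

    def run(self, step: str, action: Callable[[], object]) -> object:
        missing = [s for s in self.prerequisites(step) if s not in self.verified]
        if missing:
            raise StepLockError(f"'{step}' is locked; unverified: {', '.join(missing)}")
        return action()


wf = Workflow(["extract", "match", "approve"])
wf.verify("extract")
try:
    wf.run("approve", lambda: "approved")
except StepLockError as err:
    print(err)  # 'approve' is locked; unverified: match
```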

5. Fail Gracefully on Ambiguity

Sometimes, the assistant won’t have enough context—because the process is missing data, or because human judgment is genuinely required.

The best design is not to guess wildly, but to:

- Flag the gap.

- Suggest possible sources.

- Log the ambiguity for audit.

Too many deployments fail because the assistant fabricates answers rather than surfacing the missing link.
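Here is a minimal Python sketch of that pattern. It assumes a hypothetical `KNOWN_SOURCES` table that maps each field to the places it can usually be found. When a field is missing, the function does not invent a value. It returns a structured "needs input" result that carries the gap and the suggested sources, and it writes an audit entry through the standard `logging` module.

```python
import logging
from dataclasses import dataclass, field
from typing import Any, List

audit_log = logging.getLogger("assistant.audit")  # hypothetical logger name


@dataclass
class Gap:
    field_name: str
    reason: str
    suggested_sources: List[str] = field(default_factory=list)


@dataclass
class StepResult:
    status: str  # "ok" or "needs_input"
    value: Any = None
    gaps: List[Gap] = field(default_factory=list)


# Hypothetical map of where a missing field can usually be found.
KNOWN_SOURCES = {
    "tax_id": ["supplier portal registration", "W-9 upload"],
    "po_number": ["ERP purchase order lookup", "requester's email thread"],
}


def resolve_field(record: dict, name: str) -> StepResult:
    value = record.get(name)
    if value not in (None, ""):
        return StepResult(status="ok", value=value)
    # Flag the gap and suggest sources instead of guessing a plausible value.
    gap = Gap(name, "missing from record", KNOWN_SOURCES.get(name, []))
    # Log the ambiguity so the decision trail is auditable.
    audit_log.warning("gap field=%s reason=%s sources=%s",
                      gap.field_name, gap.reason, gap.suggested_sources)
    return StepResult(status="needs_input", gaps=[gap])
```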

Common Failure Modes in the Wild

LLM process assistants often stumble in predictable ways:

- Overgeneralization—treating customer onboarding in healthcare the same as in manufacturing because the surface steps look similar.

- Memory leakage—carrying irrelevant details from a previous customer or case into the current task.

- Context decay—losing track of earlier decisions after a long process run.

- Prompt bloat—stuffing the full CRM export into the prompt “just to be safe,” slowing responses and confusing the model.

Fixing these isn’t just prompt engineering—it’s workflow engineering.
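Memory leakage and context decay can both be addressed through how memory is scoped. In this Python sketch, the class and method names are hypothetical. All memory is keyed by case ID, so one customer's notes can't surface in another case's context. Final decisions are pinned, so they are always included even when older notes are trimmed.

```python
from collections import defaultdict


class CaseMemory:
    """Per-case memory: nothing is shared across case IDs."""

    def __init__(self) -> None:
        self._notes = defaultdict(list)      # case_id -> list of free-text notes
        self._decisions = defaultdict(dict)  # case_id -> {decision name: value}

    def note(self, case_id: str, text: str) -> None:
        self._notes[case_id].append(text)

    def decide(self, case_id: str, key: str, value: str) -> None:
        self._decisions[case_id][key] = value

    def context_for(self, case_id: str, recent_notes: int = 3) -> str:
        # Decisions are pinned (always included) to counter context decay;
        # only the latest notes are kept to avoid prompt bloat.
        lines = [f"decision {k} = {v}" for k, v in self._decisions.get(case_id, {}).items()]
        lines += [f"note: {n}" for n in self._notes.get(case_id, [])[-recent_notes:]]
        return "\n".join(lines)

    def close(self, case_id: str) -> None:
        # Drop everything for the case once the process run ends.
        self._notes.pop(case_id, None)
        self._decisions.pop(case_id, None)


mem = CaseMemory()
mem.decide("A-17", "shipping_route", "expedited")
for i in range(5):
    mem.note("A-17", f"follow-up {i}")
mem.note("B-02", "customer B asked for a credit hold")
print(mem.context_for("A-17"))
```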

A Case Example: Invoice Validation in a Logistics Company

We worked with a logistics provider whose AP team spent hours checking freight invoices against delivery confirmations. The LLM assistant was supposed to:

- Extract key invoice data.

- Match against shipment records.

- Flag discrepancies.

On paper, easy. In reality:

- Some invoices had reference numbers buried in the “notes” field.

- The shipment system sometimes logged deliveries under partner IDs instead of customer IDs.

- Currency conversions were not always recorded at the invoice date.

The first version of the assistant failed frequently because it saw each invoice in isolation. By adding task-level context—such as the shipment mapping rules for that customer, the typical invoice anomalies for that route, and the partner’s unique field formatting—accuracy jumped from 72% to 94%.
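The three fixes in that case map naturally onto deterministic preprocessing plus per-customer context. The Python sketch below is illustrative only. The reference format, the partner-to-customer mapping, and the exchange rate are hypothetical values invented for the example, not the client's data. The code recovers references from the notes field and resolves partner IDs to customer IDs. It also refuses to convert currency unless a rate exists for the invoice date.

```python
import re
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Invoice:
    invoice_no: str
    reference: Optional[str]
    notes: str
    party_id: str
    amount: float
    currency: str
    invoice_date: date


@dataclass
class Shipment:
    customer_id: str
    amount_usd: float


# Hypothetical per-customer context supplied for this task.
PARTNER_TO_CUSTOMER = {"PTR-778": "CUST-1042"}
FX_TO_USD = {("EUR", date(2024, 3, 1)): 1.08}  # illustrative rate, keyed by invoice date
REF_PATTERN = re.compile(r"\bSHP-\d{6}\b")      # assumed shipment reference format


def reference_of(inv: Invoice) -> Optional[str]:
    """Use the reference field, or fall back to searching the notes field."""
    if inv.reference:
        return inv.reference
    found = REF_PATTERN.search(inv.notes)
    return found.group(0) if found else None


def check_invoice(inv: Invoice, shipments: dict, tolerance: float = 0.01) -> list:
    ref = reference_of(inv)
    shipment = shipments.get(ref) if ref else None
    if shipment is None:
        return [f"{inv.invoice_no}: no shipment for reference {ref!r}"]
    issues = []
    # Shipments may be logged under a partner ID; map it to the customer ID first.
    customer = PARTNER_TO_CUSTOMER.get(inv.party_id, inv.party_id)
    if customer != shipment.customer_id:
        issues.append(f"{inv.invoice_no}: billed to {customer}, shipped for {shipment.customer_id}")
    # Only convert with a rate recorded for the invoice date; otherwise flag it.
    rate = 1.0 if inv.currency == "USD" else FX_TO_USD.get((inv.currency, inv.invoice_date))
    if rate is None:
        issues.append(f"{inv.invoice_no}: no {inv.currency} rate recorded for {inv.invoice_date}")
    elif abs(inv.amount * rate - shipment.amount_usd) > tolerance * shipment.amount_usd:
        issues.append(f"{inv.invoice_no}: amount differs by more than {tolerance:.0%}")
    return issues


shipments = {"SHP-004512": Shipment("CUST-1042", 1080.0)}
inv = Invoice("INV-9", None, "Ref SHP-004512 per dispatcher", "PTR-778",
              1000.0, "EUR", date(2024, 3, 1))
print(check_invoice(inv, shipments))  # []
```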

Orchestration Patterns for Task-Level Context

Task-level context isn’t managed by the LLM alone—it requires orchestration outside the model. Patterns we’ve seen work:

- Context Controllers—middleware that assembles the precise context package for the LLM at each step.

- State Machines—explicit process maps that dictate which context layers are active when.

- Shared Memory Pools—a centralized store where intermediate decisions, extracted fields, and retrieved docs live for the duration of a process run.

In multi-agent setups, one agent may be dedicated to maintaining and distributing context, acting like the project manager of the group.
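The three patterns often work together. In this Python sketch, the state names, layer names, and classes are all hypothetical. A small state machine declares which layers each state may see. A shared memory pool holds everything produced during the run. A context controller assembles the package for the current state only.

```python
from dataclasses import dataclass, field

# Hypothetical state machine: next state and the context layers each state may see.
STATES = {
    "extract": {"next": "match", "layers": ["task"]},
    "match": {"next": "review", "layers": ["task", "process"]},
    "review": {"next": None, "layers": ["policy", "process", "task"]},
}


@dataclass
class SharedMemory:
    """Per-run pool for extracted fields, intermediate decisions, and retrieved docs."""
    layers: dict = field(default_factory=lambda: {"task": {}, "process": {}, "policy": {}})

    def put(self, layer: str, key: str, value) -> None:
        self.layers[layer][key] = value


class ContextController:
    """Assembles the context package for whichever state the run is in."""

    def __init__(self, memory: SharedMemory, start: str = "extract") -> None:
        self.memory = memory
        self.state = start

    def package(self) -> dict:
        allowed = STATES[self.state]["layers"]
        # Shallow copy per layer, so edits to the package don't write back into shared memory.
        return {name: dict(self.memory.layers[name]) for name in allowed}

    def advance(self) -> None:
        nxt = STATES[self.state]["next"]
        if nxt is None:
            raise RuntimeError("process run already complete")
        self.state = nxt


memory = SharedMemory()
memory.put("task", "invoice_no", "INV-9")
memory.put("process", "matched_shipment", "SHP-004512")
memory.put("policy", "amount_tolerance", "1%")
controller = ContextController(memory)
print(controller.package())  # {'task': {'invoice_no': 'INV-9'}}
```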

When Not to Over-Engineer

Some tasks simply don’t benefit from heavy context injection. For example, a “generate thank-you email” step in a support process doesn’t need the full ticket history—just the resolution summary and customer name.

This is where judgment matters: knowing when to let the model be lightweight and when to hand it the full playbook.

Guardrails and Compliance Considerations

Context-rich assistants can accidentally expose sensitive information if boundaries aren’t enforced.

Good practice includes:

- Redaction filters before passing context to the LLM.

- Access controls so an assistant in the marketing workflow can’t see finance records.

- Process-specific vocabularies, so that sensitive identifiers aren’t accidentally generated in outputs.

One bank we worked with had to roll back a pilot because the assistant started including unrelated account numbers it “remembered” from earlier tasks. The fix was as much about data governance as about LLM design.
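Here is a minimal Python sketch of the first two safeguards, redaction filters and access controls. It uses a hypothetical workflow-to-domain access table and two illustrative regular expressions. Pattern-based redaction like this is a coarse first line of defense, not a complete solution for sensitive data. It would typically need to be combined with field-level tagging at the source.

```python
import re

# Hypothetical access policy: data domains each workflow may read.
WORKFLOW_ACCESS = {
    "marketing": {"campaigns", "crm_contacts"},
    "finance": {"ledger", "invoices", "crm_contacts"},
}

# Illustrative patterns only; tune them to the identifiers your systems actually use.
REDACTIONS = [
    (re.compile(r"\b\d{8,17}\b"), "[ACCOUNT]"),               # long digit runs, e.g. account numbers
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"), "[EMAIL]"),  # email addresses
]


def fetch_for_workflow(workflow: str, domain: str, store: dict) -> str:
    """Enforce access control before any data can enter the context."""
    if domain not in WORKFLOW_ACCESS.get(workflow, set()):
        raise PermissionError(f"workflow '{workflow}' may not read '{domain}'")
    return store[domain]


def redact(text: str) -> str:
    """Replace sensitive-looking substrings before text is passed to the LLM."""
    for pattern, token in REDACTIONS:
        text = pattern.sub(token, text)
    return text


store = {"ledger": "Refund 250.00 to acct 123456789012, notify jane.doe@example.com"}
print(redact(fetch_for_workflow("finance", "ledger", store)))
# Refund 250.00 to acct [ACCOUNT], notify [EMAIL]
```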

What Does This Mean for Enterprise Teams?

If you’re building or buying an LLM-based process assistant, ask hard questions:

- How does it decide what context to use for each step?

- Is the process state tracked externally or only in the conversation log?

- How does it handle missing or conflicting data?

- Can you audit the exact context that was passed for any given decision?
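One way to make that last question answerable is to log a fingerprint of the exact context package with every model call. This Python sketch serializes the context as canonical JSON with sorted keys, so identical packages always produce the same SHA-256 digest. The record fields are hypothetical. Where the full context is stored would depend on your retention and access rules.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(run_id: str, step: str, context: dict, model: str) -> dict:
    """Build an audit entry that pins down exactly what the model was given."""
    # Canonical JSON: sorted keys and fixed separators, so equal contexts hash equally.
    canonical = json.dumps(context, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return {
        "run_id": run_id,
        "step": step,
        "model": model,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "context_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        # Keep the verbatim package (or store it in a restricted archive) for replay.
        "context": context,
    }


entry = audit_record("run-42", "match", {"task": {"invoice_no": "INV-9"}}, "example-model")
print(entry["context_sha256"][:12])
```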

Vendors will talk about accuracy rates and latency. Those matter—but the mechanics of context control often matter more in real adoption.

Closing Thoughts

Task-level context turns an LLM from a generic chatbot into a process-native assistant. Without it, you get verbosity without reliability. With it, you get something closer to a digital colleague—one who doesn’t just know the rules but knows when to apply them, when to set them aside, and when to ask for help.

And that’s what makes the difference between a flashy proof-of-concept and a tool that people trust on Monday morning.