Key Takeaways

- Zero-shot reasoning enables adaptability—but not reliability. These agents can interpret unseen instructions and act without training data, yet still falter in domains with strict or implicit business logic.

- Autonomy and zero-shot capability are not the same thing. Enterprises succeed when they pair adaptive reasoning with guardrails, not when they chase full autonomy.

- Operational risks are hidden in the infrastructure. Latency spikes, state loss, token overuse, and security exposures can derail performance long before logic errors do.

- Human trust is the new currency of automation. Without transparency and auditability, even accurate agents fail to gain organizational acceptance.

- The future lies in meta-reasoning, not just execution. Next-generation agents will ask, “Do I have enough context to act?” That blend of self-awareness and reliability is a critical step toward enterprise-grade cognition.

Every few years, a technical term that once sounded like research jargon slips into everyday enterprise discussions. “Zero-shot agents” is one of those terms. If you’ve been anywhere near AI architecture, process automation, or LLM orchestration discussions lately, you’ve probably heard people mention them—usually in the same breath as “autonomous workflows” or “agentic automation.” But here’s the thing: most people using the term aren’t entirely sure what zero-shot capability actually implies for workflow execution.

Let’s unpack it—not with hype, but with the grounded realism of someone who has seen more automation projects stall than succeed.


What “Zero-Shot” Really Means in Context

In machine learning, “zero-shot” originally described a model’s ability to perform a task it wasn’t explicitly trained on. A zero-shot classifier, for instance, might label unseen categories based on semantic relationships learned elsewhere.

In the agentic world, that concept expands. A zero-shot agent can receive a high-level instruction—say, “reconcile last month’s vendor invoices with the ERP records and flag discrepancies”—and execute it without pre-programmed workflows, examples, or human-guided decision trees. It builds the reasoning steps dynamically, drawing from prior world knowledge, APIs, and tool capabilities available in its environment.

Sounds powerful? It is. But it’s also a minefield.

From Prompts to Pragmatism: How Zero-Shot Agents Actually Work

At their core, zero-shot agents rely on large foundation models (think GPT-4, Claude, Gemini, etc.) to infer both the what and how of a given instruction. They operate inside orchestration frameworks that give them access to external tools—API connectors, data sources, or even robotic process automation (RPA) triggers.

When an instruction arrives, the agent typically follows a loop like this:

- Intent Parsing: Interprets the command’s purpose and constraints.

- Decomposition: Breaks the task into smaller, sequential, or parallel steps.

- Planning: Decides which available functions or systems can achieve each step.

- Execution: Calls those functions, reads responses, and adjusts as it goes.

- Evaluation: Assesses if the outcome aligns with the intended goal.

In traditional workflow automation, you’d have to design every one of those steps manually—often as BPMN diagrams, RPA sequences, or script logic. The zero-shot agent, in theory, figures it out on the fly.

It’s not magic. It’s structured reasoning guided by a probabilistic model that’s extremely good at generalizing. But that’s both its gift and its curse.
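The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular framework implements it: the tool names, `parse_intent`, and `decompose_and_plan` are hypothetical stand-ins for what would be LLM calls and real connectors in practice.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical tool registry; names and return values are illustrative only.
TOOLS: Dict[str, Callable[[str], str]] = {
    "fetch_invoices": lambda arg: f"invoices for {arg}",
    "fetch_erp_records": lambda arg: f"ERP records for {arg}",
    "compare": lambda arg: "2 discrepancies found",
}

@dataclass
class Step:
    tool: str
    argument: str

@dataclass
class RunResult:
    goal: str
    outputs: List[str] = field(default_factory=list)
    succeeded: bool = False

def parse_intent(instruction: str) -> str:
    # Stand-in for an LLM call that extracts the goal and constraints.
    return instruction.strip().lower()

def decompose_and_plan(goal: str) -> List[Step]:
    # Stand-in for LLM-driven decomposition and tool selection.
    return [
        Step("fetch_invoices", "last month"),
        Step("fetch_erp_records", "last month"),
        Step("compare", "invoices vs ERP"),
    ]

def evaluate(result: RunResult, plan: List[Step]) -> bool:
    # Simplistic check: every planned step produced a non-empty output.
    return len(result.outputs) == len(plan) and all(result.outputs)

def run_agent(instruction: str) -> RunResult:
    goal = parse_intent(instruction)           # intent parsing
    plan = decompose_and_plan(goal)            # decomposition + planning
    result = RunResult(goal=goal)
    for step in plan:                          # execution
        tool = TOOLS.get(step.tool)
        if tool is None:
            break                              # unknown tool: stop rather than guess
        result.outputs.append(tool(step.argument))
    result.succeeded = evaluate(result, plan)  # evaluation
    return result
```

Calling `run_agent("Reconcile last month's vendor invoices")` walks all five stages once. A real agent would feed each tool response back into the model and re-plan, which is exactly where the unpredictability comes from.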

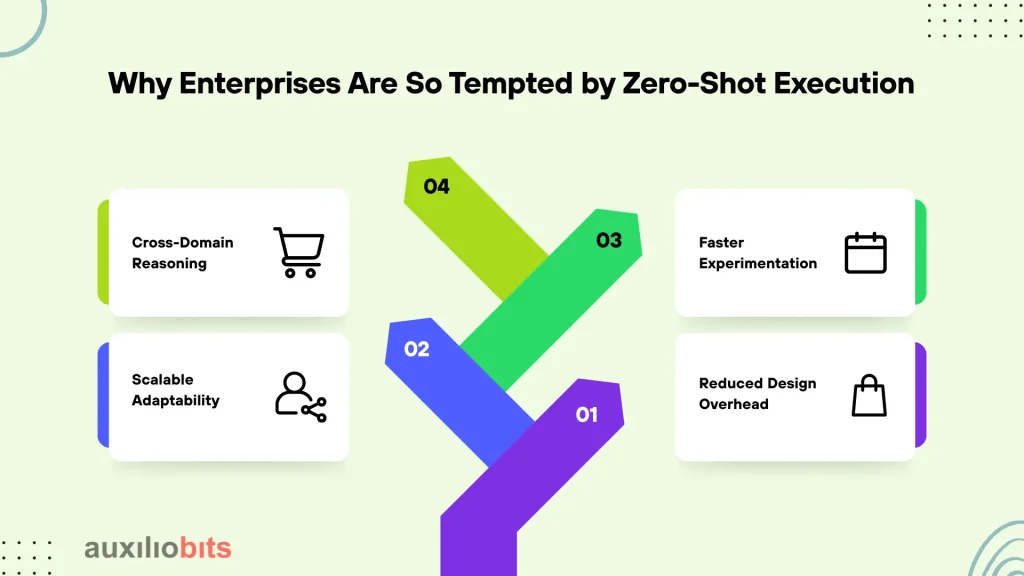

Why Enterprises Are So Tempted by Zero-Shot Execution

Ask a CIO why they’re exploring agentic systems, and the answer is rarely “because it’s cool.” It’s usually because of friction—the kind that sits between departments, data silos, or changing business rules.

Zero-shot agents promise relief from that friction in a few specific ways:

- Reduced Design Overhead: You don’t need to encode every process path. The agent can handle unseen variations, such as a new vendor format or policy change.

- Scalable Adaptability: Agents work from generalized instructions, not hard-coded scripts. Updating workflows becomes a linguistic task, not a technical one.

- Faster Experimentation: A finance or operations lead can issue natural-language instructions and see instant prototypes of automated routines.

- Cross-Domain Reasoning: They can blend insights—linking HR data with logistics metrics, for instance—to achieve goals spanning multiple systems.

In practice, zero-shot capability often accelerates early-stage automation dramatically. Teams can move from idea to execution in hours rather than months. But there’s always a catch. Several, actually.

The Fragility Beneath the Flexibility

Zero-shot reasoning assumes that the model’s latent understanding of the world aligns with the domain logic it’s operating in. That’s a dangerous assumption in regulated or structured environments.

Consider this example: A zero-shot agent in an insurance claims department receives a task—“Approve all low-risk auto claims under $5,000 from verified customers.” The model parses it, queries the claims database, and begins executing. But what if “verified” has a specific internal definition involving multiple checks—something the model has never seen but thinks it understands from general English usage?

That’s not a hypothetical scenario. In pilot deployments, such agents often pass internal QA 80% of the time but fail silently the other 20%—submitting incorrect data, skipping edge conditions, or misunderstanding policy hierarchies.

That 20% can sink a production rollout.

So yes, zero-shot execution is impressive. But it’s brittle when facing domain-specific nuance, inconsistent data schemas, or ambiguous instructions. The “reasoning gap” between what the model infers and what the enterprise actually means can be subtle, and catastrophic.
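One way to narrow that gap is to take terms like “verified” away from the model and pin them to an explicit predicate. The sketch below assumes a made-up internal definition (identity checked, policy active, no open fraud flags); the field names and the three checks are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Customer:
    identity_checked: bool
    policy_active: bool
    open_fraud_flags: int

@dataclass(frozen=True)
class Claim:
    amount: float
    risk: str  # "low", "medium", or "high"
    customer: Customer

def is_verified(customer: Customer) -> bool:
    # Hypothetical internal definition of "verified": all checks must pass.
    return (
        customer.identity_checked
        and customer.policy_active
        and customer.open_fraud_flags == 0
    )

def may_auto_approve(claim: Claim, limit: float = 5000.0) -> bool:
    # Mirrors the instruction "low-risk auto claims under $5,000 from
    # verified customers", with every term defined in code, not inferred.
    return claim.risk == "low" and claim.amount < limit and is_verified(claim.customer)
```

With a predicate like this, the agent can still decide which claims to look at, but the approval condition itself no longer depends on what the model thinks “verified” means in general English.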

Zero-Shot ≠ Autonomous

One of the biggest misconceptions floating around is that zero-shot agents are synonymous with autonomy. They’re not.

Zero-shot capability is about adaptation without examples. Autonomy, on the other hand, involves goal pursuit with self-regulation. An agent can perform a zero-shot task without being fully autonomous. It may still rely on guardrails—approval steps, role-based constraints, or human oversight layers.

The best-designed systems recognize this distinction and intentionally limit autonomy while exploiting zero-shot reasoning for flexibility.

For example:

- A procurement agent may autonomously draft purchase orders but still route them for managerial review.

- A customer-service agent might generate zero-shot responses, but a confidence threshold determines when to escalate to a human.

This hybrid model—bounded autonomy—is far safer and more sustainable for enterprises transitioning from rule-based automation to agentic execution.
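Bounded autonomy often comes down to a small routing rule. Here is a rough sketch, assuming the upstream system already produces a confidence score between 0 and 1; the `Draft` type, the 0.8 threshold, and the `requires_approval` flag are illustrative assumptions, not values from any real deployment.

```python
from dataclasses import dataclass
from enum import Enum

class Route(Enum):
    SEND = "send"
    ESCALATE = "escalate"

@dataclass
class Draft:
    text: str
    confidence: float  # assumed to be in [0, 1], produced upstream

def route(draft: Draft, threshold: float = 0.8, requires_approval: bool = False) -> Route:
    # Escalate when policy demands a human (e.g. purchase orders)
    # or when the agent's own confidence falls below the threshold.
    if requires_approval or draft.confidence < threshold:
        return Route.ESCALATE
    return Route.SEND
```

The procurement case maps to `requires_approval=True` (always reviewed), while the customer-service case relies on the threshold alone.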

The Infrastructure Challenge No One Talks About Enough

Underneath the cognitive layer, zero-shot agents depend on an execution substrate—a mesh of APIs, vector databases, and context caches. Every “step” the agent takes often translates into multiple tool calls, embeddings, lookups, or document retrievals.

Here’s where practical issues arise:

- Latency Accumulation: Multi-step reasoning amplifies delay. A seemingly simple task can balloon into dozens of tool calls.

- State Management: Zero-shot agents generate and discard context dynamically. Persisting relevant states across sessions is non-trivial.

- Security Overhead: Every dynamic tool call expands the attack surface—especially when agent reasoning includes generating new API queries.

- Cost Volatility: Each reasoning step burns tokens. Unbounded loops or mis-specified goals can rack up compute costs fast.

Enterprises experimenting with zero-shot workflow agents are quietly investing in execution monitoring layers—systems that trace agent steps, visualize reasoning chains, and enforce boundaries. Think of it as air traffic control for AI cognition.
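A monitoring layer of that kind can start very small. The sketch below wraps each tool call, records a trace entry, and refuses to continue once a call or token budget is exhausted. The class names and the idea of passing an estimated token count per call are assumptions made for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List

class BudgetExceeded(RuntimeError):
    """Raised when an agent run would exceed its call or token budget."""

@dataclass
class TraceEntry:
    tool: str
    tokens: int
    seconds: float

@dataclass
class ExecutionMonitor:
    max_calls: int
    max_tokens: int
    trace: List[TraceEntry] = field(default_factory=list)

    @property
    def tokens_used(self) -> int:
        return sum(entry.tokens for entry in self.trace)

    def call(self, tool_name: str, fn: Callable[[], Any], est_tokens: int) -> Any:
        # Enforce boundaries before the call, so a runaway loop stops early.
        if len(self.trace) >= self.max_calls:
            raise BudgetExceeded(f"call budget of {self.max_calls} reached")
        if self.tokens_used + est_tokens > self.max_tokens:
            raise BudgetExceeded(f"token budget of {self.max_tokens} would be exceeded")
        start = time.perf_counter()
        result = fn()
        self.trace.append(TraceEntry(tool_name, est_tokens, time.perf_counter() - start))
        return result
```

The `trace` list doubles as the raw material for latency dashboards and reasoning-chain views, since it records which tool ran, how long it took, and what it was estimated to cost.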

Real-World Example: The “Smart Intake” Experiment

A manufacturing firm tried deploying a zero-shot intake agent for invoice processing. The agent was designed to parse incoming vendor emails, extract data, validate it against ERP entries, and upload structured results.

During pilot testing:

- 75% of invoices were processed correctly.

- 15% were flagged for manual review due to ambiguous formatting.

- 10% failed silently, submitting incomplete line items because of OCR misalignment that the model didn’t notice.

What went wrong? The zero-shot agent “understood” how to reconcile invoices in principle but not in practice. It lacked procedural priors—domain rules that experienced accountants rely on instinctively.

The solution wasn’t to abandon the agent. It was to refine its task templates, essentially seeding it with example reasoning patterns (a few-shot hybrid). That blend preserved flexibility while restoring reliability.
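Seeding a template with examples is mostly string assembly. The sketch below is a guess at what such a template might look like, not the firm’s actual one: the `build_prompt` helper and the section labels are hypothetical.

```python
from typing import List, Tuple

Example = Tuple[str, str]  # (input snippet, worked reasoning)

def build_prompt(instruction: str, examples: List[Example], new_input: str) -> str:
    # With an empty `examples` list this degrades to a plain zero-shot prompt.
    parts = [instruction.strip(), ""]
    for i, (snippet, reasoning) in enumerate(examples, start=1):
        parts += [
            f"Example {i} input:", snippet,
            f"Example {i} reasoning:", reasoning,
            "",
        ]
    parts += ["New input:", new_input, "Reasoning:"]
    return "\n".join(parts)
```

Because the same function covers both cases, a team can start zero-shot and add worked examples only for the invoice formats that keep failing.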

So, real-world lesson: zero-shot alone rarely sustains production-grade workflows. It’s the scaffolding for adaptive reasoning, not the full structure.

The Psychological Gap: Human Trust in Machine Reasoning

A subtle but critical issue with zero-shot agents is interpretability. When a process owner can’t trace why an agent made a certain decision, trust evaporates.

In automation governance meetings, I’ve seen this play out repeatedly:

“It’s working, but we can’t explain how it got that result.”

That sentence usually precedes a rollback.

Explainability tools—like reasoning-chain visualizers or prompt tracebacks—help, but they’re reactive measures. The deeper solution is designing for auditability from the start. Each zero-shot decision should be logged with metadata: which context was used, what steps were planned, what data sources were touched, and how the final action was chosen.

Without that, compliance officers will (rightly) push back.
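A decision record along those lines might look like the sketch below. The field names follow the metadata listed above; the `DecisionRecord` class, the extra `rationale` field, and the JSON-lines format are assumptions chosen for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List

@dataclass
class DecisionRecord:
    instruction: str
    context_ids: List[str]    # which context was used
    planned_steps: List[str]  # what steps were planned
    data_sources: List[str]   # what data sources were touched
    final_action: str         # what the agent ultimately did
    rationale: str            # how the final action was chosen
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

def to_log_line(record: DecisionRecord) -> str:
    # One JSON object per line keeps the log easy to append and to query.
    return json.dumps(asdict(record), sort_keys=True)
```

Writing one such line per decision, before the action is executed, gives auditors a trail that does not depend on reconstructing the model’s reasoning after the fact.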

When Zero-Shot Works Well

Despite its risks, zero-shot execution can shine in the right contexts. These are the sweet spots where its probabilistic creativity aligns with business value:

- Exploratory workflows: Drafting new document templates, summarizing unstructured data, or generating first-pass analyses.

- High-volume triage: Categorizing or clustering inputs (emails, claims, support tickets) before routing to specialized agents.

- Dynamic integration tasks: Handling one-off API changes or schema drift between systems without developer intervention.

- Knowledge synthesis: Cross-referencing data sources to answer open-ended questions or build context graphs.

These use cases leverage reasoning breadth without demanding deterministic accuracy. The moment you need hard guarantees—think financial reconciliation, compliance filing, or medical eligibility checks—pure zero-shot reasoning becomes risky.

The Emerging Safeguards

The ecosystem is adapting fast. A few approaches are gaining traction to mitigate zero-shot fragility:

- Constrained Decoding: Forcing agents to output structured formats or conform to schemas reduces hallucination.

- Tool-Use Grounding: Pairing LLM reasoning with deterministic functions ensures factual accuracy for certain steps.

- Dynamic Few-Shot Adaptation: Agents retrieve relevant examples from memory before reasoning, blending flexibility with context.

- Human Oversight Triggers: Confidence scoring or anomaly detection can flag outputs for manual review automatically.

In other words, the industry is learning that pure zero-shot reasoning is too volatile for enterprise automation—but anchored zero-shot (grounded in constraints and feedback loops) might be the sweet spot.
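As a rough illustration of the “anchored” idea, the sketch below checks an agent’s output against an expected structure before anything downstream consumes it. Note that this validates after generation rather than constraining decoding itself, which is a simpler relative of the first safeguard above. The schema and field names are hypothetical.

```python
import json
from typing import Any, Dict, List, Tuple

# Hypothetical schema: field name -> accepted Python type(s).
INVOICE_SCHEMA: Dict[str, Any] = {
    "vendor": str,
    "invoice_number": str,
    "total": (int, float),
    "line_items": list,
}

def validate_output(raw: str, schema: Dict[str, Any]) -> Tuple[bool, List[str]]:
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return False, ["top-level value must be an object"]
    errors: List[str] = []
    for name, expected in schema.items():
        if name not in data:
            errors.append(f"missing field: {name}")
        elif not isinstance(data[name], expected):
            errors.append(f"wrong type for field: {name}")
    for name in data:
        if name not in schema:
            errors.append(f"unexpected field: {name}")
    return not errors, errors
```

A failed validation is a natural oversight trigger: the output can be retried, or routed to manual review together with the error list.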

The Quiet Irony

Zero-shot agents promise to eliminate the need for human intervention. Yet, every successful deployment so far has relied on strategic human supervision—not to micromanage, but to guide and verify.

It’s an irony automation veterans have seen before. The first RPA wave also promised “no-code autonomy,” and enterprises quickly learned that human context is irreplaceable. The same lesson repeats, just with fancier language models.

The takeaway? Zero-shot doesn’t mean zero responsibility.

What Comes Next: From Zero-Shot to Meta-Reasoning Agents

The next evolution may involve meta-reasoning: agents that not only execute zero-shot tasks but also evaluate whether they should. That’s the self-awareness layer missing today. Imagine an agent that, before acting, asks itself: “Do I have enough context to execute this safely?”

We’re already seeing early frameworks that implement confidence estimation and chain-of-thought validation across multi-agent systems. It’s still early, but this direction feels inevitable.
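In its simplest form, that pre-action question is a gate in front of execution. The sketch below assumes the agent can list which pieces of context a task needs and has some confidence estimate available; the function name, the 0.75 default, and the context keys are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Readiness:
    can_act: bool
    missing: List[str]
    confidence: float

def assess_readiness(
    required: List[str],
    context: Dict[str, Optional[str]],
    confidence: float,
    min_confidence: float = 0.75,
) -> Readiness:
    # A key counts as missing if it is absent, None, or an empty string.
    missing = [key for key in required if not context.get(key)]
    can_act = not missing and confidence >= min_confidence
    return Readiness(can_act=can_act, missing=missing, confidence=confidence)
```

When `can_act` is false, the `missing` list tells the agent what to ask for instead of guessing, which is the behavior the insurance example earlier was lacking.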

Because in enterprise automation, the ultimate metric isn’t how intelligent your agent is—it’s how trustworthy its decisions are under uncertainty.

A Final Reflection

Zero-shot agents represent a bold step toward adaptive, cognitive workflows. They strip away the rigidity of rule-based automation and open the door to systems that reason rather than react. But the risks are proportional to the ambition.

To deploy them wisely, you need architectural humility—an understanding that language reasoning isn’t a substitute for procedural knowledge, and flexibility without governance is just entropy with good PR.

Zero-shot agents can accelerate innovation, yes. But only if you remember the one principle automation veterans quietly live by: every shortcut eventually shows up in QA.