Key Takeaways

- Automation isn’t static. Processes drift, exceptions creep in, and errors accumulate unless monitored continuously.

- CPI is not just dashboards. It’s end-to-end visibility, contextual intelligence, and adaptive feedback loops.

- Failures are subtle. Most breakdowns come from small changes—formats, policies, or integrations—not outright bot crashes.

- Business outcomes matter most. Monitoring only has credibility when tied to cash flow, compliance, and customer metrics.

- Culture makes or breaks CPI. Shared accountability and curiosity turn monitoring into real operational intelligence.

Something curious happens in most automation journeys. The day bots go live, there’s champagne, a press release, and slides showing “projected savings.” Then… silence. Executives move on, the automation sits in production, and six months later, someone realizes invoices are delayed or compliance reports have holes in them.

This isn’t negligence. It’s the assumption that automation is binary—either it runs, or it doesn’t. In reality, automation is like plumbing: most of the time it works invisibly, until one tiny crack leads to a flood.

A payments company once discovered, only after a quarterly review, that a “successful” bot had been double-paying a subset of suppliers for weeks. Why? A currency formatting change in one country. The bot didn’t crash. Nobody noticed until finance reconciliations exposed the hole.

That’s why monitoring isn’t optional. Once you automate, you inherit the responsibility to watch that automation continuously. Otherwise, you’re trading human error for systemic error—quieter, but much costlier.

Defining Continuous Process Intelligence

Every year, the automation space invents new jargon. “Cognitive RPA,” “Hyperautomation,” “Digital Twin of an Organization.” Continuous Process Intelligence (CPI) risks the same fate if we let it stay at the buzzword level.

But CPI, if stripped of marketing gloss, is really about:

- Persistent visibility: Seeing process execution as it happens, not weeks later in a post-mortem.

- Contextual intelligence: Understanding not just that a task ran, but what impact it had on business objectives.

- Adaptability: Using monitoring as a trigger for course correction, not just a passive report.

Think of CPI as the equivalent of a car’s telemetry system. Speed, fuel efficiency, tire pressure, GPS—all monitored, all contextual. Without that, you might still drive, but you’d drive blind.

Where Automation Breaks—and Why Companies Miss It

Automation usually fails at the edges, not the core. Rarely does a bot stop completely. Instead:

- A new tax rule causes misclassification in 2% of invoices.

- A supplier changes their PDF format, shifting data fields by a line.

- A bank adds an extra validation step in its KYC form.

Each case causes micro-failures that slip past uptime metrics.
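One way to catch micro-failures like these is to validate what the bot produced, not just whether it finished. The Python sketch below assumes invoice records carry the bot’s own status plus the raw amount text it extracted. The `InvoiceRecord` type and the per-country `AMOUNT_PATTERNS` rule are hypothetical illustrations, not part of any RPA product. The sketch compares the success rate the bot reports with the rate that survives a simple format check.

```python
import re
from dataclasses import dataclass


@dataclass
class InvoiceRecord:
    invoice_id: str
    bot_status: str   # status reported by the bot, e.g. "completed" or "failed"
    amount_text: str  # raw amount string the bot extracted from the document
    country: str      # supplier country, used to pick the expected format


# Hypothetical validation rule: expected amount format per country.
AMOUNT_PATTERNS = {
    "US": re.compile(r"^\d{1,3}(,\d{3})*\.\d{2}$"),  # e.g. 1,234.56
    "DE": re.compile(r"^\d{1,3}(\.\d{3})*,\d{2}$"),  # e.g. 1.234,56
}


def passes_validation(rec: InvoiceRecord) -> bool:
    """True if the extracted amount matches the expected format for its country."""
    pattern = AMOUNT_PATTERNS.get(rec.country)
    return pattern is not None and pattern.match(rec.amount_text) is not None


def health_report(records: list[InvoiceRecord]) -> dict:
    """Contrast the bot's own success rate with the rate that passes validation."""
    if not records:
        raise ValueError("no records to evaluate")
    completed = [r for r in records if r.bot_status == "completed"]
    silent = [r.invoice_id for r in completed if not passes_validation(r)]
    return {
        "bot_success_rate": len(completed) / len(records),
        "validated_success_rate": (len(completed) - len(silent)) / len(records),
        "silent_failures": silent,
    }
```

If a German supplier’s invoice suddenly arrives as `1,234.56`, the bot still counts it as completed, but it fails validation and shows up in `silent_failures`. Uptime metrics never see this kind of quiet drift.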

Why are these misses so common?

- Fragmented ownership—IT checks whether bots are “green,” operations cares about throughput, and finance watches outcomes. No one connects the dots.

- Optimism bias—once live, leaders assume automation is “solved.” They stop budgeting for ongoing monitoring.

- Tool blinders—many RPA dashboards track execution but not impact. The bot may “complete” 1,000 invoices, but if 300 need rework, what did you really automate?

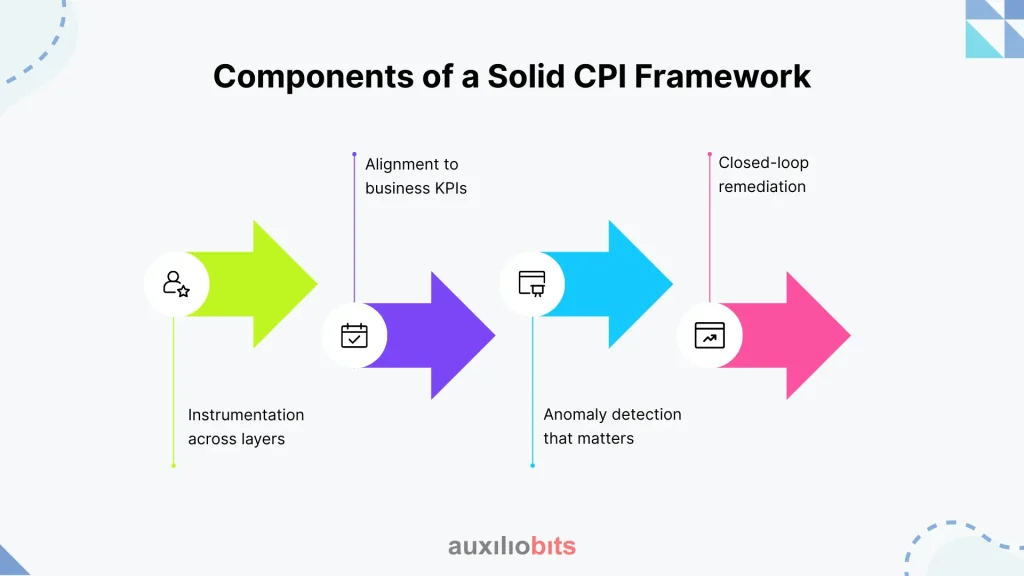

Components of a Solid CPI Framework

What separates “monitoring” from CPI? Breadth, depth, and the ability to act.

Instrumentation across layers:

Don’t stop at the bot layer. Capture logs from ERP, workflow exceptions, and even helpdesk tickets. If AP clerks are staying late to fix invoices, that’s a process signal too.
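Cross-layer instrumentation mostly comes down to putting events from different systems onto a shared case key. The Python sketch below assumes each source (bot logs, ERP, helpdesk) can emit events tagged with the same case ID, such as an invoice number. The `Event` shape and the action names in `HUMAN_REWORK_ACTIONS` are hypothetical. The sketch flags cases that the bot marked as completed but that people had to touch afterwards.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    case_id: str      # shared business key across systems (assumed available)
    source: str       # originating layer, e.g. "bot", "erp", "helpdesk"
    action: str       # what happened, e.g. "completed", "manual_edit"
    timestamp: float  # seconds since epoch


# Hypothetical actions that indicate a person had to step in.
HUMAN_REWORK_ACTIONS = {"manual_edit", "ticket_opened"}


def cases_with_hidden_rework(events: list[Event]) -> list[str]:
    """Return case IDs the bot reported as completed but that needed human rework later."""
    by_case: dict[str, list[Event]] = defaultdict(list)
    for event in events:
        by_case[event.case_id].append(event)

    flagged = []
    for case_id, case_events in by_case.items():
        bot_done = [e.timestamp for e in case_events
                    if e.source == "bot" and e.action == "completed"]
        if not bot_done:
            continue  # the bot never claimed success, so this failure is already visible
        done_at = min(bot_done)
        if any(e.source != "bot" and e.action in HUMAN_REWORK_ACTIONS
               and e.timestamp > done_at for e in case_events):
            flagged.append(case_id)
    return sorted(flagged)
```

Only rework that happens after the bot claims success is counted. A ticket opened before completion, or a routine ERP posting, is not treated as hidden rework.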

Alignment to business KPIs:

Track exception rates, SLA adherence, DSO (days sales outstanding), and compliance flags. Execution metrics are inputs; business outcomes are the actual scorecard.
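Once the underlying data is available, most business-facing KPIs are simple ratios. The sketch below uses the standard DSO formula: receivables divided by credit sales for the period, multiplied by the number of days in the period. It treats SLA adherence as the share of cases closed within a target cycle time. The function names and the hours-based SLA are illustrative assumptions.

```python
def days_sales_outstanding(accounts_receivable: float,
                           credit_sales: float,
                           period_days: int) -> float:
    """DSO = accounts receivable / credit sales in the period * days in the period."""
    if credit_sales <= 0:
        raise ValueError("credit_sales must be positive")
    # Multiply first; mathematically equivalent to the formula in the docstring.
    return accounts_receivable * period_days / credit_sales


def sla_adherence(cycle_times_hours: list[float], sla_hours: float) -> float:
    """Fraction of cases completed within the SLA target."""
    if not cycle_times_hours:
        raise ValueError("no cases to evaluate")
    within = sum(1 for t in cycle_times_hours if t <= sla_hours)
    return within / len(cycle_times_hours)
```

For example, 300,000 in receivables against 900,000 of credit sales over a 90-day quarter gives a DSO of 30 days.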

Anomaly detection that matters:

Use statistically derived thresholds, not just fixed per-event alerts. A sudden 20% increase in rework matters more than a single task failure.
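A statistical threshold can be as simple as comparing today’s rework rate with its recent history. The sketch below uses a common z-score approach, not any specific vendor’s method. It flags a day only when two conditions hold: the rate is unusual relative to the baseline (`z_threshold` standard deviations), and it is materially higher (`min_relative_increase`, set here to the 20% from the text). Both default values are assumptions you would tune per process.

```python
import statistics


def is_rework_anomaly(history: list[float], today: float,
                      z_threshold: float = 3.0,
                      min_relative_increase: float = 0.20) -> bool:
    """Flag today's rework rate if it is statistically unusual AND materially higher.

    history: recent daily rework rates as fractions (needs at least two values).
    """
    if len(history) < 2:
        raise ValueError("need at least two historical values")
    mean = statistics.mean(history)
    sd = statistics.stdev(history)  # sample standard deviation

    if sd > 0:
        z = (today - mean) / sd
    else:
        z = float("inf") if today > mean else 0.0  # flat baseline: any rise is unusual

    if mean > 0:
        relative = (today - mean) / mean
    else:
        relative = float("inf") if today > 0 else 0.0

    return z >= z_threshold and relative >= min_relative_increase
```

Requiring both conditions prevents a naturally noisy process from raising alerts on every swing. It still catches a jump from 10% to 13% rework on a process that normally stays within a point of its average.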

Closed-loop remediation:

CPI must feed back into process improvement. Otherwise, it’s just another dashboard. Some firms route anomalies straight into retraining pipelines or issue tickets that trigger escalation.
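To close the loop, every anomaly has to land with a named owner. The sketch below turns an anomaly into a ticket payload. The `OWNERS` routing table, the team names, and the 50% priority cutoff are hypothetical. The sketch does not send the payload to an incident or ticketing system, because that step depends entirely on the tool in use.

```python
from dataclasses import dataclass


@dataclass
class Anomaly:
    process: str     # e.g. "accounts_payable"
    metric: str      # e.g. "rework_rate"
    value: float     # observed value, as a fraction
    baseline: float  # expected value, as a fraction


# Hypothetical routing table: each process/metric pair maps to an owning team.
OWNERS = {
    ("accounts_payable", "rework_rate"): "ap-operations",
    ("accounts_payable", "exception_rate"): "automation-coe",
}
DEFAULT_OWNER = "process-excellence"  # fallback so no anomaly goes unowned


def to_ticket(anomaly: Anomaly) -> dict:
    """Build a ticket payload with an owner and a priority based on the deviation size."""
    owner = OWNERS.get((anomaly.process, anomaly.metric), DEFAULT_OWNER)
    if anomaly.baseline > 0:
        increase = (anomaly.value - anomaly.baseline) / anomaly.baseline
    else:
        increase = float("inf") if anomaly.value > 0 else 0.0
    return {
        "title": (f"{anomaly.process}: {anomaly.metric} at {anomaly.value:.1%} "
                  f"(baseline {anomaly.baseline:.1%})"),
        "assignee": owner,
        "priority": "high" if increase >= 0.5 else "medium",
    }
```

The fallback owner is the important design choice. Without it, an anomaly on an unmapped metric would produce a ticket that nobody picks up.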

Done well, CPI shifts monitoring from “are bots alive?” to “is the business process healthy?”

Industry Case Studies and Lessons Learned

- Banking compliance: A European bank used CPI to track its anti-money-laundering automation. The system flagged rising manual overrides in one region. It turned out that regulators had updated ID verification rules two months earlier. Without CPI, fines would almost certainly have followed.

- Healthcare claims: A U.S. insurer noticed growing exception queues despite automation “working.” CPI revealed the real issue: payer portals had altered their submission windows. Fixing this shaved 12% off claim cycle times.

- Retail supply chain: A retailer discovered that one warehouse consistently processed orders more slowly than others. CPI tied it back to a legacy scanner integration. Fixing it improved regional delivery SLAs by double digits.

- Manufacturing procurement: A supplier onboarding automation looked fine in logs, but CPI showed a spike in late vendor approvals. The culprit? Document formats in one geography. Without CPI, procurement teams would have kept blaming “delays” on staff.

A pattern emerges: CPI uncovers mismatches between what automation thinks it did and what the business actually experiences.

The Blind Spots Nobody Likes to Admit

Even with CPI, there are pitfalls people gloss over:

- Too much noise: Alert fatigue kills adoption. If every blip triggers a notification, teams tune out.

- Misaligned incentives: IT wants fewer tickets; operations wants smoother flow; finance wants numbers that reconcile. Without shared metrics, monitoring devolves into finger-pointing.

- Political reluctance: Nobody enjoys admitting that an automation that costs millions is underperforming. CPI sometimes exposes hard truths, and leaders quietly bury the evidence.
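Alert fatigue is partly an engineering problem. One common mitigation is to suppress repeat notifications for the same alert key within a cooldown window, as sketched below. The key format and the one-hour window in the example are assumptions. Real deployments usually combine this with statistical thresholds that decide whether an alert fires in the first place.

```python
class AlertSuppressor:
    """Drop repeat notifications for the same alert key inside a cooldown window."""

    def __init__(self, cooldown_seconds: float) -> None:
        self.cooldown_seconds = cooldown_seconds
        self._last_sent: dict[str, float] = {}  # alert key -> time of last notification

    def should_notify(self, key: str, now: float) -> bool:
        """Return True and record the time if this key has not notified recently."""
        last = self._last_sent.get(key)
        if last is not None and now - last < self.cooldown_seconds:
            return False  # the same problem was already reported recently
        self._last_sent[key] = now
        return True
```

With a one-hour cooldown, a rework alert that keeps firing every few minutes reaches people at most once an hour. A different key, such as an exception-rate alert on the same process, still gets through immediately.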

Linking CPI to Business Outcomes

Executives rarely ask: “Did the bot run?” They ask:

- Did our DSO shrink?

- Did suppliers get paid on time?

- Did compliance gaps narrow?

That’s where CPI earns its keep, by translating monitoring signals into business impact:

- Exceptions per 1,000 invoices → impact on supplier relationships.

- Average cycle time variation → customer satisfaction swings.

- Percentage of manual intervention → actual cost per transaction.
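Once exception and manual-touch counts are captured, these translations are mostly arithmetic. The sketch below reuses the 1,000-invoice, 300-rework scenario from the tool-blinders point above. The automation run cost, minutes per manual touch, and hourly labor rate are made-up inputs for illustration, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    transactions: int           # items processed in the period
    exceptions: int             # items that raised an exception
    manually_touched: int       # items a person had to handle or fix
    automation_cost: float      # run cost of the automation for the period (assumed)
    minutes_per_touch: float    # average handling time per manual touch (assumed)
    labor_cost_per_hour: float  # fully loaded hourly cost of that work (assumed)


def business_view(s: PeriodStats) -> dict:
    """Express monitoring counts in the terms executives ask about."""
    if s.transactions <= 0:
        raise ValueError("transactions must be positive")
    manual_cost = s.manually_touched * s.minutes_per_touch / 60 * s.labor_cost_per_hour
    return {
        "exceptions_per_1000": s.exceptions * 1000 / s.transactions,
        "manual_intervention_pct": s.manually_touched * 100 / s.transactions,
        "cost_per_transaction": (s.automation_cost + manual_cost) / s.transactions,
    }


stats = PeriodStats(transactions=1000, exceptions=300, manually_touched=300,
                    automation_cost=2000.0, minutes_per_touch=12,
                    labor_cost_per_hour=50.0)
print(business_view(stats))
# {'exceptions_per_1000': 300.0, 'manual_intervention_pct': 30.0, 'cost_per_transaction': 5.0}
```

With these inputs, manual rework adds 3,000 to the period’s cost. The real cost per invoice is therefore 5.0, not the 2.0 that the automation run cost alone would suggest.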

The Vendor Narrative vs. Enterprise Reality

Most vendor brochures will say they offer “real-time monitoring and analytics.” Look closer, though: 80% of the time, it’s execution-level data, telling you whether the bot finished, how long it took, and which error codes showed up.

Enterprises, however, need:

- End-to-end observability, spanning ERP, CRM, RPA, and human handoffs.

- Metrics tied to compliance and risk posture, not just throughput.

- Integration with incident management systems, not siloed dashboards that no one checks.

Building a Culture That Actually Pays Attention

Technology is only half the story. CPI thrives in cultures where:

- Responsibility is shared: Business leaders and IT jointly review outcomes. Monitoring reports appear in operational meetings, not just IT stand-ups.

- Governance is clear: When an anomaly surfaces, someone owns the next step. “Observed by all, acted on by none” is the default failure mode.

- Curiosity is encouraged: Teams don’t treat exceptions as nuisances but as learning signals. A spike in manual handling isn’t just an error—it’s a clue about process drift.

Without cultural reinforcement, CPI degenerates into background noise. With it, automation becomes truly resilient.

Closing Reflections

Continuous Process Intelligence isn’t an add-on to automation—it’s the insurance policy, the feedback loop, and the quiet mechanism that decides whether your automation strategy matures or unravels. Organizations that treat monitoring as an afterthought usually discover, too late, that their “savings” were leaking away in hidden exceptions and manual rework.

The irony is that CPI doesn’t have the glamour of automation launches or AI pilots. It’s more like plumbing—rarely celebrated, but painfully obvious when ignored. Yet those who build it into the fabric of operations gain something most automation programs lack: trust. Trust that numbers are real, trust that compliance holds, trust that customer commitments are met.

If automation was about removing human effort, CPI is about ensuring that effort stays removed. And in an environment where regulations shift, data formats mutate, and processes evolve by the quarter, that assurance is not just valuable—it’s existential.