Key Takeaways

- Azure OpenAI acts as the reasoning core but requires grounding and checkpoints to be reliable.

- Azure ML anchors decision intelligence with domain-specific models and governance pipelines.

- Cognitive Services provide perception and utilities, reducing the need to reinvent commodity AI.

- Orchestration is less about technology choice and more about sequencing, governance, and resilience.

- Practical deployments reveal gaps—latency, costs, cultural adoption—that documentation rarely mentions.

Agent orchestration is quickly moving from theory into boardroom conversations. A few years ago, enterprises spoke about automation in the language of workflows, scripts, and bots. Now, the conversation has shifted: it’s about orchestration of reasoning engines, data pipelines, and decision-making agents that can work together—sometimes even negotiate—without human intervention.

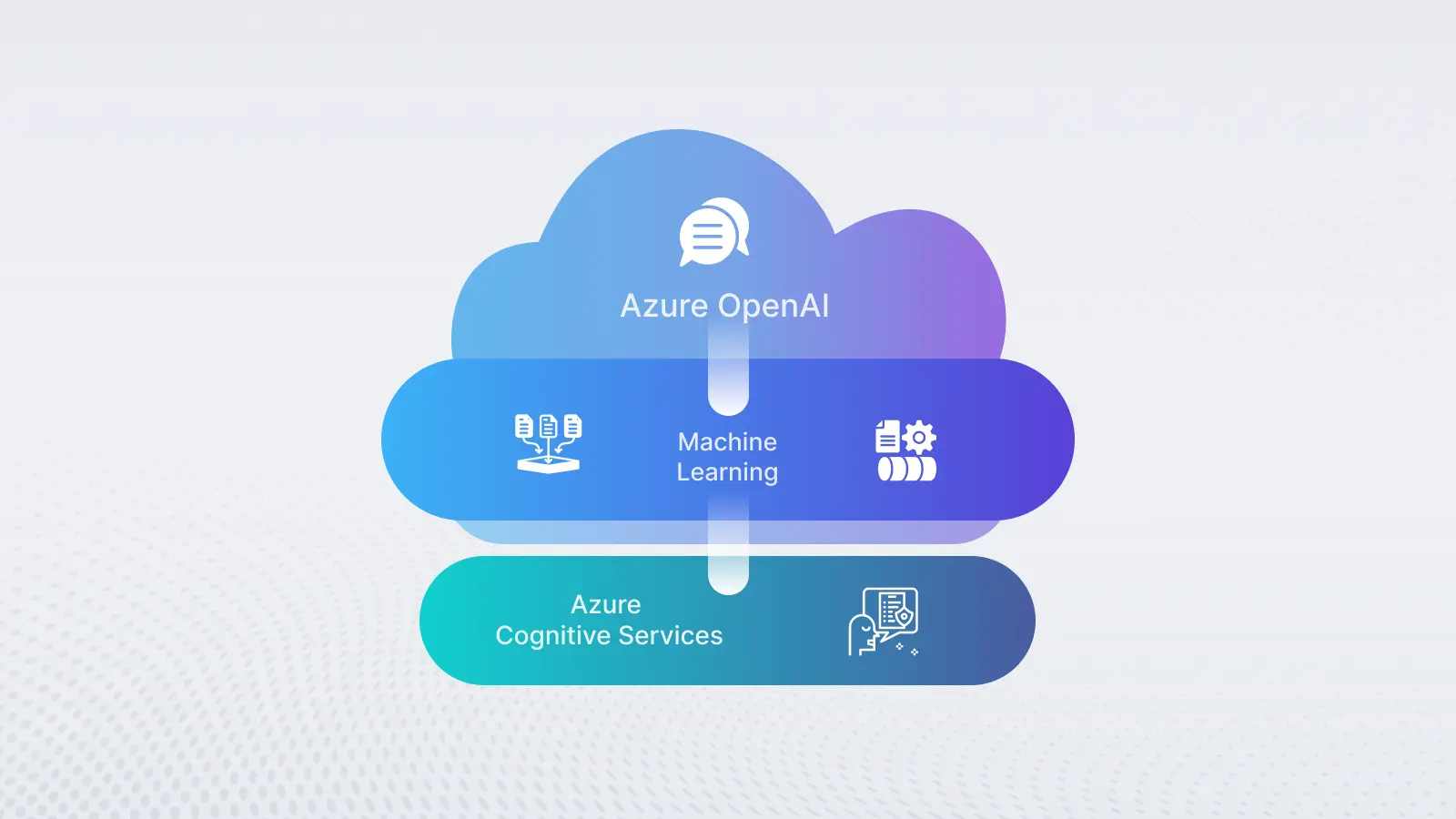

And when it comes to enterprise-scale deployment, Azure has emerged as one of the most pragmatic ecosystems to anchor these efforts. Not because it’s “flashier” than AWS or GCP, but because Microsoft has stitched together three families of capabilities that, when combined, create a coherent backbone for agent orchestration: Azure OpenAI, Azure Machine Learning, and Azure Cognitive Services.

This blog explores how these services actually fit together, what pitfalls practitioners run into, and where the opportunities lie.

Why Orchestration Matters in the First Place

A single intelligent agent can be powerful, but it’s also brittle. Ask an LLM to generate a response, and it does well within its training scope. Place it inside a financial services workflow, however, and cracks appear:

- It can’t fetch real-time risk scores unless it’s wired into external data.

- It struggles with compliance explanations unless rules are enforced.

- It doesn’t gracefully hand off tasks between document processing, decision-making, and transaction systems.

That’s where orchestration comes in: coordinating multiple specialized components (agents, services, models) so they function like a team rather than a set of silos.

Azure OpenAI: The Reasoning Core

Azure OpenAI provides access to GPT-4 and related large language models within a secure enterprise perimeter. On its own, it’s not enough—but it plays the role of the reasoning core.

Real-world roles include:

- Conversation orchestration: Routing customer support requests across multiple internal systems.

- Knowledge grounding: Using retrieval-augmented generation (RAG) to pull from enterprise data lakes before responding.

- Task delegation: Breaking down a business request into smaller subtasks that can be executed by other agents or APIs.

What makes Azure OpenAI attractive for orchestration is less about the models themselves and more about enterprise controls: private networking, data isolation, and role-based access. In regulated industries (think healthcare or banking), that’s non-negotiable.

But there’s a limitation that architects quickly bump into: LLMs hallucinate. Guardrails are required, and Azure doesn’t magically fix that. Orchestration must include fact-checking layers—sometimes handled by Cognitive Search, sometimes by smaller specialized models in Azure ML.

Azure Machine Learning: The Training and Deployment Layer

If OpenAI provides the reasoning, Azure ML provides the memory and the muscle.

Azure ML is where you:

- Train domain-specific models (risk models, fraud detectors, and demand forecasters).

- Package and deploy them as APIs that agents can call.

- Monitor drift and retrain continuously.

In orchestration, these ML models often act as decision checkpoints. For example:

- An LLM-generated plan for approving a loan can be routed through an ML risk model before execution.

- A customer support agent might suggest a refund, but the fraud detection model decides if that action is safe.
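
A decision checkpoint like this can be sketched in a few lines. This is a minimal illustration, not a reference implementation: `ProposedAction`, `checkpoint`, the thresholds, and `toy_fraud_score` are all hypothetical names and values, and the toy scorer stands in for a call to a fraud model deployed from Azure ML.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    """An action suggested by the LLM agent (hypothetical shape)."""
    kind: str
    amount: float
    customer_id: str


def checkpoint(action: ProposedAction,
               score_fraud: Callable[[ProposedAction], float],
               block_above: float = 0.8,
               review_above: float = 0.4) -> str:
    """Return 'execute', 'review', or 'block' based on the model's fraud score."""
    score = score_fraud(action)
    if score >= block_above:
        return "block"
    if score >= review_above:
        return "review"  # hand off to a human approver
    return "execute"


def toy_fraud_score(action: ProposedAction) -> float:
    """Stand-in for a deployed fraud model; a real system would call its scoring endpoint."""
    return min(action.amount / 1000.0, 1.0)


small = ProposedAction("refund", 50.0, "c-1")
large = ProposedAction("refund", 900.0, "c-2")
print(checkpoint(small, toy_fraud_score))  # execute
print(checkpoint(large, toy_fraud_score))  # block
```

The point of the design is that the agent only proposes; the model’s score, not the LLM, determines whether the action runs, goes to review, or is stopped.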

What’s underrated is Azure ML’s role in governance. Pipelines for versioning, CI/CD for models, and experiment tracking—these are the mechanics that ensure agents don’t just “wing it” with outdated logic.

Of course, ML teams sometimes over-engineer. I’ve seen cases where businesses train a custom model for something that could have been solved with a prebuilt Cognitive Service. Balance is the key.

Azure Cognitive Services: The Perception Layer

If OpenAI is the brain and ML is the muscle, Cognitive Services are the senses.

They provide off-the-shelf APIs for:

- Vision: OCR, face recognition, object detection.

- Speech: Recognition, synthesis, translation.

- Language: Sentiment, entity recognition, summarization.

- Search & Knowledge: Cognitive Search to index enterprise content.

Why reinvent these? It’s tempting for data science teams to say, “We can build our own sentiment classifier.” Sure, you can. But do you want to maintain it at scale? In most orchestration cases, Cognitive Services are plug-and-play sensory extensions that agents use as needed.

A manufacturing example: an autonomous quality-control agent may call Vision APIs to inspect product images, then feed results into an Azure ML anomaly detection model before letting Azure OpenAI decide how to report or escalate.

The orchestration lies not in choosing one of these services, but in sequencing them.

Orchestration Patterns in Azure

When enterprises start combining these tools, certain architectural patterns emerge. Here are the most common ones that work in practice.

1. LLM as the Planner, ML as the Gatekeeper

- LLM generates a multi-step action plan.

- ML models act as checkpoints before high-stakes decisions.

- Cognitive Services handle perception tasks.

Example: A claims processing system where GPT drafts an adjudication plan, but fraud detection and compliance ML models must validate it before execution.

2. Cognitive Search as the Grounding Layer

- Enterprise data indexed with Cognitive Search.

- LLMs query it to provide contextually accurate answers.

- ML models flag anomalies in query responses.

This works well in legal or policy-heavy contexts, where hallucination risk is unacceptable.
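
The grounding step can be illustrated with a small sketch. Everything here is an assumption for illustration: the in-memory `index` and the word-overlap `retrieve` function stand in for a real Cognitive Search index and query, the sample policies are made up, and `build_grounded_prompt` is a hypothetical helper. The idea it shows is that the LLM only sees retrieved passages, and the agent declines when nothing relevant is found.

```python
import re
from typing import Dict, List, Optional, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, index: Dict[str, str], top_k: int = 2,
             min_overlap: int = 2) -> List[Tuple[str, str]]:
    """Rank documents by shared-word count; a toy stand-in for a search index."""
    query_words = tokenize(query)
    scored = []
    for doc_id, text in index.items():
        overlap = len(query_words & tokenize(text))
        if overlap >= min_overlap:
            scored.append((overlap, doc_id, text))
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for _, doc_id, text in scored[:top_k]]


def build_grounded_prompt(query: str, index: Dict[str, str]) -> Optional[str]:
    """Return a prompt restricted to retrieved passages, or None if nothing matched."""
    passages = retrieve(query, index)
    if not passages:
        return None  # caller should decline or escalate instead of guessing
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the passages below and cite their ids.\n"
        f"{context}\n"
        f"Question: {query}"
    )


# Made-up sample content for the demo.
index = {
    "policy-7": "The refund window for annual plans is 30 days from purchase.",
    "policy-9": "Travel expenses require manager approval.",
}
prompt = build_grounded_prompt("What is the refund window for annual plans?", index)
no_match = build_grounded_prompt("How do I reset my badge?", index)
print(prompt)
print(no_match)  # None
```

Returning `None` rather than an ungrounded prompt is the design choice that matters: in policy-heavy settings, "no answer" is usually safer than a fluent guess.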

3. Event-driven Agent Mesh

- Agents deployed as microservices in Azure Container Apps.

- Event Grid or Service Bus coordinates their communication.

- OpenAI models provide reasoning, ML provides decisions, and Cognitive Services provide inputs.

This is closer to a “digital colleagues” architecture, where agents talk to each other asynchronously.
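
To make the pattern concrete, here is a minimal in-process sketch. `InMemoryBus` is a hypothetical stand-in for a broker such as Service Bus or Event Grid, and the three agents and topic names are invented for illustration; real agents would run as separate services and call OpenAI, ML, and Cognitive Services endpoints instead of the toy logic shown.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Tuple

Handler = Callable[[dict], None]
Event = Tuple[str, dict]  # (topic, payload)


class InMemoryBus:
    """Toy stand-in for a message broker: topics, subscribers, and a FIFO queue."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)
        self._queue: Deque[Event] = deque()

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        self._queue.append((topic, payload))

    def run(self) -> int:
        """Deliver queued events until the queue is empty; return how many were delivered."""
        delivered = 0
        while self._queue:
            topic, payload = self._queue.popleft()
            for handler in self._subscribers.get(topic, []):
                handler(payload)
            delivered += 1
        return delivered


bus = InMemoryBus()
log: List[str] = []


def perception_agent(payload: dict) -> None:
    # Pretend OCR result; a real agent would call a perception service here.
    log.append("perception")
    bus.publish("document.extracted", {**payload, "text": "invoice total 120"})


def decision_agent(payload: dict) -> None:
    # Pretend model decision; a real agent would call a deployed ML model.
    log.append("decision")
    bus.publish("decision.made", {**payload, "approved": "invoice" in payload["text"]})


def reporting_agent(payload: dict) -> None:
    # Pretend report; a real agent would ask the LLM to summarize the outcome.
    log.append("reporting")


bus.subscribe("document.received", perception_agent)
bus.subscribe("document.extracted", decision_agent)
bus.subscribe("decision.made", reporting_agent)

bus.publish("document.received", {"doc_id": "d-1"})
delivered = bus.run()
print(log)  # ['perception', 'decision', 'reporting']
```

No agent calls another directly. Each one reacts to a topic and emits a new event, which is what lets agents be added, replaced, or scaled independently.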

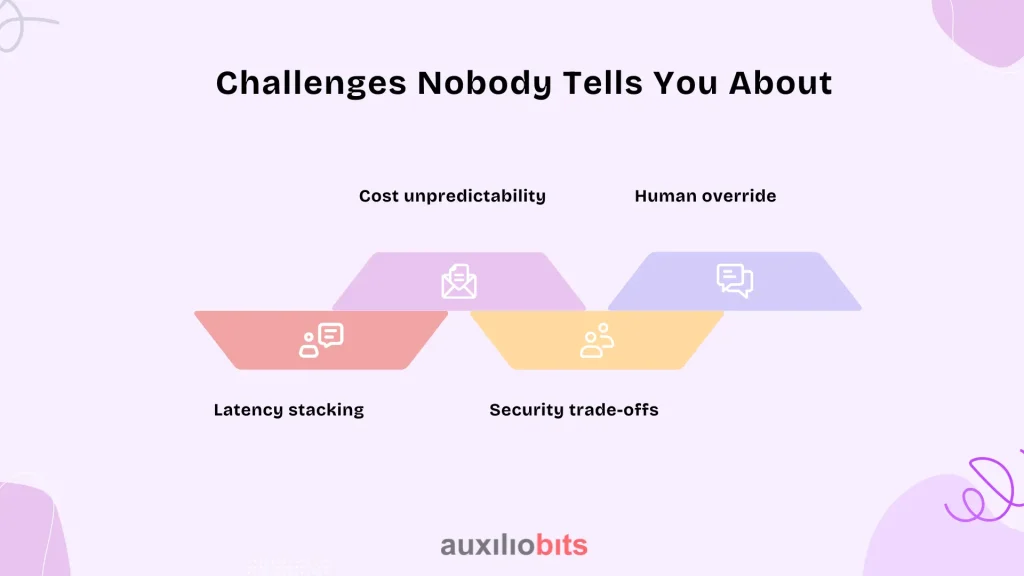

Challenges Nobody Tells You About

It’s easy to read Azure documentation and believe orchestration is straightforward. It isn’t.

- Latency stacking: When every agent call requires hitting OpenAI, ML APIs, and Cognitive Services, end-to-end response times balloon. Mitigation often means caching, batching, or selectively skipping “nice-to-have” calls.

- Cost unpredictability: Usage-based pricing across three services makes forecasting tricky. An orchestration that looks cheap in a sandbox can explode at scale.

- Security trade-offs: Private endpoints and managed identities are essential, but they add friction. Some teams cut corners here, and that’s a recipe for compliance nightmares.

- Human override: Fully autonomous orchestration is rare. Most real deployments include a human-in-the-loop approval step, even if it’s triggered only when confidence falls below a threshold.
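
The latency mitigations mentioned above (caching and skipping “nice-to-have” calls) can be sketched roughly as follows. `TTLCache` and `run_with_budget` are hypothetical helpers, not Azure features, and the demo uses a fake clock and made-up step names so its behaviour is deterministic.

```python
import time
from typing import Any, Callable, Dict, Hashable, List, Tuple

Step = Tuple[str, Callable[[], Any]]


class TTLCache:
    """Cache call results for a fixed time so repeated requests skip the remote call."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_call(self, key: Hashable, fn: Callable[[], Any]) -> Any:
        entry = self._store.get(key)
        if entry is not None and self._clock() - entry[0] < self._ttl:
            return entry[1]  # fresh hit: no remote call
        value = fn()  # miss or expired entry: call through
        self._store[key] = (self._clock(), value)
        return value


def run_with_budget(required: List[Step], optional: List[Step],
                    budget_seconds: float,
                    clock: Callable[[], float] = time.monotonic) -> Dict[str, Any]:
    """Run every required step, then optional steps only while the budget allows."""
    start = clock()
    results: Dict[str, Any] = {name: fn() for name, fn in required}
    for name, fn in optional:
        if clock() - start >= budget_seconds:
            results[name] = None  # budget spent: skip the nice-to-have call
            continue
        results[name] = fn()
    return results


# Demo with a fake clock; each step "takes" a fixed number of seconds.
now = [0.0]


def fake_clock() -> float:
    return now[0]


def slow(value: Any, cost: float) -> Callable[[], Any]:
    def step() -> Any:
        now[0] += cost
        return value
    return step


results = run_with_budget(
    required=[("risk_score", slow(0.2, 0.25))],
    optional=[("sentiment", slow("neutral", 0.25)), ("summary", slow("...", 0.25))],
    budget_seconds=0.5,
    clock=fake_clock,
)
print(results)  # {'risk_score': 0.2, 'sentiment': 'neutral', 'summary': None}
```

Deciding up front which calls are required and which are optional is the hard part. The code only enforces a decision the team has already made.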

And then there’s the cultural challenge: IT teams accustomed to deterministic systems struggle with orchestrated agents. When an LLM behaves unpredictably, the instinct is to blame Azure. Often, it’s just the nature of probabilistic reasoning.

Practical Case Examples

Case 1: Financial Services – Loan Origination

A bank built a loan origination agent mesh using:

- Azure OpenAI for applicant conversations.

- Cognitive Services OCR for document extraction.

- Azure ML credit risk models to score applications.

The orchestration sequence was intake → doc extraction → risk score → LLM explanation → compliance check.
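
As a rough sketch of that sequence, each stage can be modeled as a function that enriches an application record. None of this is the bank’s actual code: the field names, the loan-to-income risk formula, the 0.4 policy limit, and the string-template “LLM” step are all hypothetical placeholders for the real OCR, Azure ML, and Azure OpenAI calls.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[dict], dict]]

POLICY_MAX_RISK = 0.4  # hypothetical policy limit for approvals


def intake(app: dict) -> dict:
    return {**app, "status": "received"}


def extract_documents(app: dict) -> dict:
    # Stand-in for OCR: parse "key=value" pairs from already-extracted text.
    fields = dict(pair.split("=") for pair in app["document_text"].split(";"))
    return {**app, "income": float(fields["income"]),
            "requested": float(fields["requested"])}


def score_risk(app: dict) -> dict:
    # Stand-in for a credit risk model: loan-to-income ratio, capped at 1.
    return {**app, "risk": min(app["requested"] / app["income"], 1.0)}


def explain(app: dict) -> dict:
    # Stand-in for the LLM step, deliberately a little too lenient.
    recommendation = "approve" if app["risk"] < 0.5 else "decline"
    text = f"Risk {app['risk']:.2f}: recommend {recommendation}."
    return {**app, "recommendation": recommendation, "explanation": text}


def check_compliance(app: dict) -> dict:
    # An approval is compliant only when risk is under the policy limit.
    ok = app["recommendation"] != "approve" or app["risk"] < POLICY_MAX_RISK
    return {**app, "compliant": ok}


PIPELINE: List[Stage] = [
    ("intake", intake),
    ("doc_extraction", extract_documents),
    ("risk_score", score_risk),
    ("llm_explanation", explain),
    ("compliance_check", check_compliance),
]


def run_pipeline(app: dict, stages: List[Stage]) -> Tuple[dict, List[str]]:
    """Run stages in order and return the final record plus an audit trail."""
    trace: List[str] = []
    for name, stage in stages:
        app = stage(app)
        trace.append(name)
    return app, trace


safe, trace = run_pipeline(
    {"applicant": "A-1", "document_text": "income=80000;requested=20000"}, PIPELINE)
risky, _ = run_pipeline(
    {"applicant": "A-2", "document_text": "income=80000;requested=36000"}, PIPELINE)
print(safe["recommendation"], safe["compliant"])    # approve True
print(risky["recommendation"], risky["compliant"])  # approve False
```

The second application shows why the final check exists: the lenient explanation step recommends approval, and the compliance stage flags it as outside policy.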

Result: Average processing time dropped from 14 days to 4. But they also found early on that without ML validation, the LLM occasionally recommended approvals outside policy.

Case 2: Manufacturing – Predictive Maintenance

An industrial manufacturer orchestrated agents for predictive maintenance:

- IoT telemetry flows into Azure ML anomaly models.

- Cognitive Services speech-to-text captures operator notes.

- Azure OpenAI synthesizes reports and schedules interventions.

Outcome: Downtime fell by 22%. But operators initially resisted because reports felt “too verbose.” The team tuned the orchestration to shorten summaries, proof that orchestration is as much about UX as architecture.

Where Azure Still Falls Short

Azure’s integration is impressive, but not flawless.

- The boundary between Azure OpenAI and Azure ML is still clunky—moving embeddings or outputs between the two often requires custom glue.

- Cognitive Services, while robust, can feel “one-size-fits-all.” Enterprises often need finer control or customizability.

- Orchestration tooling itself is still maturing. Today, much of the orchestration logic is written manually (Python, Logic Apps, Durable Functions). A native “agent orchestrator” service doesn’t exist yet.

That said, Azure’s strength is consistency. While AWS has more experimental tools and GCP has cleaner ML pipelines, Azure’s appeal is that enterprises can roll out orchestrated agents without stitching together five different vendors.

What This Means for Practitioners

For architects and technical leaders considering Azure for agent orchestration, a few guiding principles stand out:

- Don’t treat LLMs as oracles; always pair them with ML models for critical decisions.

- Use Cognitive Services where speed-to-value matters; save Azure ML for high-value, domain-specific needs.

- Plan orchestration as an event-driven system, not just a synchronous chain of API calls.

- Budget realistically for both cost and latency; both creep up faster than you think.

Above all, keep orchestration flexible. Business needs shift. New Azure model families arrive every quarter. What you build today should be modular enough to evolve without tearing the whole system apart.

Conclusion

Agent orchestration on Azure isn’t a matter of bolting services together—it’s about treating reasoning, decision-making, and perception as distinct but interdependent capabilities. Azure OpenAI provides the conversational and planning intelligence, Azure ML supplies rigor and governance for decisions, and Cognitive Services extend the system with sensory and utility functions.

Where teams succeed is rarely in their choice of models; it’s in how they design the handoffs. When does the LLM hand over to a risk model? When does a human need to step in? Which perception tasks are common enough to rely on Cognitive Services? Those decisions shape whether orchestration feels like a coherent system or a patchwork of APIs.

Azure isn’t perfect—its orchestration story is still partly DIY—but it offers a uniquely enterprise-friendly foundation. For organizations serious about deploying digital colleagues at scale, the question isn’t whether to use Azure’s AI stack. It’s how thoughtfully you can orchestrate the moving parts so that agents act less like isolated tools and more like a coordinated workforce.