Key Takeaways

- Containers are the only practical unit for AI agent deployment—they encapsulate dependencies, prevent CUDA/runtime drift, and support highly variable workloads.

- Kubernetes is indispensable for orchestration, but its GPU scheduling challenges require augmentation with tools like NVIDIA’s GPU Operator.

- Managed K8s services (EKS/AKS) are the enterprise default, with tradeoffs largely driven by ecosystem fit and data locality rather than raw GPU pricing.

- Scaling AI agents is not the same as scaling web servers—stateful reasoning, GPU fragmentation, and inter-agent communication introduce unique complexities.

- Cost and observability make or break agent orchestration—idle GPUs, resource misallocation, and poor workload tagging quickly erode ROI without tight monitoring.

When people talk about scaling AI agents today, the conversation often gets muddled. Some people focus on the algorithmic side—how large models coordinate, share context, and manage tasks. Others immediately think about the infrastructure side—how to keep GPUs busy, how to allocate resources, and how to prevent a single agent from hogging a cluster. Both matter. But from an operational perspective, especially if you’re running dozens (or hundreds) of AI agents in parallel, orchestration at the container and Kubernetes layer is where theory turns into something usable.

This piece is about that layer: containers, Kubernetes, GPU scheduling, and the growing role of tools like NVIDIA’s GPU Operator. And since most enterprises don’t run their own bare-metal clusters anymore, we’ll also pull in managed services like Amazon EKS and Azure AKS, where reality sets in with cost models, SLAs, and networking quirks.


Why Containers Are Non-Negotiable for AI Agents

While containers are widely recognized as the standard deployment unit, their role with agents carries unique implications. Unlike monolithic training workloads, agents possess distinct characteristics:

- Variable lifespans: Some live for seconds, some for days.

- Burst-oriented: A customer request may spin up ten concurrent reasoning chains, then drop to zero.

- Heterogeneous: Not all agents need a GPU. Some perform heavy model inference, while others primarily manipulate data.

That variability makes VM-based orchestration clunky. Containers, by contrast, let you package not just the model runtime but also the quirky dependencies (say, a Python 3.10 environment with transformers pinned to a specific minor version, or a specialized retriever plugin).

Some teams skip containers for internal prototypes and run everything in Conda environments on shared servers. It works until someone installs a slightly incompatible CUDA toolkit and, suddenly, half the workloads crawl. Containers sidestep that by freezing runtime assumptions into something testable and reproducible.
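One way to make "testable" concrete is a small check that runs when the image is built or started and fails if the installed packages have drifted from the pins. The sketch below is illustrative only: `find_drift` and the pin values are hypothetical names, not part of any tool mentioned here.

```python
from importlib import metadata


def find_drift(pins, installed_version=metadata.version):
    """Return {package: (expected, actual)} for every pin that is not met.

    `actual` is None when the package is not installed at all.
    `installed_version` is injectable so the check can be unit-tested.
    """
    drift = {}
    for package, expected in pins.items():
        try:
            actual = installed_version(package)
        except metadata.PackageNotFoundError:
            actual = None
        if actual != expected:
            drift[package] = (expected, actual)
    return drift


# Hypothetical usage inside an image build or entrypoint:
#   problems = find_drift({"transformers": "4.41.2"})
#   if problems: fail the build and print the mismatches
```

Running a check like this in CI against the built image turns "it worked yesterday" into a failing build rather than a slow production workload.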

Kubernetes as the Real Orchestrator

If containers are the unit, Kubernetes is the conductor. The point isn’t just “autoscaling” in the marketing sense; it’s about aligning agent workloads with infrastructure constraints. Kubernetes provides:

- Isolation: Pods give each agent its own sandbox, and namespaces keep apart agents that shouldn’t step on each other’s toes.

- Custom resource definitions (CRDs): You can define an “agent” object, abstracting away the boilerplate.

- Horizontal Pod Autoscaling: Not perfect, but workable for stateless reasoning agents.

- Service abstraction: Routing messages between agents without wiring raw IPs.
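To illustrate the CRD point, here is a minimal sketch of what an "agent" resource type could look like, built as Python dicts that serialize to JSON Kubernetes accepts. The group `example.com`, the version `v1alpha1`, and the spec fields (`image`, `role`, `needsGpu`, `maxLifetimeSeconds`) are all assumptions made for illustration. A CRD only stores objects; a separate controller would still have to turn each `Agent` into pods.

```python
import json

GROUP = "example.com"   # hypothetical API group
VERSION = "v1alpha1"    # hypothetical version


def agent_crd():
    """Build a CustomResourceDefinition for a namespaced `Agent` kind."""
    spec_schema = {
        "type": "object",
        "required": ["image", "role"],
        "properties": {
            "image": {"type": "string"},
            "role": {"type": "string"},
            "needsGpu": {"type": "boolean"},
            "maxLifetimeSeconds": {"type": "integer", "minimum": 1},
        },
    }
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # The CRD name must be <plural>.<group>.
        "metadata": {"name": f"agents.{GROUP}"},
        "spec": {
            "group": GROUP,
            "scope": "Namespaced",
            "names": {"plural": "agents", "singular": "agent", "kind": "Agent"},
            "versions": [{
                "name": VERSION,
                "served": True,
                "storage": True,
                "schema": {"openAPIV3Schema": {
                    "type": "object",
                    "properties": {"spec": spec_schema},
                }},
            }],
        },
    }


def agent_object(name, image, role, needs_gpu=False):
    """Build one `Agent` instance that conforms to the schema above."""
    return {
        "apiVersion": f"{GROUP}/{VERSION}",
        "kind": "Agent",
        "metadata": {"name": name},
        "spec": {"image": image, "role": role, "needsGpu": needs_gpu},
    }


if __name__ == "__main__":
    print(json.dumps(agent_crd(), indent=2))
```

The payoff is that teams declare "an agent with this role and lifespan" instead of copying pod templates around.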

Now, does Kubernetes solve everything? Hardly. If you’ve ever tried to fine-tune resource allocation for GPUs on K8s, you know the scheduling logic isn’t always intuitive. CPU-based pods are easy—just request 2 vCPUs and 4 GB of RAM. GPU scheduling, however, introduces tension between maximizing utilization and avoiding starvation.
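The difference shows up directly in the pod spec. The sketch below builds one as a Python dict; the helper name `agent_pod` and the image names are hypothetical. It assumes NVIDIA's device plugin is installed, which is what advertises the `nvidia.com/gpu` resource. GPUs can only be requested as whole integers and are declared under `limits`.

```python
import json


def agent_pod(name, image, cpu="2", memory="4Gi", gpus=0):
    """Build a Pod manifest for one agent.

    CPU and memory use the same value for requests and limits (a design
    choice in this sketch, not a requirement).
    """
    if not isinstance(gpus, int) or gpus < 0:
        raise ValueError("GPUs must be requested as a non-negative whole number")
    resources = {
        "requests": {"cpu": cpu, "memory": memory},
        "limits": {"cpu": cpu, "memory": memory},
    }
    if gpus:
        # Extended resource exposed by the NVIDIA device plugin.
        resources["limits"]["nvidia.com/gpu"] = gpus
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{"name": "agent", "image": image, "resources": resources}],
        },
    }


if __name__ == "__main__":
    # Hypothetical image names.
    print(json.dumps(agent_pod("retriever", "registry.example.com/retriever:1.0"), indent=2))
    print(json.dumps(agent_pod("llm", "registry.example.com/llm:1.0", gpus=1), indent=2))
```

Because the smallest GPU request is one full device, a small agent that asks for a GPU holds all of it, which is where the utilization-versus-starvation tension comes from.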

Where NVIDIA’s GPU Operator Fits

Kubernetes doesn’t natively know much about GPUs. Sure, you can expose them as resources, but managing drivers, runtime hooks, and monitoring is a maintenance headache. Enter the NVIDIA GPU Operator.

This operator automates:

- Driver installation and updates inside the cluster.

- NVIDIA Container Toolkit setup (so CUDA apps don’t break).

- GPU telemetry collection for observability.

- Integration with Kubernetes device plugins.

In practice, this means you stop treating GPU setup as a manual prerequisite and start treating it as declarative infrastructure. Install the operator, typically with a single Helm chart, and your cluster becomes GPU-aware.
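A quick way to confirm that a cluster really is GPU-aware is to check whether nodes advertise the `nvidia.com/gpu` resource. The sketch below assumes the input is the parsed output of `kubectl get nodes -o json`. The helper name `gpu_allocatable` is hypothetical.

```python
import json

GPU_RESOURCE = "nvidia.com/gpu"


def gpu_allocatable(node_list):
    """Map node name -> number of allocatable GPUs (0 if none advertised)."""
    counts = {}
    for node in node_list.get("items", []):
        name = node["metadata"]["name"]
        allocatable = node.get("status", {}).get("allocatable", {})
        # Kubernetes reports quantities as strings, e.g. "8".
        counts[name] = int(allocatable.get(GPU_RESOURCE, "0"))
    return counts


# Hypothetical usage:
#   kubectl get nodes -o json > nodes.json
#   with open("nodes.json") as f:
#       print(gpu_allocatable(json.load(f)))
```

A GPU node that reports zero here usually means the driver or device plugin is not healthy on that node.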

A real-world example: A financial-services client needed dozens of lightweight agents performing Monte Carlo simulations alongside LLM inference. Without the GPU Operator, half their ops budget was sunk into troubleshooting driver mismatches whenever nodes were patched. With it, updates became predictable, and the DevOps team could actually focus on scaling workloads instead of chasing CUDA errors.

Why Managed K8s (EKS, AKS) Is the Default Choice

Running Kubernetes on your own hardware isn’t dead, but for most enterprises, it’s not worth the overhead. EKS and AKS abstract away the painful control-plane management while leaving you enough room to tune workloads. That said, each brings tradeoffs:

- Amazon EKS: Strong ecosystem support, ties neatly into IAM and CloudWatch, but GPU instances (like p4d or g5) carry region-based availability quirks. Spot pricing can save money, but only if your agents handle sudden evictions.

- Azure AKS: More integrated with enterprise security tooling, arguably easier networking models, and better out-of-the-box monitoring. GPU node pools, however, sometimes lag behind AWS in terms of instance variety.

Both are good enough for most cases, but the deciding factor is usually where your data already sits. Moving terabytes of embeddings across clouds just to save a few cents per GPU hour is rarely a net win.
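The data-gravity argument is easy to check with back-of-the-envelope arithmetic. The sketch below computes how many GPU-hours you would need to run before a cheaper GPU rate pays back a one-time data transfer. Every number in the usage comment is a placeholder assumption, not a quoted price. Substitute your own egress rate and price difference.

```python
def break_even_gpu_hours(data_tb, egress_usd_per_gb, savings_usd_per_gpu_hour):
    """GPU-hours needed before per-hour savings cover a one-time data move."""
    if savings_usd_per_gpu_hour <= 0:
        raise ValueError("there is no break-even without a positive saving")
    transfer_cost_usd = data_tb * 1000 * egress_usd_per_gb  # 1 TB = 1000 GB
    return transfer_cost_usd / savings_usd_per_gpu_hour


# Placeholder inputs: 5 TB of embeddings, $0.09/GB egress, $0.10/hour saved.
# That works out to roughly 4,500 GPU-hours before the move pays for itself,
# ignoring re-indexing time and ongoing cross-cloud traffic.
hours = break_even_gpu_hours(5, 0.09, 0.10)
```

The calculation covers only the one-time transfer. Recurring sync traffic would push the break-even point further out.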

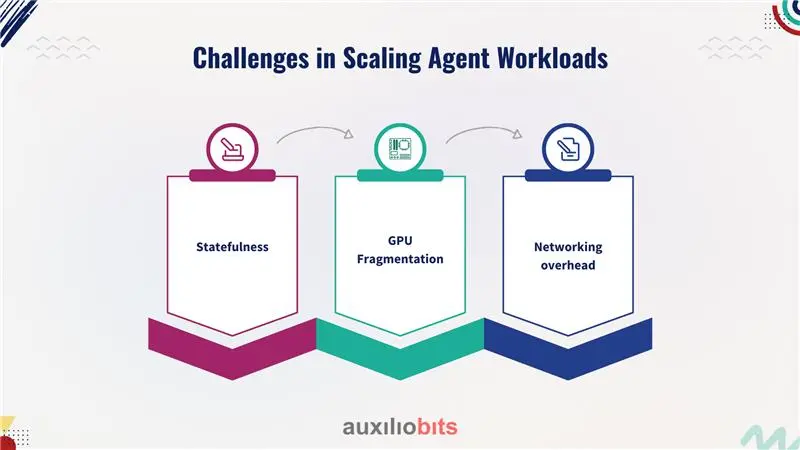

Challenges in Scaling Agent Workloads

This is where reality bites. Scaling agents isn’t the same as scaling web servers. Some differences:

- Statefulness: A reasoning agent may need to hold a multi-step plan in memory. Stateless pods aren’t enough; you need sticky sessions or externalized state (Redis, vector databases, etc.).

- GPU Fragmentation: A 40GB GPU might be overkill for a small inference agent, but Kubernetes schedules GPUs as whole devices and doesn’t natively split one into fractional units. NVIDIA’s Multi-Instance GPU (MIG) helps on GPUs that support it, but configuration isn’t trivial.

- Networking overhead: Agents often talk to each other. If you’re routing every message through a load balancer, expect latency spikes. Sidecar service meshes (Istio, Linkerd) can mitigate, but they add complexity.

This is why “autoscaling agents” can’t just be a checkbox in the Kubernetes dashboard. It requires modeling the workload profile: are these long-running conversational flows? Short, batch-like inference jobs? Or hybrid workflows that blend both?
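The statefulness point is the one most amenable to a small sketch. The idea is that the agent's plan progress lives in an external store, so any replica can pick up the next step after a restart or reschedule. Everything named here (`StateStore`, `InMemoryStore`, `run_next_step`, the `agent:<id>` key format) is hypothetical. The in-memory store only stands in for something like Redis, whose Python client exposes similar `get` and `set` calls.

```python
import json
from typing import Optional, Protocol


class StateStore(Protocol):
    """Minimal key-value interface an external store would need to offer."""
    def get(self, key: str) -> Optional[str]: ...
    def set(self, key: str, value: str) -> None: ...


class InMemoryStore:
    """Stand-in for an external store; real deployments would not use this."""
    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value):
        self._data[key] = value


def run_next_step(store, session_id, plan):
    """Run one step of `plan` and persist progress under the session key."""
    key = f"agent:{session_id}"
    raw = store.get(key)
    state = json.loads(raw) if raw else {"next": 0, "done": []}
    if state["next"] < len(plan):
        # A real agent would do the reasoning or tool call here.
        state["done"].append(plan[state["next"]])
        state["next"] += 1
        store.set(key, json.dumps(state))
    return state


store = InMemoryStore()
plan = ["fetch prices", "score sentiment", "summarize"]
run_next_step(store, "s1", plan)           # handled by "replica A"
state = run_next_step(store, "s1", plan)   # could be handled by "replica B"
```

With state handled this way, the pods themselves stay stateless, which is the condition under which Horizontal Pod Autoscaling is workable.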

Observability and Cost Control

Two hard truths:

- Agents leak resources if you don’t watch them. GPU pods can sit idle for hours when a reasoning loop crashes halfway but the container stays alive.

- Cloud GPU pricing is ruthless. A single p4d instance in AWS runs north of $30/hour. Leave three idle over a weekend, and you’ve burnt through someone’s travel budget.
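The weekend scenario works out as follows. The sketch uses $30/hour as a floor, since the rate quoted above is "north of" that, and assumes a 48-hour weekend.

```python
def idle_cost(instances, hourly_usd, hours):
    """Cost of instances that are running but doing no useful work."""
    return instances * hourly_usd * hours


WEEKEND_HOURS = 48  # assumption: Saturday plus Sunday

# Lower bound: the real hourly rate is above $30.
print(idle_cost(3, 30.0, WEEKEND_HOURS))  # prints 4320.0
```

That is at least $4,320 for one forgotten weekend, before storage or data transfer.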

The practical response:

- Use Prometheus and Grafana dashboards tied into GPU Operator metrics.

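- Set time-to-live (TTL) limits on short-lived agents, such as run deadlines and automatic cleanup after completion, so that hung or finished pods don’t linger.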

- Tag workloads aggressively; finance will eventually ask which team is burning compute.

- Run mixed node groups: not everything needs a GPU, and shifting lightweight agents to CPU nodes can halve costs.
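The TTL and tagging items can be combined in one manifest. The sketch below assumes short-lived agents run as Kubernetes Jobs. `activeDeadlineSeconds` caps how long a run may last, and `ttlSecondsAfterFinished` removes the Job once it is done. The helper name `agent_job`, the `team` label key, and the default durations are illustrative choices.

```python
import json


def agent_job(name, image, team, ttl_seconds=300, deadline_seconds=3600):
    """Build a Job manifest for a short-lived agent with cleanup and cost labels."""
    labels = {"app.kubernetes.io/name": name, "team": team}
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Delete the Job and its pods this long after it finishes.
            "ttlSecondsAfterFinished": ttl_seconds,
            # Terminate the run if it is still active after this long,
            # which covers a hung loop inside a live container.
            "activeDeadlineSeconds": deadline_seconds,
            "backoffLimit": 0,
            "template": {
                # Labels on the pod template make pod-level metrics attributable.
                "metadata": {"labels": labels},
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{"name": "agent", "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image and team names.
    print(json.dumps(agent_job("pricing-sim", "registry.example.com/sim:1.0", "forecasting"), indent=2))
```

Putting the labels on both the Job and its pod template means dashboards and cost reports can group by team without extra lookups.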

Case Example: Multi-Agent Simulation for Retail Forecasting

A retail analytics firm wanted to run 200+ cooperative agents that simulate demand under various pricing strategies. Some agents needed GPU inference (customer sentiment analysis via fine-tuned LLMs), while others ran lightweight statistical models.

- Containerization: Each agent type was packaged with its dependencies, avoiding Python conflicts.

- K8s orchestration: Agents were deployed with CRDs defining agent roles and lifespans.

- GPU Operator: The operator ensured that the GPU-enabled agents ran without driver drift issues.

- EKS node groups: Nodes were split between GPU-heavy and CPU-only workloads, autoscaling independently.

- Outcome: 35% lower compute costs versus the previous VM-based setup, faster deployment cycles, and—perhaps most critically—fewer “it works on my machine” complaints.

Pitfalls Nobody Tells You About

- GPU scarcity: During high-demand seasons (think Q4 holiday rush), even managed K8s may fail to provision GPU nodes quickly. Pre-warming is essential.

- Startup time: Spinning up a new GPU node in AKS can take 5–10 minutes. Not great for latency-sensitive workloads.

- Security posture: Exposing agents via load balancers without strict network policies is asking for trouble. Sidecar proxies are extra work but worth it.

- Human error: Ironically, the biggest failure mode isn’t Kubernetes itself but engineers forgetting to set resource limits. One runaway pod can starve others.
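The human-error pitfall is cheap to guard against with a pre-deploy check. The sketch below scans a pod spec, as a parsed dict, for containers that lack CPU or memory limits. The function name and the choice of required resources are assumptions. In a real cluster the same rule could also be enforced by admission policy.

```python
def containers_missing_limits(pod_spec, required=("cpu", "memory")):
    """Return {container name: [resources without a limit]} for a pod spec."""
    missing = {}
    for container in pod_spec.get("containers", []):
        limits = container.get("resources", {}).get("limits", {})
        absent = [resource for resource in required if resource not in limits]
        if absent:
            missing[container["name"]] = absent
    return missing


# Hypothetical pod spec with one well-behaved and one unbounded container.
spec = {
    "containers": [
        {"name": "agent", "resources": {"limits": {"cpu": "2", "memory": "4Gi"}}},
        {"name": "sidecar", "resources": {"limits": {"cpu": "500m"}}},
        {"name": "runaway"},
    ]
}
report = containers_missing_limits(spec)
```

Failing a CI step when the report is non-empty catches the problem before a runaway pod reaches a shared node.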

Containers + K8s Are Necessary, but Not Sufficient

Containers and Kubernetes, even with GPU Operator, won’t magically give you scalable agent orchestration. They give you a substrate. The actual scalability comes from workload-aware design: splitting inference from reasoning, externalizing state, and treating GPUs as precious rather than infinite.

If you think about it, Kubernetes in this context is like electrical wiring. It matters enormously when it fails, but when it works, the real differentiation comes from what you plug into it.

Closing Thought

The intersection of containers, Kubernetes, GPU scheduling, and managed services is where AI agents move from interesting prototypes to systems you can trust in production. It’s not glamorous work—setting up node pools, debugging GPU telemetry, or tuning autoscalers rarely makes headlines. Yet without that infrastructure scaffolding, the grander vision of scalable, cooperative AI agents collapses into brittle demos.

If there’s one practical takeaway, it’s this: treat orchestration not as an afterthought but as a design constraint from the start. Agents that can’t scale cleanly in Kubernetes won’t scale cleanly anywhere else.