Key Takeaways

- Autonomous call agents extend beyond summarization by extracting structured context and triggering definitive post-call actions.

- Policy functions paired with LLM state extraction provide far more stable behavior than using language models for downstream decision-making.

- Agent performance depends heavily on the precision of the internal state schema, not on the size of the base model.

- Incremental, architecture-driven scale (from monolithic to modular) keeps the system manageable and avoids early-stage complexity traps.

- System integration and persistent memory ultimately drive impact more than “accuracy” of the summary itself.

Call summarization used to be treated as an afterthought in enterprise workflows. Most organizations viewed it simply as documentation hygiene—something you did because you had to. In practice, it’s one of the most expensive hidden steps in the post-call lifecycle. A single sales engineer finishing a deep-dive discovery call spends another fifteen minutes rewriting handwritten notes, translating spoken agreements into CRM updates, and triggering next-step reminders. Multiply that across a 50-person team operating five calls a day, and you end up with over 60 hours per day spent on work that wasn’t even part of the conversation itself.

The push toward autonomous agents started when organizations realized that summarization—when modeled correctly—is not just a content generation task but a decision-making moment. A real enterprise agent isn’t just providing a TL;DR of the call. It’s evaluating the context, understanding the internal systems where updates are needed, and taking autonomous steps on behalf of the human.

Also read: How Autonomous Agents Interact with Legacy Systems via Voice

Why Classic Summarization Tools Fall Flat

There’s a reason most transcription platforms barely improved operational KPIs. Even the “smart” ones that extract labeled entities, intention tags, or simplified bullet points still force the human to do the heavy lifting afterward. They’re little more than fancy repackaging layers.

Some of the failure modes:

- The “summary” is so generic that users copy/paste it into an email and rewrite it entirely.

- Tasks suggested by the system are disconnected from internal terminology (“follow up regarding widget integration”, ≠ “open integration backlog subtask in Jira under EPIC-331”).

- Over-reliance on confidence scores—the system refuses to take action unless it’s 95% certain, so it ends up not taking action at all.

That’s because those older tools are fundamentally passive. They process information, but they don’t orchestrate anything.

What Changes with Autonomous Call-Follow-Up Agents

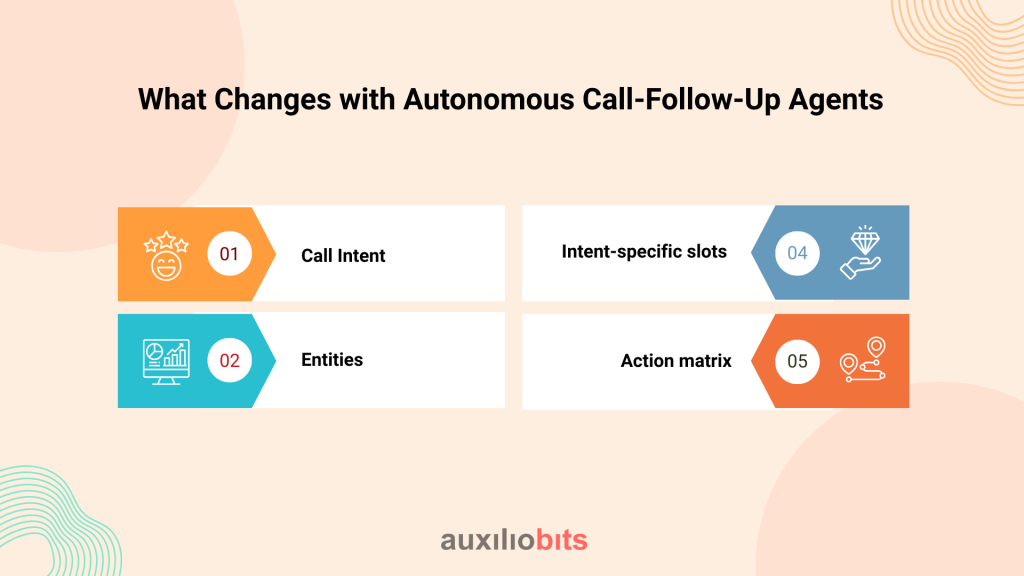

There is no denying that autonomous agents don’t treat summarization as an end goal. It’s only a state-generation step in a broader orchestration workflow. The agent’s real job is to maintain a continuously updated state based on the call, then trigger appropriate downstream actions.

During the call, the agent listens and builds a structured internal context. This isn’t just one long vector embedding. It typically includes:

- Call Intent → (e.g., renewal check-in, solution discussion, troubleshooting)

- Entities → customer name, product names, feature identifiers

- Intent-specific slots → renewal risk, discount mentioned, timeline (e.g., “within two weeks”), follow-up requirements

- Action matrix → mapped actions and relevant systems (CRM update, ServiceNow ticket open, KB draft, etc.)

Once the call ends, the agent evaluates this state against a policy. Here’s where conventional LLM use alone isn’t enough. Most enterprises embed business logic in policy functions—small pieces of deterministic logic that define when the agent should update which system. Think of it as a lightweight guardrail:

“If the call type = renewal and renewal_risk > 0.3 and timeline < 30 days → update the CRM stage and assign escalation to the CSM lead.”

The agent uses the language model to build a state. It uses policy functions to decide whether and how to act.

Technical Architecture

In a typical Azure + OpenAI deployment, the call audio stream is captured in real time using Azure Communication Services. Instead of waiting until the end to process, the agent performs incremental transcription, chunking the audio into small windows (usually 10–15 seconds). Each window is sent through a whisper-based model to generate partial transcripts. Those get appended to the agent’s state memory.

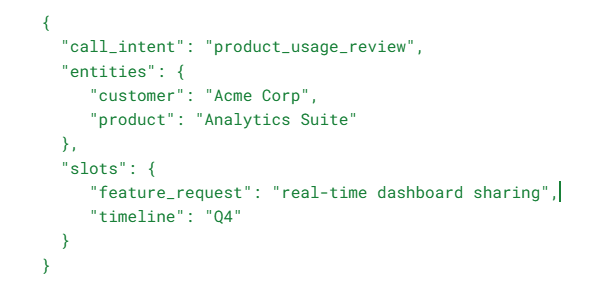

Parallel to the transcription pipeline, we run a context parser. This is not a generic GPT prompt—it’s a fine-tuned GPT-4 model wrapped with a constrained JSON schema. That way, every output is a structured JSON document like:

Once the full call transcript is complete (or the call disconnects), a policy engine runs. Most teams treat this as a rule-based microservice, not an LLM. The reason is simple: tasks such as “if escalation = yes, then create a Jira ticket” shouldn’t be probabilistic.

Typical policy engine stack:

- Azure Function or small Container App

- Stateless, triggered by a new “state” object arriving in a blob or service bus.

- Executes a decision tree (usually less than 30 lines of code)

- Calls internal APIs or Service Bus to raise tickets/schedule actions

The LLM is really only used for the state extraction and content drafting parts. The decision and execution parts are deterministic. This distinction is crucial. Companies that let the LLM decide whether to send an email end up with unpredictable behavior. Companies that separate “text generation” from “policy logic” have far more stable deployments.

The follow-up email drafting is handled by a second GPT-4 invocation, but it uses the stored state (plus customer tone preferences) rather than the raw transcript. That’s why the output sounds more human and less robotic. The model uses phrases that reflect how that company normally speaks (“Quick nudge,” “Just following up on…”), because the template injection happens before the LLM call.

Real-World Nuance and Field-Level Observations

- If you don’t maintain persistent agent memory across calls, the agent will repeatedly send intro emails as if it’s the first interaction. Companies often forget to store past actions in a retrievable knowledge base—the agent ends up acting like it’s brand new each time.

- Internal system throttling becomes a genuine issue. In one deployment, the agent started creating Jira tickets within 5 seconds post-call. Jira’s API rate limits caused delays and created false “failed” logs. We ended up adding a 10-second buffer and batching.

- Agents sometimes over-escalate. Early versions would escalate after hearing phrases like “this is frustrating,” even if the customer later laughed and said, “but it’s not a blocker.” Fine-tuning on multi-turn context helps, but it still misses sarcasm. In practice, we added a rule: only treat frustration as escalation if followed by an explicit request (“So can you escalate this?”).

When It Works Exceptionally Well

The architecture shines in repeatable, standardized scenarios, such as:

- Renewals and quarterly business reviews

- Technical “check-in” calls where the user walks through product usage.

- Support calls with predefined severity levels (“P1”, “P2”, etc.)

In these situations, the agent can rely on a predictable mapping between what is said and what needs to happen. The more the organization codifies internal processes (SOPs, escalation trees, approval hierarchies), the easier it is to transform them into policy functions.

Where It Still Fails or Needs Human Intervention

- Non-linear calls where the customer jumps between unrelated subjects (“Quick product question → pricing update → case escalation → new feature suggestion”). The agent struggles to determine which thread should drive the main autopilot response.

- Heavily negotiated commercial calls. Discount percentages or contractual clauses often require human negotiation—an agent generating a follow-up with discount details can jeopardize an ongoing negotiation if phrased incorrectly.

- Cross-functional coordination. If the same call involves Sales, Legal, and Product, the agent might create a follow-up action for one department and forget the other two. That’s because most models still bucket the “call intent” into a single high-level category.

An Opinionated Note on Multi-Agent Designs

Multi-agent hype is everywhere. In practice, most companies don’t need five agents running in parallel. You can craft a single agent with a robust internal state and a well-defined policy layer that handles 80% of use cases.

Only migrate to a hierarchical structure when:

- The number of distinct downstream systems becomes large (CRM + Service Desk + KB + Contract repo)

- Actions become conditional, e.g., only log a ticket if the call is support-related and the feature mentioned belongs to the “Platform Team”.

- You start reusing the same action module across different agents (e.g., the same email-drafting agent used by both customer success and support agents).

A pragmatic progression:

- Start with monolithic agent (transcription → state → policy → actions)

- Identify high-complexity sub-actions (e.g., legal review drafting)

- Abstract those into separate agents.

- Expose them as callable services to the main agent

Over-engineering from day one creates fragility—the agent ecosystem ends up spending more time coordinating with itself than actually executing tasks.

Conclusion

There’s a tendency to think of call summarization agents as a “nice to have” layer—something to help with note-taking so humans can focus on the real work. That’s backwards. In practice, the minute-by-minute progression of a call is already the real work. It’s where commitments are made, requirements clarified, and deadlines agreed. The post-call activities are simply the continuation of that workflow. Treating them as separate is what created the efficiency gap in the first place.

Autonomous agents finally bring those two moments back together. A well-architected summarization agent doesn’t produce a prettier transcript; it acts as part of the conversation continuum. It listens, interprets, and then continues the task without dropping context. That’s not convenience. It’s operational consistency—and in enterprise environments, operational consistency is what separates scalable teams from reactive ones.