Key Takeaways

- Automation isn’t static—once deployed, workflows immediately start drifting as policies, systems, and inputs change.

- AI reduces fragility by handling messy inputs, guiding decisions in gray zones, and fine-tuning processes in real time.

- Continuous optimization is a cycle of instrumentation, learning, adjustment, and oversight—not a one-time event.

- Governance and explainability matter as much as performance; managers must trust why AI makes certain adjustments.

- Start where it hurts most—focus AI on exception-heavy workflows, keep humans in the loop, and ensure transparency to build adoption.

A strange thing happens once you’ve automated a process: it immediately starts aging.

What looked solid in testing starts to weaken the moment policies shift, an ERP screen gets patched, or someone upstream changes how they key in data. The workflow isn’t broken; it’s just out of sync with reality.

This is where AI has quietly taken on an important role: not as a replacement for automation, but as a way of keeping it working when the business refuses to sit still.

Why Static Automation Doesn’t Last

The first generation of RPA promised stability: teach a bot the keystrokes, and it’ll never complain, never ask for a raise. True enough, until you realize the world around the bot changes faster than the bot itself.

- Banks update their KYC forms.

- Insurers add one more compliance field.

- Procurement adds a new exception to its approval chain because of “that one audit.”

Some teams spend months building bots, only to spend even more time babysitting them as the rules evolve. That’s not optimization; it’s firefighting.

Where AI Plays a Role

There’s a misconception that AI can magically make automation “self-healing.” It can’t. What it can do is reduce the fragility in three areas:

- Messy inputs. Most workflows die at the intake stage. AI can normalize invoices, recognize documents, or detect outliers before the process collapses downstream.

- Decision gray zones. Rules are good for black-and-white logic. AI steps in when the decision requires probabilities—fraud likelihood, credit risk, or customer sentiment.

- Tuning over time. Instead of waiting for quarterly reviews, AI can adjust thresholds daily, rebalancing workloads, skipping redundant approvals, or rerouting exceptions.
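
As a rough illustration of the gray-zone idea, the sketch below routes a case on a model's risk score: clear cases are handled automatically, and only the ambiguous middle band goes to a person. The names `Thresholds` and `route_case`, the route labels, and the 0.2 / 0.8 bounds are all hypothetical, chosen for the example rather than taken from any product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical bounds; real values would be set and reviewed by ops teams.
    auto_approve_below: float = 0.2
    escalate_above: float = 0.8


def route_case(risk_score: float, t: Thresholds = Thresholds()) -> str:
    """Route a case using a model's risk score in the range [0, 1]."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score < t.auto_approve_below:
        return "auto_approve"       # clearly low risk: plain rules can finish the job
    if risk_score > t.escalate_above:
        return "block_and_escalate"  # clearly high risk: stop and escalate
    return "human_review"           # gray zone: a person decides


print(route_case(0.05))  # auto_approve
print(route_case(0.50))  # human_review
print(route_case(0.95))  # block_and_escalate
```

Tuning over time then amounts to proposing new values for the two bounds, which is easier to review and audit than a change to the routing logic itself.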

But let’s not kid ourselves—AI is not rewriting your workflows in the middle of the night. Most companies wisely fence it in. Nobody wants to explain to regulators that a model silently rewrote the approval path.

A Case Study

A large hospital had automated its eligibility checks. Initially, bots scraped payer portals, and every time a payer tweaked its portal, the bots broke. Staff called it the “Friday 3 a.m. problem.”

They added AI vision models. Suddenly, the bots no longer depended on exact XPaths or pixel-perfect layouts; they were recognizing “this is a policy number field, regardless of layout.” On top of that, a predictive model flagged which patients were most likely to fail eligibility based on past patterns. Staff reviewed those before the bot even tried. The net effect wasn’t glamorous. It just meant fewer nighttime calls to the automation team. That’s optimization in the trenches.

Similar stories repeat in other industries:

- Insurance: AI models flag suspicious claims before automation routes them downstream.

- Finance: AI predicts which invoices will likely bounce due to vendor mismatches.

- Manufacturing: AI models detect anomalies in machine logs before RPA attempts standard data entry.

How Continuous Optimization Actually Works

This isn’t black magic—it’s plumbing. The cycle looks like this:

- Instrumentation. Capture detailed logs: exception reasons, latency, and handoff frequency. If you don’t measure it, AI has nothing to chew on.

- Learning. Models look for patterns: “85% of delays come from two vendors” or “approvals take 40% longer in branch X.”

- Adjustment. The system proposes tweaks—lowering thresholds, skipping a step, reordering queues.

- Oversight. Humans decide what sticks.

Think autopilot. The plane doesn’t fly itself; it trims constantly so the pilot doesn’t have to.
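
The four steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: the log records, the vendor names, the 40% share cutoff, and the `learn`, `propose`, and `oversee` helpers are all hypothetical, and the "learning" step is a simple count standing in for a real model.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Proposal:
    description: str
    approved: bool = False


def learn(exception_log: list[dict]) -> Counter:
    """Learning: count exceptions per vendor from instrumented log records."""
    return Counter(record["vendor"] for record in exception_log)


def propose(counts: Counter, share_cutoff: float = 0.4) -> list[Proposal]:
    """Adjustment: propose (never apply) a tweak for vendors causing a large share of exceptions."""
    total = sum(counts.values())
    if total == 0:
        return []
    return [
        Proposal(f"Route {vendor} invoices to a pre-validation queue")
        for vendor, n in counts.most_common()
        if n / total >= share_cutoff
    ]


def oversee(proposals: list[Proposal], approve) -> list[Proposal]:
    """Oversight: a human-supplied callback decides what sticks."""
    for p in proposals:
        p.approved = bool(approve(p))
    return [p for p in proposals if p.approved]


# Instrumentation: hypothetical exception records captured from the workflow.
log = [
    {"vendor": "acme", "reason": "missing_po"},
    {"vendor": "acme", "reason": "missing_po"},
    {"vendor": "acme", "reason": "amount_mismatch"},
    {"vendor": "globex", "reason": "missing_po"},
    {"vendor": "initech", "reason": "duplicate"},
]

proposals = propose(learn(log))
accepted = oversee(proposals, approve=lambda p: True)  # stand-in for a human decision
for p in accepted:
    print(p.description)  # Route acme invoices to a pre-validation queue
```

The design point is that `propose` only returns suggestions; nothing changes in the workflow until `oversee` has recorded an approval.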

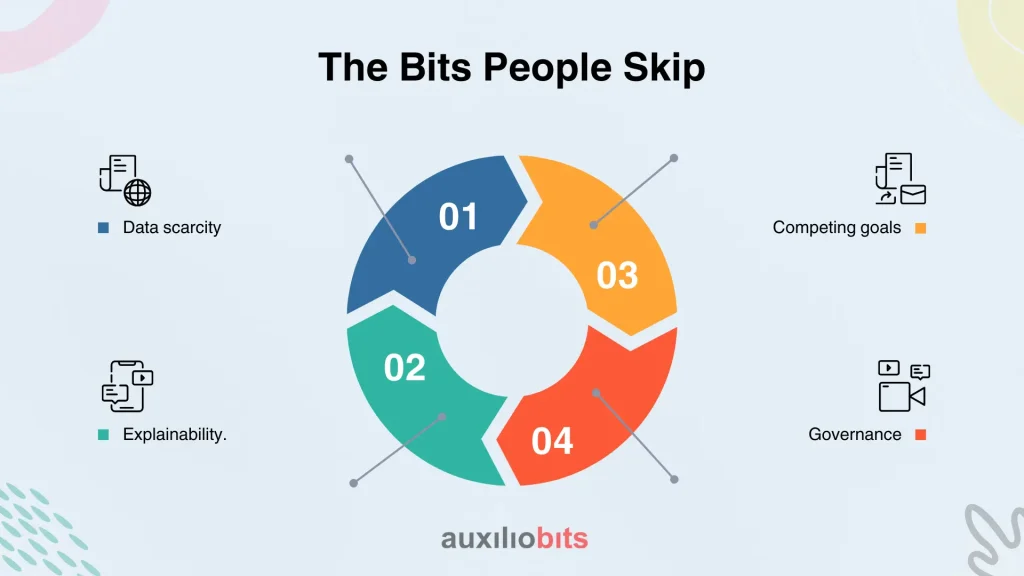

The Bits People Skip

Every glossy vendor deck skips the parts that actually matter:

- Data scarcity. Low-volume processes simply don’t generate enough examples for models to learn.

- Explainability. If the AI “skips” an approval, managers want to know why. Shrugging isn’t an option.

- Competing goals. Optimize for speed, and you risk skipping compliance. Optimize for compliance, and your cycle time balloons.

- Governance. Ironically, the more “self-optimizing” a system is, the more humans end up supervising it. One CIO called it the “babysitter paradox.”

How to Spot Real Issues

When auditing automation programs, look for a few telltale signs that the optimization is real:

- Ops teams can explain why thresholds shifted last month. If nobody knows, that’s a red flag.

- Exception categories shrink, not just move around. If errors aren’t disappearing, optimization isn’t real.

- Governance treats models like software assets: versioned, tested, and retired.

- Most telling—line managers trust the system enough to let it make small changes without panicking.
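
The "shrink, not just move around" check lends itself to a quick test. The sketch below compares exception counts by category across two periods and only reports improvement when total volume falls; the function name, the category labels, the counts, and the 10% minimum drop are hypothetical.

```python
def exceptions_really_shrank(
    before: dict[str, int], after: dict[str, int], min_drop: float = 0.1
) -> bool:
    """Return True only if total exception volume fell by at least `min_drop` (a fraction)."""
    total_before = sum(before.values())
    total_after = sum(after.values())
    if total_before == 0:
        return False  # no baseline volume, so no evidence of improvement
    return (total_before - total_after) / total_before >= min_drop


last_quarter = {"missing_po": 40, "amount_mismatch": 30}

# Errors relabeled under a new category: the total barely changes (70 -> 68).
moved_around = {"missing_po": 10, "manual_review": 58}
print(exceptions_really_shrank(last_quarter, moved_around))  # False

# Errors actually removed: the total drops from 70 to 40.
shrunk = {"missing_po": 15, "amount_mismatch": 25}
print(exceptions_really_shrank(last_quarter, shrunk))  # True
```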

Getting Started

Organizations often wonder, “Where should we even start?” The answer: where it already hurts.

- Begin with a workflow drowning in exceptions, not the smoothest one.

- Capture rich logs before you ever talk about models.

- Layer AI into gray zones, not obvious yes/no decisions.

- Keep a dashboard visible so humans can override. Transparency keeps trust intact.

Practical examples:

- In accounts payable, start with invoice exceptions, not clean invoices.

- In HR onboarding, focus AI on mismatched documentation, not standard background checks.

- In the supply chain, deploy AI on unpredictable shipment delays, not routine order entries.
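
One way to find "where it already hurts" is to rank workflows by exception rate using the logs you already capture. The sketch below assumes a simple mapping of workflow name to (exceptions, total runs); the workflow names and figures are made up for illustration.

```python
def rank_by_exception_rate(stats: dict[str, tuple[int, int]]) -> list[tuple[str, float]]:
    """Map of workflow -> (exceptions, total_runs); returns highest exception rate first."""
    rates = [
        (name, exceptions / total)
        for name, (exceptions, total) in stats.items()
        if total > 0  # skip workflows with no recorded runs
    ]
    return sorted(rates, key=lambda item: item[1], reverse=True)


# Hypothetical figures pulled from workflow logs.
stats = {
    "order_entry": (12, 6000),
    "invoice_processing": (420, 3000),
    "hr_onboarding": (30, 400),
}

ranking = rank_by_exception_rate(stats)
print(ranking[0][0])  # invoice_processing
```

A ranking like this is only a starting point; volume, business impact, and the data-scarcity caveat above still matter when picking the first candidate.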

Conclusion

Continuous optimization isn’t about making automation glamorous—it’s about keeping it relevant. Static bots may get the job done on day one, but without AI, they erode under the weight of change. The point of AI here is not to take over but to make automation less brittle: normalizing messy inputs, guiding decisions in ambiguous spaces, and tuning processes before they drift too far out of alignment.

The real maturity test isn’t whether a company has “AI-powered automation” on a slide deck—it’s whether operations teams sleep better at night, whether exceptions are shrinking instead of multiplying, and whether managers actually trust the system to make small calls on their behalf. The organizations that win aren’t the ones with the flashiest bots, but the ones that treat optimization as a living cycle—instrument, learn, adjust, oversee—on repeat.

In short, automation gets you efficiency once; AI keeps that efficiency alive.