Key Takeaways

- Memory is an operational backbone, not a bonus feature. In enterprise workflows, it enables continuity across multi-step, non-linear tasks.

- Different memory types serve different purposes. Session, persistent, process-tied, and historical recall each have distinct benefits and risks.

- Over-remembering can be more damaging than forgetting. Stale, irrelevant, or intrusive data recall erodes trust and can create compliance risks.

- Integration with live systems is non-negotiable. Memory without authoritative data sources risks amplifying outdated or incorrect information.

- Governance will shape the future of AI memory. The retention, expiry, and legal ownership of stored context are becoming as important as accuracy.

In most boardroom discussions about AI chat agents, the spotlight falls on integrations, speed, and model accuracy. Yet, in real deployments, the thing that determines whether users embrace an agent or quietly stop using it isn’t always the accuracy score—it’s memory.

Not memory in the hardware sense, but the kind that lets the agent remember what you told it five minutes ago, last week, or even last quarter, and bring that context into the current exchange. Without it, even the most advanced model can feel like an intern with amnesia: competent in bursts, but exhausting to work with.

You’ve probably seen the difference yourself.

Without memory:

“What’s the last invoice amount from Orion Manufacturing?”

— “$46,210.”

“Okay, flag it for review.”

— “Which invoice?”

With memory:

“What’s the last invoice amount from Orion Manufacturing?”

— “$46,210.”

“Okay, flag it for review.”

— “Flagging invoice #88214 from Orion Manufacturing for review.”

That’s only two lines of dialogue, but multiply that over a thousand conversations a week in a procurement team, and you start to see the operational impact.


Why Enterprises Lean Heavily on Memory

Consumer-facing bots might remember your coffee order or your kid’s name—nice, but replaceable. In enterprise contexts, memory often decides whether the automation sticks or gets sidelined.

A few realities make it critical:

- Work isn’t linear. A user might start an approval workflow, switch to checking a customer’s contract terms, then circle back to the approval half an hour later.

- Processes are multi-step and brittle. Without context carryover, a dropped variable forces the user to repeat steps or, worse, redo the whole task.

- Roles and permissions matter. If the agent recalls you’re a regional finance lead, it knows which numbers you can see without asking again.

- Repetition erodes trust. If users have to re-explain the same request three times in the same session, they stop believing the system is intelligent.

In short, conversational memory isn’t a luxury—it’s the scaffolding that makes complex, multi-turn workflows possible without overwhelming the human on the other side.

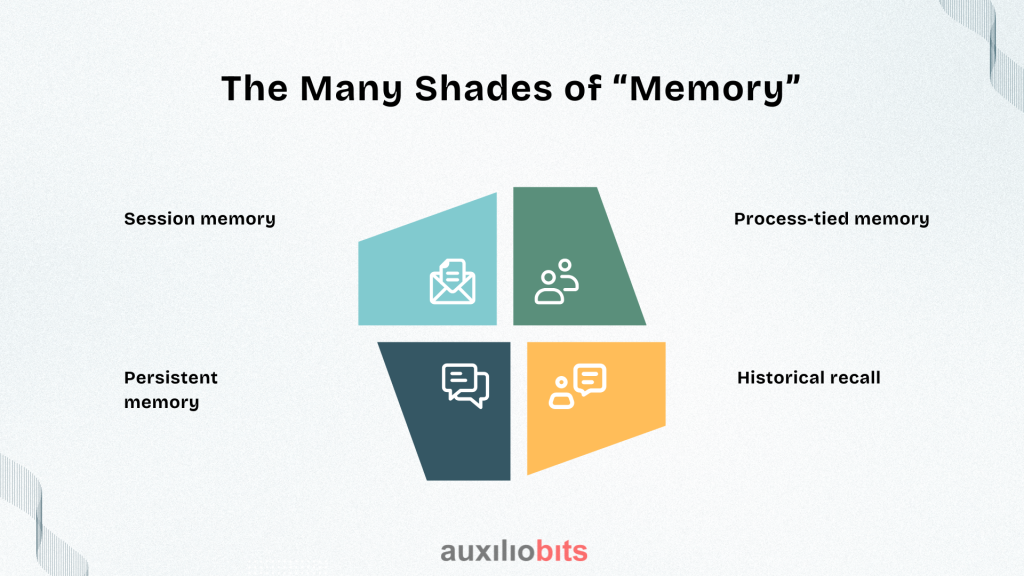

The Many Shades of “Memory”

People sometimes talk about AI memory as if it were one feature. In practice, it’s several, and they don’t all serve the same purpose.

1. Session memory

Tracks what’s going on in the current conversation. It’s why the agent can respond to “approve it” without asking “approve what?” again.

- Good for: keeping interactions smooth and natural.

- Drawback: gone when the session ends.
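As a rough illustration of session memory, the sketch below keeps the most recently mentioned record in a per-conversation object and uses it to resolve a bare "it". The class name `SessionMemory`, its methods, and the naive pronoun check are all assumptions made for this example; a production agent would rely on the model's own context or a proper coreference step.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SessionMemory:
    """Hypothetical per-conversation context; discarded when the session ends."""
    last_entity: Optional[dict] = None          # most recently mentioned record
    turns: list = field(default_factory=list)   # raw utterances, kept for this session only

    def remember(self, entity: dict) -> None:
        self.last_entity = entity

    def resolve(self, utterance: str) -> Optional[dict]:
        """Return the record a bare pronoun refers to, if one is in context."""
        self.turns.append(utterance)
        words = utterance.lower().replace(".", " ").replace(",", " ").split()
        if "it" in words or "that" in words:
            return self.last_entity
        return None


session = SessionMemory()
# The agent has just answered a question about this invoice.
session.remember({"type": "invoice", "id": "88214", "vendor": "Orion Manufacturing"})
target = session.resolve("Okay, flag it for review.")
```

Here `target` is the Orion Manufacturing invoice, so the agent can act without asking "Which invoice?". A fresh `SessionMemory()` has nothing to resolve against, which is exactly the stateless behavior from the first dialogue.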

2. Persistent memory

Holds facts across days, weeks, or months—like a client’s preferred escalation contact or an employee’s laptop model.

- Good for: avoiding repetitive Q&A in recurring workflows.

- Drawback: requires rules about updates, deletion, and user consent.
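The update, deletion, and consent rules mentioned above can be sketched with a small in-process store. Everything here (`PersistentMemory`, `grant_consent`, `forget_user`) is a hypothetical name chosen for illustration, and a real deployment would sit on a database with access controls rather than a Python dictionary.

```python
from datetime import datetime, timezone
from typing import Any, Optional


class PersistentMemory:
    """Toy long-lived fact store with consent and deletion hooks (illustrative only)."""

    def __init__(self) -> None:
        self._consented: set = set()
        self._facts: dict = {}  # (user, key) -> (value, last_updated)

    def grant_consent(self, user: str) -> None:
        self._consented.add(user)

    def put(self, user: str, key: str, value: Any) -> bool:
        """Store or update a fact; refuse if the user has not consented."""
        if user not in self._consented:
            return False
        self._facts[(user, key)] = (value, datetime.now(timezone.utc))
        return True

    def get(self, user: str, key: str) -> Optional[Any]:
        entry = self._facts.get((user, key))
        return entry[0] if entry else None

    def forget_user(self, user: str) -> int:
        """Delete every fact for a user and withdraw consent; return how many were removed."""
        doomed = [k for k in self._facts if k[0] == user]
        for k in doomed:
            del self._facts[k]
        self._consented.discard(user)
        return len(doomed)
```

Storing a timestamp with each fact is a deliberate choice: it gives later expiry or review rules something to work with, even though this sketch does not enforce any.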

3. Process-tied memory

Remembers only the variables tied to a specific workflow in progress. For example, a vendor onboarding workflow might “remember” that the tax form has already been validated.

- Good for: ensuring continuity without clutter from unrelated past data.

- Drawback: demands tight integration with backend systems.
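One way to picture process-tied memory is a state object keyed by workflow ID that is dropped when the workflow closes. The sketch below assumes a three-step vendor onboarding flow; the step names, the `ProcessMemory` class, and the ID `vendor-001` are invented for the example and stand in for whatever the backend system actually tracks.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class WorkflowState:
    """Variables and progress for one workflow instance, nothing else."""
    variables: Dict[str, Any] = field(default_factory=dict)
    completed_steps: List[str] = field(default_factory=list)


class ProcessMemory:
    def __init__(self) -> None:
        self._active: Dict[str, WorkflowState] = {}

    def record(self, workflow_id: str, step: str, **variables: Any) -> None:
        """Mark a step done and keep the variables it produced."""
        state = self._active.setdefault(workflow_id, WorkflowState())
        if step not in state.completed_steps:
            state.completed_steps.append(step)
        state.variables.update(variables)

    def next_step(self, workflow_id: str, steps: List[str]) -> Optional[str]:
        """Return the first step not yet completed, or None when all are done."""
        state = self._active.get(workflow_id, WorkflowState())
        for step in steps:
            if step not in state.completed_steps:
                return step
        return None

    def finish(self, workflow_id: str) -> None:
        """Drop everything tied to the workflow once it closes."""
        self._active.pop(workflow_id, None)


# Hypothetical onboarding flow, used only to exercise the class.
ONBOARDING_STEPS = ["collect_tax_form", "validate_tax_form", "create_vendor_record"]
memory = ProcessMemory()
memory.record("vendor-001", "collect_tax_form", tax_form_id="W9-example")
memory.record("vendor-001", "validate_tax_form", tax_form_valid=True)
```

When the user returns, `next_step` points straight at `create_vendor_record` instead of asking for the tax form again, and `finish` ensures nothing from this workflow lingers into unrelated conversations.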

4. Historical recall

Lets you search or reference past interactions, sometimes years old, for audit or decision review.

- Good for: regulated industries and complex negotiations.

- Drawback: storage costs and retrieval latency can become real issues.

When Memory Creates Problems

It’s tempting to assume that “more memory” is always better. Reality says otherwise.

- Old data lingers. A bot that remembers the wrong contract amount can keep reusing it until someone notices.

- Overfamiliarity feels intrusive. Not every user wants a bot to recall sensitive details from months ago.

- Noise competes with the signal. If the model considers every past detail equally, it may latch onto something irrelevant.

- Legal risk creeps in. Some sectors can’t store certain interaction data at all without explicit retention approval.

A manufacturing support bot once kept ticket data for 48 hours to “save time.” During a system outage, it kept quoting yesterday’s machine status because the memory cache outranked the live feed. Support queues doubled in length before anyone spotted the issue.
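The failure in that anecdote comes down to precedence: remembered data answered first. A minimal sketch of the opposite ordering is shown below, where the live feed is always tried first and the cache is only a labeled fallback. The function `machine_status`, the `fetch_live` callable, and the use of `ConnectionError` to signal an outage are assumptions for illustration, not a description of the system in the story.

```python
from typing import Callable, Dict, Optional, Tuple


def machine_status(
    machine_id: str,
    fetch_live: Callable[[str], str],
    cache: Dict[str, Tuple[str, float]],
    max_age_seconds: float,
    now: float,
) -> Dict[str, Optional[object]]:
    """Prefer the live feed; fall back to cache and say so explicitly."""
    try:
        status = fetch_live(machine_id)
        cache[machine_id] = (status, now)  # refresh memory from the source of truth
        return {"status": status, "source": "live", "stale": False}
    except ConnectionError:
        cached = cache.get(machine_id)
        if cached is None:
            return {"status": None, "source": "none", "stale": True}
        status, stored_at = cached
        # Flag the answer as stale once it is older than the allowed age.
        return {"status": status, "source": "cache", "stale": now - stored_at > max_age_seconds}
```

Returning `source` and `stale` alongside the value lets the agent phrase its reply honestly, for example by saying the reading is from the last successful check rather than presenting it as current.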

Designing Memory With the Business in Mind

Memory isn’t something you tack on at the end. It has to be designed alongside the workflow and compliance model.

Key starting points:

- Pinpoint high-impact use cases. In some processes, memory cuts minutes. In others, it only saves seconds—don’t overbuild.

- Map ownership and governance. Who controls what’s stored, and who can request deletion?

- Decide expiry rules up front. Session memory might vanish in minutes; compliance records might stay for years.

- Integrate deeply. Memory without access to source-of-truth systems is just a fancy note that may or may not be right.
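Expiry rules are easier to audit when they live in one declarative table rather than being scattered through code. The sketch below assumes four memory kinds and picks retention periods purely for illustration; the actual numbers are a legal and business decision, not something this example can supply.

```python
from datetime import datetime, timedelta, timezone

# Illustrative retention periods only; real values come from policy and regulation.
RETENTION = {
    "session": timedelta(minutes=30),
    "process": timedelta(days=7),
    "persistent": timedelta(days=180),
    "compliance_record": timedelta(days=365 * 7),
}


def is_expired(kind: str, stored_at: datetime, now: datetime) -> bool:
    """True once a record has outlived the retention period for its kind."""
    return now - stored_at >= RETENTION[kind]


def purge(records: list, now: datetime) -> list:
    """Return only the records that are still within their retention period."""
    return [r for r in records if not is_expired(r["kind"], r["stored_at"], now)]
```

A scheduled job could call `purge` against each store, though proving that expired data is gone from backups and logs as well is a separate problem this sketch does not address.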

Technical Building Blocks

Depending on budget, security constraints, and performance needs, different architectural choices emerge:

- Expanded context windows for immediate continuity in short conversations.

- Vector-based retrieval to pull semantically relevant snippets from older interactions.

- Key-value pairs for storing structured facts like IDs and status codes.

- RAG (retrieval-augmented generation) for pulling context only when needed, reducing noise.

- External state stores to preserve process status between sessions.

Trade-offs always exist: cost versus responsiveness, memory size versus relevance, and recall power versus privacy exposure.
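To make the vector-based retrieval idea concrete, here is a deliberately simplified sketch. It uses word counts as a stand-in for learned embeddings, so it matches on shared words rather than on meaning; real systems would use an embedding model and a vector index. The function names and the `min_score` cutoff are assumptions for this example.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, snippets: List[str], top_k: int = 2, min_score: float = 0.1) -> List[str]:
    """Return up to top_k stored snippets most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(s)), s) for s in snippets]
    # Dropping low scores keeps irrelevant memories out of the prompt.
    scored = [pair for pair in scored if pair[0] >= min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:top_k]]
```

The `min_score` threshold is where the "memory size versus relevance" trade-off shows up in practice: set it too low and noise competes with the signal, too high and useful context is never recalled.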

What Human Conversation Can Teach

We don’t store every sentence we’ve ever heard. We summarize, forget irrelevant threads, and fact-check before repeating something important. Bots that mimic this selective retention tend to work better in the enterprise.

If an AI remembers every minor correction you’ve ever made, it may try to apply them when they’re no longer relevant. If it forgets too aggressively, it risks making you repeat steps unnecessarily. The sweet spot depends on the nature of the work, the tolerance for error, and the regulatory backdrop.

A Practical Case: IT Helpdesk Automation

A global chemicals company rolled out an internal support bot for IT requests. At launch, it was stateless: every new message was treated as a brand-new request. Users hated it.

After implementing process-tied memory, the bot could:

- Skip re-verifying the same user within a 24-hour window.

- Automatically recall device details from earlier that day.

- Pick up where the last session left off if the ticket was still open.
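The first and third behaviors in that list can be sketched in a few lines. The 24-hour window comes from the case above; the class `HelpdeskMemory`, its method names, and the ticket shape are assumptions made for illustration and say nothing about how the company actually built it.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional

VERIFICATION_WINDOW = timedelta(hours=24)  # window taken from the case description


class HelpdeskMemory:
    """Hypothetical memory for an IT support bot."""

    def __init__(self) -> None:
        self._verified_at: Dict[str, datetime] = {}
        self._open_tickets: Dict[str, dict] = {}

    def mark_verified(self, user: str, now: datetime) -> None:
        self._verified_at[user] = now

    def needs_verification(self, user: str, now: datetime) -> bool:
        """True if the user was never verified or the window has elapsed."""
        last = self._verified_at.get(user)
        return last is None or now - last >= VERIFICATION_WINDOW

    def save_ticket(self, user: str, ticket: dict) -> None:
        self._open_tickets[user] = ticket

    def resume(self, user: str) -> Optional[dict]:
        """Return the user's open ticket so the bot can pick up where it left off."""
        return self._open_tickets.get(user)

    def close_ticket(self, user: str) -> None:
        self._open_tickets.pop(user, None)
```

Passing `now` in explicitly, rather than reading the clock inside the class, keeps the window logic easy to test and makes the expiry behavior visible at the call site.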

Metrics after 90 days:

- Mean resolution time dropped 25%.

- Ticket abandonment fell by 38%.

- User feedback improved from “annoying” to “actually useful” in internal surveys.

The Next Challenge: Governance

Memory is quickly moving from “nice-to-have” to a compliance conversation. Questions like these are starting to surface in board meetings:

- Who has legal ownership of stored conversation snippets?

- How do you guarantee expired data is really gone?

- What’s the protocol if memory surfaces something in a workflow where it doesn’t belong?

These aren’t hypothetical. Some financial firms have already had to shut down memory features when regulators flagged retention policies as inadequate.

Field Takeaways

- Don’t give an agent more memory than it needs for the job—it’s a liability magnet.

- Short-term context smooths conversations; long-term recall deepens efficiency, but both need boundaries.

- Memory that’s not linked to live, authoritative systems can quietly rot and spread errors.

- Governance will decide who thrives in regulated industries.

- The best deployments treat memory not as a database, but as a carefully managed workflow asset.

Final Thoughts

Conversational memory isn’t a technical checkbox; it’s a shaping force for how people experience AI in their daily work. Get it right, and the agent becomes an unobtrusive partner, one that quietly recalls what matters, drops what doesn’t, and moves the conversation forward without friction. Get it wrong, and even the smartest model will feel clumsy, repetitive, or worse, untrustworthy.

The tricky part is that “right” looks different for every organization. For a high-compliance bank, that might mean minimal memory and ironclad audit trails. For a design agency, it could mean rich recall across projects to speed up creative handoffs. The technology is flexible enough to do either. The discipline is in deciding which memories are worth keeping, for how long, and in whose hands.

In the end, the best AI agents don’t just remember—they remember with purpose. And in the enterprise, purpose beats recall every time.