Key Takeaways

- The new service mesh isn’t about traffic—it’s about intent. Traditional routing rules give way to context-aware decision flows. Agents negotiate goals, not APIs, demanding a semantic layer for trust, reasoning, and adaptability.

- Control planes are evolving into metacognitive layers. They don’t just distribute configurations; they interpret ambiguity, reason about policy, and dynamically reassign tasks—sometimes in ways even their designers didn’t foresee.

- Cognitive proxies replace static sidecars. These embedded reasoning modules enforce ethical and compliance alignment before any output leaves an agent, turning enforcement into a local, intelligent reflex rather than a centralized bottleneck.

- Failure is part of the learning curve. Early agentic meshes will break down under feedback overload, semantic drift, or over-alignment, but those failures are essential for discovering the balance between autonomy and orchestration.

- Architects must think like behavioral economists, not network admins. Designing agentic systems means managing incentives, context, and confidence levels, not just bandwidth or latency. The mesh becomes a medium of coordination between reasoning entities—effectively, a digital nervous system for enterprise cognition.

For the past decade, enterprises have been chasing abstractions—containers, orchestration, microservices, and APIs. Each promised control and composability. But as we move toward agentic infrastructure—where autonomous systems interact, negotiate, and learn—the abstractions themselves need orchestration. That’s where service mesh re-enters the picture, not as a networking sidecar anymore, but as a cognitive coordination fabric.

The old mesh was about traffic management; the new one must handle intent management.


From Microservices to Micro-Decisions

A traditional service mesh (think Istio, Linkerd, Consul) emerged to solve a simple but brutal problem: once you split your monolith into hundreds of microservices, who manages all that communication?

The mesh did:

- It handled retries, routing, and observability.

- It standardized how services talked without changing their code.

- It gave operators uniform control across a chaotic landscape.

But agentic infrastructure doesn’t just pass requests; it passes decisions. An AI agent deciding whether to trigger a workflow, access a data source, or collaborate with another agent doesn’t operate within fixed API routes. It dynamically reasons about intent, context, and policy.

The “calls” between agents are not HTTP requests; they’re negotiations.

In other words, microservices talk in verbs; agents talk in goals. That fundamentally changes what a mesh must handle.

Why Agents Need a Mesh

Imagine a large enterprise running dozens of domain-specific agents:

- A compliance-checking agent ensuring regulatory adherence.

- A financial forecasting agent predicting short-term cash flow.

- A workflow optimizer managing SLA-based routing across departments.

Each agent has autonomy. But autonomy without coordination is just anarchy with better syntax.

A service mesh provides the invisible coordination tissue—the infrastructure-level diplomacy—that allows these agents to trust, route, and communicate effectively.

Let’s unpack the rationale:

1. Trust Boundaries

Agentic systems require decentralized decision-making but centralized trust. A mesh enforces identity, encryption, and policy at every interaction point. Mutual TLS, once a checkbox item, becomes existential.
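A minimal Python sketch of deny-by-default authorization at a single interaction point. It assumes the mesh has already completed mutual TLS and extracted the peer's SPIFFE-style identity from its certificate; the trust domain `corp.example`, the agent paths, `PeerIdentity`, and `ALLOWED_CALLS` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical allow-list of (caller, callee) identity pairs.
# SPIFFE-style URIs are used for illustration only.
ALLOWED_CALLS = {
    ("spiffe://corp.example/agent/finance", "spiffe://corp.example/agent/risk"),
    ("spiffe://corp.example/agent/compliance", "spiffe://corp.example/agent/finance"),
}


@dataclass(frozen=True)
class PeerIdentity:
    spiffe_id: str
    mtls_verified: bool  # True only if the peer's certificate chain was validated


def authorize(caller: PeerIdentity, callee_id: str) -> bool:
    """Permit a call only if the caller proved its identity over mutual TLS
    and the (caller, callee) pair is explicitly allowed; deny everything else."""
    if not caller.mtls_verified:
        return False  # an unproven identity is treated as no identity
    return (caller.spiffe_id, callee_id) in ALLOWED_CALLS


finance = PeerIdentity("spiffe://corp.example/agent/finance", mtls_verified=True)
print(authorize(finance, "spiffe://corp.example/agent/risk"))        # True
print(authorize(finance, "spiffe://corp.example/agent/compliance"))  # False
```

In a real deployment the identity would come from the verified peer certificate rather than a constructor argument; the point of the sketch is that the check runs on every hop, not once at the perimeter.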

2. Observability for Reasoning

Observability in an agentic context isn’t about latency or packet loss—it’s about explainability. If an agent made a decision that impacted compliance or finance, the mesh should trace not just which agents talked, but why.
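One way to make the mesh record “why” as well as “who” is to attach the delegating agent's stated rationale to each hop, much as distributed tracing links spans by trace and parent IDs. The sketch below uses only the Python standard library; `ReasoningSpan`, `child_span`, and the field names are hypothetical, and in practice the same data might be carried as attributes on OpenTelemetry spans.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class ReasoningSpan:
    """One hop in a multi-agent decision: who delegated to whom, and why."""
    caller: str
    callee: str
    intent: str     # the goal being delegated
    rationale: str  # the caller's stated reason for choosing this callee
    trace_id: str
    parent_id: Optional[str] = None
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex)


def child_span(parent: ReasoningSpan, callee: str, intent: str, rationale: str) -> ReasoningSpan:
    # The child shares the parent's trace ID so the whole decision chain can be
    # reassembled later; the parent's callee becomes the new caller.
    return ReasoningSpan(caller=parent.callee, callee=callee, intent=intent,
                         rationale=rationale, trace_id=parent.trace_id,
                         parent_id=parent.span_id)


root = ReasoningSpan("gateway", "finance-agent", "assess Q3 cash exposure",
                     "finance owns cash-flow forecasting", trace_id=uuid.uuid4().hex)
hop = child_span(root, "compliance-agent", "check disclosure rules",
                 "forecast touches regulated revenue data")
print(json.dumps(asdict(hop), indent=2))
```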

3. Dynamic Policy Enforcement

Rules can no longer be static YAML configurations. The mesh must interpret policies expressed in higher-order logic—potentially even natural language—evaluated by an LLM or symbolic reasoning engine.
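Even a policy written in natural language needs an executable form at enforcement time. A minimal sketch of one symbolic option: each rule is a Python predicate over the request context, and small combinators build compound policies from simpler ones. The rule names, context keys, and the PII-transfer policy are hypothetical; an LLM could in principle translate a natural-language policy into predicates like these, but that step is not shown.

```python
from typing import Any, Callable, Dict

Context = Dict[str, Any]
Policy = Callable[[Context], bool]  # returns True when the request is permitted


# Combinators: each takes policies and returns a new policy.
def all_of(*policies: Policy) -> Policy:
    return lambda ctx: all(p(ctx) for p in policies)


def any_of(*policies: Policy) -> Policy:
    return lambda ctx: any(p(ctx) for p in policies)


def negate(policy: Policy) -> Policy:
    return lambda ctx: not policy(ctx)


# Hypothetical atomic rules over the request context.
def caller_verified(ctx: Context) -> bool:
    return ctx.get("caller_verified", False)


def is_pii(ctx: Context) -> bool:
    return ctx.get("data_class") == "PII"


def stays_in_eu(ctx: Context) -> bool:
    return ctx.get("destination_region") == "eu"


def dpo_approved(ctx: Context) -> bool:
    return ctx.get("dpo_approved", False)


# "Verified callers may move PII out of the EU only with DPO approval."
pii_transfer = all_of(caller_verified, any_of(negate(is_pii), stays_in_eu, dpo_approved))

base = {"caller_verified": True, "data_class": "PII", "destination_region": "us"}
print(pii_transfer(base))                              # False
print(pii_transfer({**base, "dpo_approved": True}))    # True
print(pii_transfer({**base, "data_class": "public"}))  # True
```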

4. Fallbacks and Graceful Degradation

When an agent fails mid-workflow, the mesh should detect it and re-route intent to a backup agent or delegate partial execution. Think of it as a “self-healing conversation layer.”

Without that layer, multi-agent architectures devolve into brittle point-to-point chatter.
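The fallback behavior in point 4 can be sketched as an ordered list of candidate agents tried in turn. This is a simplification under stated assumptions: agents are plain Python callables, `AgentUnavailable` is a hypothetical error type they raise, and delegating partial execution is not modeled.

```python
from typing import Callable, List, Tuple

Agent = Callable[[str], str]


class AgentUnavailable(Exception):
    """Hypothetical error an agent raises when it cannot complete a task."""


def route_with_fallback(intent: str, candidates: List[Tuple[str, Agent]]) -> Tuple[str, str]:
    """Try candidates in priority order and return (agent_name, result) from the
    first that succeeds; raise only if every candidate fails."""
    errors = []
    for name, agent in candidates:
        try:
            return name, agent(intent)
        except AgentUnavailable as exc:
            errors.append(f"{name}: {exc}")  # remember why, then try the next one
    raise AgentUnavailable("all candidates failed: " + "; ".join(errors))


def primary(intent: str) -> str:
    raise AgentUnavailable("model endpoint timed out")


def backup(intent: str) -> str:
    return f"backup handled '{intent}'"


print(route_with_fallback("reconcile invoices", [("primary", primary), ("backup", backup)]))
# ('backup', "backup handled 'reconcile invoices'")
```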

Redefining “Service Mesh” in the Agentic Era

Let’s be honest: if you drop Istio into an agentic ecosystem today, it won’t understand half the traffic flowing through it. The JSON payloads it carries are now prompts, inference results, or reasoning graphs, and Istio routes them without understanding what they mean.

So what does a mesh look like when its primary job isn’t packet routing but semantic routing?

| Traditional Mesh | Agentic Mesh |
| --- | --- |
| Routes API requests based on rules | Routes intents based on context, confidence, and capability |
| Focuses on latency, retries, and failover | Focuses on decision propagation, reasoning lineage, and fallback negotiation |
| Uses sidecars for traffic interception | Uses cognitive proxies for reasoning interception |
| Static service registry | Dynamic capability graph (what each agent “knows” or can “do”) |
| Relies on human-defined policies | Augmented with AI-generated or adaptive policies |

The design pattern shifts from “who can call whom” to “who should respond to what intent under what conditions.”
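A toy version of the capability graph from the table, reduced to a flat registry, shows how “who should respond to what intent under what conditions” might be answered. The agent names, capability labels, self-reported confidence scores, and the 0.7 default threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class AgentProfile:
    name: str
    capabilities: Dict[str, float]  # capability -> self-reported confidence in [0, 1]
    available: bool = True


def select_agent(capability: str, agents: List[AgentProfile],
                 min_confidence: float = 0.7) -> Optional[AgentProfile]:
    """Pick the available agent with the highest confidence for a capability,
    provided that confidence clears the threshold; otherwise return None."""
    eligible = [a for a in agents
                if a.available and a.capabilities.get(capability, 0.0) >= min_confidence]
    if not eligible:
        return None  # no suitable responder; the caller could escalate instead
    return max(eligible, key=lambda a: a.capabilities[capability])


registry = [
    AgentProfile("forecaster", {"cash_flow_forecast": 0.9, "risk_scoring": 0.6}),
    AgentProfile("risk-assessor", {"risk_scoring": 0.85}),
    AgentProfile("legacy-risk", {"risk_scoring": 0.95}, available=False),
]
print(select_agent("risk_scoring", registry).name)  # risk-assessor
print(select_agent("tax_filing", registry))         # None
```

The unavailable `legacy-risk` agent has the highest score but is skipped, which is the kind of condition a static service registry cannot express.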

Architecture Snapshot: A Living Mesh

A realistic agentic service mesh might include:

- Intent Router – Interprets high-level goals (“analyze compliance gaps”) and routes them to the right agent cluster.

- Semantic Gateway – Normalizes data and language formats across diverse agents.

- Trust Broker – Maintains verifiable identity, capability attestations, and key distribution.

- Policy Reasoner – Applies contextual rules dynamically (e.g., “if agent confidence < 0.8, escalate to human”).

- Reflection Bus – A backchannel for feedback loops, allowing agents to broadcast reasoning updates to peers.

It’s not just network-level infrastructure. It’s cognitive plumbing.
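At its core, the Reflection Bus is a publish/subscribe channel. A minimal in-memory sketch in Python, assuming topics and update payloads are arbitrary; `ReflectionBus` and its methods are hypothetical. Giving each subscriber a bounded queue is a deliberate choice: a slow reader loses its oldest updates instead of accumulating an unbounded backlog.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List


class ReflectionBus:
    """In-memory, topic-based backchannel with one bounded queue per subscriber."""

    def __init__(self, max_pending: int = 100):
        self.max_pending = max_pending
        self.queues: Dict[str, Dict[str, Deque[dict]]] = defaultdict(dict)

    def subscribe(self, topic: str, agent: str) -> None:
        # deque(maxlen=...) drops the oldest item when a full queue receives a new one.
        self.queues[topic][agent] = deque(maxlen=self.max_pending)

    def publish(self, topic: str, sender: str, update: dict) -> None:
        for agent, queue in self.queues[topic].items():
            if agent != sender:  # don't echo an update back to its author
                queue.append({"from": sender, **update})

    def drain(self, topic: str, agent: str) -> List[dict]:
        queue = self.queues[topic].get(agent)
        if queue is None:
            return []
        items = list(queue)
        queue.clear()
        return items


bus = ReflectionBus(max_pending=2)
bus.subscribe("invoice-risk", "risk-assessor")
bus.subscribe("invoice-risk", "finance")
for i in range(3):
    bus.publish("invoice-risk", "finance", {"note": f"revision {i}"})
updates = bus.drain("invoice-risk", "risk-assessor")
print(updates)  # [{'from': 'finance', 'note': 'revision 1'}, {'from': 'finance', 'note': 'revision 2'}]
```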

How Control Planes Evolve

Every mesh has a control plane—the central nervous system that defines, distributes, and enforces policy. In an agentic mesh, that control plane evolves into what some researchers call a metacognitive layer.

It must manage not just:

- connection health

- service discovery

- policy templates

but also:

- reasoning context windows

- memory synchronization between agents

- confidence score propagation

In practice, that means the mesh may include an embedded LLM to interpret policy or negotiate task delegation.
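How confidence scores propagate is a design decision, not a given. One simple assumption, sketched below, treats each delegation step as independent, multiplies the step confidences, and then applies the 0.8 escalation rule quoted for the Policy Reasoner. The function names are hypothetical, and taking the minimum instead of the product would be an equally defensible choice.

```python
import math
from typing import List

ESCALATION_THRESHOLD = 0.8  # the example threshold from the Policy Reasoner rule


def chain_confidence(step_confidences: List[float]) -> float:
    """Combine per-step confidences along a delegation chain, assuming the steps
    are independent (a modeling assumption, not a given)."""
    return math.prod(step_confidences)


def next_action(step_confidences: List[float]) -> str:
    if chain_confidence(step_confidences) >= ESCALATION_THRESHOLD:
        return "proceed"
    return "escalate_to_human"


print(next_action([0.95, 0.97]))      # proceed            (0.95 * 0.97 = 0.9215)
print(next_action([0.95, 0.9, 0.9]))  # escalate_to_human  (0.95 * 0.9 * 0.9 = 0.7695)
```

The second chain is the interesting case: every individual step clears 0.8, yet the combined confidence does not.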

Consider this scenario: A logistics firm’s order-processing agent encounters ambiguous shipment data. The intent router can’t find a clear next step. The control plane triggers a query to the meta-layer, which infers, from context, that the “inventory-check agent” should handle it, even though no explicit rule existed for that case.

That’s policy emergence, not policy definition. Traditional ops engineers would find that terrifying. Modern ones call it progress.
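A deliberately crude sketch of that scenario: routing consults explicit rules first and, when none match, infers a handler from context. The rule table, agent descriptions, and word-overlap scoring are hypothetical stand-ins; a real meta-layer would more likely use embeddings or an LLM.

```python
import re
from typing import Dict, Optional, Set, Tuple

# Hypothetical explicit routing rules: (record_type, issue) -> agent.
EXPLICIT_RULES: Dict[Tuple[str, str], str] = {
    ("shipment", "missing_address"): "address-validation-agent",
}

# Hypothetical free-text capability descriptions advertised by agents.
AGENT_DESCRIPTIONS: Dict[str, str] = {
    "inventory-check-agent": "verifies stock levels sku quantities and warehouse inventory",
    "billing-agent": "handles invoices payments and refunds",
    "address-validation-agent": "validates and normalizes shipping addresses",
}


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def route(record_type: str, issue: str, context: str) -> Optional[str]:
    rule = EXPLICIT_RULES.get((record_type, issue))
    if rule:
        return rule  # a defined policy wins when one exists
    # No rule: score agents by word overlap between context and description.
    ctx = tokens(context)
    scores = {name: len(ctx & tokens(desc)) for name, desc in AGENT_DESCRIPTIONS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None  # None: nothing plausible, escalate


handler = route("shipment", "quantity_mismatch",
                "shipment lists 40 units of sku A12 but warehouse inventory shows 25")
print(handler)  # inventory-check-agent
```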

Sidecars, Gone Cognitive

In microservice architectures, sidecars were small processes attached to each service instance to handle networking, telemetry, and security. They were boring—but stable.

In agentic infrastructure, those sidecars evolve into cognitive proxies.

They can:

- Interpret and compress the reasoning context.

- Log semantic traces (why the agent made that choice).

- Apply local alignment checks before sending responses.

If an agent’s response doesn’t meet enterprise alignment policy—say, it violates ethical or compliance constraints—the sidecar can intercept and reframe it.

This idea is already emerging in LLM orchestration frameworks like LangGraph and AutoGen, where “guardrails” or “tool checkers” evaluate agent outputs. But as these systems scale enterprise-wide, the enforcement must move to the mesh, not the application layer.
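A minimal sketch of a cognitive proxy's local alignment check: it wraps an agent, inspects each response before it leaves, rewrites anything matching a redaction rule, and logs what it changed. Regex redaction is a simple stand-in for the richer ethical and compliance checks described above; `CognitiveProxy`, `REDACTION_RULES`, and `leaky_agent` are hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical local alignment rules: (pattern, replacement).
# A production proxy would presumably load these from the mesh's policy service.
REDACTION_RULES = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[REDACTED-SSN]"),  # US SSN format
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"), "[REDACTED-EMAIL]"),
]


@dataclass
class CognitiveProxy:
    agent: Callable[[str], str]
    audit_log: List[str] = field(default_factory=list)

    def handle(self, request: str) -> str:
        response = self.agent(request)
        # Check the output locally, before it leaves the agent.
        for pattern, replacement in REDACTION_RULES:
            response, count = pattern.subn(replacement, response)
            if count:
                self.audit_log.append(f"reframed {count} match(es) for {pattern.pattern}")
        return response


def leaky_agent(request: str) -> str:
    return "Customer jane@example.com, SSN 123-45-6789, is 30 days overdue."


proxy = CognitiveProxy(leaky_agent)
result = proxy.handle("summarize account")
print(result)  # Customer [REDACTED-EMAIL], SSN [REDACTED-SSN], is 30 days overdue.
```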

When It Fails

Here’s the uncomfortable truth: a service mesh for agents will fail spectacularly before it stabilizes.

Why? Because we don’t yet have clear patterns for distributed reasoning at scale. Some foreseeable failure points include:

- Feedback Overload—Agents feeding reasoning summaries into the mesh faster than it can process them, creating cognitive congestion.

- Semantic Drift—When two agents evolve slightly different interpretations of the same policy.

- Over-Alignment—When adaptive policies suppress diversity in reasoning, leading to groupthink.
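Semantic drift is at least partly testable. One approach, sketched here under the assumption that each agent's reading of a policy can be exposed as a function, runs both readings over shared probe cases and reports where they disagree. The refund policy, the threshold, and the function names are hypothetical.

```python
from typing import Callable, Dict, List

Decision = Callable[[Dict], bool]  # True means "this refund needs review"


def drift_report(reading_a: Decision, reading_b: Decision, probes: List[Dict]) -> Dict:
    """Evaluate two interpretations of the same policy on shared probe cases
    and report how often, and where, they disagree."""
    disagreements = [p for p in probes if reading_a(p) != reading_b(p)]
    return {"disagreement_rate": len(disagreements) / len(probes),
            "examples": disagreements}


# Two hypothetical readings of "refunds over 1,000 need review".
def finance_reading(case: Dict) -> bool:
    return case["amount"] > 1000   # strictly greater than 1,000


def compliance_reading(case: Dict) -> bool:
    return case["amount"] >= 1000  # treats exactly 1,000 as "over"


probes = [{"amount": a} for a in (500, 999, 1000, 1500)]
report = drift_report(finance_reading, compliance_reading, probes)
print(report)  # {'disagreement_rate': 0.25, 'examples': [{'amount': 1000}]}
```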

The Role of Standards

One could argue the industry needs an “Istio for Agents.” But history suggests otherwise. Microservice teams that adopted Istio before they needed it often found themselves maintaining more YAML than application code.

The agentic ecosystem risks repeating that—over-instrumenting too soon.

There are promising directions, though:

- Anthropic’s Model Context Protocol (MCP), which defines a structured way for agents to connect to tools and data sources.

- LangChain’s LCEL and CrewAI’s collaboration primitives.

- Kubernetes SIG-AI discussions on declarative agent lifecycles.

But no single standard will dominate soon. Instead, expect polyglot meshes—hybrids combining event buses, LLM routers, and lightweight observability daemons.

And that’s fine. Agentic infrastructure thrives on diversity.

Human-in-the-Mesh

The most underrated dimension of service mesh evolution is the human role.

In old architectures, humans observed metrics and occasionally tuned policies. In an agentic mesh, humans become context injectors—they shape the meta-rules that guide collective reasoning.

For example:

- A compliance officer might update a natural-language policy about permissible data sharing.

- The mesh’s policy reasoner parses it, evaluates ambiguity, and prompts for clarification before rollout.

This redefines DevOps. Engineers no longer “deploy services”; they coach autonomous systems.
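The clarification step in the compliance example could start as simply as a rollout gate that holds any policy update containing terms the mesh cannot enforce without a definition. The vague-term list, `PolicyUpdate`, and the status values are hypothetical; a policy reasoner built on an LLM would presumably detect ambiguity far more flexibly than this keyword scan.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Hypothetical terms that need a concrete definition before enforcement.
VAGUE_TERMS = ["appropriate", "reasonable", "sensitive", "as needed", "where possible"]


@dataclass
class PolicyUpdate:
    text: str
    status: str = "draft"
    questions: List[str] = field(default_factory=list)


def review(update: PolicyUpdate) -> PolicyUpdate:
    """Flag vague terms and hold the rollout until they are clarified."""
    lowered = update.text.lower()
    for term in VAGUE_TERMS:
        if re.search(r"\b" + re.escape(term) + r"\b", lowered):
            update.questions.append(f"What exactly counts as '{term}'?")
    update.status = "needs_clarification" if update.questions else "ready_for_rollout"
    return update


held = review(PolicyUpdate("Share sensitive customer data with partners only where possible."))
print(held.status)     # needs_clarification
print(held.questions)
```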

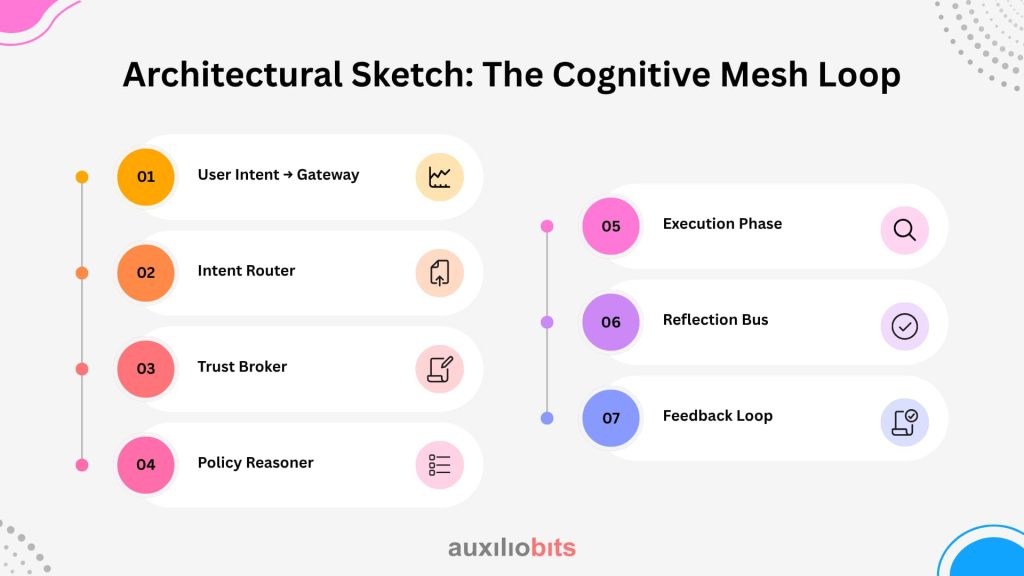

Architectural Sketch: The Cognitive Mesh Loop

Here’s a simplified flow you might actually see in production:

1. User Intent → Gateway

A business user sends a request (“summarize all overdue invoices and predict risk exposure”).

2. Intent Router

Parses the request and identifies three relevant agents (finance, document parser, risk assessor).

3. Trust Broker

Verifies each agent’s authorization and injects access tokens.

4. Policy Reasoner

Checks for policy conflicts (e.g., GDPR data rules) and adds constraints.

5. Execution Phase

Agents collaborate via the mesh, passing semantic tokens, not just data packets.

6. Reflection Bus

Collects reasoning metadata and generates observability traces—useful for audits or learning loops.

7. Feedback Loop

Insights are summarized into meta-policies for future optimization.

This isn’t hypothetical. Components of this design already exist in enterprise orchestration stacks combining Kubernetes, vector databases, LangChain orchestration, and observability layers like OpenTelemetry. The difference is conceptual—treating those plumbing layers as part of a larger cognitive system.
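The seven-step loop can be compressed into a single-process Python sketch that makes the hand-offs concrete. Every component is a hypothetical stand-in: keyword matching replaces the Intent Router's reasoning, the GDPR constraint text is illustrative, the access tokens are placeholder strings rather than real credentials, and step 7 simply accumulates trace lines that a later process could summarize into meta-policies.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Request:                                    # step 1: what the gateway receives
    user: str
    text: str
    agents: List[str] = field(default_factory=list)
    tokens: Dict[str, str] = field(default_factory=dict)
    constraints: List[str] = field(default_factory=list)
    results: Dict[str, str] = field(default_factory=dict)
    trace: List[str] = field(default_factory=list)


KEYWORD_ROUTES = {"invoice": "document-parser", "overdue": "finance", "risk": "risk-assessor"}
AUTHORIZED = {"document-parser", "finance", "risk-assessor"}


def intent_router(req: Request) -> None:          # step 2
    text = req.text.lower()
    req.agents = sorted({agent for kw, agent in KEYWORD_ROUTES.items() if kw in text})


def trust_broker(req: Request) -> None:           # step 3
    for agent in req.agents:
        if agent not in AUTHORIZED:
            raise PermissionError(f"{agent} is not authorized")
        req.tokens[agent] = f"placeholder-token-{agent}"


def policy_reasoner(req: Request) -> None:        # step 4
    if "finance" in req.agents:
        req.constraints.append("mask personal data of EU customers (GDPR)")


def execute(req: Request, agents: Dict[str, Callable[[Request], str]]) -> None:  # step 5
    for name in req.agents:
        req.results[name] = agents[name](req)


def reflection_bus(req: Request) -> None:         # step 6
    req.trace = [f"{name} ran with constraints {req.constraints}" for name in req.results]


def handle(req: Request, agents: Dict[str, Callable[[Request], str]],
           meta_policy_inbox: List[str]) -> Request:
    intent_router(req)
    trust_broker(req)
    policy_reasoner(req)
    execute(req, agents)
    reflection_bus(req)
    meta_policy_inbox.extend(req.trace)           # step 7: feed insights back
    return req


def make_stub(name: str) -> Callable[[Request], str]:
    return lambda req: f"{name} output"


agents = {name: make_stub(name) for name in AUTHORIZED}
inbox: List[str] = []
done = handle(Request("analyst", "Summarize all overdue invoices and predict risk exposure"),
              agents, inbox)
print(done.agents)  # ['document-parser', 'finance', 'risk-assessor']
print(len(inbox))   # 3
```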

Security Becomes Cognitive Too

The zero-trust narrative doesn’t disappear—it deepens. Traditional zero-trust focuses on who can access what. Agentic zero-trust must also ask why and under what intent.

That’s not semantic nitpicking. It’s operational survival.

An LLM-based agent could theoretically synthesize an API call sequence that technically fits policy syntax but violates business intent. The mesh must detect intent drift—when an agent’s inferred goal deviates from its assigned one.
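A minimal sketch of that distinction, assuming each assigned intent can be mapped to the set of actions that genuinely serve it: a plan can pass a syntax-only permission check and still be flagged for intent drift. The intent name, action strings, and both permission sets are hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical: the actions that genuinely serve each assigned intent.
INTENT_SCOPE: Dict[str, Set[str]] = {
    "summarize_overdue_invoices": {"invoices.read", "customers.read_name"},
}

# Hypothetical: everything the agent's role is syntactically permitted to do.
ROLE_PERMISSIONS: Set[str] = {
    "invoices.read", "customers.read_name",
    "customers.read_bank_details", "payments.create",
}


def check_plan(assigned_intent: str, plan: List[str]) -> Dict[str, List[str]]:
    in_scope = INTENT_SCOPE.get(assigned_intent, set())
    return {
        # What a traditional, syntax-only check would catch.
        "syntax_violations": [a for a in plan if a not in ROLE_PERMISSIONS],
        # Permitted by role but unrelated to the assigned goal: possible intent drift.
        "intent_drift": [a for a in plan if a in ROLE_PERMISSIONS and a not in in_scope],
    }


plan = ["invoices.read", "customers.read_bank_details", "payments.create"]
report = check_plan("summarize_overdue_invoices", plan)
print(report)  # {'syntax_violations': [], 'intent_drift': ['customers.read_bank_details', 'payments.create']}
```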

This introduces new primitives:

- Intent Hashing – Signatures based on the semantic equivalence of prompts.

- Behavioral Attestation – Agents periodically submit summaries of their decision trees for validation.

- Context Isolation – The mesh can dynamically spin up isolated “reasoning zones” for sensitive operations.

These are early-stage concepts, but you’ll see them appear in agentic governance frameworks soon.
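Intent hashing can be approximated, very roughly, by canonicalizing a prompt before hashing it, so that trivially different phrasings of the same request share a signature. The filler-word list and canonicalization rules below are hypothetical and lossy (sorting words discards word order, which sometimes carries meaning); genuine semantic equivalence would need something like embeddings or an LLM judge.

```python
import hashlib
import re

# Hypothetical filler words dropped during canonicalization.
FILLER = {"please", "kindly", "the", "a", "an", "all", "me"}


def canonicalize(prompt: str) -> str:
    words = re.findall(r"[a-z0-9]+", prompt.lower())
    # Drop filler and sort, so politeness and word order don't change the hash.
    return " ".join(sorted(w for w in words if w not in FILLER))


def intent_hash(prompt: str) -> str:
    return hashlib.sha256(canonicalize(prompt).encode("utf-8")).hexdigest()


a = intent_hash("Please summarize the overdue invoices")
b = intent_hash("summarize overdue invoices, kindly")
c = intent_hash("Delete the overdue invoices")
print(a == b, a == c)  # True False
```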

Where This Leaves Architects

For enterprise architects, the implications are subtle but deep:

- You’re not designing data paths anymore—you’re designing decision paths.

- Observability isn’t just telemetry—it’s reasoning auditability.

- CI/CD pipelines may evolve into continuous reasoning pipelines, where agent behaviors are tested and versioned.

The challenge isn’t technical alone. It’s philosophical. How much autonomy are you willing to give your infrastructure?

Because once the mesh starts making micro-decisions—rerouting reasoning, suppressing loops, adjusting policies—you’ve effectively turned your network into a thinking participant.

And maybe that’s what we’ve been building toward all along.

Final Thought

The term “service mesh” might not even survive the agentic transition. It may evolve into something broader—a cognitive coordination fabric or a semantic control plane.

Whatever we call it, its purpose will remain: to make distributed systems act as one without erasing their individuality. The difference now is that the entities being orchestrated don’t just compute—they think.

And that makes the mesh less of a network component and more of a nervous system.