Key Takeaways

- Semantic kernels bridge reasoning and memory in enterprise AI, enabling agents to act with continuity, not just fluency.

- Memory must be curated, not just embedded—spanning episodic, procedural, and declarative layers.

- Stateless agents fail in context-heavy workflows, especially in support, legal, or policy-driven functions.

- The kernel doesn’t replace tools—it guides tool use intelligently, drawing on past context and procedural logic.

- Success requires more than code—you need organizational alignment, memory governance, and modular architecture.

The term “Semantic Kernel” might sound like a technical oddity from a machine learning paper circa 2017. And yet, in the context of enterprise AI systems—especially those rooted in agentic architectures—it’s emerging as a foundational concept. Not in the flashy “game-changer” sense, but in the quietly indispensable way that structured reasoning and contextual memory determine whether AI deployments produce value… or just verbose hallucinations.

There’s a gap—one that most enterprise leaders feel intuitively. Your AI agent might be capable of summarizing a 10-page policy document, but can it recall the last three resolutions in a client service thread and reason across them with context? Can it compare decisions made by two teams over months and determine inconsistencies? What you need isn’t raw compute. You need memory. Structured, flexible, contextual memory. That’s where a semantic kernel architecture starts making real sense.


What is a Semantic Kernel?

At its core, a Semantic Kernel is a software architecture pattern designed to unify reasoning, memory, and execution across AI agents and workflows. It enables agents to not just “perform tasks,” but to do so with an understanding of why something is being done, what has been done before, and how that should influence decisions in the current context.

This isn’t about building another chatbot. It’s about giving your AI agents a substrate to:

- Maintain episodic memory (i.e., “what happened during this sequence?”),

- Store and retrieve semantic knowledge (i.e., “what does the organization know about X?”),

- Learn task structures or workflows from experience (i.e., “what’s the usual next step when this happens?”),

- Apply logic across decisions and documents, even when unstructured or ambiguous.

Not all enterprises are ready for that. But those exploring autonomous agents or AI-augmented process orchestration quickly discover that stateless reasoning hits a ceiling.

Why Stateless Tools Hit a Wall

Let’s take a real-world example. A financial services firm deployed a GPT-based assistant to help process incoming customer requests related to mortgage forbearance. It worked well, initially. Queries were answered, PDFs parsed, and emails drafted.

Then the edge cases piled up.

- A customer who emailed three weeks ago with one scenario now returned with a contradictory request.

- Another had a partial payment plan approved, but the conditions weren’t documented in any structured field.

- The agent “forgot” past instructions or decisions unless the full history was manually fed back in or the model was retrained.

This isn’t a failure of language models. It’s a failure of memory architecture. The model knew English, sure—but not continuity. Not context. Not enterprise-specific workflows.

This is where a semantic kernel changes the picture. It sits between the model and the enterprise’s operational ecosystem, injecting recall, reasoning context, and process structure into every step.
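Here is a minimal Python sketch of what “injecting recall” can look like, assuming a hypothetical in-memory `EpisodicStore` and a `build_prompt` helper (neither comes from any particular SDK). Before the model sees a new request, the kernel pulls the customer’s most recent interactions into the prompt, so a contradictory request like the ones above gets flagged instead of answered in isolation.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Episode:
    customer_id: str
    day: date
    summary: str


@dataclass
class EpisodicStore:
    """Hypothetical in-memory episodic store; a real system would persist it."""
    episodes: list[Episode] = field(default_factory=list)

    def record(self, episode: Episode) -> None:
        self.episodes.append(episode)

    def recent(self, customer_id: str, limit: int = 3) -> list[Episode]:
        # This customer's episodes, newest first, capped at `limit`.
        mine = [e for e in self.episodes if e.customer_id == customer_id]
        return sorted(mine, key=lambda e: e.day, reverse=True)[:limit]


def build_prompt(store: EpisodicStore, customer_id: str, request: str) -> str:
    """Assemble the model input: recalled history first, then the new request."""
    history = store.recent(customer_id)
    lines = [f"- {e.day.isoformat()}: {e.summary}" for e in history]
    context = "\n".join(lines) if lines else "- (no prior interactions)"
    return (
        f"Prior interactions with customer {customer_id}:\n{context}\n\n"
        f"New request:\n{request}\n\n"
        "Flag any conflict with earlier requests or approved plans."
    )


# Illustrative data only.
store = EpisodicStore()
store.record(Episode("C-17", date(2024, 3, 1), "Requested 3-month forbearance"))
store.record(Episode("C-17", date(2024, 3, 22), "Partial payment plan approved (terms in email)"))
print(build_prompt(store, "C-17", "Please cancel the forbearance and waive late fees."))
```

The model call itself is left out. Any chat-completion client could consume the returned string, and the point is that recall happens in the kernel, not in the model.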

Components of a Semantic Kernel (And Why They Matter)

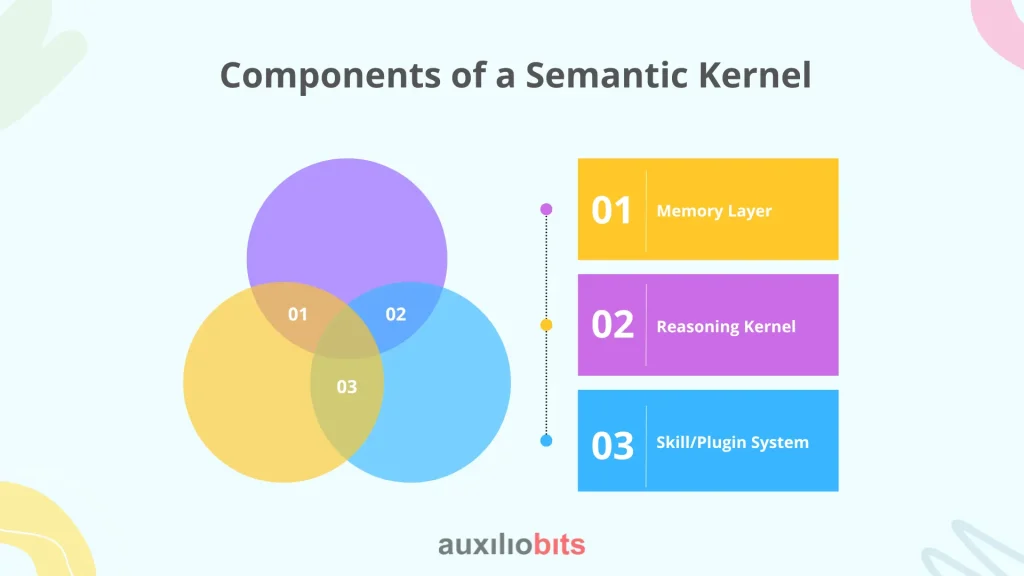

A well-designed semantic kernel comprises three overlapping but distinct layers:

1. Memory Layer

This is the most misunderstood part. It’s not just about vector stores or embedding search. True enterprise memory spans:

- Episodic memory: Specific sequences, like conversation histories or task flows.

- Declarative memory: Structured facts or known data (e.g., “customer is on Tier-2 support plan”).

- Procedural memory: Workflows, playbooks, SOPs—essentially, “how we do things here.”

Too many implementations treat memory as a retrieval plugin. But in reality, memory has to be modeled, indexed, and curated—just like knowledge in a high-performing team.
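One way to keep the three memory types as distinct, curated stores, rather than one undifferentiated vector index, is sketched below. `MemoryLayer`, `Fact`, and the “newer fact wins” curation rule are illustrative assumptions; a production system would layer review, access control, and retention policies on top.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass(frozen=True)
class Fact:
    value: str
    source: str        # provenance: where the fact came from
    updated: datetime  # when the source asserted it


@dataclass
class MemoryLayer:
    # Episodic: ordered events per thread (conversation turns, task steps).
    episodic: dict[str, list[str]] = field(default_factory=dict)
    # Declarative: one current value per (entity, attribute), with provenance.
    declarative: dict[tuple[str, str], Fact] = field(default_factory=dict)
    # Procedural: named playbooks stored as ordered steps.
    procedural: dict[str, list[str]] = field(default_factory=dict)

    def log_event(self, thread_id: str, event: str) -> None:
        self.episodic.setdefault(thread_id, []).append(event)

    def assert_fact(self, entity: str, attribute: str, fact: Fact) -> bool:
        """Curation rule (an assumption): a fact only replaces an equal-or-older one."""
        key = (entity, attribute)
        current = self.declarative.get(key)
        if current is not None and fact.updated < current.updated:
            return False  # stale information is rejected, not silently stored
        self.declarative[key] = fact
        return True

    def playbook(self, name: str) -> list[str]:
        return self.procedural.get(name, [])


memory = MemoryLayer()
memory.assert_fact("cust-42", "support_plan", Fact("Tier-2", "CRM", datetime(2024, 5, 1)))
# An older source is rejected, so the curated value stays Tier-2.
memory.assert_fact("cust-42", "support_plan", Fact("Tier-1", "2023 email", datetime(2023, 6, 1)))
memory.procedural["refund_request"] = ["verify order", "check policy", "draft response"]
```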

2. Reasoning Kernel

This is the “brain” that links current tasks with memory and drives decisions. It often combines:

- Rule-based scaffolding (think function-calling or tool-use chains),

- Constraint solvers or planners (for multi-step or branching tasks),

- Prompt engineering logic (selecting templates or goals dynamically),

- Feedback loops (where agents self-correct or request clarification).

It’s subtle, but vital: the kernel mediates between what the AI knows and what the situation demands. This is where most orchestration tools fall short—they either overload the model or overly constrain it.
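The template-selection and feedback-loop parts of a reasoning kernel fit in a few lines. This minimal Python illustration uses assumed names (`TEMPLATES`, `Decision`, `reason`). The kernel picks a prompt template by intent and asks for clarification when required context is missing, instead of letting the model fill the gaps on its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Template:
    name: str
    required: tuple[str, ...]  # slots that must be filled before the model is called
    text: str


# Hypothetical templates keyed by intent.
TEMPLATES = {
    "refund": Template("refund", ("order_id", "reason"),
                       "Draft a refund decision for order {order_id}. Reason: {reason}."),
    "escalate": Template("escalate", ("ticket_id",),
                         "Summarize ticket {ticket_id} for a manager."),
}


@dataclass
class Decision:
    action: str   # "prompt" (call the model) or "clarify" (ask the user)
    payload: str


def reason(intent: str, slots: dict[str, str]) -> Decision:
    template = TEMPLATES.get(intent)
    if template is None:
        # Unknown intent: ask rather than guess.
        return Decision("clarify", f"No procedure found for '{intent}'. Can you rephrase?")
    missing = [s for s in template.required if not slots.get(s)]
    if missing:
        # Feedback loop: request the missing context instead of letting the model invent it.
        return Decision("clarify", "Please provide: " + ", ".join(missing))
    return Decision("prompt", template.text.format(**slots))


print(reason("refund", {"order_id": "A-1001"}).payload)
```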

3. Skill/Plugin System

These are the tools or functions an agent can call, be it sending an email, updating a record, triggering an RPA script, or hitting an API. In LangChain, these are tools. In Semantic Kernel (the Microsoft SDK), they were originally called “skills” and are now called “plugins.”

But skills alone don’t create intelligence. It’s the reasoning layer that determines which skill to use, when, and why—based on context retrieved from memory.
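A minimal sketch of that division of labor: each skill declares when it applies, and the reasoning layer decides which ones run based on context recalled from memory. The `Skill` class, `choose_skills`, and the skill names are hypothetical placeholders, not the LangChain or Semantic Kernel APIs.

```python
from dataclasses import dataclass
from typing import Any, Callable

Context = dict[str, Any]


@dataclass
class Skill:
    name: str
    applies: Callable[[Context], bool]  # guard: is this skill appropriate here?
    run: Callable[[Context], str]       # the tool call itself (stubbed as a string)


def choose_skills(skills: list[Skill], ctx: Context) -> list[str]:
    """The reasoning layer, not the skill, decides what runs, using recalled context."""
    return [s.run(ctx) for s in skills if s.applies(ctx)]


# Hypothetical skills; `ctx` is assumed to be populated from the memory layer.
SKILLS = [
    Skill("email_summary", lambda c: True,
          lambda c: f"email summary to {c['customer']}"),
    Skill("page_manager", lambda c: c.get("prior_escalations", 0) >= 2,
          lambda c: "page on-call manager"),
    Skill("update_crm", lambda c: bool(c.get("plan_changed")),
          lambda c: "update CRM record"),
]

print(choose_skills(SKILLS, {"customer": "Acme", "prior_escalations": 3}))
```

With two or more prior escalations recalled for the customer, the manager-paging skill fires; without that history, only the summary email runs. The skills stay simple, and the judgment lives in the guards the kernel evaluates.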

The Role of the Kernel in Enterprise AI Orchestration

Let’s talk orchestration—not as a buzzword, but in the real-world sense of integrating AI into existing, often chaotic, enterprise processes.

In a standard enterprise workflow (say, incident response), you’ve got:

- Human context (past escalations, known quirks, relationship history),

- Process complexity (who to notify, when to escalate, which policies apply),

- Tool fragmentation (ServiceNow, Jira, Slack, etc.),

- Business logic exceptions (don’t follow standard process if customer is strategic).

Now throw an AI agent into that mix. Without a kernel, it’s like hiring a new employee but giving them no training records, no SOPs, and no memory of their previous shifts.

With a semantic kernel, the agent can:

- Recognize the customer and recall prior escalations,

- Retrieve applicable policies from a document repository,

- Decide whether this case requires manager escalation (based on precedent),

- Use natural language to update Jira, notify Slack, and email a summary.

It’s not just doing things—it’s reasoning with context.
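The incident-response steps above can be approximated as a single function. In this sketch, “based on precedent” is assumed to mean that a majority of similar past incidents for the same customer were escalated, and treating strategic customers as always-escalate is one possible reading of the business-logic exception. `POLICIES`, `PastIncident`, and the action strings are hypothetical stand-ins for a policy repository and real Jira, Slack, and email integrations.

```python
from dataclasses import dataclass


@dataclass
class PastIncident:
    customer: str
    category: str
    escalated: bool


# Hypothetical policy repository: incident category -> policy text.
POLICIES = {"outage": "Notify the account owner within 1 hour."}


def handle_incident(customer: str, category: str, strategic: bool,
                    history: list[PastIncident]) -> dict:
    # 1. Recognize the customer and recall similar prior incidents.
    similar = [h for h in history if h.customer == customer and h.category == category]
    # 2. Retrieve the applicable policy, with an explicit fallback.
    policy = POLICIES.get(category, "No specific policy; follow standard process.")
    # 3. Decide from precedent (assumed rule: most similar cases were escalated).
    escalated_before = sum(h.escalated for h in similar)
    precedent = bool(similar) and escalated_before > len(similar) / 2
    # Business-logic exception (one possible reading): strategic customers always escalate.
    escalate = strategic or precedent
    # 4. Plan tool actions; these strings are placeholders for real integrations.
    actions = [f"jira: create {category} ticket", f"slack: notify #{category}"]
    if escalate:
        actions.append("email: escalation summary to manager")
    return {"escalate": escalate, "policy": policy, "actions": actions}
```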

When Semantic Kernels Work—and When They Don’t

This architecture shines in domains where:

- The context is dynamic or nuanced (e.g., support, legal, compliance),

- Decisions depend on past events or policies,

- There’s a need to learn from past workflows and adapt.

But—and this is important—they’re overkill for rote, repeatable automations. For example, extracting values from invoices or triggering payroll alerts doesn’t need a semantic kernel. You’d be better off with RPA or deterministic logic. Introducing memory there just adds latency and fragility.

Here’s a quick check for whether you need a semantic kernel:

| Use Case | Need for Semantic Kernel? | Why |
| --- | --- | --- |
| Automated invoice data capture | ❌ | Structured, rule-based |
| Legal clause comparison across contracts | ✅ | Needs semantic understanding and memory |
| Customer support response drafting | ✅ | Context continuity and recall |
| IT ticket creation from email | ❌ | Deterministic transformation |
| Compliance monitoring across threads | ✅ | Requires reasoning across conversations |
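The criteria listed at the start of this section can be encoded as a rough screening function. This is a heuristic sketch, not a formal test: the three boolean fields on the hypothetical `UseCase` class mirror those bullet points, and any one of them being true is assumed to justify a kernel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    dynamic_context: bool        # is the context dynamic or nuanced?
    depends_on_history: bool     # do decisions depend on past events or policies?
    adapts_from_workflows: bool  # must it learn from past workflows and adapt?


def needs_semantic_kernel(uc: UseCase) -> bool:
    """Heuristic: any one criterion justifies memory plus reasoning;
    otherwise prefer RPA or deterministic logic."""
    return uc.dynamic_context or uc.depends_on_history or uc.adapts_from_workflows


# Two rows from the table, with flags assigned by judgment (illustrative).
rows = [
    UseCase("Automated invoice data capture", False, False, False),
    UseCase("Compliance monitoring across threads", True, True, False),
]
for uc in rows:
    print(f"{uc.name}: {'yes' if needs_semantic_kernel(uc) else 'no'}")
```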

Lessons from the Field

Some practical notes from real implementations:

- Vector search ≠ memory. Semantic recall needs structure. Just dumping chunks into Pinecone won’t cut it.

- Memory curation is a full-time job. Enterprises often underestimate how much governance is needed to manage what the agent “remembers.”

- Too much context leads to decision paralysis. Agents sometimes try to reason with everything, and it slows them down. The kernel needs to filter, prioritize, and sometimes forget.

- Composable kernels > monoliths. Trying to build a single reasoning loop for everything (sales, support, ops) ends up brittle. Modularize by function or persona.
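The first and third lessons can be combined into one retrieval step: filter by structure (customer, memory type) before ranking, rank by relevance discounted by age, and drop anything below a score floor or over a token budget. In this sketch, word-overlap (Jaccard) similarity stands in for embedding similarity, and the half-life, score floor, and `MemoryItem` fields are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    customer: str    # owning customer; shared procedures may use "*"
    kind: str        # "episodic", "declarative", or "procedural"
    age_days: float
    tokens: int


def overlap(a: str, b: str) -> float:
    """Jaccard word overlap, standing in here for embedding similarity."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def select_context(items: list[MemoryItem], query: str, customer: str,
                   budget: int, half_life_days: float = 30.0,
                   min_score: float = 0.01) -> list[MemoryItem]:
    # 1. Structure first: this customer's memories plus shared procedures.
    candidates = [m for m in items if m.customer == customer or m.kind == "procedural"]
    # 2. Score = relevance x exponential recency decay.
    scored = [(overlap(query, m.text) * 0.5 ** (m.age_days / half_life_days), m)
              for m in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # 3. Forget: skip weak matches and anything that would exceed the token budget.
    chosen, used = [], 0
    for score, m in scored:
        if score < min_score or used + m.tokens > budget:
            continue
        chosen.append(m)
        used += m.tokens
    return chosen
```

Filtering on `customer` before ranking is what keeps another customer’s near-identical request out of the context, which plain similarity search over a shared index would not guarantee.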

Also, human-in-the-loop isn’t optional. It’s foundational. Kernels should be designed assuming humans will review, correct, and feed back into the system. Otherwise, it becomes another opaque system that fails quietly until it explodes.
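One simple way to build that assumption into a kernel is a review gate: actions below a confidence threshold wait for a person, and reviewer notes are captured so they can be written back to memory. `ReviewGate`, the 0.8 threshold, and the availability of a per-action confidence score are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ProposedAction:
    description: str
    confidence: float  # kernel's own estimate in [0, 1]; assumed to be available


@dataclass
class ReviewGate:
    threshold: float = 0.8
    queue: list[ProposedAction] = field(default_factory=list)
    executed: list[str] = field(default_factory=list)     # doubles as an audit log
    corrections: list[str] = field(default_factory=list)  # to be fed back into memory

    def submit(self, action: ProposedAction) -> str:
        if action.confidence >= self.threshold:
            self.executed.append(action.description)
            return "executed"
        self.queue.append(action)
        return "queued"

    def review(self, approve: bool, note: str = "") -> None:
        # The reviewer handles the oldest queued action.
        action = self.queue.pop(0)
        if approve:
            self.executed.append(action.description)
        if note:
            # Corrections become memory the kernel can draw on next time.
            self.corrections.append(f"{action.description}: {note}")


gate = ReviewGate()
gate.submit(ProposedAction("send summary email", 0.95))
gate.submit(ProposedAction("waive late fees", 0.40))
gate.review(approve=False, note="fee waivers need manager sign-off")
```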

Final Thoughts

It’s tempting to see “semantic kernel” as yet another abstraction in the AI stack. But in practice, it’s the difference between an assistant who just talks and one who thinks. One that can pick up where it left off, connect the dots across workflows, and explain its reasoning with grounded logic.

It’s not magic. It’s architecture. And like most good architecture, when it works, it disappears into the background—letting your agents do what they’re supposed to do: act like intelligent teammates, not glorified macros.

But—one last thing—don’t underestimate the organizational effort needed to support it. A semantic kernel can’t fix fragmented data governance or chaotic process maps. It thrives when the underlying enterprise knowledge is available, consistent, and maintained. So, before chasing the latest orchestration SDK or memory module, ask: do you know what your enterprise knows? If not, no kernel can save you.