Key Takeaways

- VPCs are not “set and forget.” Agentic workloads evolve rapidly, and every API call, peer connection, or NAT exception can erode isolation if not carefully governed.

- IAM is the single most critical control plane. Over-permissive roles are a bigger risk than weak networks. Fine-grained, temporary, and context-aware access policies are non-negotiable.

- Encryption must extend beyond storage. Model artifacts, inter-GPU communication, and internal data streams demand protection—assuming the VPC itself is “safe” is a dangerous myth.

- Security vs. performance is a balancing act. Latency overhead from encryption or access controls is real, but the cost of breaches and compliance failures far outweighs the performance hit.

- Agentic security is a lifecycle, not a deployment checklist. Continuous IAM reviews, adversarial testing, and human oversight are essential as agents expand into new workflows.

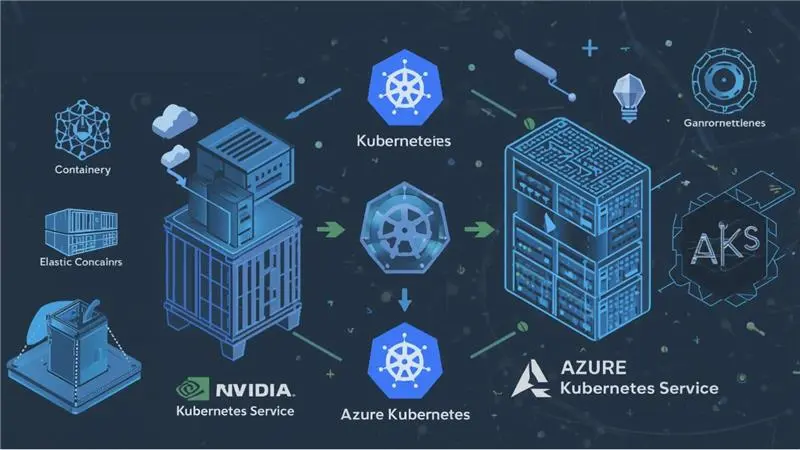

The rush to build agentic process systems—autonomous, decision-making workflows stitched together from LLMs, APIs, and GPU-backed inference pipelines—has produced a new class of security headaches. Everyone wants the scale and flexibility of Kubernetes clusters spinning up agents on demand. Few want to talk about the fact that these environments, if misconfigured, are essentially self-operating insider threats.

There have been teams that light up an NVIDIA-accelerated cluster on AWS or Azure, wire it into their CRMs, and treat security as a post-deployment concern. That’s dangerous. Once you give an agent access to live data and the authority to execute steps, the blast radius of a compromise can be wider than any single employee mistake.

This piece isn’t about security checklists. It’s about practical considerations: how virtual private clouds (VPCs), identity and access management (IAM), and encryption strategies mesh—or sometimes fail—in NVIDIA-powered agent systems.

Also read: Leveraging NVIDIA’s AI stack (CUDA, TensorRT, Jetson) for real‑time smart agent deployment.

The Reality of VPC Isolation

VPCs appear simple in theory: create a logically isolated network, manage ingress and egress, and security is ensured. However, in practice, agentic workloads are complex, requiring access to external APIs, partner systems, model endpoints, and telemetry pipelines.

Take an example from a manufacturing client running autonomous scheduling agents. Their VPC was locked down, but the agents still needed to call supplier APIs over the public internet. The temptation was to just poke holes in the security groups. That single decision weakened the entire premise of isolation.

Some challenges worth noting:

- NAT Gateways are double-edged: they allow controlled outbound traffic, but once outbound traffic is open, agents can be tricked into exfiltrating sensitive data. Not everything malicious looks like a “bad” IP address.

- PrivateLink and service endpoints reduce exposure: whenever possible, route traffic to first-party services over private connections. NVIDIA’s NGC containers pulled via a private endpoint are less of a risk than pulling from public registries.

- Peerings and transit gateways create complexity fast: as soon as multiple VPCs are peered at, the mental model of isolation starts to collapse. I’ve seen setups where the finance VPC was effectively open to development agents through a forgotten peering rule.

So yes, VPCs provide guardrails, but they’re not “set and forget.” Agentic systems shift communication patterns constantly—one week the agent is just reading tickets, the next it’s hitting accounting APIs. If your networking team isn’t in the loop, isolation erodes without anyone noticing.

IAM: The Difference Between Guardrails and Handcuffs

If there’s a single control plane that makes or breaks these systems, it’s IAM. You can have beautifully segmented networks and strong encryption, but if an autonomous agent is handed a role with wildcard permissions, game over.

The mistake that is very common is over-granting roles because agents are unpredictable. Engineers worry, “What if tomorrow the agent needs to write to S3 as part of a new workflow?” So they attach s3:*. That’s not just sloppy—it’s an invitation for lateral movement.

Key points that separate functional IAM from theater:

- Role granularity matters. One client broke out roles by “function class” (data fetcher, notifier, updater) rather than by project. This way, even if an agent evolved its responsibilities, it couldn’t suddenly start deleting databases.

- Temporary credentials beat long-lived keys. NVIDIA Triton Inference Server nodes pulling from private registries should be using short-lived tokens, rotated automatically. Humans hate dealing with rotation; agents don’t care.

- Context-aware access is still underused. Cloud IAM systems now support conditions—time of day, source VPC, MFA enforcement for API calls. It’s odd how rarely these are applied. Agents may not have a thumbprint, but you can restrict them to a computer environment that does.

Note: IAM for agentic systems should be managed with the same paranoia as IAM for CI/CD pipelines. Both are non-human actors executing privileged actions. Both, if breached, can destroy trust instantly.

Data Encryption: At Rest, In Transit, and Sometimes in Use

Encryption tends to be the most straightforward story in security reviews: “Yes, the S3 buckets are AES-256 encrypted, and TLS 1.2 is enforced.” But with NVIDIA-powered agent systems, the surface area widens.

Three specific wrinkles stand out:

1. Model artifacts and checkpoints:

- These aren’t just “data.” They’re intellectual property. Leaving them unencrypted in a GPU cache directory is careless.

- Some teams rely on EBS volume encryption and call it done. Fine for compliance checkboxes, but less fine when snapshots are shared across accounts.

2. Inter-GPU communication:

- With multi-GPU servers, data hops across NVLink or PCIe lanes. The risk here isn’t typical packet sniffing but rather leakage from shared memory or DMA attacks if the host OS is compromised.

- Confidential GPU initiatives are promising (NVIDIA’s Hopper architecture hints at this), but they’re not universally available yet.

3. Agent data streams:

- An agent pulling data from Salesforce, reasoning locally, and writing updates back must encrypt every leg of that journey. But many pipelines break encryption internally, assuming the VPC is “safe.” That’s a myth. Internal threats or compromised workloads make plaintext transit risky.

Encryption in use—confidential computing with TEEs (Trusted Execution Environments)—is starting to enter the conversation. Azure’s confidential VMs with NVIDIA GPUs are an early example. They’re not cheap, and the developer tooling is immature, but for sensitive sectors like healthcare, it’s hard to argue against them.

Where Security Collides with Performance

Every time security people talk about strong isolation, engineers counter with latency complaints. And honestly, they’re not wrong. Routing agent requests through private endpoints adds milliseconds. Encrypting data streams can reduce throughput on multi-GPU inference jobs.

For example, a healthcare deployment where encryption overhead added 8% latency to claims-processing agents. The CIO was furious until we compared that 8% to the potential reputational damage of a HIPAA violation. Not everything can be solved with “optimize your code.” Sometimes you just eat the performance hit.

But tradeoffs are real. Too much IAM complexity, and agents fail because they can’t get credentials. Too many restrictions on nodes to sit idle. This is where practical governance comes in—security policies can’t just be written for the SOC 2 auditor; they have to work in production.

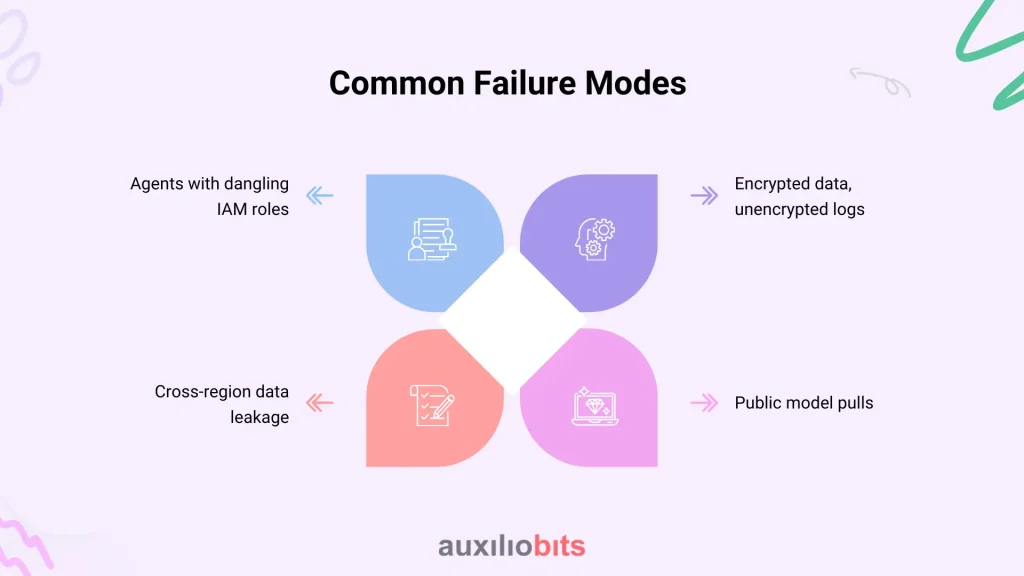

Common Failure Modes

To ground this in reality, here are a few scenarios that recur in different industries:

- Agents with dangling IAM roles: old test roles left attached, giving far more privileges than intended.

- Encrypted data, unencrypted logs: main storage is locked down, but logs in CloudWatch or Elastic contain sensitive payloads in plaintext.

- Cross-region data leakage: agents replicating datasets to GPUs in a “cheaper” region without realizing compliance constraints.

- Public model pulls: downloading NVIDIA containers from Docker Hub instead of private registries, introducing supply chain risk.

None of these failures is an exodus. They’re all the result of assuming that once an agent “works,” it’s safe. That assumption is dangerous.

A Few Recommendations

- Test agents as adversaries. Red-team your own agent system. What happens if an agent gets tricked into sending sensitive data to a public endpoint? You might be surprised how easily it can happen.

- Use service control policies (SCPs). On AWS, SCPs can prevent entire categories of actions regardless of IAM role misconfigurations. This adds a safety net.

- Think lifecycle, not deployment. Security has to evolve as agents take on new workflows. Quarterly IAM reviews are not optional.

- Human oversight is underrated. Everyone’s obsessed with “autonomy.” In practice, approval workflows or human-in-the-loop for certain actions (e.g., financial transfers) are still sensible.

Final Thoughts

Agentic process systems powered by NVIDIA GPUs are not just faster versions of yesterday’s automation platforms. They’re qualitatively different—autonomous, adaptive, and capable of both efficiency gains and unexpected chaos. VPCs, IAM, and encryption remain the foundational pillars of securing them, but none of those tools, on their own, guarantee safety.

Security in this domain isn’t a guaranteed checklist. It’s an ongoing negotiation between autonomy, performance, compliance, and risk tolerance. And sometimes that negotiation feels messy—because it is.

The teams that succeed are the ones treating these controls not as bureaucratic hurdles but as part of the design. When VPC architectures, IAM roles, and encryption schemes are engineered into the fabric of the agentic system—not patched in afterward—you get resilience that scales with the workload.