Key Takeaways

- Secret management in AI is no longer a backend problem—it’s a design discipline. The rise of multi-agent systems means secrets move across dynamic runtimes, orchestration layers, and GPUs. Security must now live inside the architecture, not just around it.

- Cloud-native tools aren’t enough on their own. AWS Secrets Manager, Azure Key Vault, and KMS provide the primitives—but enterprises need an orchestration layer that coordinates short-lived credentials across distributed agents.

- GPU inference is the new security blind spot. Memory persistence, serialized models, and caching layers can unintentionally store or expose secrets. Container isolation and explicit memory clearing are no longer optional.

- AI agents can leak secrets in plain sight. LLMs can reproduce tokens, API keys, and headers that enter their context if prompt controls are weak. Redaction layers and pattern-based auditing must be part of the runtime stack.

- The future of secure AI is ephemeral trust. In agentic architectures, credentials should live only as long as the task does. Dynamic token issuance, continuous rotation, and strict observability define the new baseline for security maturity.

Agentic AI systems are only as secure as their weakest secret. And lately, those “secrets”—API keys, access tokens, encryption credentials, and model endpoints—have been moving deeper into places that traditional IT security frameworks barely touch: orchestration layers, multi-agent runtimes, and GPU inference environments.

We’re seeing an architectural collision here. AI engineers, cloud architects, and DevSecOps teams are all touching the same nerve—but from different perspectives. One side thinks about “weights and biases,” the other about IAM policies and KMS keys. Between them sits an increasingly complex credential problem: how do you manage secrets across an agent stack that spans cloud APIs, ephemeral containers, and GPU memory—without leaking something catastrophic?

This isn’t hypothetical. We’ve already seen a handful of security postmortems (not always public) where leaked access tokens from an inference pipeline triggered enormous data exposures.

Let’s unpack what’s really going on—and what “secure secret management” means when your AI system isn’t just code but a constellation of agents, prompts, and compute layers.

The Context: Why Agent Stacks Complicate Secrets

In a typical enterprise automation setup, secrets management was boring—maybe a .env file, maybe HashiCorp Vault, maybe AWS Secrets Manager. You stored credentials, rotated them, and life went on.

But the moment you introduce agentic architectures—systems where multiple AI agents perform tasks autonomously and interact through orchestration frameworks (LangChain, Semantic Kernel, Azure AI Studio, etc.)—the picture gets messier.

- Agents don’t just run; they spawn. A single coordinator agent may instantiate child agents dynamically. Each new runtime may need credentials for databases, APIs, or vector stores.

- Some secrets are transient. Temporary tokens, session-based model endpoints, or signed URLs need secure creation and destruction.

- Secrets cross trust boundaries. One agent might run inside Azure Functions, another inside a GPU inference container on ECS. Different execution contexts mean different keystores, policies, and audit trails.

- Developers often “hardcode for convenience.” In practice, people bypass secret management for speed—embedding OpenAI keys or AWS credentials into config files just to get a prototype running. Those keys often live long past their intended purpose.

So, the first nuance is this: secret sprawl isn’t just an ops problem anymore—it’s an AI design problem. The moment you treat an LLM agent as code that runs somewhere “else,” you’re implicitly defining a security perimeter you now have to protect.

AWS vs Azure: Different Clouds, Same Headache

Both AWS and Azure have excellent secret-management primitives. The trouble is that using them correctly in an agentic context requires patterns most teams haven’t adopted yet.

AWS: Secrets Manager + KMS + IAM

AWS gives you a robust toolkit:

- AWS Secrets Manager handles secret rotation, encryption at rest, and fine-grained access policies.

- KMS (Key Management Service) underpins encryption for most AWS services, including Secrets Manager.

- IAM Roles and Policies define which services (like ECS tasks, Lambda functions, or SageMaker endpoints) can retrieve specific secrets.

Sounds perfect, right? The catch: real-world agent stacks rarely fit neatly into one service boundary. Consider this flow:

- An agent running in a SageMaker notebook requests credentials from Secrets Manager to call a downstream analytics API.

- That same agent triggers a GPU inference job on ECS via boto3—which itself needs credentials to access model weights stored in S3.

- The inference container logs partial outputs to CloudWatch for debugging—but accidentally includes part of the decrypted credential string.

Everything worked “securely,” but you still leaked sensitive data into logs.

AWS best practices often fail at the edges, where human behavior and agent orchestration collide. The service boundaries are secure; the coordination patterns are not.
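One way to narrow that particular gap is to scrub known secret values at the logging layer, so a stray debug line cannot carry them into CloudWatch. The sketch below is a minimal illustration using Python's standard `logging` module; the class name `KnownSecretFilter` and its `register` method are hypothetical, and it only catches exact matches of values you have registered.

```python
import logging


class KnownSecretFilter(logging.Filter):
    """Replace registered secret values in log messages before they are emitted."""

    def __init__(self):
        super().__init__()
        self._secrets = set()

    def register(self, value):
        # Ignore very short values to avoid masking ordinary words.
        if value and len(value) >= 8:
            self._secrets.add(value)

    def filter(self, record):
        message = record.getMessage()
        for secret in self._secrets:
            message = message.replace(secret, "[REDACTED]")
        # Store the scrubbed text and drop args so it is not formatted again.
        record.msg = message
        record.args = ()
        return True
```

In use, you would attach one instance to your log handler with `handler.addFilter(...)` and call `register()` right after each secret is fetched. Partial or re-encoded fragments of a secret would still slip through, so this complements careful logging rather than replacing it.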

Azure: Key Vault + Managed Identity

Azure takes a slightly different philosophy—more identity-centric, less key-centric.

- Azure Key Vault stores secrets, keys, and certificates.

- Managed Identities let Azure resources (like Web Apps, Logic Apps, or Container Instances) authenticate to Key Vault without credentials in code.

- Azure Role-Based Access Control (RBAC) governs who or what can read those secrets.

It’s elegant, especially when combined with Azure OpenAI or Azure Machine Learning—you can let the compute instance itself retrieve the token securely. But it’s easy to get it wrong when AI workloads span multiple environments:

- A developer tests locally, so they add a static AZURE_CLIENT_SECRET to .env.

- The agent is later deployed in production under a managed identity.

- The two paths diverge—one secure, one not—and secrets drift out of sync.

This kind of “environment split” is where enterprise AI projects quietly lose their security posture. The AI pipeline works, but no one’s quite sure where the live credentials reside anymore.
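A cheap guard against this split is a startup check that refuses to run in production while local-development secrets are still present. The sketch below assumes a hypothetical `APP_ENV` variable marks the deployment stage; only `AZURE_CLIENT_SECRET` comes from the scenario above.

```python
import os

# Static-credential variables that should not be set where a managed identity is expected.
STATIC_CREDENTIAL_VARS = ("AZURE_CLIENT_SECRET",)


def find_static_credentials(env):
    """Return the names of static-credential variables present in `env`."""
    return [name for name in STATIC_CREDENTIAL_VARS if env.get(name)]


def assert_no_env_split(env=None):
    """Raise if a production process still carries local-development secrets.

    APP_ENV is a hypothetical variable name used to mark the deployment stage.
    """
    env = os.environ if env is None else env
    if env.get("APP_ENV") != "production":
        return
    leftovers = find_static_credentials(env)
    if leftovers:
        raise RuntimeError(
            "static credentials set in production: " + ", ".join(leftovers)
        )
```

Calling `assert_no_env_split()` at agent startup makes the insecure path fail loudly instead of quietly coexisting with the managed identity.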

The GPU Inference Layer: The Forgotten Security Surface

Most secret-management frameworks assume secrets live in files, environment variables, or API requests. But once you start performing GPU-based inference—especially with fine-tuned or proprietary models—secrets can end up in memory, CUDA tensors, or temporary cache directories.

GPU inference workloads introduce new risks:

- Shared GPU nodes (especially in Kubernetes or cloud AI clusters) may have memory persistence issues—data remnants can remain accessible across sessions if isolation isn’t strict.

- Inference containers often pull model weights or environment keys at runtime from object storage (e.g., S3, Blob Storage). If your startup scripts log these events, you’re leaking more than you think.

- Runtime-level caching (like TensorRT engine caching or ONNX Runtime graph optimization) may temporarily write optimized engines or decrypted models to disk.

There was a case where a major AI startup unintentionally exposed inference-time credentials through GPU memory inspection on a shared A100 cluster. The problem wasn’t malicious exploitation—it was negligence: developers assumed GPU memory was “sandboxed” enough to ignore. It wasn’t.

When you push AI workloads closer to the GPU, you must extend secret governance there too. That means:

- Using ephemeral container lifecycles so credentials never persist beyond the job.

- Clearing CUDA context memory explicitly after inference runs.

- Avoiding hardcoded endpoints or signed URLs in the model-serving code.

- Auditing logs from frameworks like PyTorch Lightning, which can include more environment metadata than expected.
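The first two points can be sketched as a context manager that gives each job a throwaway scratch directory and releases cached GPU memory on exit. This is a rough illustration, not a complete isolation story: the name `ephemeral_inference_job` is hypothetical, PyTorch is treated as optional, and `torch.cuda.empty_cache()` only returns cached blocks to the driver without overwriting them, so tensors holding sensitive data should be zeroed (for example with `tensor.zero_()`) and dropped before the block exits.

```python
import contextlib
import pathlib
import shutil
import tempfile

try:
    import torch  # optional: only needed for the GPU cleanup step
except ImportError:
    torch = None


@contextlib.contextmanager
def ephemeral_inference_job():
    """Yield a scratch directory that is deleted when the job ends."""
    workdir = pathlib.Path(tempfile.mkdtemp(prefix="inference-"))
    try:
        yield workdir
    finally:
        # Remove cached engines, downloaded weights, and any temp credentials.
        shutil.rmtree(workdir, ignore_errors=True)
        # Release cached allocator blocks if PyTorch and a GPU are present.
        if torch is not None and torch.cuda.is_available():
            torch.cuda.empty_cache()
```

Pointing cache and download paths at the yielded directory keeps anything written during the run from outliving it.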

Real-World Pattern: Multi-Agent Pipeline on AWS

Here’s a simplified example.

Imagine a financial analytics platform that uses an agentic stack:

- An orchestration layer (LangGraph) runs in AWS Lambda.

- A data-enrichment agent runs in ECS Fargate.

- A GPU inference agent for credit-risk modeling runs in SageMaker.

- Secrets for each are stored in AWS Secrets Manager.

Now, consider what happens when these agents call each other:

- Lambda retrieves the API key for the enrichment service.

- The ECS agent decrypts its credentials to access an RDS instance.

- The SageMaker agent fetches both the model endpoint key and an S3 presigned URL to load weights.

Each of those three requests touches Secrets Manager independently. If a policy or rotation event happens mid-pipeline (say, someone rotates the enrichment API key), part of the system breaks silently.

So, the “secure” solution creates operational brittleness. You can’t just encrypt; you have to coordinate credential lifecycle events (issuance, rotation, revocation) across an agent graph. Some teams now handle this with an internal secret-orchestration microservice: a small control-plane API that manages short-lived credentials and issues JWTs scoped per task instead of per agent.

That’s not something AWS gives you out of the box. It’s architecture-level hygiene, not just service configuration.
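To make the per-task idea concrete, here is a minimal sketch of issuing and verifying a short-lived, task-scoped JWT using only the standard library. Everything named here (`issue_task_token`, `verify_task_token`, the scope strings, the five-minute default TTL) is an assumption for illustration; a real control plane would more likely use a vetted JWT library and keys held in KMS or Key Vault.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_task_token(signing_key, task_id, scopes, ttl_seconds=300, now=None):
    """Issue an HS256-signed JWT bound to one task rather than to an agent."""
    issued_at = int(time.time() if now is None else now)
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {
        "sub": task_id,
        "scope": sorted(scopes),
        "iat": issued_at,
        "exp": issued_at + ttl_seconds,
    }
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, claims)
    )
    signature = hmac.new(signing_key, signing_input.encode(), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify_task_token(signing_key, token, required_scope, now=None):
    """Return the claims if signature, expiry, and scope check out; else raise ValueError."""
    try:
        header_b64, claims_b64, signature_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token")
    expected = hmac.new(
        signing_key, f"{header_b64}.{claims_b64}".encode(), hashlib.sha256
    ).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(claims_b64))
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        raise ValueError("token expired")
    if required_scope not in claims["scope"]:
        raise ValueError("scope not granted")
    return claims
```

Because the token names the task and its scopes, rotating the underlying enrichment API key does not invalidate what downstream agents hold; they only ever see a token that expires with the run.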

Secrets in the Agent Brain

Here’s a subtle but serious issue: agents remember.

If your LLM-based agent ever processes or “thinks about” credentials—for instance, when reading environment variables or config data—there’s a risk it may reproduce those secrets later in conversation or logs.

I’ve seen logs where an autonomous agent explained an API error and, in doing so, printed the full Authorization header it was debugging. It wasn’t “hacked”; it was helpful.

Preventing this requires:

- Strict prompt constraints (keep secret values and sensitive variable names out of anything the LLM can read).

- Token masking or redaction layers — intercept outputs and scrub anything matching credential patterns.

- Audit policies that treat LLM logs like database logs — never assume a “debug trace” is benign.
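A redaction layer of the kind described above can start as a handful of regular expressions applied to every agent output before it reaches a log or a user. The patterns below are illustrative assumptions, not a complete catalogue; tune them to the credential formats your stack actually uses.

```python
import re

# Illustrative patterns only; extend for your own credential formats.
CREDENTIAL_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),                         # AWS access key ID
    re.compile(r"(?i)\bBearer\s+[A-Za-z0-9._~+/=-]{16,}"),   # bearer token in a header
    re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),                  # "sk-" prefixed API keys
]


def redact(text: str) -> str:
    """Replace anything that looks like a credential with a placeholder."""
    for pattern in CREDENTIAL_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text
```

Run against the "helpful" debugging scenario, `redact("Authorization: Bearer abcdefghijklmnop1234.xyz")` returns `"Authorization: [REDACTED]"`. Pattern matching will miss secrets in unfamiliar formats, which is why it pairs with keeping secrets out of the agent's context in the first place.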

This is one of those subtle contradictions: we want explainable agents, but we also want them to forget. Balancing transparency and secrecy isn’t just a technical challenge; it’s philosophical.

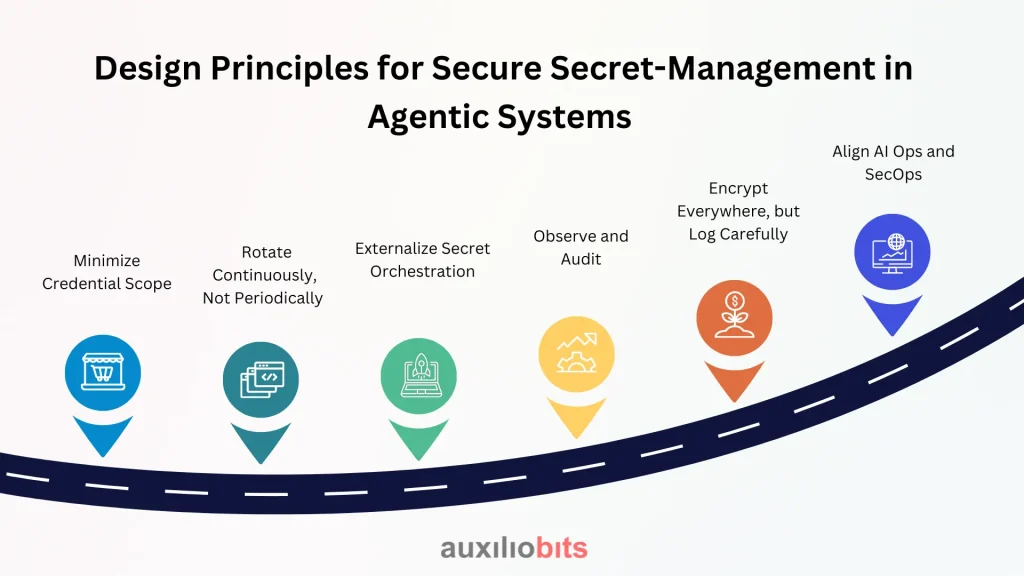

Design Principles for Secure Secret-Management in Agentic Systems

It’s easy to get lost in cloud-specific tooling. But there are broader patterns that actually matter more than the AWS/Azure flavor differences.

1. Minimize Credential Scope

Don’t give an agent more power than its function demands. Use least-privilege IAM roles, and separate them per agent type. If a data-retrieval agent never needs to write, don’t include write permissions “just in case.”
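As a small illustration on the AWS side, a read-only agent's policy can name exactly one secret and exactly one action. The helper name `read_only_secret_policy` and the ARN in the usage note are hypothetical placeholders.

```python
import json


def read_only_secret_policy(secret_arn: str) -> dict:
    """Build an IAM policy document that allows reading exactly one secret."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["secretsmanager:GetSecretValue"],
                "Resource": [secret_arn],
            }
        ],
    }


def to_policy_json(policy: dict) -> str:
    """Serialize the policy document for attachment to a role."""
    return json.dumps(policy, indent=2)
```

For example, `read_only_secret_policy("arn:aws:secretsmanager:us-east-1:123456789012:secret:enrichment-api-key-AbCdEf")` yields a document with no write, rotate, or list permissions, and no wildcard resources.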

2. Rotate Continuously, Not Periodically

Traditional 90-day rotations don’t fit transient workloads. Agents might live for minutes, so secrets should too. Consider issuing tokens that expire within a single run.

3. Externalize Secret Orchestration

When multiple agents need coordinated access, build a dedicated control plane that issues and revokes credentials dynamically. Treat it like a short-lived certificate authority.

4. Observe and Audit

Monitor access logs across Secrets Manager, Key Vault, and inference clusters. Look for anomalies like a GPU node requesting credentials outside expected workflow hours.
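The kind of check described here can be prototyped in a few lines before wiring it into a real log pipeline. The event shape below (dicts with `principal`, `secret_id`, and a timezone-aware `time`) and the default 06:00 to 22:00 UTC window are assumptions for the sketch; real events from CloudTrail or Key Vault diagnostics would need mapping into it.

```python
from datetime import datetime, timezone


def out_of_window_accesses(events, allowed_principals, start_hour=6, end_hour=22):
    """Return events from unexpected principals or outside the expected UTC hours.

    Each event is a dict with hypothetical keys: "principal", "secret_id",
    and "time" (a timezone-aware datetime).
    """
    flagged = []
    for event in events:
        hour = event["time"].astimezone(timezone.utc).hour
        unexpected_principal = event["principal"] not in allowed_principals
        unexpected_hour = not (start_hour <= hour < end_hour)
        if unexpected_principal or unexpected_hour:
            flagged.append(event)
    return flagged
```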

5. Encrypt Everywhere, but Log Carefully

It’s amazing how many teams encrypt their secrets but forget to redact them in logs. If you’re using structured logging, configure your logger to hash or mask any field containing “key,” “token,” or “secret.”
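For structured logs, the masking rule can be applied by field name before the payload is handed to the logger. This sketch masks rather than hashes, and the function name `mask_sensitive_fields` is hypothetical; it walks nested dicts and lists so a token buried in a sub-object is still caught.

```python
SENSITIVE_MARKERS = ("key", "token", "secret")


def mask_sensitive_fields(payload):
    """Return a copy of a structured log payload with sensitive fields masked."""
    if isinstance(payload, dict):
        return {
            name: "***"
            if any(marker in str(name).lower() for marker in SENSITIVE_MARKERS)
            else mask_sensitive_fields(value)
            for name, value in payload.items()
        }
    if isinstance(payload, list):
        return [mask_sensitive_fields(item) for item in payload]
    return payload
```

Matching on substrings is deliberately aggressive: a field called `monkey_count` would be masked too, which is usually an acceptable price for not leaking `api_key`.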

6. Align AI Ops and SecOps

Security teams often treat AI workloads as opaque. Bring them into design discussions early. Explain what an “agent orchestrator” actually does — or they’ll assume it’s another Lambda.

The GPU Edge Case: Secrets in Weights and Configs

One under-discussed angle: model weights themselves can embed secrets.

When fine-tuning or RLHF runs are configured with environment-dependent parameters, sometimes the serialized model (PyTorch .pt, TensorFlow .pb, or ONNX .onnx) ends up storing pieces of that configuration. It’s rare, but it happens—especially if training scripts use dynamic configuration objects.

If that model file later moves to a public inference endpoint or a partner environment, you’ve just published secrets baked into the model artifact.

The fix isn’t glamorous:

- Sanitize model configs before serialization.

- Store model metadata separately.

- Run static scans on exported models (using tools like Trivy or custom regex) before deployment.
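The "custom regex" option can be as simple as searching the raw bytes of an exported file for credential-shaped strings. The sketch below makes several assumptions: the pattern set is illustrative, the whole file is read into memory (large checkpoints would need chunked reads), and compressed or otherwise encoded payloads would have to be unpacked first.

```python
import re
from pathlib import Path

# Byte patterns are illustrative; extend them for the credential formats you use.
ARTIFACT_PATTERNS = {
    "aws_access_key_id": re.compile(rb"AKIA[0-9A-Z]{16}"),
    "private_key_block": re.compile(rb"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "secret_assignment": re.compile(
        rb"(?i)(?:api[_-]?key|secret|token)\s*[=:]\s*\S{8,}"
    ),
}


def scan_artifact(path):
    """Return the sorted names of patterns found in a serialized model file."""
    data = Path(path).read_bytes()
    return sorted(
        name for name, pattern in ARTIFACT_PATTERNS.items() if pattern.search(data)
    )
```

A non-empty result from `scan_artifact("model.onnx")` is a reason to block the deployment step and inspect the export, not proof of a leak; expect some false positives.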

This is where MLOps and SecOps need shared tooling, not just shared Slack channels.

When Things Go Wrong

There’s no shortage of cautionary tales. A few stand out:

- OpenAI API key exposure (2023), where thousands of developers committed API keys to public GitHub repos, not maliciously but by accident.

- A fintech startup (unnamed, 2024) that deployed agent-based workflow automations in Azure Functions, only to realize the agents had read access to the company’s entire Cosmos DB because of overly broad managed identity scopes.

- GPU cluster misconfigurations where inference jobs were run in shared tenancy without container-level encryption—and memory persistence exposed fragments of prior jobs.

Every one of these cases shares the same theme: credentials didn’t leak because encryption was weak. They leaked because context was weak.

The Path Forward

Agentic AI brings its own kind of chaos—dynamic orchestration, distributed compute, and opaque reasoning. Managing secrets through all that requires more than a few environment variables and a vault plugin.

The mature posture looks something like this:

- AWS or Azure handles the primitives (encryption, storage, rotation).

- A lightweight internal control plane handles runtime issuance.

- Agents authenticate via signed identity assertions, not static keys.

- GPU inference jobs run ephemerally, with isolated containers and explicit memory wipes.

- Security teams continuously test for credential sprawl, not just data leaks.

At that point, your agents stop being potential liabilities — and start becoming first-class citizens in a zero-trust world.

Because, truthfully, in multi-agent architectures, trust isn’t binary. It’s negotiated, ephemeral, and conditional. Just like the credentials we’re learning—painfully—to protect.