Key Takeaways

- RPA didn’t fail—its job description was misunderstood. Bots were built to execute known decisions at scale. Problems start when enterprises expect them to interpret ambiguity, adapt to change, or resolve judgment-heavy exceptions. That was never their role.

- Most automation pain comes from forcing reasoning into execution layers. Bloated rule sets, fragile workflows, endless regression testing—these are symptoms of a deeper architectural mistake. When bots are asked to “decide,” complexity explodes and resilience disappears.

- AI agents add value before automation, not instead of it. Agents don’t replace workflows. They absorb uncertainty, interpret context, and determine intent—so that downstream automation can remain fast, deterministic, and auditable.

- “AI-powered RPA” often increases risk rather than reducing it. Embedding intelligence directly into bots blurs accountability, weakens governance, and makes failures harder to explain. Separating reasoning from execution keeps both controllable.

- The real scaling advantage comes from better decisions, not more bots. Enterprises that win aren’t the ones with the largest bot count. They’re the ones where agents reduce noise, surface meaningful exceptions, and let humans focus on judgment—not keystrokes.

Why bots still matter, and why they can’t think for themselves

There’s a familiar pattern I’ve been seeing lately in boardrooms and delivery reviews. Someone says, half-defensively, “We already have RPA.” The implication is clear: we’ve automated, box checked, move on.

But nobody in the room truly believes that anymore.

Not because RPA failed—far from it. RPA did exactly what it promised. It automated clicks. It moved data between systems that were never meant to talk. It reduced headcount pressure, stabilized brittle processes, and bought enterprises time.

The problem is that time has now run out.

What’s changed isn’t the value of RPA as an execution layer. What’s changed is the complexity of decisions sitting around those bots. And bots, by design, don’t reason.

That distinction—execution versus reasoning—is where most automation strategies quietly fall apart.

RPA’s Original Superpower

Robotic process automation was never meant to be intelligent. That was the point.

Early RPA succeeded because it:

- Mimicked deterministic human actions

- Operated reliably inside stable UI-driven workflows

- Required no core system changes

- Delivered ROI fast, sometimes uncomfortably fast

For processes like invoice posting, journal uploads, report generation, or master data updates, RPA still works. In fact, it works better than many modern tools that overcomplicate simple problems.

Some finance teams have stabilized month-end close using bots that haven’t changed in four years. Procurement teams rely on RPA to reconcile goods receipt notes (GRNs) across plants. HR teams still use bots to sync onboarding data across systems that refuse to integrate cleanly.

All of that is fine.

But RPA only works when three assumptions hold:

- The rules are stable

- The inputs are predictable

- The decision logic is already known

Once any of those break, RPA starts to show strain.

And in modern enterprises, they break constantly.

Where RPA Quietly Starts to Fail

Nobody wakes up one morning and says, “RPA is useless now.” Instead, it degrades in small, operationally painful ways.

- Exception queues grow, not shrink

- Bots pause more often “pending human review”

- Rule sets balloon into unreadable decision trees

- Changes require more regression testing than the original process

Take invoice processing—a classic RPA success story.

Posting invoices? Easy. Matching against POs? Still manageable. But detecting whether a price deviation is acceptable this month because of a contract amendment buried in an email thread? That’s not a rule problem. That’s a reasoning problem.

Or supplier onboarding.

RPA can extract documents, populate ERP fields, and trigger approvals. But deciding whether a supplier should be onboarded when ownership structures change, sanctions lists update weekly, and ESG policies evolve? Again, that’s reasoning, not execution.

RPA doesn’t fail loudly. It fails by becoming brittle, overgoverned, and strangely expensive to maintain.

That’s not a tooling issue. It’s a layer mismatch.

Execution vs. Reasoning: A Necessary Separation

The mistake many enterprises make is trying to teach bots how to think.

They add:

- More if-else conditions

- More lookup tables

- More validation steps

- More human checkpoints

Eventually, you’re not automating—you’re encoding anxiety.

This is where AI-powered agents enter the picture, not as RPA replacements, but as a reasoning layer above execution.

Think of it this way:

- RPA executes decisions

- Agents decide what should happen

That separation matters more than most architecture diagrams admit.

What AI + Agents Do

There’s a lot of talk about “AI automating processes.” That’s not accurate, and it sets unrealistic expectations.

AI agents don’t replace workflows. They sit between uncertainty and action.

In practice, a reasoning layer does things RPA was never designed for:

- Interprets unstructured signals (emails, contracts, chat messages, PDFs)

- Evaluates context, not just conditions

- Adapts when policies change without rewriting flows

- Learns patterns across cases instead of handling each one blindly

Consider expense auditing.

RPA can validate amounts, receipts, and policy limits. But detecting suspicious behavior—split claims, timing anomalies, subtle abuse patterns—requires probabilistic judgment. Agents can flag why something looks wrong, not just that it violated a rule.
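To make the “flag why, not just that” distinction concrete, here is a minimal Python sketch of one such check: detecting split claims, where each claim stays under a per-claim limit but claims by the same employee at the same merchant on the same day add up to more than it. The `Claim` record, the `find_split_claims` function, and the grouping key are hypothetical. This is a simple deterministic heuristic rather than the probabilistic judgment described above; what it illustrates is a flag that carries its own explanation.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Claim:
    # Hypothetical expense-claim record; field names are illustrative only.
    claim_id: str
    employee: str
    merchant: str
    day: date
    amount: float


def find_split_claims(claims: list[Claim], per_claim_limit: float) -> list[dict]:
    """Flag groups of claims that each pass the limit but together exceed it.

    Each flag carries a human-readable reason, so a reviewer sees *why*
    the group looks suspicious rather than just which rule fired.
    """
    groups: dict[tuple[str, str, date], list[Claim]] = defaultdict(list)
    for c in claims:
        groups[(c.employee, c.merchant, c.day)].append(c)

    flags = []
    for (employee, merchant, day), group in groups.items():
        if len(group) < 2:
            continue
        # Only interesting if every single claim would pass the rule on its own.
        if any(c.amount > per_claim_limit for c in group):
            continue
        total = sum(c.amount for c in group)
        if total > per_claim_limit:
            flags.append({
                "claim_ids": sorted(c.claim_id for c in group),
                "reason": (
                    f"{len(group)} claims by {employee} at {merchant} on {day} "
                    f"are each within the {per_claim_limit:.2f} limit "
                    f"but total {total:.2f}"
                ),
            })
    return flags


if __name__ == "__main__":
    claims = [
        Claim("c1", "alice", "Hotel X", date(2024, 3, 1), 180.0),
        Claim("c2", "alice", "Hotel X", date(2024, 3, 1), 170.0),
        Claim("c3", "bob", "Cafe Y", date(2024, 3, 1), 20.0),
    ]
    for flag in find_split_claims(claims, per_claim_limit=200.0):
        print(flag["claim_ids"], flag["reason"])
```

Grouping on employee, merchant, and day is only one possible key; a real reasoning layer would likely combine several such signals before deciding anything.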

Or take procurement anomaly detection.

A bot can check prices. An agent can reason about whether a deviation is acceptable based on supplier history, commodity trends, contract clauses, and urgency signals from operations.

Once the agent decides, RPA executes the outcome: block, route, post, or escalate.

Clean handoff. Clear responsibilities.

Why “AI Inside RPA” Is the Wrong Idea

Many platforms now advertise “AI-powered RPA.” The phrase sounds appealing. In practice, it often means bolting intelligence into the wrong layer.

When AI is embedded directly into execution flows:

- Debugging becomes opaque

- Auditability suffers

- Governance teams panic (sometimes rightfully)

- Minor model changes ripple through production bots

Reasoning should be explainable, testable, and decoupled from the mechanics of clicking buttons.

A better pattern looks like this:

- Agent analyzes context and recommends an action

- Decision is logged, explainable, and reviewable

- RPA carries out the approved step in the target system

That separation keeps regulators calm, auditors happy, and operations sane.
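The three-step pattern above can be sketched in Python, assuming a hypothetical `Decision` record passed from the reasoning layer to the execution layer. The agent’s recommendation is logged, a review policy decides whether it can run unattended, and the execution side only dispatches approved decisions to a deterministic bot step. `Decision`, `AuditLog`, `apply_review_policy`, the 0.9 confidence threshold, and the stand-in bot callables are all illustrative assumptions, not any RPA platform’s API.

```python
import json
from dataclasses import asdict, dataclass
from enum import Enum
from typing import Callable


class Action(str, Enum):
    # The four outcomes named in the article.
    BLOCK = "block"
    ROUTE = "route"
    POST = "post"
    ESCALATE = "escalate"


@dataclass
class Decision:
    # Hypothetical hand-off record from the reasoning layer to the execution layer.
    case_id: str
    action: Action
    rationale: str
    confidence: float
    approved: bool = False


class AuditLog:
    """Append-only list of JSON lines, so every decision can be reviewed later."""

    def __init__(self) -> None:
        self.entries: list[str] = []

    def record(self, event: str, decision: Decision) -> None:
        self.entries.append(json.dumps({"event": event, **asdict(decision)}))


def apply_review_policy(decision: Decision, threshold: float = 0.9) -> Decision:
    # Hypothetical policy: auto-approve only high-confidence recommendations;
    # everything else waits for a human reviewer.
    decision.approved = decision.confidence >= threshold
    return decision


def execute(decision: Decision, bots: dict[Action, Callable[[str], None]],
            log: AuditLog) -> bool:
    """Execution layer: runs the deterministic bot step only for approved decisions."""
    log.record("received", decision)
    if not decision.approved:
        log.record("held_for_review", decision)
        return False
    bots[decision.action](decision.case_id)
    log.record("executed", decision)
    return True


if __name__ == "__main__":
    log = AuditLog()
    # Stand-in "bots": in practice these would trigger RPA jobs or API calls.
    bots = {a: (lambda case_id, a=a: print(f"{a.value} -> {case_id}")) for a in Action}
    d = apply_review_policy(
        Decision("INV-1042", Action.BLOCK, "Price above contract rate; no amendment found", 0.95)
    )
    execute(d, bots, log)
    print(*log.entries, sep="\n")
```

Keeping the agent’s output as plain data (action, rationale, confidence) is the design choice that matters here: the bot never sees the model, and auditors can read the log without rerunning the model.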

Real-World Pattern: From Bot Farms to Decision Systems

Some enterprises proudly ran hundreds of bots and still missed critical risks.

One global manufacturer had automated invoice posting across regions. Volumes were high, and accuracy looked good. But duplicate invoices kept slipping through: not identical duplicates, but near-duplicates with the same vendor, the same amount, and slightly altered descriptions.

Rules didn’t catch them. Humans were too overloaded to notice patterns.

Introducing an agent layer changed the economics entirely.

The agent didn’t just flag duplicates. It surfaced why they were suspicious, clustered similar behaviors, and prioritized cases worth human attention. RPA still did the posting. Humans intervened only when the reasoning layer said, “This deserves a look.”

Bot count stayed the same. Value didn’t.
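The near-duplicate problem in this story lends itself to a small sketch. Below, invoices are bucketed by vendor and exact amount, descriptions within each bucket are compared with Python’s standard-library `difflib.SequenceMatcher`, and suspicious pairs are returned most-similar-first, each with a reason. This is an assumed, simplified technique, not the manufacturer’s actual agent; the `Invoice` record, the `near_duplicates` function, and the 0.85 similarity threshold are hypothetical, and the sketch covers detection and prioritization but not clustering.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal
from difflib import SequenceMatcher
from itertools import combinations


@dataclass(frozen=True)
class Invoice:
    # Hypothetical invoice record; field names are illustrative only.
    invoice_id: str
    vendor: str
    amount: Decimal
    description: str


def near_duplicates(invoices: list[Invoice], min_similarity: float = 0.85) -> list[dict]:
    """Return suspicious invoice pairs, most similar first, each with a reason."""
    # Same vendor + same amount is the cheap filter; similarity is the judgment.
    buckets: dict[tuple[str, Decimal], list[Invoice]] = defaultdict(list)
    for inv in invoices:
        buckets[(inv.vendor, inv.amount)].append(inv)

    findings = []
    for (vendor, amount), group in buckets.items():
        for a, b in combinations(group, 2):
            score = SequenceMatcher(
                None, a.description.lower(), b.description.lower()
            ).ratio()
            if score >= min_similarity:
                findings.append({
                    "pair": (a.invoice_id, b.invoice_id),
                    "similarity": round(score, 2),
                    "reason": (
                        f"same vendor ({vendor}) and amount ({amount}); "
                        f"descriptions {score:.0%} similar"
                    ),
                })
    # Prioritize: the most similar pairs are the ones worth a human look first.
    findings.sort(key=lambda f: f["similarity"], reverse=True)
    return findings


if __name__ == "__main__":
    invoices = [
        Invoice("INV-1", "Acme", Decimal("1200.00"), "Steel brackets batch 44 March"),
        Invoice("INV-2", "Acme", Decimal("1200.00"), "Steel brackets batch 44 Mar."),
        Invoice("INV-3", "Acme", Decimal("1200.00"), "Hydraulic hose repair"),
    ]
    for finding in near_duplicates(invoices):
        print(finding["pair"], finding["reason"])
```

Bucketing on exact amount mirrors the case described (same vendor, same amount); catching variants with slightly changed amounts or dates would need fuzzier keys. Comparing every pair is quadratic within a bucket, which is acceptable while buckets stay small.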

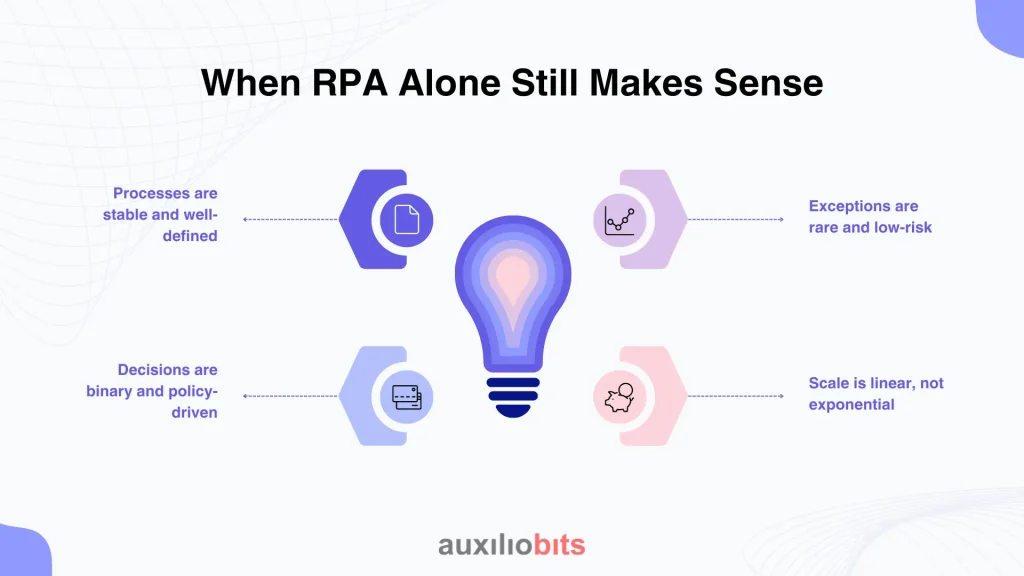

When RPA Alone Still Makes Sense

This isn’t an anti-RPA argument. It’s an architectural one.

RPA alone is still appropriate when:

- Processes are stable and well-defined

- Decisions are binary and policy-driven

- Exceptions are rare and low-risk

- Scale is linear, not exponential

Payroll uploads. Ledger reconciliations. Static report distribution. System-to-system bridging.

Not everything needs cognition. The danger is assuming everything can survive without it.

The Emerging Automation Stack

Most mature automation programs are converging on a layered model, even if they don’t call it that yet:

- Experience layer: humans, chat interfaces, approvals

- Reasoning layer: AI agents, policy engines, decision models

- Execution layer: RPA, APIs, scripts, workflows

- System layer: ERP, CRM, legacy platforms

RPA didn’t disappear here. It got promoted to what it was always best at: execution.

Trying to collapse these layers into one tool usually ends badly.

Important Shifts Leaders Miss

A few nuanced realities tend to get overlooked in strategy decks:

- Agents reduce exception volume; they don’t eliminate it

- Reasoning systems need governance just as much as bots do

- Human oversight doesn’t vanish—it becomes higher leverage

- ROI shifts from labor savings to risk avoidance and decision quality

These benefits don’t show up in the first sprint. They emerge over quarters.

Which is why some executives conclude prematurely that “AI didn’t deliver,” when in fact they measured the wrong thing.

The Real Question Isn’t “RPA or AI”

Ask instead: where should decisions be made, and where should actions be executed?

If your bots are deciding, you’re already in trouble.

If your humans are still executing, you’re wasting expensive judgment.

The middle ground—agents deciding, bots executing—is where automation finally starts to scale without becoming fragile.

RPA isn’t dead. It’s just been miscast as the brain instead of the hands.

And hands, as it turns out, still matter—when someone smarter tells them what to do.