Key Takeaways

- LangChain turns chatbots into cognitive coaches – Unlike static assistants, LangChain agents combine reasoning, memory, and data access, enabling financial conversations that evolve rather than reset with every query.

- Context and continuity define effective financial AI – By recalling past interactions and linking them to real-time data, these bots deliver nuanced, situation-aware advice that feels human rather than scripted.

- Agentic architectures create real collaboration – Using LangChain and LangGraph, multiple specialized agents (for budgeting, risk, compliance, etc.) can coordinate to simulate the dynamics of a real financial advisory team.

- Ethical and regulatory caution is critical – Personalized financial guidance can blur into regulated “advice,” requiring careful design boundaries, privacy safeguards, and transparency in reasoning.

- The future is hybrid, not humanless – AI coaching bots won’t replace advisors but will empower them, handling repetitive financial monitoring while humans manage complex judgment and emotional context.

There’s a quiet shift happening in personal finance—and it’s not the usual app revolution. It’s the arrival of AI agents that don’t just answer your questions but coach you through your financial decisions. They understand context, recall your patterns, and—if designed well—nudge you the way a human advisor might.

LangChain, a framework originally built to orchestrate large language models (LLMs), is now becoming the backbone for these “financial coaching bots.” But this isn’t about building another chatbot that spits out budgeting tips. It’s about stitching together reasoning, data interpretation, and memory into something that behaves less like a calculator and more like a thinking partner.


The Broken State of Digital Finance “Help”

If you’ve ever tried to ask a banking chatbot why your monthly expenses look off or how to hit a savings goal faster, you already know the problem.

These bots are reactive—they respond to direct queries but fail at following through. Ask “how can I save more this month?” and they’ll toss you a list of generic tips (“cut your lattes,” “use a budgeting app”). Ask again next month, and they’ll forget you even exist.

Humans don’t work like that. A coach remembers that you took a vacation last month, that you tend to overspend on weekends, and that you’re due for an insurance renewal. Financial advice divorced from memory and reasoning is just a search engine in disguise.

That’s where LangChain agents change the game.

From Chatbots to Cognitive Coaches

LangChain isn’t a finance tool per se—it’s an agent framework. It lets you chain together reasoning steps, memory stores, and tool integrations (like APIs or databases) so that the AI can think, not just respond.

In finance, that means an agent can:

- Recall previous spending trends before suggesting new budgets.

- Run calculations using live data (through integrations like Plaid, Yodlee, or open banking APIs).

- Adjust recommendations when your income, risk appetite, or debt levels change.

- Explain why a certain decision might help—or backfire.

You don’t get this with static GPT-based assistants that start fresh with every prompt. LangChain agents can have episodic memory—retaining past interactions—and contextual reasoning—linking what you said today to what you did last quarter.
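To make "episodic memory" concrete, here is a minimal sketch in plain Python. It is not a LangChain API: the `EpisodicMemory` class, its method names, and the JSON file layout are all hypothetical, and the keyword match stands in for the semantic recall a real system would likely use.

```python
import json
import tempfile
from pathlib import Path


class EpisodicMemory:
    """Hypothetical per-user memory that survives across sessions (not a LangChain class)."""

    def __init__(self, path: Path):
        self.path = path
        # Load notes from earlier sessions if the file already exists.
        self.events = json.loads(path.read_text()) if path.exists() else {}

    def remember(self, user_id: str, note: str) -> None:
        self.events.setdefault(user_id, []).append(note)
        self.path.write_text(json.dumps(self.events))

    def recall(self, user_id: str, keyword: str) -> list:
        # Naive keyword match; a production system would likely use embeddings.
        return [n for n in self.events.get(user_id, []) if keyword.lower() in n.lower()]


store = Path(tempfile.mkdtemp()) / "coach_memory.json"

session_one = EpisodicMemory(store)
session_one.remember("user-1", "Took a vacation in May; travel spend was high")
session_one.remember("user-1", "Insurance renewal due in August")

# A fresh object stands in for a later conversation: the notes are still there.
session_two = EpisodicMemory(store)
recalled = session_two.recall("user-1", "vacation")
print(recalled)
```

The point of the second object is that nothing is held in process memory between "sessions"; whatever the coach knows about the user comes back from the store.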

A human financial planner does that intuitively. A stateless chat assistant cannot, unless memory and tool access are built around it.

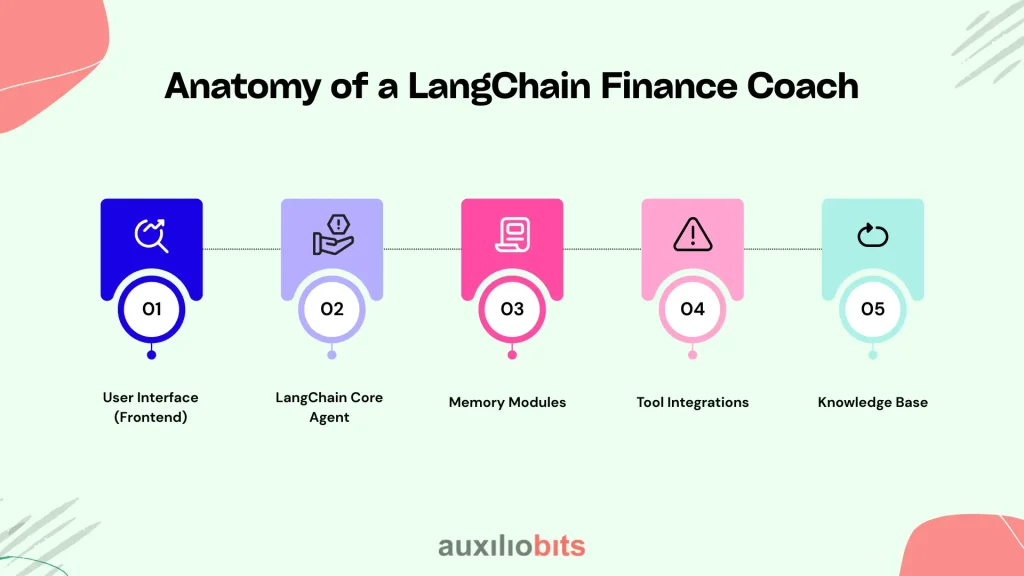

Anatomy of a LangChain Finance Coach

A working system usually combines several moving parts, each playing a distinct role. The technical architecture might look like this (simplified):

- User Interface (Frontend)—Could be a chat screen in a mobile banking app or a voice assistant embedded in a budgeting platform.

- LangChain Core Agent—The reasoning engine. It interprets user intent, plans actions, and routes queries to external tools.

- Memory Modules—These store past transactions, advice sessions, and user preferences. LangChain supports vector stores like Pinecone or FAISS for semantic recall.

- Tool Integrations—APIs for financial data (Plaid, QuickBooks, investment dashboards), calculators, and even external AI models for specialized tasks (like tax planning).

- Knowledge Base—Custom documents, policy guidelines, or financial education material indexed with embeddings for retrieval-augmented generation (RAG).
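The Knowledge Base component is the easiest one to illustrate in isolation. The sketch below ranks a few snippets against a question using bag-of-words cosine similarity, then assembles the best match as context for a prompt. The snippets, the `retrieve` helper, and the scoring method are illustrative assumptions; a real deployment would typically use embeddings and a vector store such as FAISS or Pinecone.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge-base snippets; a real system would index full documents.
DOCS = {
    "emergency_fund": "An emergency fund usually covers three to six months of expenses.",
    "student_loans": "Paying extra toward a student loan principal reduces total interest.",
    "down_payment": "A larger house down payment lowers the mortgage amount and monthly payment.",
}


def tokenize(text: str) -> Counter:
    """Lowercase word counts, used as a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list:
    """Return the ids of the k snippets most similar to the query."""
    q = tokenize(query)
    ranked = sorted(DOCS, key=lambda doc_id: cosine(q, tokenize(DOCS[doc_id])), reverse=True)
    return ranked[:k]


top = retrieve("Should I pay off my student loan faster?")
prompt_context = "\n".join(DOCS[doc_id] for doc_id in top)
print(top)
```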

When the user says, “I want to save for a house in three years, but I’m paying off a student loan—what should I prioritize?”

The agent doesn’t just respond with prewritten advice. It might:

- Fetch your debt schedule and monthly inflows.

- Compare amortization timelines with projected savings rates.

- Reference your previous behavior (e.g., how consistently you’ve met targets).

- Offer a scenario plan: “If you allocate 20% toward debt, you’ll clear it by 2027; shifting 10% could get you a down payment by 2028.”

That’s not just chatting—it’s coaching.
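The arithmetic behind a scenario plan like this is simple enough to sketch. The example below compares two ways of splitting income between loan payments and savings. All figures (income, loan balance, interest rate, down payment target) are made-up assumptions, the function names are hypothetical, and savings are assumed to earn no return.

```python
import math


def months_to_payoff(balance: float, annual_rate: float, payment: float) -> int:
    """Months needed to clear a loan with fixed payments and monthly compounding."""
    monthly_rate = annual_rate / 12
    if payment <= balance * monthly_rate:
        raise ValueError("payment never covers the interest")
    months = 0
    while balance > 0:
        balance = balance * (1 + monthly_rate) - payment
        months += 1
    return months


def months_to_target(target: float, monthly_saving: float) -> int:
    """Months needed to reach a savings target, ignoring investment returns."""
    return math.ceil(target / monthly_saving)


def compare_plans(income: float, loan: float, rate: float, target: float, splits: dict) -> dict:
    """splits maps a plan name to (percent of income to debt, percent to savings)."""
    results = {}
    for name, (debt_pct, save_pct) in splits.items():
        results[name] = {
            "debt_free_in": months_to_payoff(loan, rate, income * debt_pct / 100),
            "down_payment_in": months_to_target(target, income * save_pct / 100),
        }
    return results


plans = compare_plans(
    income=5000, loan=20000, rate=0.06, target=30000,
    splits={"debt_first": (20, 10), "savings_first": (10, 20)},
)
for name, outcome in plans.items():
    print(name, outcome)
```

An agent would run something like this as a tool and let the language model turn the numbers into a sentence, rather than asking the model to do the arithmetic itself.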

Why LangChain Fits This Problem Unusually Well

Most frameworks excel at dialogue or data analysis, not both. LangChain’s design philosophy—agent orchestration—bridges this divide. It lets developers plug LLMs into tool ecosystems without losing coherence across sessions.

Three features stand out:

- Memory Persistence: The system remembers user states across conversations, allowing a form of longitudinal guidance.

- Tool-Calling Architecture: Agents can call external functions or APIs mid-conversation, turning language into action.

- RAG (Retrieval-Augmented Generation): Financial rules, compliance policies, or user-specific files can be fetched contextually to inform responses.
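Of the three, tool calling is the most mechanical, so it is worth a small sketch. The idea is that the model emits a structured request and the application looks up and runs the matching function. The code below is plain Python rather than LangChain's own tool interface. The tool names, the registry, and the hard-coded "model output" are hypothetical.

```python
from typing import Callable, Dict


# Hypothetical tools; real ones would wrap bank or market-data APIs.
def get_balance(account: str) -> float:
    return {"checking": 2400.0, "savings": 8100.0}[account]


def savings_rate(income: float, saved: float) -> float:
    return round(saved / income, 3)


TOOLS: Dict[str, Callable[..., float]] = {
    "get_balance": get_balance,
    "savings_rate": savings_rate,
}


def run_tool_call(call: dict) -> float:
    """Execute a structured call of the form {"name": ..., "args": {...}}."""
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call["args"])


# In a real agent the model would emit this structure; here it is hard-coded.
model_output = {"name": "savings_rate", "args": {"income": 5000, "saved": 750}}
result = run_tool_call(model_output)
print(result)
```

Rejecting unknown tool names up front matters in a financial setting: the application, not the model, decides which actions are possible.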

In practice, a finance coach built this way could recall that you already asked about Roth IRAs last week and skip the primer—jumping straight to contribution caps and tax strategy this year.

That feels human, not robotic.

Real-World Applications Emerging

A few startups and labs are already experimenting with LangChain-powered finance assistants, often quietly.

- ClearScore’s experimental AI assistant uses chained reasoning to evaluate credit score impact scenarios, for example how paying off one card before another affects your rating.

- HyperJar, a UK fintech, reportedly tests conversational saving plans using contextual AI memory.

- Some independent developers on GitHub have open-sourced LangChain templates for goal-based savings bots that simulate “if/then” financial modeling via external spreadsheets.

These are early, messy prototypes—but they work well enough to demonstrate potential.

Interestingly, banks are watching. While few would publicly announce LangChain integrations, internal innovation teams are experimenting with agent-driven financial guidance for Gen Z customers who find traditional advisors intimidating or inaccessible.

What Makes Coaching Different from Advice

Most digital finance tools give answers. Coaching, however, is a process of continuous calibration. It’s built on feedback loops—observe, interpret, recommend, adjust.

Here’s what makes it technically and conceptually harder:

- Personalization demands persistence. Without memory, personalization becomes a gimmick.

- Reasoning across contexts. Should a user invest or pay down debt? That depends on goals, risk, and time horizons—not a simple formula.

- Behavioral nudging. A good coach recognizes that finance isn’t only math—it’s psychology. Agents must know when to push and when to reassure.

LangChain’s modularity makes these feedback loops programmable. Memory nodes can track user goals over months; custom chains can compute risk-adjusted recommendations; tool access can execute small simulations (“what if my income drops by 10%?”).
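A "what if my income drops by 10%?" simulation can be very small. The sketch below compares the time to reach a savings goal before and after a proportional income drop. The function names and the figures are illustrative assumptions, and the model ignores interest and changing costs.

```python
import math
from typing import Optional


def months_to_goal(income: float, costs: float, goal: float) -> Optional[int]:
    """Months needed to reach the goal, or None if nothing is left to save."""
    surplus = round(income - costs, 2)
    return math.ceil(goal / surplus) if surplus > 0 else None


def what_if_income_drop(income: float, costs: float, goal: float, drop: float = 0.10) -> dict:
    """Compare the timeline before and after a proportional income drop."""
    return {
        "baseline_months": months_to_goal(income, costs, goal),
        "stressed_months": months_to_goal(income * (1 - drop), costs, goal),
    }


scenario = what_if_income_drop(income=4000, costs=3200, goal=6000)
print(scenario)
```

Returning `None` rather than a very large number lets the agent say plainly that the goal is out of reach under that scenario.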

You could say it turns the spreadsheet into a conversation.

When It Fails—or Becomes Dangerous

There’s a catch. Or several.

Financial coaching bots tread dangerously close to regulated advice. In the U.S., regulators such as the SEC distinguish general financial education (“Here’s how compound interest works”) from personalized investment advice (“Buy this ETF”), which can fall under investment-adviser rules. Agents that generate tailored suggestions risk crossing that boundary.

Then there’s trust. A bot that “remembers” too much feels invasive; one that remembers too little feels useless. Striking the balance between personalization and privacy isn’t just an engineering problem—it’s an ethical one.

And let’s be honest: LangChain’s tool orchestration can break. One missing API key, one hallucinated transaction category, and suddenly your “coach” is misclassifying expenses or recommending impossible savings targets.
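Some of these failures can be contained with plain validation around the agent. The sketch below shows three such guards: failing fast on a missing credential, mapping unknown expense categories to a safe default, and rejecting savings targets that exceed what is left after essentials. The category list, the `BANK_API_KEY` name, and the function names are hypothetical.

```python
ALLOWED_CATEGORIES = {"rent", "groceries", "transport", "entertainment", "other"}


def require_api_key(env: dict, name: str = "BANK_API_KEY") -> str:
    """Fail fast with a clear message instead of letting a tool call break mid-conversation."""
    key = env.get(name, "")
    if not key:
        raise RuntimeError(f"{name} is not set; refusing to call the data provider")
    return key


def clean_category(raw: str) -> str:
    """Map anything outside the known set to 'other' instead of trusting model output."""
    category = raw.strip().lower()
    return category if category in ALLOWED_CATEGORIES else "other"


def feasible_target(monthly_income: float, monthly_essentials: float, target: float) -> bool:
    """A monthly savings target is feasible only if it fits in what remains after essentials."""
    return 0 <= target <= monthly_income - monthly_essentials


print(clean_category("Crypto Yacht"))
print(feasible_target(monthly_income=3000, monthly_essentials=2500, target=800))
```

In practice `require_api_key` would be passed `os.environ`; taking a plain dict keeps the check easy to test.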

This is why most working prototypes are positioned as assistants, not advisors. They offer guidance, not guarantees.

Designing for Human-Like Interaction

The goal isn’t to mimic empathy—it’s to model understanding.

A well-designed finance agent doesn’t need to “feel” your frustration when your spending spikes; it just needs to recognize patterns that indicate stress and adapt its tone. That can be achieved with sentiment analysis or user-state tracking chains.

For example:

- If spending deviates 20% from baseline for two consecutive months, the agent might ask, “Did something change recently—like moving or a job shift?”

- If the user replies “Yes, rent went up,” the agent can adjust its forecasting baseline instead of labeling it as “overspending.”

That kind of nuance turns a data monitor into a partner.
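The rule in this example is easy to express in code. The sketch below flags a check-in when each of the last two months deviates from the historical baseline by at least 20%, and resets the baseline once the user confirms a lasting change. Function names and figures are illustrative assumptions.

```python
from statistics import mean


def needs_check_in(history: list, recent: list, threshold: float = 0.20) -> bool:
    """True when each of the last two months deviates from baseline by at least the threshold."""
    baseline = mean(history)
    last_two = recent[-2:]
    if len(last_two) < 2 or baseline == 0:
        return False
    return all(abs(month - baseline) / baseline >= threshold for month in last_two)


def rebaseline(recent: list) -> float:
    """After the user confirms a lasting change (e.g. higher rent), forecast from recent months."""
    return mean(recent[-2:])


history = [2000, 2100, 1900]   # earlier monthly spending
recent = [2500, 2600]          # the last two months

if needs_check_in(history, recent):
    print("Did something change recently, like moving or a job shift?")
    new_baseline = rebaseline(recent)  # applied only if the user says yes
    print(new_baseline)
```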

There’s also value in controlled imperfection: not every response needs to project full certainty. Real advisors admit uncertainty (“You might want to double-check with your accountant”). Agents can be prompted to include similar humility markers, which may improve user trust.

Building Blocks That Matter

When building such systems, developers often underestimate the importance of intermediate reasoning steps. LangChain’s AgentExecutor, combined with chain-of-thought-style prompting patterns such as ReAct, lets an AI plan before answering.

A good financial coach might:

- Parse user intent (“wants to save for a car”).

- Retrieve past data (income, current savings rate).

- Call an external API (to estimate loan rates).

- Run a calculation chain (to model 12-month affordability).

- Generate an explanation (“If you maintain $500 monthly savings, you’ll hit your goal in 18 months without new debt”).

Without visible reasoning, the output feels arbitrary. Users don’t just want answers—they want justification.
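One way to keep the reasoning visible is to record each step alongside the final answer. The sketch below does this for the savings example, under stated assumptions: the `Plan` dataclass and `coach` function are hypothetical, the inputs are made up, and the external API step (loan rates) is left out to keep the example self-contained.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Plan:
    answer: str = ""
    trace: list = field(default_factory=list)  # human-readable reasoning steps


def coach(goal_name: str, goal_amount: float, current_savings: float, monthly_saving: float) -> Plan:
    plan = Plan()
    # Step 1: parse intent (given directly here; a model would extract it from text).
    plan.trace.append(f"intent: save for {goal_name}")
    # Step 2: retrieve past data (passed in as arguments in this sketch).
    remaining = max(goal_amount - current_savings, 0)
    plan.trace.append(f"retrieved: savings {current_savings:.0f}, remaining {remaining:.0f}")
    # Step 3: run the calculation.
    months = math.ceil(remaining / monthly_saving)
    plan.trace.append(f"calculated: {remaining:.0f} / {monthly_saving:.0f} per month = {months} months")
    # Step 4: generate the explanation.
    plan.answer = (
        f"If you maintain ${monthly_saving:.0f} monthly savings, "
        f"you'll hit your goal in {months} months."
    )
    return plan


plan = coach("a car", goal_amount=10000, current_savings=1000, monthly_saving=500)
print(plan.answer)
for step in plan.trace:
    print(" -", step)
```

Showing the trace to the user, or at least logging it, is what lets someone check the answer instead of taking it on faith.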

That’s what builds confidence.

LangGraph: The Next Evolution

A growing number of teams are using LangGraph, a newer layer built atop LangChain that structures multi-agent systems as stateful graphs. In financial contexts, this means multiple specialized agents (for budgeting, risk, and compliance, for example) can collaborate.

For example:

- The Budget Agent analyzes spending categories.

- The Risk Agent evaluates investment capacity.

- The Compliance Agent ensures responses stay within regulatory boundaries.

Each agent reads from and writes to a shared state that flows through the graph, creating a kind of distributed cognition.

This architecture mirrors how real financial teams operate—advisors, risk officers, and analysts all contributing perspectives. It’s messy, but it works.
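The shared-state idea can be sketched without any framework. In the example below, three agents are ordinary functions that read and update one dictionary, run in a fixed order. This is not LangGraph's API: a real graph would also support branching and loops, and the agent rules, field names, and figures here are hypothetical.

```python
from typing import Callable, List

State = dict  # shared state passed from agent to agent


def budget_agent(state: State) -> State:
    spending = state["spending"]
    state["top_category"] = max(spending, key=spending.get)
    state["surplus"] = state["income"] - sum(spending.values())
    return state


def risk_agent(state: State) -> State:
    # Hypothetical rule: only consider investing with a surplus and a 3-month cash buffer.
    state["can_invest"] = state["surplus"] > 0 and state["emergency_months"] >= 3
    return state


def compliance_agent(state: State) -> State:
    # Builds the reply from earlier agents' findings and attaches a general-information notice.
    reply = f"Your largest spending category is {state['top_category']}."
    if state["can_invest"]:
        reply += " You may have room to invest. This is general information, not personalized advice."
    state["reply"] = reply
    return state


PIPELINE: List[Callable[[State], State]] = [budget_agent, risk_agent, compliance_agent]


def run(state: State) -> State:
    for agent in PIPELINE:
        state = agent(state)
    return state


final = run({
    "income": 4000,
    "spending": {"rent": 1500, "food": 600, "fun": 400},
    "emergency_months": 4,
})
print(final["reply"])
```

Because every agent sees what the earlier ones wrote, the compliance step can act on the budget and risk findings without any agent calling another directly.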

A Small Case: Freelance Income Coaching

Consider a freelance designer juggling irregular payments. A LangChain-based finance coach could:

- Pull live data from connected bank accounts.

- Categorize inflows (client payments vs. refunds).

- Estimate runway using predictive modeling on historical income gaps.

- Advise on when to transfer surplus into tax-saving instruments.

If the designer asks, “Can I afford to skip this month’s tax installment?” the agent could simulate short-term cash flow, factor in penalties, and return:

“Skipping is possible but will incur ₹2,500 in interest by March. Alternatively, you could pay on time by reducing your upcoming mutual fund contribution.”

That’s actionable reasoning—not vague advice.
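The categorization and runway steps can be sketched in a few lines. Instead of predictive modeling, this example uses a deliberately conservative stand-in: it assumes income stays at its worst recent monthly level. The keyword rule, the function names, and the rupee figures are all illustrative assumptions.

```python
def classify_inflow(description: str) -> str:
    """Hypothetical keyword rule; real categorization would be more robust."""
    return "refund" if "refund" in description.lower() else "client_payment"


def runway_months(cash: float, monthly_expenses: float, monthly_income: list) -> float:
    """Months of runway if income stays at the worst recent level (a conservative assumption)."""
    worst_income = min(monthly_income)
    burn = monthly_expenses - worst_income
    return float("inf") if burn <= 0 else cash / burn


inflows = ["Invoice 114 - Acme Studio", "Refund: software subscription"]
labels = [classify_inflow(item) for item in inflows]

# Only client payments count toward income; amounts are in rupees.
runway = runway_months(
    cash=120_000,
    monthly_expenses=60_000,
    monthly_income=[90_000, 30_000, 75_000, 40_000],
)
print(labels, runway)
```

A short runway under the worst-case assumption is the kind of signal that would lead the coach to advise against skipping a tax installment.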

When Humans Still Win

A reasonable question: will these agents replace financial advisors? Unlikely.

Human coaches excel at managing ambiguity—things like family pressure, emotional trade-offs, or career uncertainty. AI can model variables but not values.

The real potential lies in augmentation: hybrid models where human advisors oversee dozens of clients with AI handling daily micro-decisions. Imagine an assistant that pre-screens transactions, flags risks, and drafts goal updates—freeing advisors to focus on strategy.

LangChain’s orchestration layer makes this feasible at scale.

The Bigger Picture

Personal finance has always been a trust problem disguised as a math problem. People don’t need help knowing that saving is good—they need help doing it consistently. Coaching bots that blend memory, reasoning, and contextual awareness might finally address that behavioral gap.

LangChain, for all its quirks, gives developers a practical substrate to make these bots less mechanical and more mindful.

Whether banks, fintechs, or independent developers get there first doesn’t matter much. What matters is that financial advice is slowly becoming conversational again—just this time, the conversation happens between a person and an algorithm that remembers.