Key Takeaways

- LangGraph is purpose-built for agent-based orchestration, where reasoning steps take time and context evolves.

- It models time as a first-class concern, allowing pauses, retries, event-driven triggers, and long-running state management.

- Modularity via subgraphs and composable logic makes LangGraph suitable for enterprise-scale architecture—not just demos.

- LangGraph supplies a checkpointing interface, but production-grade durability and observability still have to be engineered deliberately, which leaves developers in control of operational behavior.

- In domains like insurance, procurement, finance, and legal, LangGraph can orchestrate complex, semi-autonomous workflows more naturally than legacy BPM tools.

In enterprise environments, “long-running” isn’t a euphemism for inefficiency. It’s the nature of distributed operations. Some processes should take days. A mortgage underwriting workflow that wraps up in 30 seconds? That’s either synthetic or reckless. More often, the delay is legitimate: the workflow is waiting for document validation, legal review, or third-party API responses that are queued behind real-world bottlenecks.

We’re not talking about inefficiencies; we’re talking about orchestrated latency. The real challenge is not speed but resilience. What we need is automation that doesn’t collapse just because someone took an extra day to reply.

And this is precisely where traditional automation platforms start to fray.

Why Traditional Orchestration Tools Fall Short

Let’s give credit where it’s due. Tools like Camunda, Airflow, and Temporal have done an excellent job at standardizing control flow, retries, and failure handling. But what they don’t model well are the gray areas—reasoning steps, asynchronous decision-making, and workflows driven by understanding rather than static rules.

Here’s a typical modern orchestration scenario:

- A user uploads a PDF with messy handwritten annotations.

- An LLM-based document classifier parses it and flags it as high-risk.

- A compliance agent initiates the process and sends a message to a regulatory bot.

- Nothing happens for 2 days because the regulator’s API is offline.

- Meanwhile, the user sends a clarification email, changing the document interpretation.

Try modeling that in BPMN. Try storing all intermediate thoughts, reasoning steps, and context shifts across a multi-agent workflow. The whole thing begins to wobble.

LangGraph natively supports these dynamic, delayed, and reasoning-heavy workflows. Its architecture treats “thinking” as a first-class operation—not a hacky script jammed into a box labeled “Python function.”

LangGraph: From Prompt Pipelines to State Machines

LangGraph’s key innovation isn’t visual design or real-time throughput. It’s the idea that LLM-based agents should be orchestrated as modular, stateful nodes in a graph, rather than chained calls in a monolithic script.

Some core capabilities LangGraph offers:

- Deterministic state tracking: You know exactly where in the workflow your data is.

- Conditional branching based on structured agent output: Not “true/false,” but “user_intent == ‘request escalation’ and retry_count > 2.”

- Pause and resume: Not pseudo-pauses. Real, persistent state with checkpointed memory.

- Composable subgraphs: Think reusable agent workflows, like Lego blocks—not one-off monoliths.

You’re not babysitting agents anymore. You’re designing a protocol where they behave intelligently over time, even if hours pass between one thought and the next.
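The branching condition quoted in the list above can be sketched as a plain routing function over structured state. This is ordinary Python for illustration, not LangGraph's own API; `TicketState`, `route`, and the node names it returns are hypothetical. In LangGraph, a function of this shape would typically be attached to a node as a conditional edge.

```python
from typing import TypedDict


class TicketState(TypedDict):
    """Structured output that an upstream agent is assumed to have produced."""
    user_intent: str
    retry_count: int


def route(state: TicketState) -> str:
    """Return the name of the next node, based on more than a true/false flag."""
    if state["user_intent"] == "request escalation" and state["retry_count"] > 2:
        return "escalate_to_human"
    if state["retry_count"] > 2:
        # Retries are exhausted, but the user did not ask for escalation.
        return "close_with_apology"
    return "retry_agent"


if __name__ == "__main__":
    print(route({"user_intent": "request escalation", "retry_count": 3}))
```

Because the router only reads the state and returns a label, it can be unit-tested without running any agent.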

Anatomy of a LangGraph Agent Orchestration

LangGraph orchestrations are conceptually clean but operationally powerful. A graph consists of:

- Nodes: Represent tasks or decisions. These can be agents, tools, or conditional logic units. Each can be stateless or stateful, synchronous or async.

- Edges: Define transitions between nodes. An edge is either fixed or conditional; a conditional edge runs a routing function over the structured state to pick the next node.

- State object: Your single source of truth. It can carry history, external references, decisions, timestamps, user feedback, and even agent “mood” if you’re modeling personality-based behavior.

Let’s say you’re modeling a contract review workflow. Your nodes might be:

- Initial Parsing Agent

- Risk Analysis Agent

- Human Review Fork (triggers human-in-the-loop if needed)

- Negotiation Response Generator

- Legal Sign-Off Agent

Each one leaves traceable breadcrumbs in the state, enabling downstream agents to reason instead of reprocessing the same raw inputs.
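To make the "breadcrumbs in the state" idea concrete, here is a minimal framework-free sketch of part of the contract review flow. It is not LangGraph code: the node functions, the `trail` field, the keyword heuristic standing in for an LLM, and the 0.5 risk threshold are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Optional, TypedDict


class ReviewState(TypedDict):
    contract_text: str
    risk_score: float
    trail: List[str]  # breadcrumbs appended by each node


def parse(state: ReviewState) -> ReviewState:
    state["trail"].append("parsed")
    return state


def analyze_risk(state: ReviewState) -> ReviewState:
    # Placeholder heuristic standing in for an LLM-based risk agent.
    risky = "indemnif" in state["contract_text"].lower()
    state["risk_score"] = 0.9 if risky else 0.1
    state["trail"].append(f"risk={state['risk_score']}")
    return state


def human_review(state: ReviewState) -> ReviewState:
    state["trail"].append("queued_for_human")
    return state


def sign_off(state: ReviewState) -> ReviewState:
    state["trail"].append("signed_off")
    return state


NODES: Dict[str, Callable[[ReviewState], ReviewState]] = {
    "parse": parse,
    "analyze_risk": analyze_risk,
    "human_review": human_review,
    "sign_off": sign_off,
}


def next_node(current: str, state: ReviewState) -> Optional[str]:
    """Edges: one fixed transition and one conditional transition."""
    if current == "parse":
        return "analyze_risk"
    if current == "analyze_risk":
        return "human_review" if state["risk_score"] > 0.5 else "sign_off"
    return None  # human_review and sign_off end this sketch


def run(state: ReviewState, start: str = "parse") -> ReviewState:
    current: Optional[str] = start
    while current is not None:
        state = NODES[current](state)
        current = next_node(current, state)
    return state
```

A downstream node can read `state["trail"]` and `state["risk_score"]` instead of re-parsing the contract text, which is the point of keeping decisions in the state.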

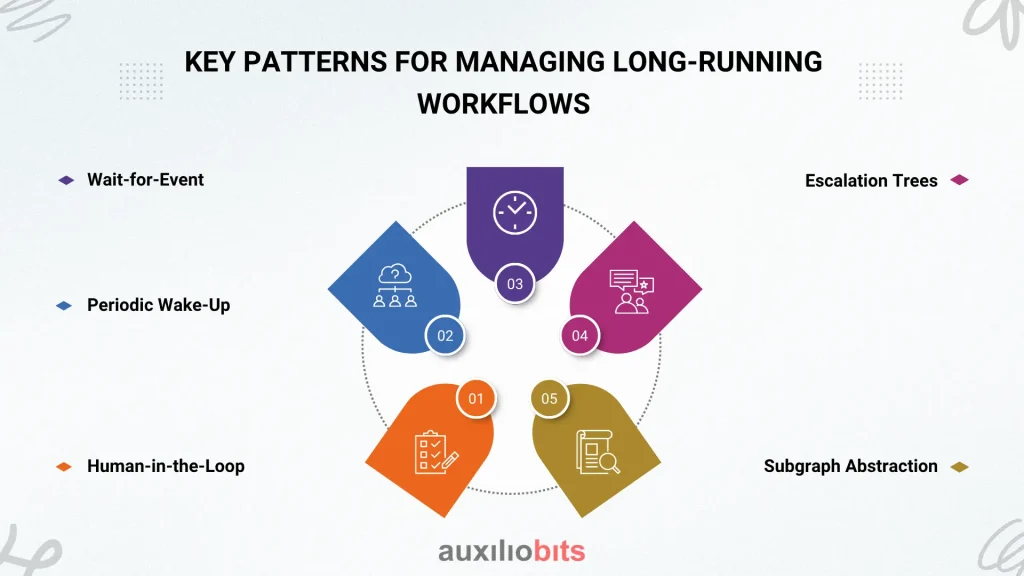

Key Patterns for Managing Long-Running Workflows

LangGraph supports several orchestration patterns that are invaluable when workflows aren’t linear or short-lived:

1. Wait-for-Event

- Ideal for: External system inputs (e.g., email, webhook, API callback).

- Common in: Procurement, legal, HR onboarding.

2. Periodic Wake-Up

- Think of agents that poll APIs selectively, guided by time windows, exponential backoff, or event triggers.

3. Human-in-the-Loop

- Built-in pause and resume logic.

- Accepts human decisions as structured state updates.

4. Escalation Trees

- Not just retries. Escalate to a different class of agent after multiple failures—say, from an LLM to a rules engine, then to a human.

5. Subgraph Abstraction

- Build once, use everywhere.

- Fraud detection logic doesn’t need to be redefined per process—it’s a pluggable component.

These patterns aren’t “features.” They’re architectural principles you can build systems around.
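As one illustration, the escalation tree pattern can be sketched in a few lines of plain Python. This is a hedged sketch rather than a LangGraph feature: the `escalate` helper, the tier names, and the three stand-in handlers are hypothetical, and a handler signals failure here by returning `None` or raising.

```python
from typing import Callable, List, Optional, Tuple

Handler = Callable[[str], Optional[str]]


def escalate(task: str, tiers: List[Tuple[str, Handler]],
             max_attempts: int = 2) -> Tuple[str, str]:
    """Try each tier in order; move to the next tier after max_attempts failures."""
    for name, handler in tiers:
        for _ in range(max_attempts):
            try:
                result = handler(task)
            except Exception:
                result = None  # an exception counts as a failed attempt
            if result is not None:
                return name, result
    raise RuntimeError(f"all tiers failed for task: {task!r}")


def flaky_llm(task: str) -> Optional[str]:
    return None  # stand-in for an LLM agent that cannot decide


def rules_engine(task: str) -> Optional[str]:
    return "approve" if "small" in task else None


def human(task: str) -> Optional[str]:
    return "queued for adjudicator"


TIERS: List[Tuple[str, Handler]] = [
    ("llm", flaky_llm),
    ("rules_engine", rules_engine),
    ("human", human),
]
```

Calling `escalate("small claim", TIERS)` falls through the LLM tier and is resolved by the rules engine, while a task the rules cannot handle ends up with the human tier.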

State Persistence, Timeouts, and Recovery

This is where LangGraph’s maturity—or rather, its willingness to let you own maturity—becomes apparent.

By design, LangGraph keeps orchestration lightweight. It doesn’t enforce a specific database or timeout engine; persistence goes through a pluggable checkpointer. For long-running processes, the state must live outside the worker process. Redis, DynamoDB, or even file-based checkpoints can work, depending on the SLAs involved.

Timeout logic can be custom. You can:

- Use a scheduler to poke idle graphs.

- Add TTL logic to state records.

- Create watchdog services that monitor pending transitions.
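The TTL and watchdog options above can be sketched as a small scan over pending runs. The `PendingRun` record and `find_expired` helper are hypothetical names, and the in-memory dictionary stands in for whatever state store is actually used.

```python
import time
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PendingRun:
    run_id: str
    waiting_on: str      # e.g. "regulator_api" or "document_resubmission"
    updated_at: float    # Unix timestamp of the last state change
    ttl_seconds: float   # how long this run may stay idle


def find_expired(runs: Dict[str, PendingRun],
                 now: Optional[float] = None) -> List[str]:
    """Return the ids of runs that have been idle longer than their TTL."""
    now = time.time() if now is None else now
    return sorted(
        run.run_id
        for run in runs.values()
        if now - run.updated_at > run.ttl_seconds
    )
```

A scheduler could call `find_expired` on an interval and, for each id returned, either resume the graph with a timeout event or raise an alert.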

If a graph crashes mid-execution, the run can resume from its last checkpoint, because the checkpointed state holds every prior agent output. Rehydration is straightforward as long as each node is safe to re-run.

But yes—you have to think about durability. LangGraph won’t save you from poor architectural discipline. Which is exactly why it works well in professional hands.
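The file-based option and the crash recovery described above can be sketched with the standard library alone. This is an assumption-laden illustration, not LangGraph's checkpointer: the step names, the JSON layout, and the `fail_at` switch used to simulate a crash are all made up for the example.

```python
import json
import os
import tempfile
from pathlib import Path
from typing import Optional

STEPS = ["intake", "verify", "decide"]


def save_checkpoint(path: Path, state: dict) -> None:
    """Write atomically: dump to a temp file, then rename over the target."""
    fd, tmp_name = tempfile.mkstemp(dir=str(path.parent), suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as handle:
        json.dump(state, handle)
    os.replace(tmp_name, path)


def load_checkpoint(path: Path) -> Optional[dict]:
    if not path.exists():
        return None
    with path.open(encoding="utf-8") as handle:
        return json.load(handle)


def run(path: Path, fail_at: Optional[str] = None) -> dict:
    """Run all steps, skipping any that a previous attempt already completed."""
    state = load_checkpoint(path) or {"completed": [], "outputs": {}}
    for step in STEPS:
        if step in state["completed"]:
            continue  # finished before the crash, do not redo it
        if step == fail_at:
            raise RuntimeError(f"simulated crash in {step}")
        state["outputs"][step] = f"{step}-ok"
        state["completed"].append(step)
        save_checkpoint(path, state)  # persist after every step
    return state
```

Checkpointing after every step means a restart loses at most the step that was in flight, which is why that step needs to be safe to repeat.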

Real-World Example: Claims Processing in Insurance

Let’s model a common real-world process that’s anything but short-lived.

Scenario: Health Claim Filing

Workflow Nodes:

- Intake Agent: Parses claim documents.

- Eligibility Verifier: Confirms policy status via API.

- Human Review Trigger: Branches based on complexity.

- Anomaly Detection Agent: Uses past claim data for pattern matching.

- Final Decision Agent: Synthesizes all prior data to decide the outcome.

- Notification Dispatcher: Communicates the result to the user.

Unique Aspects:

- May pause 48–72 hours waiting for document resubmission.

- Includes a human adjudicator in some branches.

- Requires an external audit trail for compliance.

How LangGraph Helps:

- Each transition is logged and recoverable, and routing is deterministic given the recorded state.

- Human inputs arrive via email, form, or webhook and trigger specific graph transitions.

- Agents don’t just “wait”—they observe, reason, and re-plan.

And yes, this maps better to LangGraph than to tools like Camunda. Why? Because the decisions are probabilistic, not procedural.
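One way to picture human inputs triggering transitions while keeping an audit trail is a small event handler over the claim record. This is a plain-Python sketch under stated assumptions: the status names, event types, `TRANSITIONS` table, and `apply_event` function are hypothetical and not part of LangGraph.

```python
from typing import Dict, Tuple

# (current status, event type) -> next status
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("awaiting_documents", "documents_resubmitted"): "eligibility_check",
    ("awaiting_human_review", "review_approved"): "final_decision",
    ("awaiting_human_review", "review_rejected"): "notify_denial",
}


def apply_event(claim: dict, event: dict) -> dict:
    """Return an updated copy of the claim; every event is recorded in the audit list."""
    current = claim["status"]
    target = TRANSITIONS.get((current, event["type"]))
    entry = {
        "from": current,
        "event": event["type"],
        "source": event.get("source", "unknown"),  # e.g. email, form, webhook
        "to": target if target is not None else current,
        "accepted": target is not None,
    }
    return {
        **claim,
        "status": entry["to"],
        "audit": claim["audit"] + [entry],
    }
```

Events that do not match the current status are still written to the audit list with `accepted` set to `False`, so a late or duplicate webhook leaves a trace without moving the claim.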

LangGraph vs BPM Engines: Where It Wins and Where It Doesn’t

| Feature | LangGraph | BPMN Tools (e.g., Camunda) |
| --- | --- | --- |
| LLM-native support | ✅ First-class | ❌ Often bolted-on |
| Event-driven architecture | ✅ Strong, developer-first | ✅ But verbose to implement |
| Visual design tools | ❌ Not yet available | ✅ Mature editors |
| Human-in-loop modeling | ✅ Simple via external events | ⚠️ Often requires custom code |
| Modularity via subgraphs | ✅ Elegant | ⚠️ Achievable but clunky |
| Observability tooling | ⚠️ Customizable, but early stage | ✅ Extensive |

LangGraph isn’t a visual BPM tool for business analysts. But for architects and developers working with LLMs, APIs, and async orchestration, it’s a flexible, clean, composable framework.

Deployment and Observability in Production

LangGraph deployments are straightforward—if you’re disciplined. A minimal production setup should include:

- State Store: Pick something with TTL, indexing, and failover.

- Monitoring: Capture execution times, agent outputs, and failures per node.

- Alerting System: For unhandled transitions or timeouts.

- Replay Support: Ability to re-run from checkpoints or rehydrate failed sessions.

- Agent Telemetry: Log prompts, responses, tool calls, and confidence scores.
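The monitoring item above (execution times and failures per node) can be approximated with a decorator around each node function. This is a minimal sketch, assuming nodes are plain callables that take a state dictionary; `instrumented`, `TELEMETRY`, and the two sample nodes are hypothetical, and a real deployment would ship these records to a logging or metrics backend instead of a list.

```python
import time
from functools import wraps
from typing import Callable, Dict, List

TELEMETRY: List[Dict] = []  # stand-in for a log or metrics sink


def instrumented(node_name: str) -> Callable:
    """Wrap a node so each call records its duration and outcome."""
    def decorator(fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        @wraps(fn)
        def wrapper(state: dict) -> dict:
            started = time.perf_counter()
            record = {"node": node_name, "ok": True, "error": None}
            try:
                return fn(state)
            except Exception as exc:
                record["ok"] = False
                record["error"] = repr(exc)
                raise  # the failure still propagates to the caller
            finally:
                record["seconds"] = time.perf_counter() - started
                TELEMETRY.append(record)
        return wrapper
    return decorator


@instrumented("intake")
def intake(state: dict) -> dict:
    return {**state, "parsed": True}


@instrumented("verify")
def verify(state: dict) -> dict:
    raise ValueError("policy lookup failed")
```

Recording in `finally` means failed calls are timed as well, which is usually where the interesting latency lives.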

Final Thoughts

LangGraph doesn’t just let you build workflows. It asks you to think temporally. To consider that intelligence isn’t always instantaneous. To design for agents that pause, adapt, listen, and return later—with new context, updated reasoning, and better decisions.

In the enterprise, where “done in five seconds” is often meaningless, this mindset isn’t a luxury. It’s survival.

And while LangGraph isn’t everything for everyone, it is a strong fit for one thing: orchestrating thoughtful, multi-agent, time-aware workflows.