Key Takeaways

- LLMOps is about managing behavior, not just deployments. Once models entered real workflows, the biggest challenges shifted from model versioning to prompt drift, cost control, and output reliability. LLMOps exists because language models behave more like evolving systems than static software components.

- AgentOps becomes necessary the moment models are allowed to act. As soon as LLMs can call tools, trigger workflows, or make decisions, operational risk increases sharply. AgentOps addresses failure modes that LLMOps alone cannot—missed actions, unintended side effects, and silent decision loops.

- Outputs and outcomes must be evaluated together. A well-written response does not guarantee a correct action, and a clumsy response can still lead to the right business outcome. Separating LLM evaluation from agent outcome analysis creates blind spots that enterprises cannot afford.

- The convergence of LLMOps and AgentOps is already happening—by necessity. Platforms are quietly expanding beyond their original scope, and enterprises are stitching together telemetry, governance, and evaluation across models and agents. The overlap isn’t a future roadmap item; it’s an operational reality today.

- The real shift is from operating models to operating decision systems. Teams are no longer just responsible for latency, cost, or accuracy—they’re accountable for automated decisions and their downstream impact. This changes what “success” in AI operations looks like and forces harder conversations about autonomy, control, and accountability.

There was a time—not that long ago—when deploying a large language model into production felt like a novelty. You wrapped an API call in a service, added a prompt template, maybe logged a few responses, and called it “AI-enabled”. That phase didn’t last. The moment these models started touching real workflows—procurement approvals, customer escalations, financial reconciliations—the cracks appeared. Latency spikes. Prompt drift. Unexpected outputs. Quiet failures that only surfaced weeks later when someone asked, “Why did this decision happen?”

Out of that mess, LLMOps emerged as a discipline. At first, it was not a tool or a vendor category but a set of practices born out of pain. AgentOps followed almost immediately, because once you stop treating models as passive responders and start letting them act, coordinate, and decide, the operational surface area expands again.

What’s intriguing now isn’t that both exist. It’s that they are starting to overlap, sometimes uncomfortably, sometimes productively. The line between “managing models” and “managing agents” is blurring, and most enterprises are feeling it before they can clearly articulate it.

LLMOps: More Than Model Deployment, Less Than Autonomy

LLMOps borrowed its early language from MLOps, but it never fit cleanly. Traditional MLOps assumed relatively static models trained offline, versioned carefully, and deployed behind deterministic APIs. LLMs behave differently:

- Prompts evolve faster than code.

- Behavior shifts without retraining.

- Outputs are probabilistic and open-ended, not predictions in the classic sense.

So LLMOps teams started focusing on a different set of concerns:

- Prompt lifecycle management (versioning, rollbacks, A/B testing)

- Output evaluation using heuristics, classifiers, or human-in-the-loop review

- Cost governance tied to token usage, not CPU cycles

- Safety filters that are contextual, not binary
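Cost governance tied to token usage can be as simple as pricing every call by its input and output tokens and checking the result against a per-team budget. The sketch below is a minimal illustration under stated assumptions: the prices, team names, and the `TokenBudget` class are hypothetical, and real per-token prices differ by provider and model.

```python
from dataclasses import dataclass, field

# Hypothetical prices per 1,000 tokens; substitute your provider's actual rates.
PRICE_PER_1K = {"input": 0.003, "output": 0.015}


@dataclass
class TokenBudget:
    """Hypothetical per-team spend tracker, charged by tokens rather than CPU time."""

    limits: dict  # team name -> budget for the period
    spent: dict = field(default_factory=dict)

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        # Input and output tokens are usually priced differently.
        return (input_tokens / 1000) * PRICE_PER_1K["input"] + (
            output_tokens / 1000
        ) * PRICE_PER_1K["output"]

    def charge(self, team: str, input_tokens: int, output_tokens: int) -> bool:
        """Record the call's cost; return False (recording nothing) if it would exceed the limit."""
        amount = self.cost(input_tokens, output_tokens)
        current = self.spent.get(team, 0.0)
        if current + amount > self.limits.get(team, 0.0):
            return False  # caller decides: block, switch to a cheaper model, or escalate
        self.spent[team] = current + amount
        return True


budget = TokenBudget(limits={"procurement": 1.00})
print(budget.charge("procurement", input_tokens=2000, output_tokens=500))  # True
print(round(budget.spent["procurement"], 4))  # 0.0135
```

Teams with no configured limit get a budget of zero here, which fails closed; whether that is the right default is a policy choice, not a technical one.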

In one financial services deployment I worked on, the model itself was stable for months. What changed weekly, and sometimes daily during regulatory review cycles, were the prompts. The operational burden wasn’t model drift; it was instruction drift. LLMOps tooling that couldn’t track prompt evolution alongside outcomes quickly became useless.
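Tracking prompt evolution alongside outcomes mostly comes down to treating each prompt template as a versioned artifact and tagging every result with the version that produced it. The in-memory `PromptRegistry` below is a hypothetical sketch of that idea (versioning, rollback, and per-version success rates), not any particular tool's API; a production setup would persist this state and would likely route traffic between versions for A/B tests.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-memory registry: versioned templates, rollback, outcomes per version."""

    versions: dict = field(default_factory=lambda: defaultdict(list))
    active: dict = field(default_factory=dict)
    outcomes: dict = field(default_factory=lambda: defaultdict(list))

    def publish(self, name: str, template: str) -> int:
        """Store a new template and make it active; versions are numbered from 1."""
        self.versions[name].append(template)
        self.active[name] = len(self.versions[name])
        return self.active[name]

    def rollback(self, name: str, version: int) -> None:
        if not 1 <= version <= len(self.versions[name]):
            raise ValueError(f"unknown version {version} for prompt {name!r}")
        self.active[name] = version

    def render(self, name: str, **kwargs) -> tuple:
        """Return (version, filled prompt) so callers can log which version ran."""
        version = self.active[name]
        return version, self.versions[name][version - 1].format(**kwargs)

    def record_outcome(self, name: str, version: int, success: bool) -> None:
        self.outcomes[(name, version)].append(success)

    def success_rate(self, name: str, version: int) -> Optional[float]:
        results = self.outcomes[(name, version)]
        return sum(results) / len(results) if results else None


registry = PromptRegistry()
registry.publish("review_summary", "Summarize {doc} for a compliance reviewer.")
registry.publish("review_summary", "Summarize {doc}. Flag any regulatory exceptions.")
version, prompt = registry.render("review_summary", doc="case 17")
registry.record_outcome("review_summary", version, success=False)
registry.rollback("review_summary", 1)  # revert after the new version underperforms
```

The key design choice is that `render` returns the version number along with the prompt, so every downstream outcome can be attributed to the exact instructions that produced it.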

Still, even mature LLMOps setups assumed something important: the model was responding, not acting. It generated text, recommendations, and summaries. Decisions remained elsewhere.

That assumption doesn’t hold anymore.


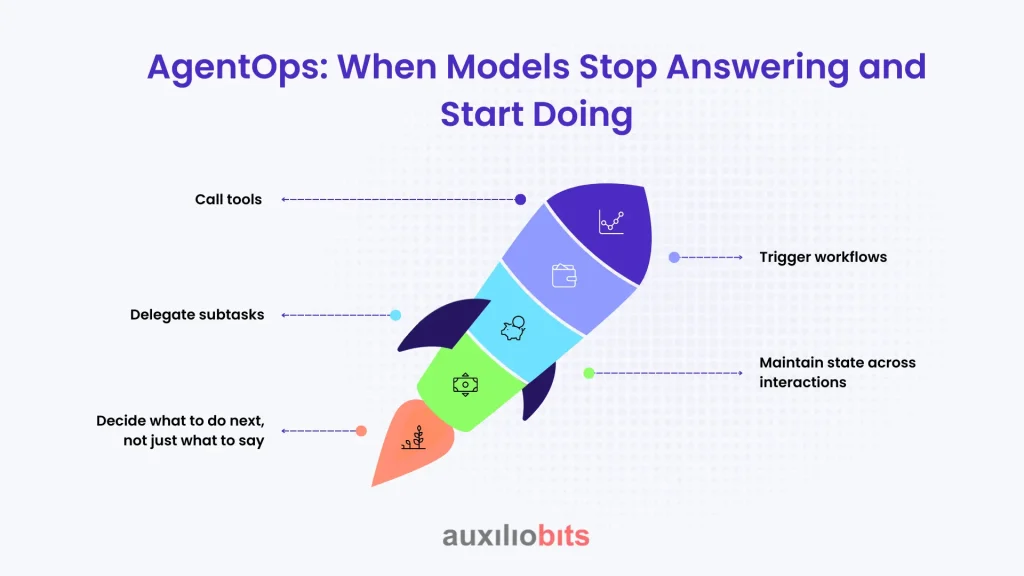

AgentOps: When Models Stop Answering and Start Doing

AgentOps shows up the moment an LLM is allowed to:

- Call tools

- Trigger workflows

- Delegate subtasks

- Maintain state across interactions

- Decide what to do next, not just what to say

This isn’t theoretical. Enterprises are already deploying agents that:

- Reconcile invoices by querying ERP systems

- Negotiate delivery timelines with suppliers via email

- Route support tickets based on inferred intent and urgency

- Monitor KPIs and initiate corrective actions
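Mechanically, the capabilities listed earlier (calling tools, keeping state, deciding what to do next) reduce to a decide-act loop. The sketch below is a minimal, framework-free illustration under stated assumptions: `policy` stands in for the LLM, `lookup_invoice` is a hypothetical stub for an ERP query, and the step limit is an arbitrary guard against runaway loops.

```python
from typing import Any, Callable

# A decision is ("call", tool_name, kwargs) or ("finish", result).
Policy = Callable[[list], tuple]


def run_agent(policy: Policy, tools: dict, max_steps: int = 10) -> dict:
    """Decide-act loop: the policy reads the history (the agent's state) and picks the next step."""
    history: list = []
    for step in range(max_steps):
        decision = policy(history)
        if decision[0] == "finish":
            return {"status": "done", "result": decision[1], "steps": step, "history": history}
        _, tool_name, kwargs = decision
        try:
            observation: Any = tools[tool_name](**kwargs)
            ok = True
        except Exception as exc:  # unknown tool or tool error: record it so the policy can react
            observation, ok = repr(exc), False
        history.append({"tool": tool_name, "args": kwargs, "ok": ok, "observation": observation})
    # Hitting the limit is itself an operational signal worth alerting on.
    return {"status": "step_limit", "result": None, "steps": max_steps, "history": history}


def lookup_invoice(invoice_id: str) -> dict:
    """Hypothetical stub standing in for an ERP query."""
    return {"invoice_id": invoice_id, "amount": 1200.0}


def toy_policy(history: list) -> tuple:
    """Stands in for the LLM: look the invoice up once, then finish with its amount."""
    if not history:
        return ("call", "lookup_invoice", {"invoice_id": "INV-42"})
    return ("finish", history[-1]["observation"]["amount"])


outcome = run_agent(toy_policy, {"lookup_invoice": lookup_invoice})
print(outcome["status"], outcome["result"], outcome["steps"])  # done 1200.0 1
```

Everything operationally interesting lives in `history`: it is both the agent's state and the raw material for the tracing questions below.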

The operational challenges here are different and often sharper.

An agent failing isn’t just a bad response—it’s a missed payment, a duplicated order, or an email sent to the wrong stakeholder. Observability now needs to answer questions like:

- Why did the agent choose this action over another?

- Which tool call failed, and what did the agent infer from that failure?

- Did the agent loop, stall, or silently degrade?
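Those questions are only answerable if each step is recorded with the action chosen, its arguments, the model's stated rationale, and whether the tool call succeeded. The sketch below assumes such a trace format (the `TraceStep` fields are hypothetical, not a standard schema) and flags two of the failure modes above: consecutive identical actions that suggest a loop, and failed tool calls along with what the agent did next.

```python
from dataclasses import dataclass


@dataclass
class TraceStep:
    action: str     # tool the agent chose
    args: dict      # arguments it passed
    rationale: str  # the model's stated reason for the choice
    tool_ok: bool   # whether the tool call succeeded


def diagnose(trace: list, loop_threshold: int = 3) -> list:
    findings = []
    # Loop: the same action with identical arguments repeated back to back.
    run = 1
    for prev, cur in zip(trace, trace[1:]):
        run = run + 1 if (prev.action, prev.args) == (cur.action, cur.args) else 1
        if run == loop_threshold:
            findings.append(f"loop: {cur.action} repeated {run}x with identical args")
    # Failed tool calls: show what the agent inferred, i.e. what it chose next and why.
    for i, step in enumerate(trace):
        if step.tool_ok:
            continue
        if i + 1 < len(trace):
            nxt = trace[i + 1]
            findings.append(
                f"step {i}: {step.action} failed; agent then chose {nxt.action!r} because: {nxt.rationale}"
            )
        else:
            findings.append(f"step {i}: {step.action} failed and the run ended there")
    return findings


trace = [
    TraceStep("query_erp", {"id": "INV-42"}, "need invoice details", False),
    TraceStep("check_status", {"id": "INV-42"}, "ERP unavailable, check payment status", True),
    TraceStep("check_status", {"id": "INV-42"}, "status still pending", True),
    TraceStep("check_status", {"id": "INV-42"}, "status still pending", True),
]
for finding in diagnose(trace):
    print(finding)
```

A stated rationale is what the model says about its choice, not a guaranteed explanation of it, so findings like these are leads for investigation rather than root causes.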

AgentOps borrows concepts from distributed systems, workflow orchestration, and even SRE practices. Think runbooks, circuit breakers, and escalation policies—but applied to reasoning entities.
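As one example of carrying those SRE patterns over, a circuit breaker can sit between the agent and a flaky tool: after repeated failures it blocks further calls for a cool-down period and hands the task to an escalation path instead of letting the agent retry indefinitely. The class, thresholds, and `escalate` callback below are hypothetical; this is a simplified version of the standard circuit-breaker pattern, not a specific library's implementation.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpen(Exception):
    """Raised while the breaker is open: escalate instead of retrying."""


class ToolCircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock  # injectable so tests can control time
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, tool: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                name = getattr(tool, "__name__", repr(tool))
                raise CircuitOpen(f"{name} disabled after repeated failures")
            # Half-open: allow a trial call; one more failure reopens the breaker.
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = tool(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result


def call_with_escalation(breaker: ToolCircuitBreaker, tool: Callable[..., Any],
                         escalate: Callable[[str], Any], *args: Any, **kwargs: Any) -> Any:
    """Hand off to a human or runbook when the breaker is open."""
    try:
        return breaker.call(tool, *args, **kwargs)
    except CircuitOpen as exc:
        return escalate(str(exc))


# Usage with a fake clock and a simulated ERP outage.
now = [0.0]
breaker = ToolCircuitBreaker(failure_threshold=2, reset_after_s=30, clock=lambda: now[0])
escalated = []


def flaky_erp() -> dict:
    raise TimeoutError("ERP timeout")


def escalate(msg: str) -> str:
    escalated.append(msg)  # in practice: page on-call or open a ticket
    return "escalated"


for _ in range(2):
    try:
        breaker.call(flaky_erp)
    except TimeoutError:
        pass
result = call_with_escalation(breaker, flaky_erp, escalate)
```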

And here’s where things get messy: most agent stacks are built on top of LLMs, which means LLMOps and AgentOps are already intertwined, whether teams like it or not.

Shared Concerns, Different Lenses

There are areas where LLMOps and AgentOps are effectively solving the same problem, just from different angles.

Evaluation

- LLMOps evaluates outputs: accuracy, relevance, and safety.

- AgentOps evaluates outcomes: task success, business impact, and side effects.

In practice, you need both. An agent can produce “good” text and still make a poor decision; conversely, it can phrase its response awkwardly and still make the correct decision. Evaluating either side in isolation leads to false confidence.
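One way to keep the two evaluations together is to score every run on both axes and look at the quadrant it lands in. In the sketch below, `output_score` is assumed to come from whatever output evaluator is already in place (rubric, classifier, or human review) and `outcome_ok` from checking the business result; the 0.7 threshold is arbitrary and the quadrant names are illustrative, not a standard taxonomy.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class RunEvaluation:
    run_id: str
    output_score: float  # 0..1 from an output evaluator (LLMOps view)
    outcome_ok: bool     # task succeeded without harmful side effects (AgentOps view)


def quadrant(ev: RunEvaluation, threshold: float = 0.7) -> str:
    good_text = ev.output_score >= threshold
    if good_text and ev.outcome_ok:
        return "healthy"
    if good_text:
        return "false_confidence"  # fluent output, wrong action: invisible to output-only evals
    if ev.outcome_ok:
        return "rough_but_correct"  # awkward wording, right action
    return "failed"


runs = [
    RunEvaluation("r1", 0.92, True),
    RunEvaluation("r2", 0.88, False),
    RunEvaluation("r3", 0.41, True),
]
print(Counter(quadrant(r) for r in runs))
```

The "false_confidence" bucket is the one an output-only dashboard would report as a success.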

Observability

- LLMOps logs prompts, responses, and token counts.

- AgentOps traces decision paths, tool calls, and state transitions.

Without correlating these, root cause analysis becomes guesswork. Teams have chased model hallucinations when the real issue was a stale tool response that nudged the agent off course.
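Correlation usually means both layers share an identifier. The sketch below assumes every LLM call and tool call is logged with a common `trace_id`, and that tools report how fresh their data is (`data_as_of`, a hypothetical field). With those two assumptions, the stale-tool-response case becomes a simple join instead of guesswork; the record types and sample data are illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class LLMCall:
    trace_id: str
    ts: datetime
    prompt_version: int
    total_tokens: int


@dataclass
class ToolCall:
    trace_id: str
    ts: datetime
    tool: str
    data_as_of: datetime  # assumed: the tool reports when its data was last refreshed


def stale_inputs(llm_calls, tool_calls, max_age=timedelta(hours=1)):
    """Join on trace_id; flag tool results the model saw that were older than max_age."""
    tools_by_trace = defaultdict(list)
    for t in tool_calls:
        tools_by_trace[t.trace_id].append(t)
    findings = []
    for call in llm_calls:
        for t in tools_by_trace[call.trace_id]:
            age = call.ts - t.data_as_of
            if t.ts <= call.ts and age > max_age:  # only tool results available before the call
                findings.append((call.trace_id, t.tool, age))
    return findings


noon = datetime(2024, 1, 1, 12, 0)
tool_calls = [
    ToolCall("tr-1", noon, "inventory_lookup", data_as_of=noon - timedelta(hours=6)),
    ToolCall("tr-2", noon, "inventory_lookup", data_as_of=noon - timedelta(minutes=5)),
]
llm_calls = [
    LLMCall("tr-1", noon + timedelta(minutes=1), prompt_version=3, total_tokens=850),
    LLMCall("tr-2", noon + timedelta(minutes=1), prompt_version=3, total_tokens=790),
]
print(stale_inputs(llm_calls, tool_calls))
```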

Governance

- LLMOps focuses on data usage, safety filters, and compliance.

- AgentOps focuses on authority boundaries and escalation.

But governance breaks when an agent’s autonomy exceeds the assumptions baked into prompt-level safeguards. A “safe” response can still trigger an unsafe action.
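Because of that gap, authority boundaries are usually enforced on the action itself: after the model has decided, before anything executes. The sketch below is a hypothetical policy check; the action names, the amount ceiling, and the allow/escalate/deny outcomes are assumptions for illustration. It deliberately never inspects the model's text, so a response that passed every prompt-level filter is still gated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Authority:
    allowed_actions: frozenset  # what this agent may do at all
    max_amount: float           # ceiling above which a human must approve


def authorize(action: str, amount: float, authority: Authority) -> str:
    """Gate the proposed action itself; the model's wording is deliberately not an input."""
    if action not in authority.allowed_actions:
        return "deny"
    if amount > authority.max_amount:
        return "escalate"  # route to a human approver per the escalation policy
    return "allow"


payables_agent = Authority(frozenset({"schedule_payment", "send_email"}), max_amount=5000.0)
for action, amount in [("schedule_payment", 1200.0), ("schedule_payment", 25000.0), ("delete_vendor", 0.0)]:
    print(action, amount, authorize(action, amount, payables_agent))
```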

A Subtle Shift in How We Should Think About “Ops”

The deeper shift here isn’t technical—it’s conceptual. We’re moving from operating models to operating decision systems.

Models generate text. Agents generate outcomes. Ops teams are now responsible for both, whether they signed up for it or not.

That means success isn’t just:

- Fewer hallucinations

- Lower latency

- Better benchmarks

It’s:

- Fewer operational surprises

- Faster recovery when things go wrong

- Clear accountability for automated decisions

The convergence of LLMOps and AgentOps isn’t a trend. It’s a response to reality. Enterprises are already running systems where language, reasoning, and action are inseparable. The operational practices are simply catching up.

Some teams will wait for a “unified platform.” Others will assemble their own stack, imperfectly, and learn faster. History suggests the second group usually wins—at least for a while.

And yes, the tooling will mature. Vendors will rebrand. Frameworks will stabilize. But the real work will still be human: deciding how much autonomy to allow, where to draw boundaries, and how to live with systems that don’t behave like traditional software.

If that sounds uncomfortable, it should. That discomfort is the signal that we’ve crossed from experimentation into operations—and there’s no going back.