Key Takeaways

- LangChain excels at rapid prototyping and experimentation, making it ideal for small teams or early-stage projects where speed matters more than operational rigor.

- LangGraph provides structured orchestration with explicit state management, error handling, and parallelism—critical for production-level, multi-agent workflows.

- Workflow complexity determines framework choice: linear, low-risk pipelines suit LangChain; stateful, high-stakes, or multi-agent flows favor LangGraph.

- A phased approach often works best: start with LangChain for experimentation, then migrate to LangGraph as reliability, traceability, and resilience become priorities.

- Enterprise adoption requires trade-off evaluation: consider observability, compliance, maintainability, team size, and integration needs before committing to either framework.

Orchestration has become the quiet backbone of enterprise AI projects. You can build the smartest large language model prompt or the most carefully engineered retrieval pipeline, but if you can’t sequence, monitor, and adapt the interactions between components, you end up with brittle demos instead of durable systems. Two frameworks—LangChain and LangGraph—now dominate conversations around orchestrating agentic workflows. On paper, both promise similar things: structure around LLMs, integration hooks, and composability. But in practice, they differ in philosophy, technical trade-offs, and fit for specific enterprise needs.

This is not a binary “which is better” argument. Instead, the real question is: when does LangChain make sense, and when is LangGraph the smarter bet?

The Roots: How These Frameworks Emerged

LangChain came first, and it spread quickly—too quickly, some would say. When it launched in 2022, developers finally had a framework that abstracted away the painful plumbing around LLM prompts, vector databases, and retrieval strategies. Enterprises latched on because it looked like a standardized way to move beyond hacky notebooks.

But LangChain’s growth created a paradox. It gave everyone tools, yet it lacked a strong opinion on how to structure multi-step, stateful processes. Developers often joked that LangChain was a Swiss army knife that could open a bottle of wine but might also stab your hand if you weren’t careful.

LangGraph entered later, bringing with it the rigor of graph-based state machines. Instead of chaining arbitrary functions in linear or branching flows, it insisted on nodes, edges, and explicit state transitions. In other words, LangGraph treated LLM-powered agents not as scripts to be glued together but as actors in a graph with memory, retries, and failure handling.

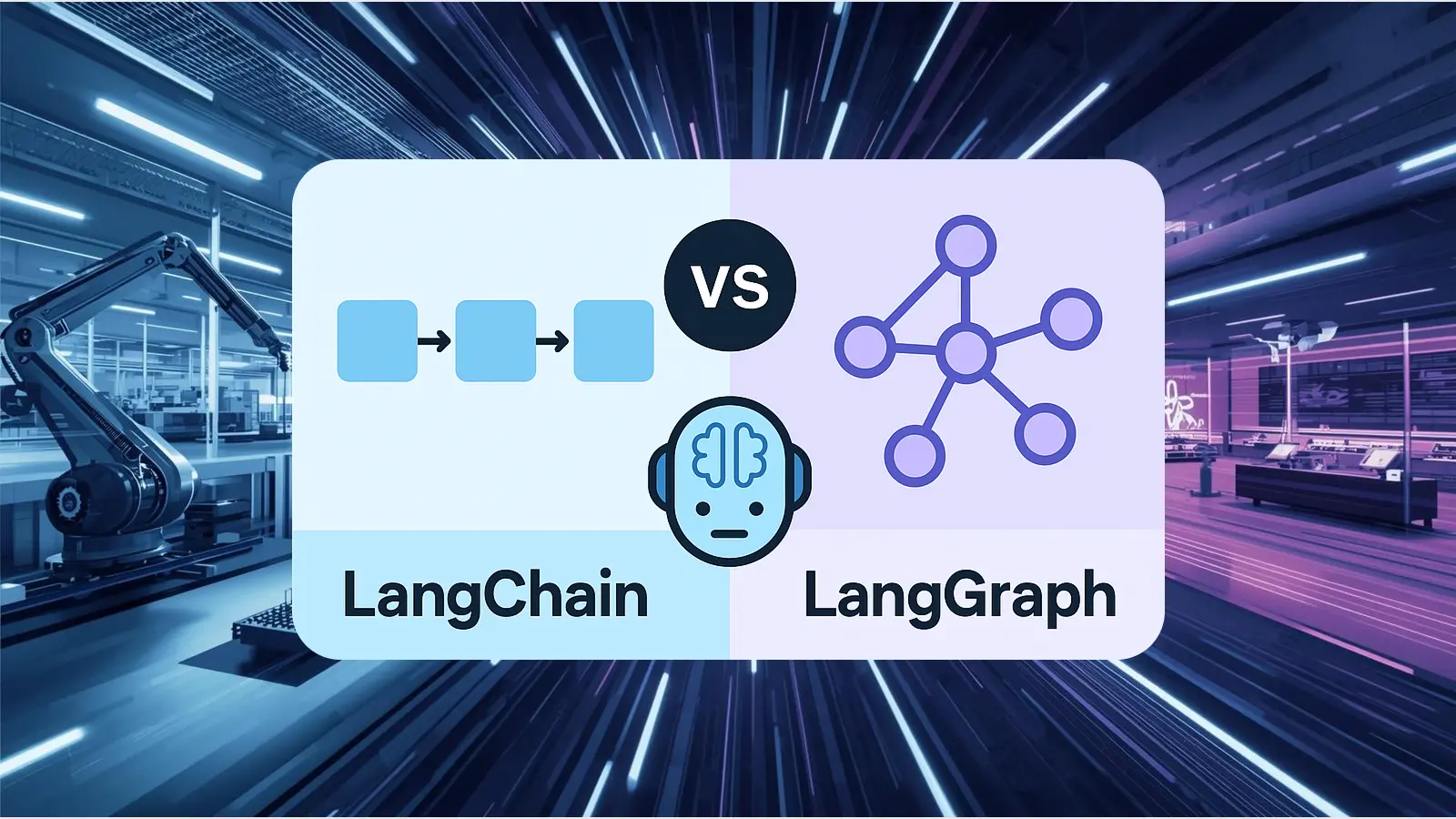

Linear Chains vs Explicit Graphs

Think of LangChain as Lego bricks you can snap together. You get modularity, plenty of third-party integrations, and the freedom to try new structures quickly. But as any parent who’s stepped barefoot on a pile of Legos knows, flexibility without discipline creates a mess. LangChain doesn’t stop you from building a fragile Rube Goldberg machine of prompts.

LangGraph, by contrast, borrows from systems engineering. It doesn’t just let you “chain”; it forces you to declare nodes (units of work), edges (transitions between them), and a shared state that every node reads and updates, so workflows resemble a state machine. That structure pays off when you’re running agents in production. Agents get stuck? You can see exactly which node failed and retry from there. Need predictable control flow? The graph makes every allowed path explicit, even though the model output inside a node can still vary.
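To make the nodes-and-edges idea concrete, here is a minimal sketch in plain Python. It is not LangGraph's API; the names `NODES`, `EDGES`, and `run_graph` are hypothetical, and the keyword check in `classify` stands in for an LLM call.

```python
from typing import Callable, Dict, Optional

State = Dict[str, object]
Node = Callable[[State], State]

def classify(state: State) -> State:
    # Stand-in for an LLM call: route on a keyword.
    text = str(state["query"]).lower()
    return {**state, "intent": "refund" if "refund" in text else "general"}

def handle_refund(state: State) -> State:
    return {**state, "answer": "Routing to refund workflow."}

def handle_general(state: State) -> State:
    return {**state, "answer": "Here is a general answer."}

# Nodes are named units of work that take the state and return a new state.
NODES: Dict[str, Node] = {
    "classify": classify,
    "refund": handle_refund,
    "general": handle_general,
}

# Each edge function inspects the state and names the next node (None = stop).
EDGES: Dict[str, Callable[[State], Optional[str]]] = {
    "classify": lambda s: str(s["intent"]),
    "refund": lambda s: None,
    "general": lambda s: None,
}

def run_graph(entry: str, state: State) -> State:
    current: Optional[str] = entry
    path = []
    while current is not None:
        path.append(current)              # record which node ran
        state = NODES[current](state)     # node updates the state
        current = EDGES[current](state)   # edge picks the next node
    return {**state, "path": path}

result = run_graph("classify", {"query": "I want a refund"})
print(result["path"], result["answer"])
```

Because every transition goes through `EDGES`, the set of possible paths is visible in one place, which is the property the graph approach is trading flexibility for.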

But here’s the rub: LangGraph requires more upfront design thinking. It’s like Kubernetes compared to Docker Compose. Great for scale and resilience, but not the friendliest when you’re hacking a prototype over a weekend.

Where LangChain Fits Best

- Rapid prototyping: A data science team at a fintech firm used LangChain to quickly test retrieval-augmented generation (RAG) for customer support. The goal wasn’t immediate production—it was to prove feasibility. LangChain’s ready-made connectors to Pinecone and FAISS shaved weeks off the proof-of-concept timeline.

- Simple orchestrations: If your workflow is “take query → search embeddings → generate answer,” LangChain is perfectly adequate. Adding LangGraph here would be over-engineering.

- Integration playgrounds: Because LangChain’s library of integrations is massive, it’s often the fastest way to experiment with multiple tools (e.g., switching from OpenAI embeddings to Cohere without rewriting everything).
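The “take query → search embeddings → generate answer” flow is simple enough to sketch as three composed functions. This is an illustration in plain Python, not LangChain code: `embed` is a toy bag-of-words stand-in for a real embedding model, `generate` is a placeholder for the LLM call, and `DOCS` is made-up sample data.

```python
import math
from collections import Counter
from typing import List

DOCS = [
    "Refunds are processed within five business days.",
    "Shipping is free on orders over fifty dollars.",
]

def embed(text: str) -> Counter:
    # Toy embedding: word counts. A real pipeline would call an embedding model.
    return Counter(text.lower().replace(".", "").replace("?", "").split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def search(query: str, docs: List[str]) -> str:
    # Return the document most similar to the query.
    q = embed(query)
    return max(docs, key=lambda d: cosine(q, embed(d)))

def generate(query: str, context: str) -> str:
    # Placeholder for the LLM call that would write the final answer.
    return f"Q: {query}\nContext: {context}"

def answer(query: str) -> str:
    # The whole workflow is one straight line: search, then generate.
    return generate(query, search(query, DOCS))

print(answer("How long do refunds take?"))
```

With no branching, no shared state, and no recovery logic, there is nothing here for a graph to organize, which is why a chain is enough.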

Where LangGraph Excels

- Complex, stateful agent teams: A logistics company had autonomous agents coordinating between inventory, shipping schedules, and customer updates. Failures weren’t rare. With LangGraph, they could visualize the graph, isolate failures, and re-run just the failed node. That saved operational headaches.

- Enterprise-grade monitoring: Because every step is a named node with an explicit state transition, LangGraph makes it easier to feed per-node metrics and traces into observability pipelines (e.g., Prometheus or custom dashboards). In regulated industries like healthcare, being able to trace every state transition is critical.

- Recoverability and resilience: In scenarios where “try again from the top” is unacceptable—like financial transaction reconciliation—LangGraph’s checkpointing is worth its weight in gold.
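The idea behind re-running only the failed node can be sketched with a checkpoint store that saves state after each successful step. This is a simplified illustration, not LangGraph's checkpointer; `run_with_checkpoints`, the step names, and the in-memory `checkpoints` dict are all assumptions made for the example.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, object]
Step = Tuple[str, Callable[[State], State]]

def run_with_checkpoints(steps: List[Step], state: State, checkpoints: Dict[str, State]) -> State:
    for name, fn in steps:
        if name in checkpoints:
            # Finished in an earlier run: reuse the saved state, skip the work.
            state = checkpoints[name]
            continue
        state = fn(state)
        checkpoints[name] = dict(state)  # save after each successful step
    return state

calls = {"load": 0, "match": 0}
flaky = {"fail_once": True}

def load_ledger(state):
    calls["load"] += 1
    return {**state, "ledger": [100, 250]}

def match_payments(state):
    calls["match"] += 1
    if flaky["fail_once"]:
        flaky["fail_once"] = False
        raise RuntimeError("payment service timed out")
    return {**state, "total": sum(state["ledger"])}

steps = [("load_ledger", load_ledger), ("match_payments", match_payments)]
checkpoints: Dict[str, State] = {}

try:
    run_with_checkpoints(steps, {}, checkpoints)
except RuntimeError:
    pass  # first attempt fails at match_payments

# Second attempt resumes after load_ledger instead of starting from the top.
final = run_with_checkpoints(steps, {}, checkpoints)
print(final["total"], calls)
```

In the second run `load_ledger` is never called again; only the step that failed is retried. A production setup would persist checkpoints to durable storage rather than a dict.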

Technical Nuances Often Missed

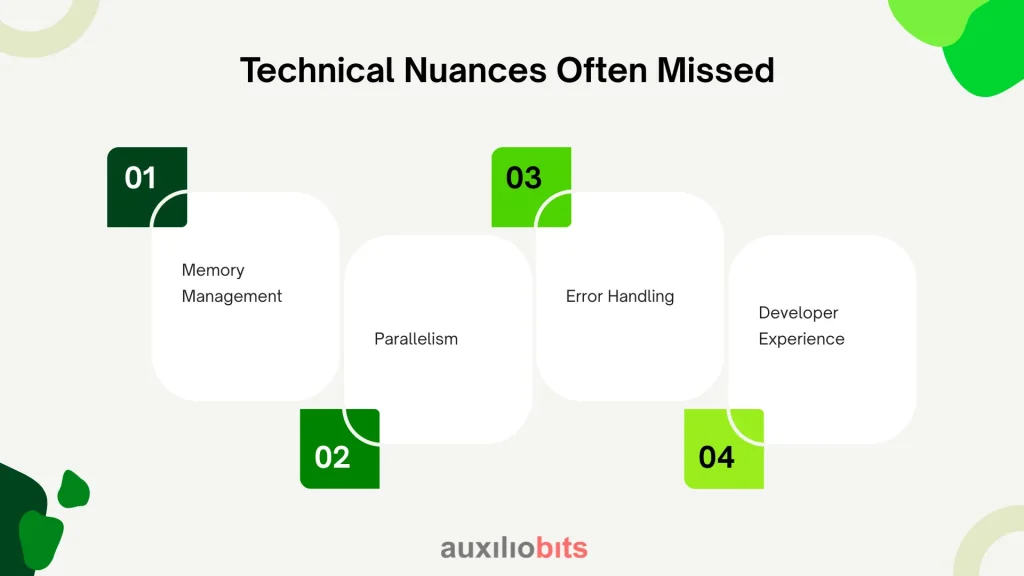

1. Memory Management

- LangChain offers a variety of memory objects, from simple conversation buffers to vector-backed recall. But they often act like global variables—handy but chaotic.

- LangGraph forces memory to live as a state in the graph. That means no hidden side effects. It’s stricter, but debugging becomes saner.
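One way to picture memory living in the state is an immutable state object that each step copies and extends. The sketch below uses a plain dataclass; `ChatState` and `add_turn` are hypothetical names, and neither framework is involved.

```python
from dataclasses import dataclass, replace
from typing import Tuple

@dataclass(frozen=True)
class ChatState:
    history: Tuple[str, ...] = ()

def add_turn(state: ChatState, message: str) -> ChatState:
    # Returns a new state; the caller's copy is never changed behind its back.
    return replace(state, history=state.history + (message,))

s0 = ChatState()
s1 = add_turn(s0, "user: hi")
s2 = add_turn(s1, "assistant: hello")

print(s0.history)  # still empty: earlier states are untouched
print(s2.history)
```

Since every change produces a new value, you can inspect the state before and after any step, which is what makes debugging saner than chasing a shared mutable buffer.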

2. Parallelism

- LangChain supports async calls and can run independent steps side by side, but concurrency gets awkward to express once branches need to share state or rejoin conditionally.

- LangGraph, being graph-based, models parallel branches naturally. If two agents can run independently (say, one checking compliance rules and another retrieving contract clauses), LangGraph handles it cleanly.
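The compliance and contract-clause example is a fan-out followed by a fan-in. A rough sketch with the standard library's `asyncio` shows the shape; the function names are hypothetical and the short sleeps stand in for LLM or API calls.

```python
import asyncio
from typing import Dict

async def check_compliance(contract_id: str) -> Dict[str, object]:
    await asyncio.sleep(0.01)  # stand-in for an LLM or API call
    return {"compliant": True}

async def retrieve_clauses(contract_id: str) -> Dict[str, object]:
    await asyncio.sleep(0.01)  # stand-in for a retrieval call
    return {"clauses": ["termination", "liability"]}

async def review_contract(contract_id: str) -> Dict[str, object]:
    # Fan out: both branches run concurrently. Fan in: merge their outputs.
    compliance, clauses = await asyncio.gather(
        check_compliance(contract_id),
        retrieve_clauses(contract_id),
    )
    return {"contract_id": contract_id, **compliance, **clauses}

merged = asyncio.run(review_contract("C-42"))
print(merged)
```

In a graph framework the same pattern is expressed as two edges leaving one node and converging on another, so the parallelism is visible in the workflow definition rather than buried in a function body.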

3. Error Handling

- LangChain error handling feels like duct tape: it relies on wrappers around individual functions or on manual retries.

- LangGraph bakes in retries, backoffs, and fallback nodes at the orchestration level. That distinction matters once you leave the demo stage.
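For readers who have not built this by hand, here is roughly what retries, backoff, and a fallback look like as a reusable wrapper. It is a generic sketch, not either framework's API; `call_with_retry` and its parameters are assumptions, and the `sleep` argument is injectable so the example runs instantly.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")

def call_with_retry(
    primary: Callable[[], T],
    fallback: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.01,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    for attempt in range(max_attempts):
        try:
            return primary()
        except Exception:
            if attempt < max_attempts - 1:
                sleep(base_delay * (2 ** attempt))  # exponential backoff
    # Every attempt failed: hand over to the fallback path.
    return fallback()

attempts = {"n": 0}

def flaky_model() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TimeoutError("model timed out")
    return "primary answer"

def always_down() -> str:
    raise ConnectionError("service unavailable")

delays: List[float] = []  # records requested waits instead of sleeping
recovered = call_with_retry(flaky_model, lambda: "fallback answer", sleep=delays.append)
degraded = call_with_retry(always_down, lambda: "fallback answer", sleep=delays.append)
print(recovered, degraded)
```

The difference the article points at is where this logic lives: wrapped around each call by hand, or declared once as a policy on the workflow itself.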

4. Developer Experience

- LangChain has a larger community and more tutorials, making onboarding easier.

- LangGraph, while younger, appeals to engineers who prefer deterministic control and dislike the “black box” feel of chains.

The Subtle Trade-Offs Nobody Mentions

- Cost of Overhead: LangGraph’s extra rigor introduces overhead. For a startup with one ML engineer, spending days defining graph states feels bureaucratic. LangChain’s chaos is sometimes a feature.

- Maturity vs. Innovation: LangChain’s integrations are more mature, but LangGraph is innovating faster in orchestration-specific areas. Expect LangGraph to catch up on integrations, but don’t expect LangChain to pivot into deep orchestration.

- Learning Curve: LangChain’s learning curve is gentle at first, then suddenly steep when you hit advanced orchestration. LangGraph’s curve is steeper upfront but smoother later.

Switching Teams: Challenges, Learnings, and Wins

One retail company started with LangChain for a personalized shopping agent. It worked fine in development. But once deployed, agents occasionally hallucinated discounts or gave contradictory product info. Debugging in LangChain was painful: flows weren’t explicit, so engineers had to replay logs and guess where things went wrong.

They rebuilt in LangGraph. Now, each agent’s decision was a node: “Check inventory,” “Fetch current discounts,” and “Assemble response.” When a hallucination occurred, they could trace exactly where the discount logic went off. It wasn’t magic; it was just structure. Interestingly, performance didn’t improve much, but trust in the system did. And that made stakeholders more willing to scale the project.
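The tracing benefit in that story can be approximated in a few lines. The sketch below is illustrative only: the three node functions mirror the names in the anecdote, the 90% discount is a simulated bug, and `MAX_DISCOUNT_PCT` is a hypothetical business rule, not a detail from the retailer's system.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, object]

def check_inventory(state: State) -> State:
    return {**state, "in_stock": True}

def fetch_current_discounts(state: State) -> State:
    # Simulated bug: returns a discount the catalog does not offer.
    return {**state, "discount_pct": 90}

def assemble_response(state: State) -> State:
    return {**state, "reply": f"In stock, {state['discount_pct']}% off today."}

PIPELINE: List[Tuple[str, Callable[[State], State]]] = [
    ("check_inventory", check_inventory),
    ("fetch_current_discounts", fetch_current_discounts),
    ("assemble_response", assemble_response),
]

def run_traced(state: State) -> Tuple[State, List[Tuple[str, State]]]:
    trace = []
    for name, node in PIPELINE:
        state = node(state)
        trace.append((name, dict(state)))  # snapshot the state after each node
    return state, trace

MAX_DISCOUNT_PCT = 30  # hypothetical business rule

final_state, trace = run_traced({"sku": "A1"})

# Walk the trace to find the first node whose output broke the rule.
culprit = next(
    name for name, snap in trace
    if isinstance(snap.get("discount_pct"), int) and snap["discount_pct"] > MAX_DISCOUNT_PCT
)
print(culprit)
```

The first snapshot that violates the rule names the node responsible, which replaces “replay logs and guess” with a direct lookup.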

The Decision Lens: How to Choose

When weighing LangChain vs LangGraph, I find it useful to ask three blunt questions:

1. What stage are you in—exploration or production?

- Exploration → LangChain

- Production-critical → LangGraph

2. How complex are the workflows?

- Linear, low-risk → LangChain

- Multi-agent, high-stakes → LangGraph

3. What does your team value more—speed of iteration or operational reliability?

- If culture prizes moving fast, accept LangChain’s messiness.

- If culture prizes reliability, endure LangGraph’s upfront design cost.
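If it helps to see the three questions as a rule of thumb, they can be written down as a tiny function. This is a sketch of the heuristic, not a scoring model: the `Project` fields and the handling of mixed answers are my own assumptions, chosen to reflect the phased approach described in this article.

```python
from dataclasses import dataclass

@dataclass
class Project:
    production_critical: bool         # question 1: exploration or production?
    multi_agent_or_high_stakes: bool  # question 2: how complex are the workflows?
    prizes_reliability: bool          # question 3: speed or reliability?

def recommend(p: Project) -> str:
    votes = sum([p.production_critical, p.multi_agent_or_high_stakes, p.prizes_reliability])
    if votes == 0:
        return "LangChain"
    if votes == 3:
        return "LangGraph"
    # Mixed answers: assumed here to favor the phased path.
    return "Prototype in LangChain, plan a LangGraph migration"

print(recommend(Project(False, False, False)))
print(recommend(Project(True, True, True)))
print(recommend(Project(False, True, False)))
```

Real decisions will weigh the questions unevenly, so treat the output as a conversation starter rather than a verdict.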

Important Key Takeaways

Many teams treat this as a zero-sum choice. In reality, using both can be strategic: LangChain for quick iteration, LangGraph once the winning design needs hardening. Think of it as moving from Jupyter notebooks to Airflow DAGs. The former helps you think; the latter keeps the business running.

Practical Checklist for Enterprises

Before committing, evaluate against:

- Observability needs: Do you need full traceability?

- Compliance requirements: Regulated industries lean toward LangGraph.

- Team size and skill: Smaller teams benefit from LangChain’s simplicity.

- Long-term maintainability: If the system will live for years, LangGraph’s discipline pays dividends.

- Integration priority: Need a wide array of plug-ins today? LangChain is more complete.

Final Thought

The truth is, most enterprises will experiment with both—starting with LangChain because it feels familiar, then adopting LangGraph when things get messy. It’s not unlike the history of data engineering: people hacked ETL scripts until Airflow forced structure. Orchestration in AI is heading down the same road.

And maybe that’s the most important insight: the framework matters less than recognizing when to graduate from prototypes to production. LangChain and LangGraph are tools for different stages of a project. The real skill is knowing when the season has changed.