Key Takeaways

- Unlike traditional workflow engines, LangGraph enables stateful, memory-aware, multi-agent interactions that are better suited to enterprise environments with non-linear processes and context-heavy decision-making.

- LangGraph treats workflow components as autonomous actors with roles, context, and reasoning capabilities, enabling agents to adapt, recover, and communicate during execution.

- Shared memory architecture addresses real-world complexity. In distributed workflows (procurement, compliance, customer service, etc.), LangGraph’s shared memory model lets different agents read and build on each other’s state, avoiding redundant tasks and miscommunication.

- For simple, deterministic automations, LangGraph might add unnecessary complexity. Its power shines in dynamic, exception-heavy, or multi-system environments where traditional RPA or BPMN tools fall short.

- While LangGraph offers visibility and flexibility, enterprises must still enforce governance around LLM behavior, data handling, and compliance—especially when agentic systems are making semi-autonomous decisions.

In enterprise environments, complexity isn’t just an adjective—it’s the terrain. You’ve got sprawling systems, deeply entrenched human processes, legacy software duct-taped to modern platforms, and an abundance of exceptions that don’t quite fit into anyone’s “happy path” diagrams. Automation vendors have promised end-to-end orchestration for years, but much of what’s been achieved is more linear and brittle than adaptive and intelligent.

This is where LangGraph enters the picture—not as a buzzword-fueled alternative, but as a structural rethink. It’s not about stitching tasks together. It’s about enabling stateful, contextual, multi-agent collaboration that mirrors how work unfolds in the real world—non-linearly, with dependencies, interruptions, and ambiguity.

Not Another Pipeline Tool

It’s tempting to throw LangGraph in the same bucket as Airflow, Step Functions, or BPMN-based tools. But you’d be missing the point. LangGraph isn’t designed around scheduled tasks or acyclic DAGs: its graphs can contain cycles, so a workflow can loop back, retry, or revisit a step. It’s designed around conversational state transitions, memory, and agentic behaviors, which in enterprise terms translates to agents that not only act but also reason, recover, and adapt across workflows.

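Here’s the kicker: each LangGraph node is still a function under the hood, but it doesn’t behave like an isolated function call. Because every node reads from and writes to a shared, persistent state, it works more like an actor in a scene, with its own memory, role, and dialogue. That alone changes the game for enterprise use cases.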

The Real Problem: Workflows Aren’t Linear

Traditional enterprise automations tend to assume a straight line: Input → Processing → Output. But real workflows? They branch, loop, escalate, pause, and involve decisions that depend on human context.

Take this real-world example:

A multinational manufacturing company wants to automate its incident management process across plants. The process involves:

- First-level triage by site engineers.

- Escalation to a remote diagnostics team.

- Conditional routing to OEM vendors based on machine make.

- Compliance documentation that varies by country.

Trying to model this in a workflow engine like Camunda or Power Automate gets messy fast. Conditional paths explode. Exception handling becomes unreadable. And God forbid someone changes the escalation matrix—because you’re back in workflow diagram hell.

LangGraph handles this differently. It allows each “agent” in the process (engineer, diagnostics bot, compliance advisor) to function semi-independently but still communicate via a shared state machine. You model logic as nodes, yes—but the state carries memory, enabling much more natural progression, rollback, or escalation based on context.

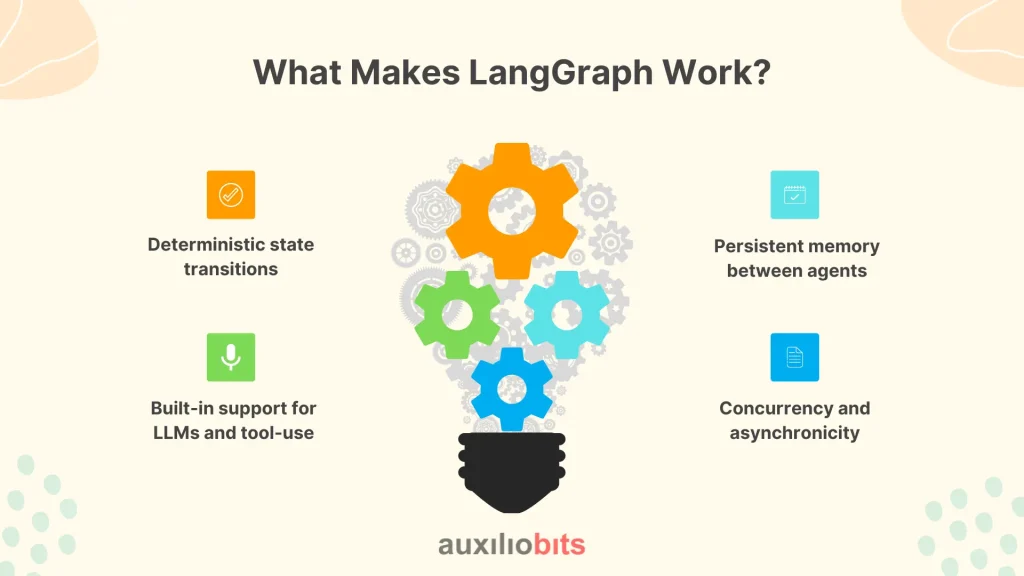
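To make the contrast with diagram-based tools concrete, here is a minimal, framework-free sketch of the incident flow above: each agent is a function that reads the shared state and returns a partial update, and small router functions choose the next node from that state. It deliberately does not use the LangGraph library (whose equivalent building blocks are `StateGraph`, `add_node`, and `add_conditional_edges`); the machine makes, vendor names, form names, and the severity threshold are all hypothetical.

```python
from typing import Callable, Dict

END = "__end__"

# Hypothetical routing tables: changing the escalation matrix means editing data.
OEM_VENDORS = {"AcmeCNC": "acme-field-service", "Globex": "globex-support"}
COMPLIANCE_FORMS = {"DE": "incident-form-de", "US": "incident-form-us"}

def site_triage(state: dict) -> dict:
    # First-level triage (hypothetical rule: severity <= 2 is fixed on site).
    return {"resolved_on_site": state["severity"] <= 2}

def remote_diagnostics(state: dict) -> dict:
    # Remote team records a finding; real logic would call diagnostic tools.
    return {"diagnosis": f"remote review of {state['machine_make']} fault"}

def oem_routing(state: dict) -> dict:
    return {"vendor": OEM_VENDORS[state["machine_make"]]}

def compliance_docs(state: dict) -> dict:
    # Country-specific paperwork, with a generic fallback.
    return {"compliance_form": COMPLIANCE_FORMS.get(state["country"], "incident-form-generic")}

NODES: Dict[str, Callable[[dict], dict]] = {
    "site_triage": site_triage,
    "remote_diagnostics": remote_diagnostics,
    "oem_routing": oem_routing,
    "compliance_docs": compliance_docs,
}

# Routers play the role of conditional edges: they read shared state and name the next node.
ROUTERS: Dict[str, Callable[[dict], str]] = {
    "site_triage": lambda s: "compliance_docs" if s["resolved_on_site"] else "remote_diagnostics",
    "remote_diagnostics": lambda s: "oem_routing" if s["machine_make"] in OEM_VENDORS else "compliance_docs",
    "oem_routing": lambda s: "compliance_docs",
    "compliance_docs": lambda s: END,
}

def run(initial: dict, start: str = "site_triage") -> dict:
    state = {**initial, "route": []}
    node = start
    while node != END:
        state.update(NODES[node](state))  # merge the node's partial update into shared state
        state["route"].append(node)
        node = ROUTERS[node](state)
    return state

incident = run({"machine_make": "AcmeCNC", "country": "DE", "severity": 4})
print(incident["route"])  # ['site_triage', 'remote_diagnostics', 'oem_routing', 'compliance_docs']
```

In this shape, changing the escalation matrix means editing `OEM_VENDORS` or a single router rather than redrawing the whole diagram.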

What Makes LangGraph Work?

A few architectural decisions behind LangGraph make it particularly well-suited for enterprise-grade intelligent automation:

Deterministic state transitions:

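Unlike agent frameworks that can feel like black boxes (LLMs hallucinating midway, anyone?), LangGraph uses a graph structure in which every possible transition is declared up front as an edge. Even when an LLM picks the branch, it picks from a known set, so control flow stays predictable and auditable. You know which node led to which, and why.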
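The auditability argument rests on one idea: the set of allowed transitions is fixed in advance, and every move is recorded with a reason. The sketch below shows that idea in plain Python, assuming a hypothetical `classify_with_llm` stub in place of a real model call; `AuditedGraph` is illustrative and is not LangGraph's own logging mechanism.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

@dataclass
class AuditedGraph:
    # Every permitted transition is declared up front, so control flow is enumerable.
    edges: Dict[str, Set[str]]
    log: List[Tuple[str, str, str]] = field(default_factory=list)

    def transition(self, src: str, dst: str, reason: str) -> str:
        # Reject anything not declared, e.g. a branch name an LLM invented.
        if dst not in self.edges.get(src, set()):
            raise ValueError(f"undeclared transition {src} -> {dst}")
        self.log.append((src, dst, reason))  # audit trail: from, to, why
        return dst

def classify_with_llm(ticket: str) -> str:
    # Hypothetical stand-in for an LLM classifier.
    return "escalate" if "outage" in ticket else "close"

graph = AuditedGraph(edges={"triage": {"escalate", "close"}, "escalate": {"close"}})
choice = classify_with_llm("plant outage on line 3")
node = graph.transition("triage", choice, reason=f"classifier returned {choice!r}")
node = graph.transition(node, "close", reason="vendor confirmed fix")
for entry in graph.log:
    print(entry)
```

The model still influences which branch is taken, but it cannot create a path that was never declared, and the log answers "which node led to which, and why".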

Persistent memory between agents:

Agents don’t have to be stateless microservices. They can “remember” what’s been discussed, attempted, or skipped. That’s a far cry from RPA scripts that rerun the same action regardless of past attempts.
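As an illustration of the difference, here is a minimal sketch of an agent that consults a record of earlier attempts before acting. `SharedMemory` and the remedy list are hypothetical; in LangGraph, the comparable capability comes from checkpointers that persist graph state per conversation thread.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical escalation ladder of remedies, tried in order.
REMEDIES = ["restart_service", "clear_cache", "escalate_to_human"]

class SharedMemory:
    """Records which actions were attempted for each case, and whether they worked."""

    def __init__(self) -> None:
        self._attempts: Dict[str, List[Tuple[str, bool]]] = defaultdict(list)

    def record(self, case_id: str, action: str, succeeded: bool) -> None:
        self._attempts[case_id].append((action, succeeded))

    def tried(self, case_id: str) -> Set[str]:
        return {action for action, _ in self._attempts[case_id]}

def next_action(memory: SharedMemory, case_id: str) -> Optional[str]:
    # A stateless script would pick REMEDIES[0] every time; this skips what was already tried.
    tried = memory.tried(case_id)
    for action in REMEDIES:
        if action not in tried:
            return action
    return None  # every remedy has been attempted

memory = SharedMemory()
memory.record("INC-42", "restart_service", succeeded=False)
print(next_action(memory, "INC-42"))  # clear_cache
```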

Built-in support for LLMs and tool-use:

LangGraph doesn’t force you to choose between deterministic logic and generative AI. You can embed an LLM-driven node next to a strict rules-based validator—giving you precision where needed and flexibility where useful.
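A minimal sketch of that pairing, assuming a hypothetical `llm_extract` stub in place of a real model: the model output is accepted only if a deterministic rules layer passes it, and anything else is routed to a human. The field names, rules, and node names are illustrative, not part of LangGraph.

```python
import json
from typing import List, Optional, Tuple

def llm_extract(email: str) -> str:
    # Hypothetical stand-in for a model call that turns free text into JSON.
    if "laptops" in email:
        return '{"item": "laptop", "quantity": 25, "unit_price": 1200}'
    return '{"item": "unknown"}'

# Deterministic schema the LLM output must satisfy (illustrative).
REQUIRED = {"item": str, "quantity": int, "unit_price": (int, float)}

def validate(raw: str) -> Tuple[Optional[dict], List[str]]:
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None, ["output is not valid JSON"]
    if not isinstance(data, dict):
        return None, ["output is not a JSON object"]
    errors = [f"missing or mistyped field: {key}"
              for key, expected in REQUIRED.items()
              if not isinstance(data.get(key), expected)]
    if not errors and data["quantity"] <= 0:
        errors.append("quantity must be positive")
    return (None, errors) if errors else (data, [])

def requisition_node(email: str) -> dict:
    order, errors = validate(llm_extract(email))
    # Only validated output flows on; everything else goes to a person.
    return {"order": order, "errors": errors,
            "next": "vendor_evaluator" if order else "human_review"}

print(requisition_node("Please order 25 laptops for the new hires")["next"])  # vendor_evaluator
```

The generative step handles messy input, while the validator keeps the hard guarantees (types, positive quantities) deterministic and testable.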

Concurrency and asynchronicity:

Need three agents to handle tasks in parallel and then converge? LangGraph can handle that. Need one agent to pause and wait for human input without blocking others? That’s native, too.
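LangGraph's own mechanisms for this are parallel branches in the graph and interrupts that pause a run for human input. The sketch below illustrates the same fan-out, fan-in, and wait-for-a-human pattern with plain `asyncio` rather than the library; the agent names and delays are hypothetical.

```python
import asyncio
from typing import Dict

async def worker(name: str, delay: float, results: Dict[str, str]) -> None:
    await asyncio.sleep(delay)  # stands in for an API or model call
    results[name] = "done"

async def approval_agent(approved: asyncio.Event, results: Dict[str, str]) -> None:
    # Suspends until a human signals approval; the event loop keeps running other agents.
    await approved.wait()
    results["manager_approval"] = "approved"

async def main() -> Dict[str, str]:
    results: Dict[str, str] = {}
    approved = asyncio.Event()
    pending = asyncio.create_task(approval_agent(approved, results))
    # Fan out three agents in parallel, then converge when all have finished.
    await asyncio.gather(
        worker("vendor_check", 0.02, results),
        worker("risk_check", 0.01, results),
        worker("budget_check", 0.03, results),
    )
    approved.set()  # simulate the manager approving, e.g. via a UI callback
    await pending
    return results

results = asyncio.run(main())
print(list(results))
```

The approval agent is waiting the whole time, yet the three checks complete first because waiting on an `asyncio.Event` yields control instead of blocking the loop.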

Let’s Get Technical

Let’s say you’re orchestrating a procurement workflow for a global enterprise. Here’s what it might look like in LangGraph:

1. Agent: Requisition Collector:

Gathers order details from internal teams. Uses a fine-tuned LLM to understand semi-structured emails or voice notes. Knows the context of previous requests.

2. Agent: Vendor Evaluator:

Checks approved vendor lists, compliance status, and pricing history. Connects to SAP or Oracle ERP but reasons through exceptions (e.g., single-source justifications).

3. Agent: Risk and Compliance Checker:

Uses both rules and LLMs to flag orders that may trigger audit concerns based on jurisdictional thresholds or historic anomalies.

4. Agent: Negotiation Assistant:

Initiates communication with vendors. Summarizes counteroffers. Can switch between automated emails and prompting a human manager for intervention.

5. Agent: Approval Coordinator:

Routes to department heads, CFOs, or region-specific controllers, depending on purchase size and risk flags. Adjusts behavior based on the company calendar (end-of-quarter crunch, anyone?).

Each agent is a node, and each node can hand off, roll back, or run in parallel. The key is that memory is shared across the graph. The negotiation assistant knows what the compliance checker flagged earlier. The approval coordinator can see past requisition patterns.
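Here is a compact sketch of that shared-memory idea for the five procurement agents. Every agent reads from and writes to one state dictionary, so the negotiation assistant sees the compliance flags and the approval coordinator sees request history. The vendor list, jurisdiction limits, quarter-end rule, and approver names are all hypothetical, and the agents run in a fixed order for brevity, whereas a real graph could also branch, roll back, or run them in parallel.

```python
from datetime import date

# Hypothetical reference data; a real deployment would query the ERP instead.
APPROVED_VENDORS = [
    {"name": "vendor-a", "items": {"spindle", "bearing"}},
    {"name": "vendor-b", "items": {"bearing"}},
]
JURISDICTION_LIMITS = {"DE": 100_000, "US": 250_000}  # hypothetical audit thresholds

def requisition_collector(s: dict) -> dict:
    # Stand-in for LLM parsing: flags repeats using earlier requests in shared memory.
    return {"repeat_request": any(h["item"] == s["item"] for h in s["history"])}

def vendor_evaluator(s: dict) -> dict:
    names = [v["name"] for v in APPROVED_VENDORS if s["item"] in v["items"]]
    extra = ["single_source"] if len(names) == 1 else []
    return {"vendors": names, "flags": s["flags"] + extra}

def compliance_checker(s: dict) -> dict:
    limit = JURISDICTION_LIMITS.get(s["country"], 50_000)
    extra = ["over_jurisdiction_limit"] if s["amount"] > limit else []
    return {"flags": s["flags"] + extra}

def negotiation_assistant(s: dict) -> dict:
    # Sees the flags raised upstream: any flag hands negotiation to a human manager.
    return {"negotiation_mode": "human_manager" if s["flags"] else "automated_email"}

def is_quarter_end(d: date) -> bool:
    # Hypothetical rule: the last two weeks of a calendar quarter.
    return d.month in (3, 6, 9, 12) and d.day >= 17

def approval_coordinator(s: dict) -> dict:
    approvers = ["department_head"]
    if s["amount"] > 100_000 or "over_jurisdiction_limit" in s["flags"]:
        approvers.append("cfo")
    if is_quarter_end(s["today"]):
        approvers.append("regional_controller")
    if s["repeat_request"]:
        approvers.append("procurement_review")  # possible duplicate order
    return {"approvers": approvers}

PIPELINE = [requisition_collector, vendor_evaluator, compliance_checker,
            negotiation_assistant, approval_coordinator]

def run_procurement(request: dict, history: list, today: date) -> dict:
    state = {**request, "history": history, "flags": [], "today": today}
    for agent in PIPELINE:
        state.update(agent(state))  # each agent reads and extends the same shared state
    return state

order = run_procurement({"item": "spindle", "amount": 120_000, "country": "DE"},
                        history=[{"item": "spindle"}], today=date(2024, 3, 28))
print(order["flags"], order["negotiation_mode"], order["approvers"])
```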

This interconnected memory is critical in enterprise settings, where siloed automations typically lead to disjointed experiences and shadow work.

Where LangGraph Succeeds (and Where It Might Not)

LangGraph’s strength lies in structured, stateful coordination of agents with memory and context. But like any tool, it’s not universally applicable. Based on hands-on implementations, here’s where it shines—and where it might struggle:

Ideal Use Cases

- Multi-agent scenarios where agents must reason, talk to each other, and adapt.

- Human-in-the-loop systems that require contextual decision-making or exception handling.

- Cross-system orchestrations where no single system owns the entire flow (e.g., bridging Salesforce, SAP, internal APIs).

- Dynamic workflows that change based on memory, events, or past actions.

Warnings and Limitations

- High overhead for simple processes: If your use case is just triggering a webhook on form submission, LangGraph might be overkill.

- Learning curve: Enterprise developers used to linear workflows or drag-and-drop builders might find the graph mental model challenging initially.

- LLM dependency risks: If not properly sandboxed or controlled, agentic LLM nodes can introduce nondeterministic behavior.

Also, LangGraph is still maturing as a platform. Some enterprise features—like role-based access controls, full audit trails, or out-of-the-box integrations with older systems—still need custom stitching.

A Note on Governance

Enterprise workflows don’t live in a vacuum. They live in ecosystems full of compliance constraints, audit requirements, security protocols, and change management policies. LangGraph, by design, gives you introspectability—you can log every state change, decision path, and output. That’s essential when your process needs to stand up to scrutiny.

However, governing agent behavior, especially when LLMs are involved, is still a work in progress. Guardrails like prompt validation, output sanitization, and human checkpoints aren’t optional. They need to be embedded from the start.

One tactic we’ve seen work well? Wrapping every LLM node in LangGraph with a pre-flight and post-flight rule layer, kind of like middleware. This lets you catch outliers before they cascade across the workflow.
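A minimal sketch of that middleware pattern, written as a Python decorator: pre-flight checks inspect the input state before the model is called, post-flight checks inspect its output, and any violation diverts the run to human review instead of letting it cascade. The `guarded` decorator, both rules (including the IBAN-like regex), and the stubbed `negotiation_llm_node` are hypothetical, not a LangGraph API.

```python
import functools
import re

def guarded(pre_checks, post_checks):
    """Wrap an LLM node with rule layers that run before and after the model call."""
    def decorator(node):
        @functools.wraps(node)
        def wrapper(state):
            for check in pre_checks:  # pre-flight: validate the input before the model sees it
                problem = check(state)
                if problem:
                    return {"route": "human_review", "guardrail": f"pre-flight: {problem}"}
            result = node(state)
            for check in post_checks:  # post-flight: validate the model's output
                problem = check(result)
                if problem:
                    return {"route": "human_review", "guardrail": f"post-flight: {problem}"}
            return result
        return wrapper
    return decorator

IBAN_LIKE = re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{10,30}\b")

def no_account_numbers(state):
    # Hypothetical rule: never send account-number-like strings to the model.
    return "prompt contains an account number" if IBAN_LIKE.search(state["prompt"]) else None

def discount_within_policy(result):
    # Hypothetical rule: proposed discounts must stay between 0% and 15%.
    pct = result["discount_pct"]
    return None if 0 <= pct <= 15 else f"discount {pct}% outside policy"

@guarded(pre_checks=[no_account_numbers], post_checks=[discount_within_policy])
def negotiation_llm_node(state):
    # Stand-in for a model call that proposes a counteroffer.
    return {"discount_pct": state.get("requested_discount", 5)}

print(negotiation_llm_node({"prompt": "Draft a counteroffer", "requested_discount": 40}))
```

Because the rules live in the wrapper, the same guardrails can be applied to every LLM node without touching the node bodies.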

Observations from the Field

A few firsthand notes from deployments we’ve seen or supported:

- In pharma: A global life sciences firm uses LangGraph to coordinate safety report triage across pharmacovigilance teams, automating both extraction from adverse event reports and routing to medical reviewers—cutting average handling time by 60%.

- In insurance: An underwriting group leverages LangGraph agents to gather customer data, compare policy history, flag anomalies, and prep draft responses—while always allowing underwriters to intercept at decision points.

- In banking: For complex KYC workflows that span multiple systems and regions, LangGraph agents serve as intermediaries—extracting data from documents, reasoning about risk, and routing tasks based on jurisdictional logic and past case history.

What unites all these? They’re non-linear, multi-actor workflows that traditional BPM or RPA tools couldn’t handle without spaghetti logic or brittle integrations.

Final Thoughts

LangGraph isn’t for every workflow. But for the ones that defy simple flowcharts and cookie-cutter bots—especially in enterprises juggling multiple systems, stakeholders, and judgment-based tasks—it opens up a new way of thinking. It’s less about building perfect flows and more about assembling intelligent, autonomous collaborators that understand their role, remember what’s happened, and adapt to what’s next.

Is it a silver bullet? No. But it’s one of the few tools today that feels designed for the messy reality of enterprise work, not just the idealized version in automation brochures.

And maybe that’s what makes it worth paying attention to.