Key Takeaways

- Voice-first interfaces often target older systems first because the operational pain is greater, not because integration is easier.

- A robust voice-to-legacy pipeline requires careful orchestration across ASR, NLU, connectors, and guardrails to bridge conversational input with rigid systems.

- No single integration pattern fits all—teams must choose between RPA, terminal emulation, middleware, or hybrid approaches depending on stability, risk, and data flow.

- Reliability and subtle UX design choices—such as adaptive prompts, selective confirmations, and safe barge-in—make the difference between a flashy demo and a dependable daily tool.

- Security, compliance, and operational ownership are critical; without proper privilege scoping, dual controls, and maintenance responsibility, the system will degrade over time.

Enterprise software has a long tail. Green-screen terminals still route freight. Client–server tools built around the turn of the century still run payroll and inventory. Some systems live inside Citrix sessions; others hide behind terminal emulators. None of them was designed to hold a conversation. Yet staff increasingly expect to speak a command and get a result. The bridge between those expectations and those systems is a voice-driven autonomous agent—part interpreter, part traffic cop, part stubbornly patient clerk.

The concept is simple; the reality, not so much. Spoken input is messy and ambiguous, whereas applications demand exactness. Somewhere in the middle, orchestration has to reconcile “Check ETA for order 7349 and notify Raj if it slips past Friday” with navigation keystrokes and quirky field formats. It can be elegant. It can also snap like a dry twig when a screen moves two pixels.

Where voice fits—and why it shows up first in “old” environments

Contrary to the usual assumption, older platforms often get voice enablement before newer ones. Not because they are easier, but because the pain is higher.

- Hands are busy. Warehouse operators and field engineers cannot keep switching to a terminal.

- Navigation is costly. Multi-step paths through arcane menus create cognitive load and delay.

- Training cycles never end. A new hire needs weeks to memorize where “Goods Receipt > Advanced Search > Status” lives.

- Quick wins are visible. Replacing a five-minute terminal dance with a ten-second spoken lookup changes sentiment overnight.

Another under-discussed factor: risk. Full system replacement threatens uptime. A thin conversational layer wrapped around the existing stack changes almost nothing below the waterline.

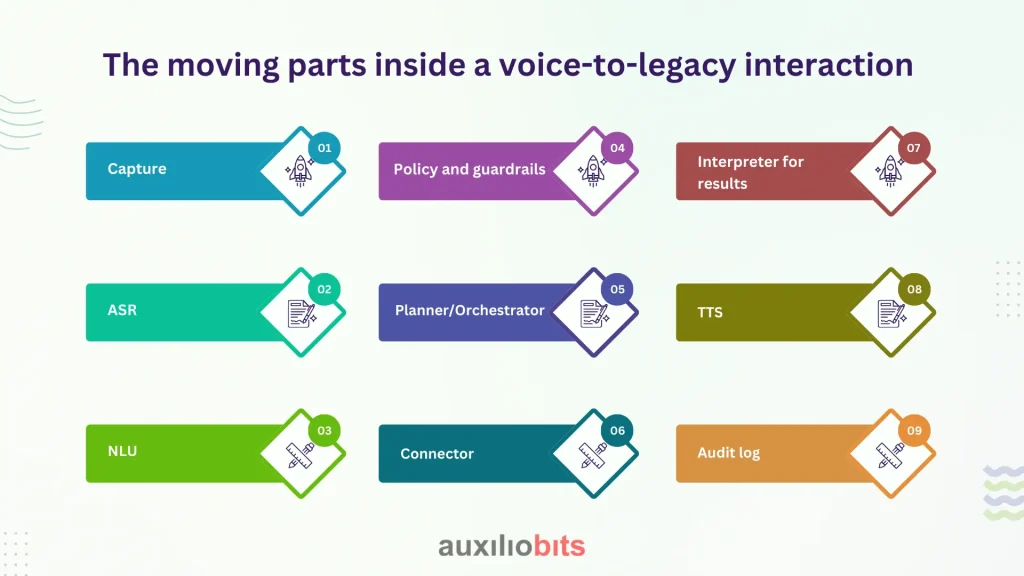

The moving parts inside a voice-to-legacy interaction

Strip off the UI gloss, and the pipeline looks like this:

- Capture: Microphones with sensible placement and basic echo control. Shop floors and ambulances are loud; hardware matters.

- ASR: Automatic speech recognition converts the audio signal to text. Domain vocabulary (SKUs, drug names, feeder lines) lowers error rates substantially.

- NLU: Intent and entity extraction. “check ETA” → action; “order 7349” → identifier; “Friday” → time constraint.

- Policy and guardrails: Permission checks. Can the requester trigger a write? Is an approval required?

- Planner/Orchestrator: Chooses the path (API call if available, fallback to RPA, otherwise terminal commands) and inserts confirmations where needed.

- Connector: The actual bridge, whether a REST/SOAP adapter, message broker, RPA robot, or 3270/5250 terminal emulator.

- Interpreter for results: Legacy output is terse (codes, fixed-width lines, low-context fields) and has to be converted to human-readable phrasing.

- TTS: Text-to-speech with interruptions (barge-in) enabled. No one wants to sit through a paragraph if a new thought arrives mid-sentence.

- Audit log: Who asked, what was heard, which steps were fired, what changed, and the evidence.

If any piece drifts out of tune—new jargon in the field, a changed hotkey in the emulator, a password policy tweak—the experience degrades. Sometimes subtly. Sometimes dramatically.
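To make the NLU stage concrete, here is a minimal rule-based sketch that pulls an intent and entities out of the example utterance used earlier. The `parse_command` function, the `ParsedCommand` fields, and the regex patterns are all hypothetical; a production system would more likely use a trained model than hand-written patterns.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class ParsedCommand:
    intent: Optional[str]    # e.g. "check_eta"
    order_id: Optional[str]  # e.g. "7349"
    deadline: Optional[str]  # e.g. "friday"
    notify: Optional[str]    # e.g. "Raj"


# Hypothetical patterns, for illustration only.
INTENT_PATTERNS = {"check_eta": re.compile(r"\bcheck\s+eta\b", re.I)}
ORDER_RE = re.compile(r"\border\s+(\d+)\b", re.I)
DAY_RE = re.compile(
    r"\b(monday|tuesday|wednesday|thursday|friday|saturday|sunday)\b", re.I
)
NOTIFY_RE = re.compile(r"\bnotify\s+(\w+)", re.I)


def parse_command(text: str) -> ParsedCommand:
    """Extract intent and entities; missing pieces come back as None."""
    intent = next(
        (name for name, rx in INTENT_PATTERNS.items() if rx.search(text)), None
    )
    order = ORDER_RE.search(text)
    day = DAY_RE.search(text)
    who = NOTIFY_RE.search(text)
    return ParsedCommand(
        intent=intent,
        order_id=order.group(1) if order else None,
        deadline=day.group(1).lower() if day else None,
        notify=who.group(1) if who else None,
    )
```

Returning `None` for anything not found lets the orchestrator decide whether to ask a follow-up question rather than guess.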

Patterns that get shipped

No single pattern wins everywhere. Teams mix and match.

Screen automation (RPA) that “drives” the UI

- Good when there are no APIs, and screens stay stable for years.

- Fragile if layouts change often or dynamic elements shuffle.

- Works best with selectors that target labels, not coordinates.

Terminal emulation (3270/5250/VT)

- Surprisingly reliable—text screens don’t drift visually.

- Completely unforgiving of typos and timing.

- Expect to implement pacing, retries, and screen-state assertions.
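Pacing, retries, and screen-state assertions can be sketched without committing to any particular emulator library. The version below assumes two hypothetical callables, `send_keys` and `read_screen`, supplied by whatever 3270/5250 client is in use; the retry count and delay are placeholder values.

```python
import time
from typing import Callable


class ScreenMismatch(Exception):
    """Raised when the expected screen never appears."""


def wait_for_screen(
    read_screen: Callable[[], str],
    expected: str,
    retries: int = 5,
    delay_s: float = 0.2,
) -> str:
    """Poll the emulator until `expected` text is on screen, or give up."""
    for _ in range(retries):
        screen = read_screen()
        if expected in screen:
            return screen
        time.sleep(delay_s)  # pacing: give the host time to repaint
    raise ScreenMismatch(f"expected {expected!r} after {retries} reads")


def send_and_assert(
    send_keys: Callable[[str], None],
    read_screen: Callable[[], str],
    keys: str,
    expected: str,
) -> str:
    """Send keystrokes, then refuse to continue until the right screen shows."""
    send_keys(keys)
    return wait_for_screen(read_screen, expected)
```

Asserting on screen text after every keystroke batch is what keeps a mistimed macro from typing into the wrong field.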

Middleware wrappers

- A small service that pretends to be an API for the agent and speaks “legacy” to the backend.

- Adds a layer of sanity; becomes the place to enforce policy and caching.

- Warning: a wrapper can age into a new legacy if neglected.

Database read-only side doors

- Useful for quick lookups with heavy traffic (status, balances, counts).

- Dangerous for writes; bypassing business rules invites data skew.

- Pair with reconciliation jobs and idempotent operations.

Hybrid choreography

- Read via SQL or API, write via RPA so validations fire.

- Escalate to a human for high-risk commits or edge cases.

- Not elegant, usually necessary.

A side comment no one likes hearing: “Email gateway” still appears on architecture diagrams. Some systems only accept changes by consuming a structured email. A voice agent can generate those, too. Ugly, yes. Effective, also yes.

Subtle design choices that separate “demo” from “daily use”

A polished voice demo and a durable production assistant differ in tiny decisions.

Phonetic mode on demand

- IDs like C7ZN are misheard in noisy rooms. Offer a quick switch: the user says “spell code,” then the agent accepts phonetic-alphabet words (alpha, bravo) or digit-by-digit input.
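A spelled-out identifier can be decoded with a plain lookup table. This is a minimal sketch that assumes the ASR transcript arrives as space-separated words; `decode_spelled` and the accepted spellings are illustrative choices, not a standard API.

```python
# Phonetic-alphabet words mapped to letters, with a few common spelling variants.
NATO = {
    "alfa": "A", "alpha": "A", "bravo": "B", "charlie": "C", "delta": "D",
    "echo": "E", "foxtrot": "F", "golf": "G", "hotel": "H", "india": "I",
    "juliett": "J", "juliet": "J", "kilo": "K", "lima": "L", "mike": "M",
    "november": "N", "oscar": "O", "papa": "P", "quebec": "Q", "romeo": "R",
    "sierra": "S", "tango": "T", "uniform": "U", "victor": "V",
    "whiskey": "W", "xray": "X", "x-ray": "X", "yankee": "Y", "zulu": "Z",
}
DIGITS = {
    "zero": "0", "one": "1", "two": "2", "three": "3", "four": "4",
    "five": "5", "six": "6", "seven": "7", "eight": "8", "nine": "9",
    "niner": "9",
}


def decode_spelled(utterance: str) -> str:
    """Turn 'charlie seven zulu november' into 'C7ZN'."""
    out = []
    for token in utterance.lower().split():
        if token in NATO:
            out.append(NATO[token])
        elif token in DIGITS:
            out.append(DIGITS[token])
        elif len(token) == 1 and token.isalnum():
            out.append(token.upper())  # ASR already produced a bare character
        else:
            # Fail loudly so the agent re-prompts instead of guessing.
            raise ValueError(f"unrecognized token: {token!r}")
    return "".join(out)
```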

Confidence-driven confirmations

- Don’t confirm everything. Confirm only when ASR confidence drops below a threshold or the action is irreversible.
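The rule reduces to a small predicate. The sketch below assumes the ASR engine reports a confidence between 0 and 1; the `0.85` default is an arbitrary placeholder that would need tuning against real transcripts.

```python
def needs_confirmation(
    asr_confidence: float,
    irreversible: bool,
    threshold: float = 0.85,  # assumed value, tune per deployment
) -> bool:
    """Confirm only when the transcript is shaky or the action cannot be undone."""
    return irreversible or asr_confidence < threshold
```

A high-confidence lookup passes straight through, while any irreversible write is read back regardless of how clearly it was heard.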

Adaptive prompts

- If the warehouse always checks the same 12 SKUs at 7:30 a.m., offer them unprompted. If a field engineer consistently says “last service,” map that colloquialism to the right timestamp.

Barge-in with state safety

- Allow interruptions, but don’t leave half-completed writes. Commit checkpoints; on interrupt, roll back or resume cleanly.

- Fill waits with progress updates: “Pulling shipment data… last scan shows a 12-minute delay at Gate B.” Progress plus partial value beats dead air.
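One way to keep interruptions from leaving half-completed writes is to pair each step with an undo action and check for a barge-in between steps. This is a minimal sketch under that assumption; `run_with_rollback` and the `(do, undo)` step shape are hypothetical, and many legacy writes have no clean undo, in which case the checkpoint has to sit before the first write.

```python
from typing import Callable, List, Tuple

# Each step is a (do, undo) pair of zero-argument callables.
Step = Tuple[Callable[[], None], Callable[[], None]]


def run_with_rollback(steps: List[Step], interrupted: Callable[[], bool]) -> bool:
    """Run steps in order; if the user barges in, undo completed steps.

    Returns True when every step ran, False when the sequence was rolled back.
    """
    undo_stack: List[Callable[[], None]] = []
    for do, undo in steps:
        if interrupted():
            # Roll back in reverse order so later steps are undone first.
            for undo_step in reversed(undo_stack):
                undo_step()
            return False
        do()
        undo_stack.append(undo)
    return True
```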

Reliability engineering

Voice feels slow long before a screen does. A four-second gap on a web page is tolerable; on a call, it feels broken. Reliability work, therefore, becomes the difference between adoption and abandonment.

Latency budgets

- Set a total envelope (e.g., 1500 ms target, 3000 ms max). Budget pieces: ASR, NLU, middleware, connector, system. If the legacy hop alone eats two seconds, compensate elsewhere.

Fallback paths

- If the API path trips, drop to cached answers or a screen path. If everything fails, say so quickly and route to a human.
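The fallback order and the overall time envelope can be combined in one small loop. The sketch below assumes each path is a hypothetical zero-argument callable that either returns an answer or raises; it checks the budget only between attempts and does not interrupt a call that is already running, so each connector still needs its own timeout.

```python
import time
from typing import Callable, Optional, Sequence, Tuple


def answer_within_budget(
    paths: Sequence[Tuple[str, Callable[[], str]]],
    max_ms: float = 3000.0,
    clock: Callable[[], float] = time.monotonic,
) -> Tuple[str, Optional[str]]:
    """Try each path in order until one answers or the budget is spent.

    Returns (path_name, answer), or ("human", None) when nothing worked.
    """
    start = clock()
    for name, fetch in paths:
        if (clock() - start) * 1000 >= max_ms:
            break  # out of time: stop trying and hand off
        try:
            return name, fetch()
        except Exception:
            continue  # this path tripped; fall through to the next one
    return "human", None
```

A typical call would pass the paths in preference order, for example API first, then cache, then a screen path, so the agent fails fast and routes to a person when all of them are exhausted.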

Idempotency keys

- A must for writes. Duplicate voice commands are common (“repeat that” isn’t always heard as a question).
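A minimal sketch of the idea: derive a key from who asked, what they asked for, and a coarse time window, then skip the write if that key has already been executed. The key fields and the in-memory `WriteGuard` are assumptions for illustration; a real deployment would keep the keys in a shared store with expiry.

```python
import hashlib
from typing import Any, Callable, Dict


def idempotency_key(user_id: str, intent: str, target_id: str, window: str) -> str:
    """Same user, intent, target, and time window produce the same key."""
    raw = "|".join([user_id, intent, target_id, window])
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


class WriteGuard:
    """Runs each keyed write at most once and replays the stored result."""

    def __init__(self) -> None:
        self._results: Dict[str, Any] = {}

    def execute(self, key: str, write: Callable[[], Any]) -> Any:
        if key in self._results:
            return self._results[key]  # duplicate command: no second write
        result = write()
        self._results[key] = result
        return result
```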

Session resilience

- Systems behind Citrix or with short timeouts need re-login playbooks. Cache sessions per user role; refresh proactively.

Drift detection

- ASR models drift as vocabularies change. RPA scripts drift as UIs evolve. Monitor error signatures, not just outages.
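Drift usually shows up as a rising rate of small failures rather than an outage. The sketch below tracks one such signal (say, rejected confirmations or failed screen assertions) over a rolling window and flags when it exceeds a multiple of a known baseline. `DriftMonitor`, the window size, and the factor are hypothetical choices.

```python
from collections import deque
from typing import Deque


class DriftMonitor:
    """Flags when the recent failure rate climbs well above the baseline."""

    def __init__(self, baseline_rate: float, window: int = 200, factor: float = 2.0):
        self.baseline_rate = baseline_rate
        self.window = window
        self.factor = factor
        self._events: Deque[bool] = deque(maxlen=window)

    def record(self, failed: bool) -> bool:
        """Record one outcome; return True if drift is suspected."""
        self._events.append(failed)
        if len(self._events) < self.window:
            return False  # not enough data to judge yet
        rate = sum(self._events) / len(self._events)
        return rate > self.baseline_rate * self.factor
```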

A side note that sounds trivial until it isn’t: clock skew. If “yesterday” is computed in one service with a different timezone than the terminal host, reports disagree. Users will trust the green screen, not the agent.
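The skew is easy to reproduce. In this sketch, “yesterday” is computed against an explicit host timezone instead of whatever the service defaults to; `yesterday_on_host` is a hypothetical helper, and the fixed UTC offsets stand in for a real zone definition that would also handle daylight saving.

```python
from datetime import date, datetime, timedelta, timezone


def yesterday_on_host(now_utc: datetime, host_tz: timezone) -> date:
    """Return 'yesterday' as the terminal host would see it."""
    local_now = now_utc.astimezone(host_tz)
    return (local_now - timedelta(days=1)).date()


# 03:00 UTC on 2 March is still the evening of 1 March on a UTC-6 host.
now = datetime(2024, 3, 2, 3, 0, tzinfo=timezone.utc)
host = timezone(timedelta(hours=-6))

print(yesterday_on_host(now, timezone.utc))  # 2024-03-01
print(yesterday_on_host(now, host))          # 2024-02-29
```

Passing the host's zone explicitly to every date calculation keeps the agent's answer aligned with what the green screen shows.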

Security and compliance

Voice plus automation raises eyebrows, and rightly so.

Impersonation vs. service identity

- Two patterns: the agent acts as the user (strong audit mapping) or acts as a service account (simpler but riskier). Many choose a hybrid: read as service, write as user.

Scoped privileges

- Create minimal roles for agents; never reuse full human roles.

Dual controls

- Over a threshold (refunds, master data edits), require spoken confirmation and a second factor or supervisor approval.
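The threshold rule can be expressed as a lookup that returns the controls an action must clear before it runs. Everything named here is hypothetical: the `Action` shape, the refund limit of 100, and the control labels are placeholders for whatever the organization's policy actually says.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Action:
    kind: str            # "read" or "write"
    category: str        # e.g. "refund", "master_data_edit", "note"
    amount: float = 0.0  # monetary value, when applicable


# Assumed policy values, for illustration only.
AMOUNT_LIMITS = {"refund": 100.0}
ALWAYS_DUAL = {"master_data_edit"}


def required_controls(action: Action) -> List[str]:
    """List the extra controls an action needs before execution."""
    if action.kind == "read":
        return []
    over_limit = action.amount > AMOUNT_LIMITS.get(action.category, float("inf"))
    if action.category in ALWAYS_DUAL or over_limit:
        return ["spoken_confirmation", "second_factor_or_supervisor"]
    return []
```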

Redaction

- Strip sensitive snippets from stored audio and transcripts. Keep hashes for forensic traceability.

Readable audit trail

- “Heard X; parsed Y; executed Z; result R; hashes H” beats a blob of JSON no one ever reviews.
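Redaction and a readable audit line fit together naturally. In this sketch, long digit runs stand in for “sensitive snippets” and are replaced by a truncated keyed hash, so the same value can be matched later without being stored. The pattern, the `AUDIT_KEY` placeholder, and the line format are assumptions; real deployments need their own sensitive-data rules and proper key management.

```python
import hashlib
import hmac
import re
from typing import List, Tuple

AUDIT_KEY = b"replace-with-a-managed-secret"  # hypothetical placeholder key
SENSITIVE_RE = re.compile(r"\b\d{8,}\b")      # assumed rule: 8+ digit runs


def _digest(value: str) -> str:
    """Keyed hash, so short values cannot be recovered by simple guessing."""
    return hmac.new(AUDIT_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:12]


def redact(text: str) -> Tuple[str, List[str]]:
    """Replace sensitive runs with hash tags; return the text and the hashes."""
    hashes: List[str] = []

    def _swap(match: "re.Match[str]") -> str:
        digest = _digest(match.group(0))
        hashes.append(digest)
        return f"[REDACTED:{digest}]"

    return SENSITIVE_RE.sub(_swap, text), hashes


def audit_line(heard: str, parsed: str, executed: str, result: str) -> str:
    """Build one human-readable audit entry with the transcript redacted."""
    safe, hashes = redact(heard)
    return (
        f"Heard {safe!r}; parsed {parsed}; executed {executed}; "
        f"result {result}; hashes {','.join(hashes) or '-'}"
    )
```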

Real-life Examples

1. Dispatch on an AS/400

A regional carrier kept a 5250 app for loads and lanes. Dispatchers juggled calls and screens. A voice assistant joined the telephony system: “Where’s truck 19?” triggered a terminal macro to pull GPS pings from a nightly file import. Gains: ~18% faster call handling. First breakage: a security update inserted a CAPTCHA. Fix: shift login to a pre-authenticated session pool and rotate tokens internally.

2. Theater scheduling in a hospital

Operating room availability lived on a text terminal with arcane commands. A spoken query—“Is OR-3 free for two hours next Tuesday afternoon?”—translated into three sequential screens and a printable report. The catch? Ambient noise during peak times. Solution: beamforming microphones and an “exact repeat” mode for room names. It felt old and new at once—and that was fine.

3. Utilities maintenance and the stubborn CMMS

A maintenance team needed hands-free closure of work orders, with commands like “Close WO-45719; replaced feeder cable; next inspection in six months.” The agent handled templated notes and scheduling math, then wrote via RPA so all validations fired. Jargon training stopped “recloser” from being transcribed as “recluse” and mapped it to the right asset type. The thin edge case: future-date validation differed by environment. The agent learned both rules.

4. Manufacturing quality call-outs

An MES recorded deviations. Line supervisors spoke: “Log deviation on Line B: blister pack tears at station five, batch 7K.” Voice captured the narrative, then posted a structured record. The same agent refused to close a lot without a human sign-off. Fast when it should be, stubborn when it had to be.

Where this approach simply doesn’t fit

- Volatile UIs that shuffle fields based on data. RPA turns into whack-a-mole.

- Irreversible, high-risk actions (e.g., securities trade execution). A human last mile is mandatory.

- Non-stationary noise environments (jackhammers, sirens) without serious audio gear.

- Organizations unwilling to treat the glue layer as a product. If no owner exists, entropy wins.

A small contradiction surfaces here: voice often speeds things up most where stakes are lowest (lookups, notes), yet leadership wants it first where stakes are highest (financial adjustments). Expectations need tuning.

A closing note

The durable pattern is clear. Voice becomes a thin control layer. Autonomous agents do the parsing, the planning, and the politeness. Connectors shoulder the awkward parts—keystrokes, terminal commands, awkward reports—and shield people from idiosyncrasies that shouldn’t matter anymore. The underlying systems keep their gravity; the surface gets easier to live with. Some days, that is the exact right amount of change.