Key Takeaways

- Composable architecture isn’t failing because of technology—it’s failing because of centralized thinking. Agentic thinking shifts composability from rigid orchestration to distributed, context-aware collaboration.

- Agents replace static logic with reasoning and intent. They move enterprises from command execution (APIs) to goal negotiation (intent-driven systems).

- Cognitive modularity enables systems to understand the ‘why,’ not just the ‘how.’ That makes architectures more resilient to change and able to adjust themselves without manual intervention.

- Bounded autonomy is essential. Agents must act independently within well-defined constraints to balance flexibility with compliance.

- The next generation of composability is cognitive. Enterprises that embed reasoning into their architectures—through agentic design—will achieve true adaptability, not just modularity.

Every architect has a version of the same story. You build a system that’s “composable.” It looks great on paper—modular, API-first, and well-governed. Then three months later, a new business requirement comes along, and that “composable” masterpiece behaves like poured concrete. It doesn’t bend; it cracks.

We love the idea of composability. It promises speed, reusability, and freedom. But in reality, composable enterprise architecture often hits a wall because the organization itself isn’t built to think in a composable way. That’s where agentic thinking makes a difference—not as a technology, but as a mindset shift.

Composability Has a Thinking Problem

Most composable frameworks are designed like Lego sets. You have blocks (services, APIs, modules) that can be rearranged to form new solutions. Simple enough in theory. But business systems aren’t toys. They don’t exist in isolation, and their environment changes faster than you can rebuild them.

The hidden assumption behind composability is that someone—a person, a team, or an orchestration layer—knows how to reassemble those blocks correctly every time. That’s the weak link. It’s the dependency on central coordination, not the technology stack itself, that slows everything down.

Agentic thinking flips that dependency. Instead of central orchestration, you get distributed cognition—systems that don’t just wait for instructions but decide what to do based on context and intent.

That sounds abstract, but it’s not. Think of it as replacing flowcharts with reasoning.

From APIs to Intent

APIs describe what a service can do. Agents, in contrast, represent why something should be done—and when it makes sense to adapt.

That small linguistic difference changes how an enterprise functions.

An API will always execute the same logic. It’s a command. An agent evaluates context before acting. It’s a conversation.

So instead of having a central orchestrator tell ten systems what to do, you might have ten agents negotiating outcomes based on shared goals. Each one knows its role and limits. They coordinate, not just connect.

For example:

- A pricing agent could lower rates when demand dips—but only within a margin defined by finance.

- A logistics agent might reroute shipments when weather data predicts a disruption.

- A compliance agent could block a transaction the moment it detects policy violations, not two weeks later when audit logs are reviewed.
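To make the pricing example concrete, here is a minimal Python sketch of bounded decision-making. Everything in it is hypothetical: `PricingAgent`, `MarginPolicy`, the `demand_ratio` signal, and the 5% per-step discount cap are illustrative assumptions, not a real pricing engine. The point is the shape: the agent reasons about demand, but the finance-owned margin floor always has the last word.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MarginPolicy:
    """Hypothetical constraint owned by finance: the lowest acceptable margin."""
    min_margin: float  # 0.15 means price must stay at or above cost * 1.15


@dataclass
class PricingAgent:
    """Illustrative agent: reacts to demand, but never breaches the margin floor."""
    unit_cost: float
    policy: MarginPolicy
    max_discount_per_step: float = 0.05  # assumed cap on how fast prices may fall

    def floor_price(self) -> float:
        return self.unit_cost * (1 + self.policy.min_margin)

    def propose_price(self, current_price: float, demand_ratio: float) -> float:
        """demand_ratio = observed demand / expected demand (1.0 means on target)."""
        if demand_ratio >= 1.0:
            return current_price  # demand is healthy, so there is no reason to cut
        # Discount in proportion to the shortfall, capped per step
        discount = min(1.0 - demand_ratio, self.max_discount_per_step)
        candidate = current_price * (1 - discount)
        # The finance constraint overrides the agent's own reasoning
        return round(max(candidate, self.floor_price()), 2)


agent = PricingAgent(unit_cost=80.0, policy=MarginPolicy(min_margin=0.15))
print(agent.propose_price(100.0, demand_ratio=0.5))  # 95.0
print(agent.propose_price(93.0, demand_ratio=0.2))   # 92.0, held at the floor
```

With a unit cost of 80 and a 15% minimum margin, the floor is 92. Weak demand can pull a price of 100 down to 95, but never below 92. Keeping the floor in a separate policy object means finance can change it without touching the agent's logic.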

That’s the cognitive version of composability. It’s not just modular software—it’s modular decision-making.

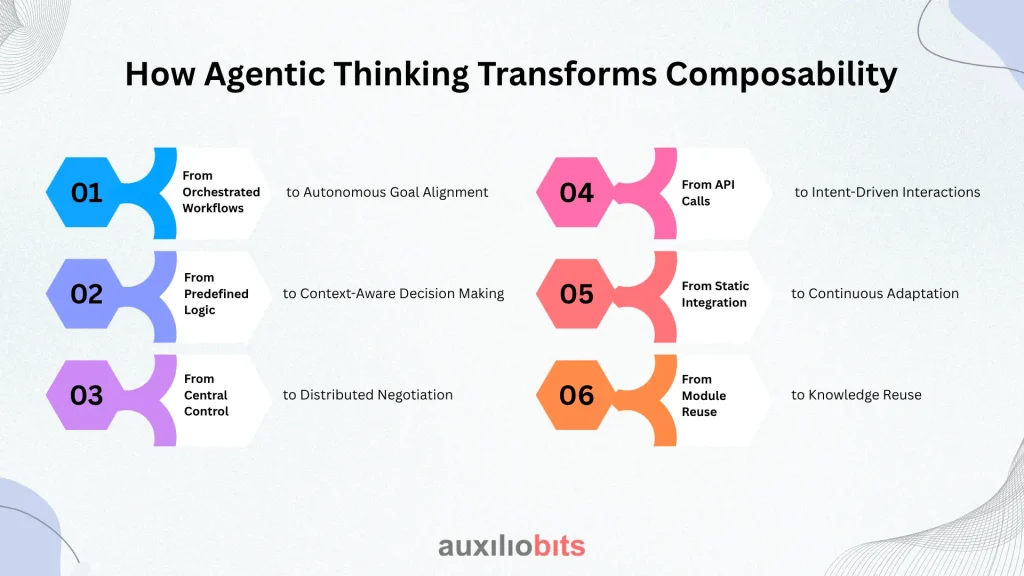

How Agentic Thinking Transforms Composability

Agentic thinking doesn’t replace composable architecture—it rewires how it behaves. Instead of rigid modules waiting for orders, you get intelligent components that reason, adapt, and collaborate.

- From Orchestrated Workflows to Autonomous Goal Alignment

- From Predefined Logic to Context-Aware Decision Making

- From Central Control to Distributed Negotiation

- From API Calls to Intent-Driven Interactions

- From Static Integration to Continuous Adaptation

- From Module Reuse to Knowledge Reuse
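The shift from API calls to intent-driven interactions is easier to see side by side. The sketch below reuses the logistics example: a command-style call picks the route for the service, while an intent-style request states the goal (arrive within a deadline, tolerate limited disruption risk) and leaves the choice to the agent. `Route`, `DeliveryIntent`, the `weather_risk` score, and both functions are hypothetical names for illustration; a real system would pull risk from an actual weather or disruption feed.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    hours: float         # expected transit time
    weather_risk: float  # 0.0 = clear, 1.0 = near-certain disruption (hypothetical feed)


@dataclass(frozen=True)
class DeliveryIntent:
    """States the goal ("arrive within N hours") rather than the steps ("use route X")."""
    deadline_hours: float
    max_risk: float  # disruption risk the requester is willing to tolerate


def command_reroute(routes: list[Route], route_name: str) -> Route:
    """Command style: the caller chooses; the service executes, whatever the weather."""
    # Raises StopIteration if the name is unknown; acceptable for a sketch
    return next(r for r in routes if r.name == route_name)


def fulfil_intent(routes: list[Route], intent: DeliveryIntent) -> Route | None:
    """Intent style: the agent picks any route that meets the goal in the current context."""
    feasible = [
        r for r in routes
        if r.hours <= intent.deadline_hours and r.weather_risk <= intent.max_risk
    ]
    if not feasible:
        return None  # the goal cannot be met as stated: escalate instead of guessing
    # Prefer the lowest-risk route, breaking ties on transit time
    return min(feasible, key=lambda r: (r.weather_risk, r.hours))


routes = [Route("coastal", hours=20, weather_risk=0.7), Route("inland", hours=26, weather_risk=0.1)]
print(command_reroute(routes, "coastal").name)                       # coastal, storm or not
print(fulfil_intent(routes, DeliveryIntent(30, max_risk=0.3)).name)  # inland
print(fulfil_intent(routes, DeliveryIntent(24, max_risk=0.3)))       # None
```

The command version will send a shipment down the coastal route into a storm because that is what it was told. The intent version returns `None` when no route satisfies the goal, which is the signal to escalate rather than guess.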

Why We Keep Getting Composability Wrong

Here’s the part no whitepaper likes to admit: most enterprises already think they’re composable. They have APIs, a microservices registry, and even a governance layer with service contracts. And yet, every new integration takes weeks of coordination and manual testing.

That’s not a lack of technology. It’s a lack of trust between systems.

Composability in its current form assumes predictability — fixed inputs, known outputs, stable dependencies. Business reality laughs at that. New regulations arrive overnight. Data formats change midstream. Teams reorganize faster than codebases can adapt.

Agentic systems don’t crumble under those shifts. They interpret, adjust, and sometimes, yes, make mistakes. But they recover faster because they understand why they exist, not just how they function.

One insurance client had a “composable” claims platform. But every change request still required six teams and three weeks of testing. When they introduced autonomous validation agents, the system began adapting on its own: skipping redundant checks and prioritizing critical claims. The payoff wasn’t in raw speed, but in how much thinking the system could do independently.

Cognitive Modularity: The Missing Ingredient

True composability isn’t just about breaking systems apart. It’s about ensuring each part can think for itself while understanding the whole.

Agentic thinking introduces something we rarely talk about in enterprise design—intent propagation. That means each digital component doesn’t just execute a rule; it understands the goal behind that rule.

That’s how you get behavior like:

- A risk assessment agent deciding to request additional data instead of halting a process.

- A workflow agent adjusting its path when new dependencies appear.

- A data agent choosing between two conflicting data sources based on reliability.
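Here is one way the data agent in that list might weigh conflicting sources. This is a sketch under explicit assumptions: the `RELIABILITY` scores, the source names, and the 12-hour half-life for staleness are made up for illustration. What matters is that the agent returns its reasoning along with its choice, so the decision can be inspected later.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Reading:
    source: str
    value: float
    observed_at: datetime


# Hypothetical reliability scores, e.g. derived from each source's past error rate
RELIABILITY = {"erp": 0.9, "spreadsheet_import": 0.6, "partner_feed": 0.75}


def score(reading: Reading, now: datetime, half_life: timedelta = timedelta(hours=12)) -> float:
    """Source reliability discounted by age: weight halves every half_life."""
    age = (now - reading.observed_at) / half_life  # timedelta / timedelta gives a float
    return RELIABILITY.get(reading.source, 0.0) * 0.5 ** age


def resolve(readings: list[Reading], now: datetime) -> tuple[Reading, str]:
    """Pick the most trustworthy reading and explain the choice."""
    best = max(readings, key=lambda r: score(r, now))
    others = ", ".join(f"{r.source} ({score(r, now):.2f})" for r in readings if r is not best)
    return best, f"chose {best.source} ({score(best, now):.2f}) over {others}"


now = datetime(2024, 1, 2, tzinfo=timezone.utc)
readings = [
    Reading("erp", 118.0, now - timedelta(hours=24)),
    Reading("spreadsheet_import", 131.0, now - timedelta(hours=1)),
]
best, why = resolve(readings, now)
print(best.source)  # spreadsheet_import
print(why)
```

With these numbers, a fresh spreadsheet import outranks a day-old ERP reading, which is the kind of judgment a fixed precedence rule ("ERP always wins") cannot make.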

The magic isn’t autonomy. It’s awareness. Agents make composable systems context-sensitive. Instead of wiring everything manually, you define relationships in terms of goals and constraints. The system figures out the rest.

Control vs. Autonomy

Here’s where things get messy. Every enterprise says it wants autonomy—until it actually has it. Give systems too much freedom, and compliance gets nervous. Tighten governance too much, and flexibility dies. The trick is finding bounded autonomy—agents can act independently but within limits that align with business intent.

Think of it like this: A finance agent might automatically reclassify expenses under $1,000, but must seek approval above that threshold. It’s self-managing up to a point, but not ungoverned.
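Bounded autonomy is easy to express in code once the bound is explicit. The following minimal sketch assumes a hypothetical `FinanceAgent` and `Expense` type, uses `Decimal` to avoid floating-point money errors, and treats an amount of exactly $1,000 as requiring approval (the example above leaves that edge case open). The approval queue is a plain list standing in for whatever workflow tool an organization actually uses.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from decimal import Decimal

APPROVAL_THRESHOLD = Decimal("1000")  # the $1,000 limit from the example


@dataclass
class Expense:
    expense_id: str
    amount: Decimal
    category: str


@dataclass
class FinanceAgent:
    # Stand-in for a real approval workflow: (expense_id, old_category, new_category)
    approvals_queue: list[tuple[str, str, str]] = field(default_factory=list)

    def reclassify(self, expense: Expense, new_category: str) -> str:
        if expense.amount < APPROVAL_THRESHOLD:
            expense.category = new_category  # inside its autonomy bound: act directly
            return "applied"
        # Outside the bound: propose the change and leave the decision to a human
        self.approvals_queue.append((expense.expense_id, expense.category, new_category))
        return "pending_approval"


agent = FinanceAgent()
small = Expense("E-101", Decimal("250.00"), "travel")
large = Expense("E-102", Decimal("4200.00"), "travel")
print(agent.reclassify(small, "meals"))  # applied
print(agent.reclassify(large, "capex"))  # pending_approval
```

Moving `APPROVAL_THRESHOLD` changes how much the agent may do alone without changing how it reasons, which is what keeps it self-managing but governed.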

That balance doesn’t happen automatically. It requires cultural and architectural alignment. When leadership tries to impose old command-and-control habits onto an agentic ecosystem, the whole thing collapses under policy contradictions.

The Hidden Costs of Centralized Thinking

Most failures in composable initiatives stem from centralized assumptions. Even when we distribute systems, we centralize thinking through rigid approval processes, dependency matrices, and workflow engines that don’t actually adapt.

Agentic thinking breaks that pattern. Instead of workflows, you have goal networks. Instead of “execute this task,” you have “achieve this state.”

That small linguistic change leads to huge behavioral differences:

- Systems become exploratory rather than procedural.

- Dependencies become negotiable instead of fixed.

- Adaptation becomes systemic, not project-based.
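The difference between "execute this task" and "achieve this state" is roughly the difference between a script and a reconciliation loop. Below is a small illustrative sketch in that style, using inventory levels as a hypothetical goal state; the SKU names, quantities, and the `reorder`/`hold_replenishment` actions are invented for the example. The agent is given only the target state and the observed state, and derives its actions from the gap.

```python
from __future__ import annotations


def reconcile(desired: dict[str, int], observed: dict[str, int]) -> list[tuple[str, str, int]]:
    """Compare the target state with reality and return actions that close the gap.

    Nothing here encodes *how* stock drifted; the agent only knows the state to reach.
    """
    actions = []
    for sku, target in sorted(desired.items()):
        have = observed.get(sku, 0)  # a missing SKU counts as zero stock
        if have < target:
            actions.append(("reorder", sku, target - have))
        elif have > target:
            actions.append(("hold_replenishment", sku, have - target))
    return actions


desired = {"SKU-A": 100, "SKU-B": 80, "SKU-C": 25}
observed = {"SKU-A": 40, "SKU-B": 120}
print(reconcile(desired, observed))
# [('reorder', 'SKU-A', 60), ('hold_replenishment', 'SKU-B', 40), ('reorder', 'SKU-C', 25)]
```

Because the function is simply re-run against whatever state it observes, it handles drift from any cause (a late shipment, a demand spike, a manual correction) without a dedicated workflow for each. Once observed stock matches the target, it returns no actions.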

But—and this matters—agentic architectures demand humility. Not every process benefits from autonomy. Some are better left deterministic: payroll, tax filing, and compliance reporting. Agentic layers work best where uncertainty and human decision-making dominate: supply chains, pricing, customer engagement, and operational resilience.

A Quick Reality Check

Let’s talk about when agentic thinking doesn’t work.

- When data quality is garbage. No reasoning framework can fix missing or inconsistent data. Garbage in, confident garbage out.

- When objectives conflict. If sales wants speed and compliance wants control, an agent can’t mediate values—it needs policy clarity.

- When organizations chase autonomy without context. I’ve seen teams deploy “self-learning agents” that optimize for speed and accidentally break service-level agreements.

It’s not that agentic architectures are fragile. They’re just unforgiving of sloppy intent. You have to know what outcomes you care about and make those explicit.

A Working Model

A mature composable-architecture stack with agentic principles might look like this:

- Agents as primary actors: Each module or service is represented by an intelligent agent responsible for interpreting context and making local decisions.

- Shared semantic layer: Instead of static data schemas, a shared knowledge graph defines relationships dynamically.

- Intent and policy layer: Goals and constraints live in a machine-readable format, so agents negotiate without human babysitting.

- Human oversight channel: Not dashboards, but actual oversight—explaining reasoning, surfacing trade-offs, and allowing overrides when needed.
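For the intent and policy layer, "machine-readable" can start as something as plain as JSON. The sketch below is hypothetical throughout: the policy keys (`max_amount`, `max_percent`, `requires_reason`), the action names, and the `evaluate` function are illustrative, not any specific policy engine's format. It also shows the raw material for the oversight channel: every decision carries human-readable reasons, and any action without a policy escalates instead of proceeding.

```python
import json
from dataclasses import dataclass

# Hypothetical policy document, kept as data rather than hard-coded in agents
POLICY_JSON = """
{
  "refund":   {"max_amount": 500, "requires_reason": true},
  "discount": {"max_percent": 20, "requires_reason": false}
}
"""


@dataclass
class Decision:
    allowed: bool
    reasons: list  # human-readable trail for the oversight channel


def evaluate(policy: dict, action: str, params: dict) -> Decision:
    rules = policy.get(action)
    if rules is None:
        return Decision(False, [f"no policy covers '{action}'; escalating to a human"])
    reasons = []
    if "max_amount" in rules and params.get("amount", 0) > rules["max_amount"]:
        reasons.append(f"amount {params['amount']} exceeds max_amount {rules['max_amount']}")
    if "max_percent" in rules and params.get("percent", 0) > rules["max_percent"]:
        reasons.append(f"percent {params['percent']} exceeds max_percent {rules['max_percent']}")
    if rules.get("requires_reason") and not params.get("reason"):
        reasons.append("a justification is required but none was given")
    if reasons:
        return Decision(False, reasons)
    return Decision(True, [f"'{action}' passes all policy checks"])


policy = json.loads(POLICY_JSON)
print(evaluate(policy, "refund", {"amount": 900}))
```

Keeping policy as data, separate from agent code, is what lets governance change the rules without redeploying the agents.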

Some global enterprises are already experimenting with this. I’ve seen a financial institution use policy-driven AI agents to manage cross-system compliance and a logistics player deploy adaptive routing agents that negotiate delivery schedules based on real-time constraints. It’s not widespread yet, but the early signals are strong.

The Human Mirror

Interestingly, agentic architectures reflect something broader: how organizations themselves are structured.

Centralized IT teams trying to manage a thousand microservices mirror traditional hierarchies—efficient on paper, slow in practice. Distributed, autonomous teams reflect agentic design—each empowered but contextually aligned.

Spotify’s “squad” model gets this right. Each team owns a capability end-to-end, coordinating through shared goals, not directives. Enterprise systems designed with agentic principles end up behaving the same way.

Technology and culture aren’t separate layers; they co-evolve.

A Few Hard Truths

Agentic thinking won’t save an enterprise that’s addicted to control. It also won’t make sense if you’re running on 20-year-old data pipelines and manual approval workflows.

But if your goal is real composability—systems that assemble themselves around changing goals—then autonomy isn’t optional. It’s required.

Practical lessons learned the hard way:

- Start with intent clarity. If your system doesn’t know why it’s doing something, it can’t adapt intelligently.

- Don’t over-automate early. Let agents observe before they act.

- Invest in explainability. You can’t trust what you don’t understand.

- Keep humans in the loop—not because they’re faster, but because they notice what machines miss.
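"Let agents observe before they act" can be made operational with a shadow mode: the agent proposes a decision for every case, a human still makes the real call, and both are logged. The sketch below is one hypothetical way to do that; `ShadowAgent`, the placeholder risk rule, the 95% agreement bar, and the 50-case minimum are assumptions chosen for illustration. The log doubles as an explainability record, since every disagreement comes with the inputs that produced it.

```python
from dataclasses import dataclass, field


@dataclass
class ShadowAgent:
    """Runs alongside humans, logs what it would do, and earns the right to act later."""
    promote_at: float = 0.95  # hypothetical agreement rate required before acting
    min_samples: int = 50     # avoid judging the agent on a handful of cases
    log: list = field(default_factory=list)

    def propose(self, case: dict) -> str:
        # Placeholder reasoning; a real agent would apply its model or rules here
        return "approve" if case.get("risk", 1.0) < 0.3 else "review"

    def observe(self, case: dict, human_decision: str) -> None:
        # Record inputs, proposal, and actual decision so disagreements can be explained
        self.log.append({"case": case, "agent": self.propose(case), "human": human_decision})

    def agreement_rate(self) -> float:
        if not self.log:
            return 0.0
        return sum(e["agent"] == e["human"] for e in self.log) / len(self.log)

    def may_act(self) -> bool:
        return len(self.log) >= self.min_samples and self.agreement_rate() >= self.promote_at
```

The promotion rule is deliberately conservative: a high agreement rate on too few cases proves little, so both conditions must hold before the agent is allowed to act on its own.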

Agentic systems amplify human reasoning; they don’t replace it.

Why It Matters Now

The timing for this shift isn’t just technological—it’s cognitive. We’ve hit the limit of what static composability can offer. The next frontier is systems that can interpret change, not just react to it.

Composable architectures gave us modular software. Agentic thinking gives us modular intelligence.

And that difference—the ability to reason, not just respond—is what will separate adaptive enterprises from those endlessly refactoring yesterday’s integrations.