Key Takeaways

- Business logic must be visible and modular—don’t hide it in prompts or inside agents.

- Use metadata and structured control flow—LLMs shouldn’t infer every decision.

- Tool wrappers should include guardrails—wrap them with policy, not just Python.

- Externalize what you can—via rules engines or policy APIs for better maintainability.

- Every step in the chain should make clear what it does and why. Traceability matters more than cleverness.

When people first get their hands on LangChain, the instinct is to build chains. Input → Agent → Tool → Output. It’s fast, powerful, and deceptively satisfying. But for enterprise use cases—where workflows reflect policy rules, approval gates, SLA conditions, regulatory constraints, and decision forks—you quickly hit a wall.

LangChain gives you the skeleton. It’s up to you to define the muscle: business logic.

And this is where things get messy. Because no one tells you where or how to embed logic that reflects real-world processes. Worse, LangChain’s design encourages putting logic into the chain itself—a choice that, over time, becomes hard to debug, harder to extend, and impossible to explain to anyone who wasn’t the original author.

This blog is about sidestepping those mistakes and embedding business logic where it belongs—firmly, modularly, and maintainably.


What Counts as Business Logic in LLM Workflows?

This isn’t just about “if-then” statements or JSON routing. Business logic is the institutional behavior of your application. It reflects how decisions get made, how exceptions are handled, and what outcomes are permissible.

Here are some examples that show up in LangChain-powered workflows:

- An AI-generated response must be approved by a human if the topic is financial or legal.

- A tool should only be called if the customer’s tier is Enterprise and the query volume exceeds a threshold.

- A refund should be offered only if conditions A, B, and C are met—and even then, only during business hours.

You can’t just “train” a model to learn these. They are policies, not patterns. And while prompts can encapsulate some of this logic, the responsibility often falls on the workflow layer—where LangChain operates.
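To make "policies, not patterns" concrete, here is a minimal sketch that writes the three examples above as plain Python predicates. The topic labels, the `"enterprise"` tier string, the 1,000-query threshold, and the 9:00 to 17:00 business-hours window are illustrative assumptions. Conditions A, B, and C are passed in as hypothetical booleans.

```python
from datetime import datetime, time

# Hypothetical policy constants; real values would come from the business.
REVIEW_TOPICS = {"financial", "legal"}
TOOL_MIN_QUERY_VOLUME = 1_000
BUSINESS_OPEN, BUSINESS_CLOSE = time(9, 0), time(17, 0)


def needs_human_approval(topic: str) -> bool:
    """AI responses on financial or legal topics require human sign-off."""
    return topic.lower() in REVIEW_TOPICS


def may_call_tool(customer_tier: str, query_volume: int) -> bool:
    """Only Enterprise customers above the volume threshold may trigger the tool."""
    return customer_tier.lower() == "enterprise" and query_volume > TOOL_MIN_QUERY_VOLUME


def may_offer_refund(cond_a: bool, cond_b: bool, cond_c: bool, now: datetime) -> bool:
    """Refunds need conditions A, B, and C, and only during business hours.

    Weekends and holidays are ignored in this sketch.
    """
    in_hours = BUSINESS_OPEN <= now.time() < BUSINESS_CLOSE
    return cond_a and cond_b and cond_c and in_hours
```

Each rule is an ordinary function, so a reviewer can read it and a test can exercise it without an LLM in the loop.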

The Core Misunderstanding: Chaining ≠ Workflow Logic

LangChain is a framework for orchestrating components: prompts, LLMs, memory, tools, and retrievers. It does not prescribe how to handle branching, conditionality, fallback mechanisms, exception handling, or role-based behavior.

Yet developers often shove this logic into the chain—in functions wrapped around tools, inside prompts, or worse, hardcoded into the agent executor.

This works—until it doesn’t.

Why?

- Lack of visibility: When logic is buried in a prompt template or an agent’s tool selection code, it becomes invisible to business stakeholders.

- Rigidity: Every time the policy changes, you’re redeploying logic across multiple places.

- Versioning chaos: There’s no clear way to track changes in business rules across workflows or environments.

This is not a criticism of LangChain. It’s a caution against confusing its building blocks with your domain logic layer.

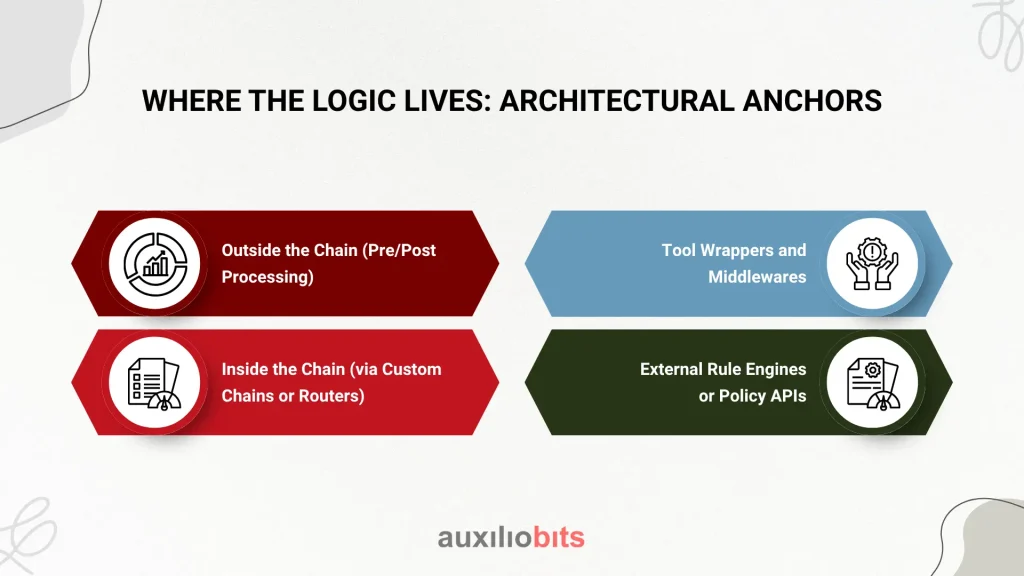

Where the Logic Lives: Architectural Anchors

Business logic in LangChain-powered applications typically needs to anchor itself in one or more of these layers:

Outside the Chain (Pre/Post Processing)

This is where request validation, user entitlement checks, or external system triggers happen. It’s the right place for security and routing logic.
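A minimal sketch of that pre-processing layer, assuming a hypothetical request shape, entitlement table, and input-size limit. The gate validates and authorizes the request before any LLM call, and `run_chain` stands in for whatever chain you invoke next.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical entitlement table; in practice a database or IAM lookup.
ENTITLEMENTS = {
    "alice": {"claims:submit", "claims:view"},
    "bob": {"claims:view"},
}
MAX_INPUT_CHARS = 4_000  # hypothetical limit


@dataclass(frozen=True)
class Request:
    user_id: str
    action: str
    text: str


class RequestRejected(Exception):
    """Raised when a request fails validation or entitlement checks."""


def preprocess(req: Request) -> Request:
    # Validation: reject empty or oversized input before any tokens are spent.
    if not req.text.strip():
        raise RequestRejected("empty request")
    if len(req.text) > MAX_INPUT_CHARS:
        raise RequestRejected("request too long")
    # Entitlement: the user must hold the permission for the requested action.
    if req.action not in ENTITLEMENTS.get(req.user_id, set()):
        raise RequestRejected(f"{req.user_id} lacks {req.action}")
    return req


def handle(req: Request, run_chain: Callable[[str], str]) -> str:
    """Only requests that pass the gate ever reach the chain."""
    return run_chain(preprocess(req).text)
```

In a LangChain app, `run_chain` could simply be a runnable's `invoke` method; the chain itself never needs to know the entitlement rules exist.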

Inside the Chain (via Custom Chains or Routers)

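Here, logic can direct flow between components, for example by using MultiPromptChain (or, in newer LangChain versions, LCEL routing such as RunnableBranch) to route based on input semantics. Use this sparingly: routing rules buried inside chain internals are hard for anyone but developers to review.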

Tool Wrappers and Middlewares

If your tool has business implications (e.g., issuing refunds), it should wrap that functionality with policy enforcement. Think of them as “guardrails,” not “functions.”
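One way to express a tool guardrail is a decorator that runs a policy check with the tool's own arguments before the tool executes. This is a sketch, not a LangChain API: `guarded`, `refund_policy`, the 200 limit, and the `"supervisor"` role are all hypothetical.

```python
import functools
from typing import Callable, Optional

MAX_AUTO_REFUND = 200.0  # hypothetical limit


class PolicyViolation(Exception):
    """Raised when a guarded tool is called outside what policy allows."""


def guarded(policy: Callable[..., Optional[str]]):
    """Run `policy` with the tool's arguments first; a returned string is a denial reason."""
    def decorator(tool):
        @functools.wraps(tool)
        def wrapper(*args, **kwargs):
            reason = policy(*args, **kwargs)
            if reason is not None:
                raise PolicyViolation(f"{tool.__name__} blocked: {reason}")
            return tool(*args, **kwargs)
        return wrapper
    return decorator


def refund_policy(order_id: str, amount: float, user_role: str) -> Optional[str]:
    if amount <= 0:
        return "amount must be positive"
    if amount > MAX_AUTO_REFUND and user_role != "supervisor":
        return f"refunds over {MAX_AUTO_REFUND:.0f} need a supervisor"
    return None


@guarded(refund_policy)
def issue_refund(order_id: str, amount: float, user_role: str) -> str:
    """Issue a refund for an order (stand-in for a real payments call)."""
    return f"refunded {amount:.2f} on {order_id}"
```

Raising an exception keeps the denial out of the model's hands; whether an agent sees it as an error observation is a separate design choice. Because `functools.wraps` preserves the name and docstring, the guarded function should still be registrable as a tool like any other function.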

External Rule Engines or Policy APIs

For mature orgs, this is ideal. Keep business logic in configurable, versioned, policy-as-code systems (like Open Policy Agent or even a custom rules engine) and call them from LangChain tools or middleware.
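A sketch of calling a policy engine from a tool or middleware, assuming an Open Policy Agent server at its default address `http://localhost:8181` and a Rego rule you would write yourself at a hypothetical path such as `claims/refund/allow`. OPA's Data API accepts `POST /v1/data/<path>` with an `{"input": ...}` body and answers with `{"result": ...}`; the `PolicyClient` protocol and the in-memory stub are illustrative additions.

```python
import json
import urllib.request
from typing import Any, Callable, Dict, Protocol


class PolicyClient(Protocol):
    """What the rest of the app depends on: a yes/no answer for a named rule."""
    def allowed(self, rule: str, facts: Dict[str, Any]) -> bool: ...


class OpaClient:
    """Asks an Open Policy Agent server via its Data API: POST /v1/data/<rule path>."""

    def __init__(self, base_url: str = "http://localhost:8181", timeout: float = 2.0):
        self.base_url = base_url.rstrip("/")
        self.timeout = timeout

    def allowed(self, rule: str, facts: Dict[str, Any]) -> bool:
        req = urllib.request.Request(
            f"{self.base_url}/v1/data/{rule}",
            data=json.dumps({"input": facts}).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        # Network errors propagate; callers decide whether to fail closed.
        with urllib.request.urlopen(req, timeout=self.timeout) as resp:
            body = json.load(resp)
        # OPA omits "result" when the rule is undefined; treat that as a deny.
        return body.get("result") is True


class StaticPolicyClient:
    """In-memory stand-in with the same interface, for tests and local runs."""

    def __init__(self, rules: Dict[str, Callable[[Dict[str, Any]], bool]]):
        self.rules = rules

    def allowed(self, rule: str, facts: Dict[str, Any]) -> bool:
        check = self.rules.get(rule)
        return bool(check is not None and check(facts))
```

Tools and middleware depend only on `PolicyClient`, so a rule can move from a local stub to OPA (or a custom engine) without touching the chain.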

The worst choice? Embedding logic deep inside prompt instructions and hoping for consistent behavior.

Common Patterns and Anti-Patterns

Patterns That Work:

Routing via Classifiers

Use a lightweight classifier model to determine intent, category, or user type—and route accordingly.
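A minimal routing sketch: a classifier returns a label and a confidence, and plain code maps that to a destination, with a fallback when confidence is low. The keyword matcher is a hypothetical stand-in for a real lightweight model, and the destination names and 0.7 threshold are invented for illustration.

```python
from typing import Callable, NamedTuple


class Classification(NamedTuple):
    label: str
    confidence: float


def keyword_classifier(text: str) -> Classification:
    """Hypothetical stand-in for a small intent model; swap in a real one."""
    lowered = text.lower()
    if "refund" in lowered:
        return Classification("billing", 0.9)
    if "password" in lowered or "login" in lowered:
        return Classification("account", 0.85)
    return Classification("general", 0.4)


# Illustrative destinations; in an app these would select chains or handlers.
ROUTES = {"billing": "billing_chain", "account": "account_chain"}
MIN_CONFIDENCE = 0.7


def route(text: str, classify: Callable[[str], Classification] = keyword_classifier) -> str:
    result = classify(text)
    destination = ROUTES.get(result.label)
    # Unknown labels and low-confidence predictions fall back to a safe default.
    if destination is None or result.confidence < MIN_CONFIDENCE:
        return "human_review"
    return destination
```

Keeping the route table as data means adding or retiring a destination is a one-line, reviewable change.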

Guarded Tool Invocation

Wrap your LangChain tools with logic that checks permissions, thresholds, or states before execution.

Business Policy APIs

Externalize decision logic into REST endpoints. The LLM doesn’t need to know why a refund was denied, only that it was.
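A sketch of that separation, assuming a hypothetical policy API that returns an outcome, a reason code, and internal detail. The application keeps the full decision for auditing and hands the model only the outcome plus a pre-approved message; the message texts and reason codes are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyDecision:
    """What a (hypothetical) policy API returns to your application."""
    allowed: bool
    reason_code: str
    internal_detail: str  # e.g. scores or thresholds; never shown to the model


APPROVED_MESSAGE = "Your refund has been approved."
# Hypothetical mapping from reason codes to customer-safe wording.
DENIAL_MESSAGES = {"OUTSIDE_REFUND_WINDOW": "This order is outside the refund window."}
DEFAULT_DENIAL = "We can't process this refund automatically."


def llm_view(decision: PolicyDecision) -> dict:
    """Build the only part of the decision the LLM is allowed to see."""
    if decision.allowed:
        message = APPROVED_MESSAGE
    else:
        message = DENIAL_MESSAGES.get(decision.reason_code, DEFAULT_DENIAL)
    return {"refund_allowed": decision.allowed, "customer_message": message}
```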

Anti-Patterns:

Prompt-as-Logic

“If the user is a VIP, be more polite.” Sounds clever until the prompt balloons into an unreadable policy document.

Agent Obsession

Not every use case needs an agent. Agents with multiple tools and autonomous behavior are brittle unless carefully controlled.

Hardcoded Decisions in Python Functions

Yes, Python is easy to write. But it’s not easy to read when your business logic is scattered across handlers, callbacks, and Lambdas.

Strategies for Structuring Complex Logic

Here’s what experienced teams do:

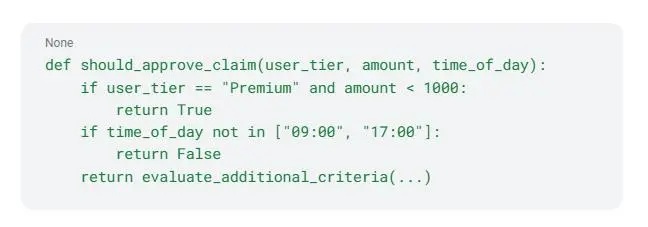

Modularize Business Rules

Break logic into small functions that each return an explicit decision, such as allow or deny plus a reason code, instead of acting directly.
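A minimal sketch of rules that return decisions rather than act, with hypothetical rule names, a dict-based context, and an invented 30-day refund window. Rules run in order and the first denial wins, so the returned reason code says exactly which rule blocked the request.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str


Rule = Callable[[dict], Decision]


def customer_in_good_standing(ctx: dict) -> Decision:
    if ctx.get("account_status") != "active":
        return Decision(False, "ACCOUNT_NOT_ACTIVE")
    return Decision(True, "ACCOUNT_OK")


def within_refund_window(ctx: dict) -> Decision:
    # A missing purchase date counts as outside the window.
    if ctx.get("days_since_purchase", float("inf")) > 30:
        return Decision(False, "OUTSIDE_REFUND_WINDOW")
    return Decision(True, "WINDOW_OK")


def evaluate(rules: Iterable[Rule], ctx: dict) -> Decision:
    """Run rules in order; the first denial wins, otherwise allow."""
    for rule in rules:
        decision = rule(ctx)
        if not decision.allowed:
            return decision
    return Decision(True, "ALL_RULES_PASSED")


REFUND_RULES: List[Rule] = [customer_in_good_standing, within_refund_window]
```

Missing context is treated as a denial, which keeps the default behavior conservative.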

Use Metadata, Not Just Text

Pass structured metadata between chain steps—things like user_role, intent, confidence_score. Don’t rely on LLMs to extract all context from raw text every time.
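A sketch of passing structured metadata between steps, using a frozen dataclass as the hand-off object. The field names mirror the ones mentioned above; the classifier stub, the 0.8 confidence cut-off, and the flag name are hypothetical.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class StepContext:
    """Structured metadata passed between chain steps alongside the raw text."""
    text: str
    user_role: str
    intent: str = "unknown"
    confidence_score: float = 0.0
    flags: frozenset = field(default_factory=frozenset)


def classify_step(ctx: StepContext) -> StepContext:
    # Hypothetical: a real step might call a model once and store the result here.
    if "refund" in ctx.text.lower():
        return replace(ctx, intent="refund", confidence_score=0.92)
    return replace(ctx, intent="other", confidence_score=0.3)


def policy_step(ctx: StepContext) -> StepContext:
    # Later steps read fields directly instead of asking an LLM to re-read the text.
    if ctx.intent == "refund" and ctx.user_role == "customer" and ctx.confidence_score >= 0.8:
        return replace(ctx, flags=ctx.flags | {"needs_refund_policy_check"})
    return ctx
```

Steps shaped like this (context in, context out) could, for example, be wrapped with `RunnableLambda` from `langchain_core.runnables` and composed with the `|` operator.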

Separate Control Flow from Content Generation

Let LLMs do what they’re best at: language, synthesis, and search. Let your own code decide which paths are allowed or blocked.
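A minimal sketch of that split: a plain function chooses the outcome, and the model (any `generate` callable that turns an instruction into text) only writes the wording. The 500 limit, the identity check, and the instruction strings are hypothetical.

```python
from typing import Callable, Tuple

# Hypothetical outcomes; code chooses one, the model only words it.
OUTCOME_INSTRUCTIONS = {
    "approve": "Tell the customer their request was approved. Keep it brief and friendly.",
    "escalate": "Tell the customer a specialist will follow up within one business day.",
    "deny": "Politely explain that the request cannot be completed automatically.",
}


def decide(amount: float, identity_verified: bool) -> str:
    """Control flow: deterministic and testable, no LLM involved."""
    if not identity_verified:
        return "deny"
    return "approve" if amount <= 500 else "escalate"


def respond(amount: float, identity_verified: bool,
            generate: Callable[[str], str]) -> Tuple[str, str]:
    """Content generation: the LLM phrases the outcome that code already chose."""
    outcome = decide(amount, identity_verified)
    return outcome, generate(OUTCOME_INSTRUCTIONS[outcome])
```

Because `decide` never touches the model, it can be tested exhaustively, and a prompt change cannot silently alter which outcome a customer gets.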

Case Example: Claims Processing Workflow in Insurance

Imagine a workflow where a user submits a claim via chat. Here’s how a LangChain-powered system might look with embedded logic in the right places:

1. Input Preprocessing

- Classify the claim type: vehicle, health, or property.

- Extract urgency score via LLM.

- Look up the user’s policy from a DB.

2. Routing Logic

- Health claims over $5K → human agent.

- Vehicle claims under $1K → auto-assessment LLM.

- Claims marked “urgent” → prioritized workflow.
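The step 2 routing rules translate almost line for line into code. A minimal sketch, assuming that “urgent” raises priority rather than choosing a different destination, and that claims no stated rule covers go to a hypothetical `standard_review` queue:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    claim_type: str  # "vehicle", "health", or "property"
    amount_usd: float
    urgent: bool = False


@dataclass(frozen=True)
class Route:
    destination: str
    priority: str
    reason: str


def route_claim(claim: Claim) -> Route:
    # In this sketch, urgency changes priority, not destination.
    priority = "high" if claim.urgent else "normal"
    if claim.claim_type == "health" and claim.amount_usd > 5_000:
        return Route("human_agent", priority, "HEALTH_OVER_5K")
    if claim.claim_type == "vehicle" and claim.amount_usd < 1_000:
        return Route("auto_assessment_llm", priority, "VEHICLE_UNDER_1K")
    # Hypothetical default for anything the stated rules do not cover.
    return Route("standard_review", priority, "DEFAULT")
```

The `reason` field doubles as the reason code that the audit trail in step 5 records.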

3. Tool Invocation

- Use tools like the fraud-check API, document parser, and policy validation tool.

- But wrap each tool: don’t run a fraud check if the claim is under a threshold, for example.

4. LLM Response Generation

- LLM generates a user-facing response.

- Business logic decides whether to offer settlement, escalate, or delay.

5. Audit Trail

- Every logic decision is logged with reason codes.

- The business team can replay the decision path without knowing LangChain internals.
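A sketch of the audit trail using the standard `logging` and `json` modules: each decision becomes one structured event with a reason code, and a small `replay` helper turns stored events back into a readable path. The event fields are assumptions, and where the log lines are stored (and how `replay` retrieves them) is left open.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Dict, List

audit_logger = logging.getLogger("claims.audit")


def record_decision(claim_id: str, step: str, outcome: str, reason_code: str, **facts) -> Dict:
    """Write one structured audit event per business decision and return it."""
    event = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "claim_id": claim_id,
        "step": step,
        "outcome": outcome,
        "reason_code": reason_code,
        "facts": facts,
    }
    audit_logger.info(json.dumps(event, sort_keys=True))
    return event


def replay(events: List[Dict], claim_id: str) -> List[str]:
    """Rebuild a readable decision path for one claim from stored events."""
    return [
        f"{e['step']}: {e['outcome']} ({e['reason_code']})"
        for e in events
        if e["claim_id"] == claim_id
    ]
```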

This division ensures that when rules change, only the logic functions or rules engine needs an update—not the entire chain.

Handling Change: Making Business Logic Maintainable

Enterprise logic changes. Frequently. Compliance rules tighten, pricing models shift, and new exceptions get added weekly.

If your logic is too deeply embedded in chains, here’s what happens:

- Developers become bottlenecks: Business analysts can’t modify logic directly.

- Regression bugs increase: Changing one rule breaks another downstream step.

- Reusability suffers: Logic written for one chain can’t be reused elsewhere.

Here’s how to mitigate it:

- Create policy abstraction layers—even if they’re just Python modules at first.

- Version your logic—use feature flags or environment-specific rules.

- Keep business logic testable outside LangChain—unit test your decisions in isolation.
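A minimal sketch tying the three points together, assuming a hypothetical `APP_ENV` environment variable and invented version labels and limits. The rules live in a plain Python module, each environment pins a version, and the decision function is unit-tested with the standard library's `unittest`, with no model or chain involved.

```python
import os
import unittest
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RefundRules:
    """A versioned policy module: plain data, no LangChain dependency."""
    version: str
    max_auto_refund: float
    refund_window_days: int


# Hypothetical rule versions; every change gets a new, named entry.
RULESETS = {
    "2024-01": RefundRules("2024-01", max_auto_refund=200.0, refund_window_days=30),
    "2024-06": RefundRules("2024-06", max_auto_refund=150.0, refund_window_days=14),
}
# Which version each environment runs, so staging can trial a change first.
ACTIVE_VERSION = {"production": "2024-01", "staging": "2024-06"}


def active_rules(env: Optional[str] = None) -> RefundRules:
    env = env or os.environ.get("APP_ENV", "production")
    return RULESETS[ACTIVE_VERSION[env]]


def can_auto_refund(amount: float, days_since_purchase: int, rules: RefundRules) -> bool:
    return amount <= rules.max_auto_refund and days_since_purchase <= rules.refund_window_days


class CanAutoRefundTest(unittest.TestCase):
    """Decisions are tested in isolation: no model, no chain, no network."""

    def test_production_allows_mid_size_refund(self):
        self.assertTrue(can_auto_refund(180, 20, RULESETS["2024-01"]))

    def test_new_version_tightens_limits(self):
        self.assertFalse(can_auto_refund(180, 20, RULESETS["2024-06"]))

    def test_staging_runs_new_version(self):
        self.assertEqual(active_rules("staging").version, "2024-06")
```

Run the tests with `python -m unittest`. Recording `rules.version` alongside each decision also ties every audit event to the rule set that produced it.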

Risks, Frictions, and Trade-offs

Let’s not pretend this is clean.

- Adding abstraction makes debugging harder. When something goes wrong, tracing logic through wrappers and external APIs is painful.

- Too much modularity kills velocity. For prototypes or internal tools, embedding logic directly may be acceptable.

- LLMs sometimes behave unpredictably anyway. So even perfectly architected logic isn’t a silver bullet if your prompt is vague or the model shifts behavior across versions.

But over the long term, treating business logic as a first-class concern—not an afterthought—yields dividends in maintainability, compliance, and scale.

Final Thoughts

LangChain is a powerful orchestration layer—but it doesn’t care what your business rules are. That’s your job. The real architectural maturity comes not from chaining tools, but from clearly separating how things are said from why decisions are made.

If your LangChain app is just “glue” between an LLM and a tool, it’s not a workflow; it’s a pipe. Embedding business logic gives it structure, purpose, and control. It’s what turns a clever demo into a production-ready system.