Key Takeaways

- Prompt layers are the cognitive infrastructure of multi-agent systems. They function like API contracts for language — defining not just data structure but meaning, intent, and epistemic confidence between autonomous agents.

- Communication design outweighs model intelligence. Most failures in multi-agent systems stem from misinterpretation, not lack of reasoning power. Structuring prompts with semantic, epistemic, and behavioral contracts keeps interactions coherent.

- Negotiation, not obedience, is the new paradigm. Agents must be able to question, reinterpret, or escalate unclear instructions. This shift—from command execution to collaborative discourse—defines the next evolution of intelligent automation.

- Prompt governance is essential for system reliability. Techniques like intent validation, escalation pathways, and prompt auditing help maintain linguistic integrity, preventing drift and looping behaviors across agent networks.

- The future of AI architecture lies in linguistic alignment. As models proliferate, success won’t depend on who has the largest model but on who builds the most fluent ecosystem—where agents truly understand one another across layers of abstraction.

In human conversations, the meaning often lives between the words. The same is now true for autonomous agents. As enterprises move from single-model automation to multi-agent ecosystems, the real challenge isn’t the intelligence of individual agents—it’s how they talk to each other. The connective tissue between them—the prompt layer—has become the new design frontier.

That might sound like semantics, but it’s actually infrastructure design. Just as APIs standardized digital service communication, prompt layers are becoming the lingua franca of cognitive systems. But unlike APIs, they’re not rigid schemas. They’re adaptive, interpretive, and often unpredictable.


The Problem with “Just Passing Context”

A common misconception in multi-agent systems is that communication is simply about sharing the same context window. Pass the same JSON payload, include a goal statement, and voilà—agents collaborate.

Except they don’t.

In reality, each agent interprets that context differently based on its own architecture, model base, or function. A retrieval agent reading an analytics report doesn’t “think” the same way a negotiation agent does. Without a well-designed prompt layer—one that frames semantics, intent, and authority—cross-agent communication starts to resemble office chatter without a meeting agenda.

Consider a workflow where:

- A data summarizer agent condenses transaction data.

- A risk assessor agent detects anomalies.

- A decision recommender agent suggests actions.

If the summarizer’s output is verbose or underspecified, the risk assessor’s reasoning derails. The recommender then misinterprets results as noise. The system hasn’t failed technically—it’s failed linguistically.

This is why prompt layers matter: they act as translation contracts, a form of cognitive middleware that ensures the “what” and “why” survive the relay.

Prompt Layers: The Cognitive Equivalent of an API Contract

When developers talk about APIs, they think structure: input, output, and validation. Prompt layers follow the same logic but introduce new variables—intent, tone, and epistemic confidence (how sure an agent is about its own output).

A well-designed prompt layer for inter-agent communication usually includes three implicit contracts:

1. Semantic Contract—Defines the meaning of information across agents.

- What does “priority” mean to a task manager vs. a planner agent?

- Should “high confidence” map to numerical probability or linguistic certainty?

2. Epistemic Contract—Captures what the sender believes to be true and how that confidence should be interpreted.

- For example, if the data-fetching agent is only 70% sure about a trend, the strategy agent shouldn’t treat it as deterministic.

3. Behavioral Contract—Encodes how agents should react to ambiguity.

- Should an agent re-query, self-correct, or escalate the uncertainty to a human in the loop?

This tri-layered contract forms the basis of prompt governance between agents. It’s part ontology, part psychology.
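To make the three contracts concrete, here is a minimal Python sketch of a message envelope that carries all of them at once. The names (`AgentMessage`, `OnAmbiguity`, `next_action`), the 0.75 cutoff, and the sample claim are illustrative assumptions, not part of any established framework.

```python
from dataclasses import dataclass
from enum import Enum


class OnAmbiguity(Enum):
    """Behavioral contract: what the receiver does when input is unclear."""
    REQUERY = "re-query"
    SELF_CORRECT = "self-correct"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class AgentMessage:
    # Semantic contract: terms used in the claim are defined explicitly.
    claim: str
    glossary: dict[str, str]
    # Epistemic contract: the sender's confidence as a probability in [0, 1].
    confidence: float
    # Behavioral contract: the agreed reaction to low-confidence input.
    on_ambiguity: OnAmbiguity


def next_action(msg: AgentMessage, min_confidence: float = 0.75) -> str:
    """Return 'act' when the claim is confident enough, else the agreed fallback."""
    if not 0.0 <= msg.confidence <= 1.0:
        raise ValueError("confidence must be a probability between 0 and 1")
    if msg.confidence >= min_confidence:
        return "act"
    return msg.on_ambiguity.value


# Made-up example: a data-fetching agent that is only 70% sure about a trend.
trend = AgentMessage(
    claim="Demand is trending upward",
    glossary={"trending upward": "positive slope over the observed window"},
    confidence=0.70,
    on_ambiguity=OnAmbiguity.REQUERY,
)
print(next_action(trend))  # re-query
```

The point of the sketch is that the receiver never has to guess: the meaning, the certainty, and the fallback all arrive in the same envelope.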

Designing the Prompt Layer: From Instruction to Negotiation

Traditional prompt engineering focuses on optimizing single-agent outputs: “Do this task accurately and fast.” Inter-agent prompt design, however, must accommodate negotiation, a controlled way of surfacing and resolving misinterpretation. Agents must be able to ask for clarification, reject faulty inputs, or reinterpret requests based on shared goals.

In practice, this means prompt layers evolve through several design phases:

1. Defining Common Ontologies

Before agents can “talk,” they must agree on what words mean. You don’t want the planning agent to interpret “risk threshold” as a financial metric while the compliance agent reads it as a regulatory score.

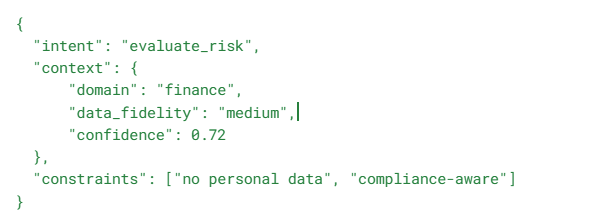

Real-world implementations often use a shared schema—something like:
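A minimal sketch of what such a shared schema might look like, written as a Python dataclass. The field names, the [0, 1] scale, and the choice to pin “risk threshold” to a regulatory score are all assumptions made for illustration.

```python
from dataclasses import dataclass, asdict
import json


@dataclass(frozen=True)
class RiskAssessment:
    # Hypothetical shared fields; every agent reads them with the same meaning.
    entity_id: str
    risk_threshold: float  # agreed definition: regulatory score in [0, 1]
    risk_score: float      # same scale as risk_threshold
    confidence: float      # sender's probability that risk_score is correct

    def __post_init__(self) -> None:
        # Reject values outside the agreed scale before they reach another agent.
        for name in ("risk_threshold", "risk_score", "confidence"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [0, 1], got {value}")

    def to_json(self) -> str:
        """Serialize to the payload that is passed between agents."""
        return json.dumps(asdict(self), sort_keys=True)


assessment = RiskAssessment("acct-001", risk_threshold=0.6, risk_score=0.72, confidence=0.8)
print(assessment.to_json())
```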

Yet, the schema alone isn’t enough. The prompt layer wraps this data with meta-language cues, e.g., “You are a compliance-aware agent interpreting risk assessments. If confidence is below 0.75, request additional validation.” That phrasing teaches the agent how to interpret the field, not just what the field is.
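One way to picture that wrapping step is the short Python sketch below. The function names and the payload keys are hypothetical; only the cue text comes from the example above.

```python
import json

COMPLIANCE_CUE = (
    "You are a compliance-aware agent interpreting risk assessments. "
    "If confidence is below 0.75, request additional validation."
)


def build_prompt(payload: dict) -> str:
    """Prefix the structured payload with the cue that explains how to read it."""
    return f"{COMPLIANCE_CUE}\n\nAssessment:\n{json.dumps(payload, sort_keys=True)}"


def needs_validation(payload: dict, cutoff: float = 0.75) -> bool:
    """Deterministic mirror of the cue, useful for checking the agent's behavior."""
    return payload["confidence"] < cutoff


payload = {"risk_score": 0.72, "confidence": 0.70}  # made-up values
print(build_prompt(payload))
print(needs_validation(payload))  # True
```

Keeping a deterministic twin of the rule next to the natural-language cue gives you something to test the agent against when its behavior starts to wander.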

2. Layered Prompt Structuring

Instead of single monolithic prompts, multi-agent systems perform better when prompts are layered by function:

- Task Layer: Specifies the operational goal.

- Context Layer: Embeds relevant background or shared memory.

- Coordination Layer: Defines inter-agent roles, tone, and escalation rules.

For example:

“As the synthesis agent, summarize insights from all available data analysts, maintaining traceability and confidence attribution.”

The prompt explicitly positions the agent in the communication graph. It’s not merely receiving instructions—it’s entering a conversation.
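A minimal sketch of the three layers as separate, composable pieces in Python. The `LayeredPrompt` class, the bracketed section labels, and the sample context and coordination text are assumptions for illustration; only the task sentence comes from the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayeredPrompt:
    task: str          # Task Layer: the operational goal
    context: str       # Context Layer: background or shared memory
    coordination: str  # Coordination Layer: roles, tone, escalation rules

    def render(self) -> str:
        """Join the layers under labelled headers so each stays separately editable."""
        sections = [
            ("COORDINATION", self.coordination),
            ("CONTEXT", self.context),
            ("TASK", self.task),
        ]
        return "\n\n".join(f"[{label}]\n{body}" for label, body in sections)


prompt = LayeredPrompt(
    task=(
        "As the synthesis agent, summarize insights from all available data "
        "analysts, maintaining traceability and confidence attribution."
    ),
    context="Analyst reports for the current review cycle are attached below.",
    coordination="You report to the controller agent. Escalate conflicts to it.",
)
print(prompt.render())
```

Because each layer is its own field, the coordination rules can change without anyone touching the task wording.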

3. Adaptive Prompt Mutation

Static prompts are brittle. In agent networks, you need dynamic prompt mutation based on situational context. For instance, if a summarizer agent keeps sending verbose outputs, the controller agent can issue a meta-prompt like:

“Shorten summaries to <100 tokens. Prioritize anomalies first.”

Over time, the network stabilizes—a kind of linguistic homeostasis.

This is where reinforcement comes in: prompt weighting, historical scoring, and even embedding similarity models can help maintain prompt coherence across iterations.
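The controller behavior described above can be sketched in a few lines of Python. This is a simplified illustration: `rough_token_count` is a crude whitespace-based stand-in for a real tokenizer, and the `tolerance` parameter is an assumed knob, not something the scenario specifies.

```python
META_PROMPT = "Shorten summaries to <100 tokens. Prioritize anomalies first."


def rough_token_count(text: str) -> int:
    """Crude stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


def mutate_prompt(
    base_prompt: str,
    recent_summaries: list[str],
    limit: int = 100,
    tolerance: int = 2,
) -> str:
    """Append the meta-prompt once more than `tolerance` recent summaries hit the limit."""
    too_long = sum(rough_token_count(s) >= limit for s in recent_summaries)
    if too_long > tolerance and META_PROMPT not in base_prompt:
        return f"{base_prompt}\n\n{META_PROMPT}"
    return base_prompt  # already mutated, or verbosity is within tolerance


verbose = "word " * 150
base = "Summarize the transaction data."
print(mutate_prompt(base, [verbose, verbose, verbose]))
```

The membership check keeps the mutation idempotent, so a controller that runs every cycle does not stack the same correction over and over.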

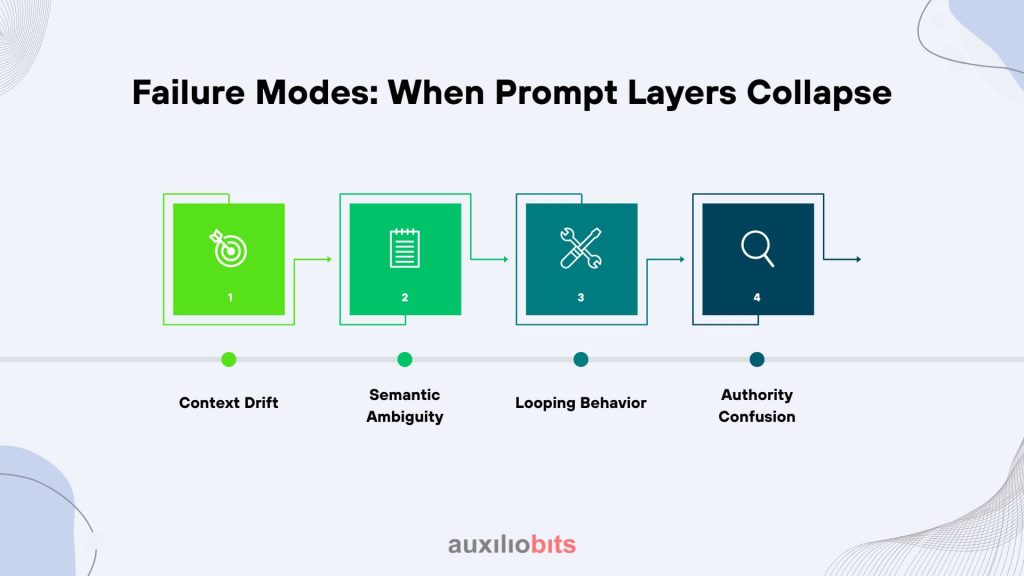

Failure Modes: When Prompt Layers Collapse

Most multi-agent breakdowns stem not from computation errors but from interpretation drift.

Some of the most common failure patterns include:

- Context Drift: Agents misinterpret temporal or situational context after long message chains.

- Semantic Ambiguity: Different models assign different meanings to identical phrases.

- Looping Behavior: Two agents repeatedly correct each other without resolution (“I think you meant…” “No, I meant…”).

- Authority Confusion: Agents override or contradict one another because the prompt doesn’t specify hierarchy or role scope.
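Looping behavior is the easiest of these to catch mechanically. The Python sketch below flags a loop when two agents each repeat their own previous turn almost verbatim. The `is_looping` helper, the history format, and the 0.9 similarity threshold are assumptions; a production system would likely use something richer than string similarity.

```python
from difflib import SequenceMatcher


def is_looping(history: list[tuple[str, str]], threshold: float = 0.9) -> bool:
    """Flag a loop when both of the last two speakers repeat their previous turn.

    `history` is a list of (agent_name, message) pairs, oldest first.
    """
    if len(history) < 4:
        return False
    (a1, m1), (b1, m2), (a2, m3), (b2, m4) = history[-4:]
    if a1 != a2 or b1 != b2 or a1 == b1:
        return False  # not a strict two-agent back-and-forth

    def same(x: str, y: str) -> bool:
        return SequenceMatcher(None, x, y).ratio() >= threshold

    return same(m1, m3) and same(m2, m4)


exchange = [
    ("planner", "I think you meant 42 units."),
    ("pricer", "No, I meant 40 units."),
    ("planner", "I think you meant 42 units."),
    ("pricer", "No, I meant 40 units."),
]
print(is_looping(exchange))  # True
```

Once a loop is flagged, the coordination rules decide what happens next, for example handing the disagreement to a meta-agent or a human.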

Take a real-world example: a retail pricing optimizer where one agent manages inventory projections and another adjusts discount models. Without clear epistemic contracts, both might overcompensate—the inventory agent increases stock due to “predicted demand,” while the pricing agent lowers prices to “stimulate sales.” The result: overstocking and margin loss.

In a case like this, the fix is not better models; it is better prompt governance.

Prompt Governance: Keeping Agents Civil

Prompt governance is emerging as a critical discipline in AI architecture. It ensures that the linguistic glue between agents doesn’t dissolve over time.

Some practical design strategies:

- Message Typing: Define whether a message is declarative (fact), interrogative (query), or imperative (instruction). This helps agents decide response behavior.

- Intent Validation: Require agents to restate received intent before acting. A simple “intent echo” step dramatically reduces misinterpretation.

- Escalation Pathways: When two agents disagree, define who resolves it—another meta-agent or a human-in-the-loop.

- Prompt Auditing: Log and review prompts like transaction traces. Over time, patterns of confusion or misalignment become apparent.

- Fallback Defaults: If an agent receives ambiguous input, it should defer to a safe behavior rather than proceed with uncertainty.

In essence, governance converts communication chaos into predictable collaboration.
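Three of these strategies (message typing, intent validation, and fallback defaults) fit naturally into a single dispatch function. The sketch below is one hypothetical arrangement in Python; the action strings and the `decide_response` name are invented for illustration.

```python
from enum import Enum
from typing import Optional


class MessageType(Enum):
    DECLARATIVE = "fact"
    INTERROGATIVE = "query"
    IMPERATIVE = "instruction"


def decide_response(msg_type: Optional[MessageType], echo_confirmed: bool) -> str:
    """Pick a response behavior from the message type and the intent-echo result.

    `echo_confirmed` is True when the sender has confirmed the receiver's
    restatement of the intent.
    """
    if msg_type is None:
        return "hold"        # fallback default: untyped input is treated as ambiguous
    if msg_type is MessageType.DECLARATIVE:
        return "record"      # facts are stored, not acted on
    if msg_type is MessageType.INTERROGATIVE:
        return "answer"
    # Imperatives are executed only after the intent echo has been confirmed.
    return "execute" if echo_confirmed else "escalate"


print(decide_response(MessageType.IMPERATIVE, echo_confirmed=False))  # escalate
```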

Real-World Parallels: Lessons from Microservices and Human Teams

Interestingly, much of what’s emerging in agentic communication mirrors past lessons from distributed computing. Remember when microservices first took off? Everyone loved the modularity—until they realized managing service dependencies, schema versions, and error handling was the real problem.

Prompt layers are the new interface contract—only this time, the language is natural, not structured.

Similarly, human teams face analogous coordination challenges. Think of a newsroom: editors, reporters, photographers, and fact-checkers. They don’t follow a single rigid script. They use implicit coordination—tone, expectation, and shared mental models. When a journalist says, “We’re going deep on this,” everyone understands the shift in priority, even without an explicit command. Agents, ideally, should develop similar shared heuristics.

That’s why some enterprises are experimenting with emergent prompt ontologies: instead of handcrafting every prompt rule, they allow agents to develop shared phrasing through reinforcement. It’s risky—drift can occur—but it mimics how language evolves in human groups.

Metrics and Feedback Loops

You can’t improve what you can’t measure, even in linguistic systems. Prompt-layer performance metrics are still nascent, but some early-stage heuristics show promise:

- Interpretation Consistency Score (ICS): Measures how similarly multiple agents interpret the same input.

- Prompt Compression Ratio: Evaluates how much prompt verbosity can be reduced without loss of accuracy.

- Response Entropy: Quantifies variability in agent replies—too high means chaos, too low means stagnation.

- Escalation Frequency: Tracks how often agents require human resolution. Ideally, this drops as prompt layers mature.

These aren’t standardized yet, but forward-looking teams (particularly in automation-heavy enterprises) are already experimenting with such metrics to evaluate communication quality, not just output accuracy.
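Since none of these metrics has a standard definition, the Python sketch below picks one plausible formula for three of them: Shannon entropy over distinct replies, pairwise agreement for interpretation consistency, and a simple ratio for escalation frequency. Treat the formulas and function names as assumptions to adapt, not as established definitions.

```python
from collections import Counter
from itertools import combinations
from math import log2


def response_entropy(replies: list[str]) -> float:
    """Shannon entropy (in bits) of the distribution of distinct replies."""
    counts = Counter(replies)
    total = len(replies)
    return sum(-(c / total) * log2(c / total) for c in counts.values())


def interpretation_consistency(labels: list[str]) -> float:
    """Fraction of agent pairs that gave the same interpretation label."""
    pairs = list(combinations(labels, 2))
    if not pairs:
        return 1.0  # zero or one agent cannot disagree
    return sum(a == b for a, b in pairs) / len(pairs)


def escalation_frequency(escalated: int, total_tasks: int) -> float:
    """Share of tasks that needed human resolution."""
    return escalated / total_tasks if total_tasks else 0.0


print(response_entropy(["approve", "reject"]))                   # 1.0
print(interpretation_consistency(["minor", "minor", "major"]))   # one of three pairs agrees
print(escalation_frequency(3, 60))                               # 0.05
```

Exact string matching is the bluntest possible notion of “same interpretation”; swapping in an embedding-based similarity would be a natural refinement.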

Case Glimpse: Financial Reconciliation Network

A Tier-1 bank recently tested a multi-agent network for daily reconciliation—transactions from multiple systems, currencies, and accounts. The architecture involved a fetcher, validator, anomaly detector, and report generator.

Initial tests failed miserably: false-positive anomalies, misaligned timestamps, contradictory logs. Engineers realized the problem wasn’t data—it was dialogue. The agents were misinterpreting “discrepancy severity” levels.

The engineers then redesigned the prompt layer with:

- Explicit epistemic framing (“treat any timestamp mismatch below 5 minutes as minor unless over $10,000 variance”)

- Context bundling (“assume batch consistency across region and day”)

- Meta-role prompts (“validator outputs must be phrased as confidence statements, not absolute decisions”)

The reconciliation accuracy jumped from 78% to 96%. That’s not a modeling improvement—it’s linguistic architecture paying off.
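The epistemic framing rule quoted above is precise enough to mirror in code, which gives the team a deterministic reference to compare agent judgments against. The Python sketch below is an illustration only: the function name, the `"needs_review"` label for everything the rule does not cover, and the confidence-statement wording are assumptions.

```python
from datetime import timedelta
from decimal import Decimal

MINOR_GAP = timedelta(minutes=5)
LARGE_VARIANCE = Decimal("10000")  # US dollars


def discrepancy_severity(timestamp_gap: timedelta, variance_usd: Decimal) -> str:
    """Apply the quoted rule: gaps under 5 minutes are minor unless variance exceeds $10,000."""
    if abs(timestamp_gap) < MINOR_GAP and abs(variance_usd) <= LARGE_VARIANCE:
        return "minor"
    return "needs_review"  # assumed label; the quoted rule only defines the minor case


def as_confidence_statement(severity: str, confidence: float) -> str:
    """Phrase a validator output as a confidence statement rather than a verdict."""
    return f"Discrepancy is likely {severity} (confidence {confidence:.2f})."


severity = discrepancy_severity(timedelta(minutes=3), Decimal("250.00"))
print(as_confidence_statement(severity, 0.9))
```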

The Subtle Art of Prompt Pragmatics

There’s a quiet truth here: prompt layers are not about syntax—they’re about pragmatics, about how meaning shifts in context. An “instruction” to one agent might function as a “suggestion” to another, depending on who’s speaking.

Designers must think like linguists and systems engineers simultaneously. Too much rigidity, and agents lose adaptability; too little, and communication entropy takes over.

Some subtle but useful techniques:

- Use meta-prompts for tone calibration (“interpret prior agent’s output charitably unless conflicting evidence appears”).

- Embed intent mirrors (“If your output contradicts the previous agent’s claim, explain why before proceeding”).

- Maintain prompt histories as shared memory so agents develop a sense of conversational continuity.

These design decisions create systems that “understand” each other not because they share the same model, but because they share the same communicative culture.

Closing Reflection

The future of agentic systems won’t be determined by model size or API integrations—it’ll hinge on how well we design the conversations between machines.

Prompt layers are, in many ways, the new runtime. They determine cooperation, alignment, and even trust.

We’re still early. There’s no ISO standard for prompt layering, no universal grammar for agent talk. But just as TCP/IP turned chaotic networks into the internet, a well-designed prompt protocol could turn autonomous silos into coherent digital societies.

And maybe that’s the bigger story here: the next revolution in AI infrastructure won’t be about intelligence at all—it’ll be about understanding. Not between humans and machines, but among the machines themselves.