Key Takeaways

- VoiceFlow is ideal for rapid prototyping, but once you hit dynamic logic and multi-system workflows, the flow-based architecture starts to buckle.

- Twilio provides the most reliable backbone and full integration freedom, but you need internal teams ready to build and maintain the actual conversational layer.

- GPT-centric custom architectures offer unmatched reasoning capability, yet introduce operational and safety risks that require AI-product-grade governance.

- Failure modes differ dramatically across these models: scripted flows fail predictably; LLM-based agents fail creatively (and therefore more dangerously).

- In real-world deployments, successful teams often blend approaches, using VoiceFlow for non-LLM fallback flows, Twilio for infrastructure, and GPT for reasoning when true autonomy is required.

Voice interactions have been steadily creeping into enterprise workflows. Not flashy “Jarvis”-style experiences, but practical, business-grade use cases: password-reset assistants for internal IT teams, guided voice onboarding in insurance, and conversational IVRs that answer with context rather than canned logic chains. The moment an organization seriously evaluates moving from basic IVR to intelligent voice agents, three pathways immediately emerge: “Let’s build on VoiceFlow,” “Let’s leverage Twilio’s Programmable Voice stack,” or “Let’s just engineer a bespoke GPT-based architecture from the ground up.”

The problem is that these three options aren’t apples-to-apples. They sit across different abstraction layers and build philosophies. So the only way to compare them properly is to go under the hood, break down how they behave in real deployments, and highlight not only what they can do in a demo environment but also what happens when the use case starts scaling across countries, languages, and backend workflows.


Where Each Option Sits in the Stack

Let’s not pretend that all three platforms are equivalent “voice agent” solutions:

| Solution | Primary Role | Abstraction Level | Typical Starting Point |
| --- | --- | --- | --- |
| VoiceFlow | No-code/low-code conversation design + orchestration layer | High-level | Product or CX teams prototyping/tuning flows |
| Twilio | Programmable telephony and communication APIs | Mid/low-level | Engineering-driven custom integration |
| Custom GPT (LLM-first) Architecture | Fully bespoke; application logic embedded in prompts or orchestrated via tools | Low-level | R&D / advanced automation initiatives |

Right away, this tells us something: the choice isn’t simply “which one is more powerful,” but “how much control vs. velocity do we need today, and how much are we willing to own tomorrow?”

VoiceFlow: Rapid Flow Design, Limited Depth

VoiceFlow has become the go-to for teams that want to show a working voice agent in two weeks. Its drag-and-drop flows, quick slot-filling logic, and fine-grained control over wording are extremely useful for UX teams and product owners. But reality hits once you need:

- Dynamic context switching (e.g., a mid-call escalation that requires pulling two parameters from the CRM that the user never provided)

- Complex business rules driven by third-party systems

- Non-linear conversations where LLMs need to “decide” next actions, not just follow dialog trees

Yes, they’ve recently added an “LLM block” (basically an OpenAI call wedged inside a node), but that pushes the complexity down the line. You still end up building a tightly coupled dialog tree that, six months later, has the same maintainability issues as any scripted IVR system—just slightly prettier.
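
To make that coupling concrete, here is a minimal Python sketch of a flow-style dialog tree with an LLM classification node wedged in. It is not VoiceFlow's export format or API; the tree, node names, and the keyword-based `classify_with_llm` stub (standing in for a real model call) are hypothetical. The point: the model can recognize an intent nobody authored, but the tree can only follow edges that exist.

```python
from typing import Callable

# Hypothetical dialog tree: each node maps an intent label to the next node.
# This mirrors the general shape of flow-based designs, not any vendor's schema.
DIALOG_TREE: dict[str, dict[str, str]] = {
    "start": {"reset_password": "verify_identity", "billing": "billing_menu"},
    "verify_identity": {"ok": "send_reset_link", "fail": "handoff_agent"},
}
FALLBACK_NODE = "handoff_agent"


def classify_with_llm(utterance: str) -> str:
    """Stand-in for the 'LLM block'; a real node would call a model API here."""
    text = utterance.lower()
    if "password" in text:
        return "reset_password"
    if "invoice" in text or "bill" in text:
        return "billing"
    if "address" in text:
        return "change_address"  # the model recognizes this intent...
    return "unknown"


def next_node(current: str, utterance: str,
              classify: Callable[[str], str] = classify_with_llm) -> str:
    label = classify(utterance)
    # ...but the tree only follows edges someone authored, so any label without
    # a matching edge (like "change_address") drops to the fallback node.
    return DIALOG_TREE.get(current, {}).get(label, FALLBACK_NODE)


print(next_node("start", "I forgot my password"))         # verify_identity
print(next_node("start", "I need to update my address"))  # handoff_agent
```

Supporting the new intent means adding a node and an edge by hand, which is exactly the maintenance burden described above.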

Another subtle detail: VoiceFlow expects humans to actively maintain and version designs. That’s fine for early-stage products, but quickly becomes fragile for high-volume service operations (think: telecoms, digital banks, large-scale logistics).

Note: VoiceFlow is fantastic for getting stakeholder buy-in and validating a conversational idea. But you don’t want to scale a mission-critical voice agent purely on it unless you have a dedicated “voice experience operations” team.

Twilio: Power and Bare Metal

Twilio sits in an entirely different mental model. It doesn’t care about conversation design. It gives you an incredibly robust programmable telephony backbone: voice, routing, SIP trunks, recording, analytics, encryption, and carrier management. Twilio will let you place and receive millions of calls reliably—and that’s already 60% of the battle.

However, the actual intelligence must come from whatever you plug into it:

- Amazon Lex? Fine.

- OpenAI GPT with your decision orchestrator? Also fine.

- Hard-coded rules driving an IVR tree? Still valid.
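
Here is a minimal sketch of that separation, assuming a Python webhook service and using only the standard library instead of Twilio's helper SDK. The TwiML verbs (`<Response>`, `<Gather>`, `<Say>`, `<Dial>`) and the `CallSid`/`SpeechResult` request parameters are Twilio's; the `ConversationBrain` interface, `RulesBrain`, the `/voice/turn` URL, and the phone number are hypothetical. Swapping rules for Lex or an LLM orchestrator means writing another class with the same `decide` method; the telephony side doesn't change.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class Decision:
    reply: str
    transfer_to: Optional[str] = None  # phone number to hand the call to, if any


class ConversationBrain(Protocol):
    """Whatever supplies the intelligence: rules, Amazon Lex, an LLM orchestrator..."""
    def decide(self, call_sid: str, utterance: str) -> Decision: ...


class RulesBrain:
    """Hard-coded IVR-style rules; an LLM-backed class could expose the same method."""
    def decide(self, call_sid: str, utterance: str) -> Decision:
        if "agent" in utterance.lower():
            return Decision("Connecting you to a colleague.", transfer_to="+15555550100")
        return Decision(f"You said: {utterance}. What else can I help with?")


def render_twiml(decision: Decision, action_url: str = "/voice/turn") -> str:
    """Turn the brain's decision into TwiML for Twilio to execute on the live call."""
    response = ET.Element("Response")
    if decision.transfer_to:
        ET.SubElement(response, "Say").text = decision.reply
        ET.SubElement(response, "Dial").text = decision.transfer_to
    else:
        # Nesting <Say> in <Gather> lets the caller answer (or barge in); Twilio
        # then POSTs the transcript to action_url as the SpeechResult parameter.
        gather = ET.SubElement(response, "Gather", input="speech",
                               action=action_url, method="POST")
        ET.SubElement(gather, "Say").text = decision.reply
    return ET.tostring(response, encoding="unicode")


def handle_turn(brain: ConversationBrain, form: dict[str, str]) -> str:
    """Webhook form fields in, TwiML out; web-framework plumbing is omitted."""
    decision = brain.decide(form.get("CallSid", ""), form.get("SpeechResult", ""))
    return render_twiml(decision)


print(handle_turn(RulesBrain(), {"CallSid": "CA123", "SpeechResult": "check my balance"}))
```

In a real deployment, `handle_turn` would sit behind an HTTP endpoint (Flask, FastAPI, or similar) that Twilio calls on each turn, returning the XML with an XML content type.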

So with Twilio, an enterprise effectively builds its voice agent platform on top of a stable communication layer. That means:

- Full flexibility in orchestration logic

- Ability to plug in any LLM / multi-agent framework

- Granular control over call routing, data privacy, call-recording regulations, etc.

- Clean integration into existing backend systems (through APIs or service buses)

…but the trade-off is time. Building the conversation stack, LLM guardrails, testing interfaces, monitoring analytics—all of that becomes your problem.

When does Twilio win?

- When the organization already has mature engineering teams that want to embed voice interactions inside existing services.

- When regulatory and compliance constraints (e.g., GDPR, HIPAA) require full control of data flow.

- When the voice agent must trigger sophisticated workflows (think multi-step ticket creation, role-based access, and external identity verification).
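
What “full control of data flow” can mean in practice: because your own service sits between Twilio and any model or analytics vendor, you can scrub transcripts before they leave. A minimal sketch, assuming regex-based masking is acceptable for illustration; the patterns and labels are hypothetical and far from complete.

```python
import re

# Illustrative patterns only; real redaction needs much broader coverage
# (names, addresses, account numbers) and is often a dedicated service.
PII_PATTERNS = {
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13-16 digit card-like numbers
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),       # US SSN format
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def redact(transcript: str) -> str:
    """Mask sensitive spans before a transcript leaves your own infrastructure
    (e.g. before it is sent to a third-party LLM or written to analytics logs)."""
    for label, pattern in PII_PATTERNS.items():
        transcript = pattern.sub(f"[{label}]", transcript)
    return transcript


print(redact("Card 4111 1111 1111 1111, email jane.doe@example.com"))
# Card [CARD], email [EMAIL]
```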

One mid-size insurer even built a Twilio + custom GPT-4 orchestration layer entirely in-house to handle FNOL (first notice of loss) claims intake. Their logic needed to fetch policy limits in real time, flag possible fraud from sentiment and lexical cues, and then decide whether to route the call to an adjuster or automatically ask additional clarifying questions. That sort of agility is nearly impossible with prepackaged design tools.
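
A heavily simplified sketch of that kind of routing decision might look like the following. It is not the insurer's implementation: the fields, red-flag phrases, weights, and threshold are hypothetical placeholders, and sentiment is assumed to come from whatever model already scores the call.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    ADJUSTER = "route_to_adjuster"
    CLARIFY = "ask_clarifying_question"
    CONTINUE = "continue_intake"


@dataclass
class ClaimTurn:
    claimed_amount: float
    policy_limit: float   # fetched in real time from the policy system
    sentiment: float      # -1.0 (negative) to 1.0 (positive), from any sentiment model
    transcript: str
    missing_fields: list[str] = field(default_factory=list)  # FNOL fields still unknown


# Hypothetical lexical cues and weights; a production fraud model would be
# trained and validated, not hand-written like this.
RED_FLAG_PHRASES = ("cash only", "no police report", "already repaired")


def fraud_score(turn: ClaimTurn) -> float:
    text = turn.transcript.lower()
    score = 0.3 * sum(phrase in text for phrase in RED_FLAG_PHRASES)
    if turn.claimed_amount > 0.9 * turn.policy_limit:
        score += 0.3  # claims near the limit get extra scrutiny
    if turn.sentiment > 0.5:
        score += 0.1  # placeholder: unusually upbeat tone for a loss report
    return min(score, 1.0)


def decide(turn: ClaimTurn, fraud_threshold: float = 0.5) -> Route:
    if turn.claimed_amount > turn.policy_limit or fraud_score(turn) >= fraud_threshold:
        return Route.ADJUSTER  # a human should own this call
    if turn.missing_fields:
        return Route.CLARIFY   # let the agent ask for the missing details
    return Route.CONTINUE


print(decide(ClaimTurn(2000, 10000, -0.4, "hit a pole", ["date_of_loss"])))  # Route.CLARIFY
```

In a GPT-based design, the model would typically gather the transcript details and phrase the clarifying question, while a deterministic function like this owns the routing decision.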

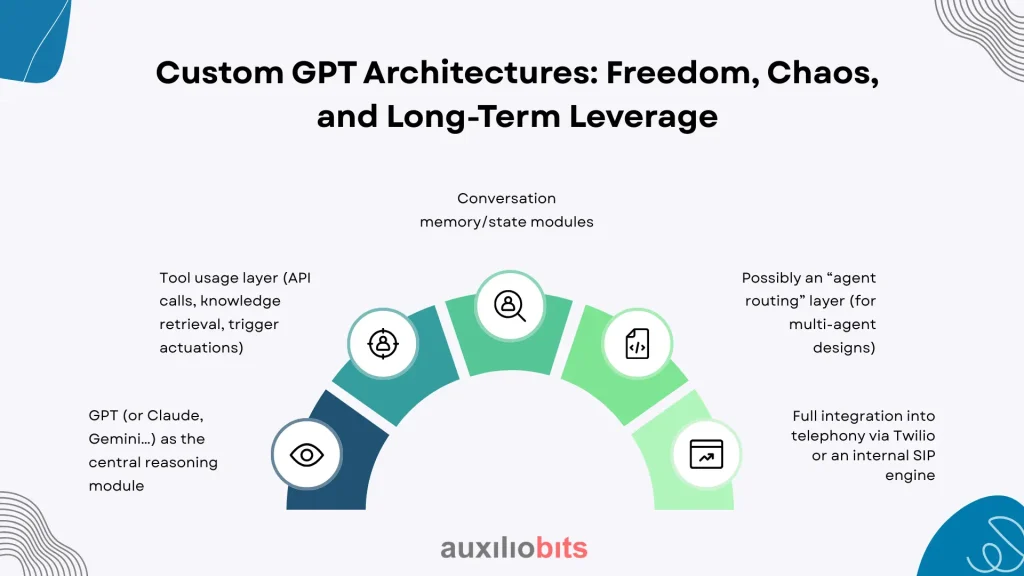

Custom GPT Architectures: Freedom, Chaos, and Long-Term Leverage

This third path tends to appear when a CTO says, “We want the voice agent to not only read from the policy system but also reason over it in real time.” You end up with a bespoke architecture inspired by agentic systems:

- GPT (or Claude, Gemini…) as the central reasoning module

- Tool usage layer (API calls, knowledge retrieval, action triggers)

- Conversation memory/state modules

- Possibly an “agent routing” layer (for multi-agent designs)

- Full integration into telephony via Twilio or an internal SIP engine
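
The layers above can be sketched as a bounded tool-calling loop. This is a minimal illustration under stated assumptions: `fake_llm` stands in for GPT/Claude/Gemini and returns a made-up dict shape rather than any vendor's tool-calling format, `get_policy` is a hypothetical backend tool, and the telephony layer is assumed to call `run_turn` once per caller utterance.

```python
import json
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Memory:
    """Per-call conversation state (a real system might key this by call ID)."""
    turns: list[dict] = field(default_factory=list)


def get_policy(policy_id: str) -> dict:
    """Hypothetical tool; in practice an API call to the policy system."""
    return {"policy_id": policy_id, "status": "active", "deductible": 500}


TOOLS: dict[str, Callable[..., dict]] = {"get_policy": get_policy}


def fake_llm(memory: Memory) -> dict:
    """Stand-in for the reasoning model: returns either a tool request or a reply.
    The dict shape is an assumption, not any vendor's tool-calling format."""
    last = memory.turns[-1]
    if last["role"] == "user":
        return {"tool": "get_policy", "args": {"policy_id": "P-42"}}
    data = json.loads(last["content"])
    return {"reply": f"Your policy is {data['status']} with a ${data['deductible']} deductible."}


def run_turn(memory: Memory, user_text: str,
             llm: Callable[[Memory], dict] = fake_llm, max_steps: int = 4) -> str:
    memory.turns.append({"role": "user", "content": user_text})
    for _ in range(max_steps):  # bounded, so a confused model cannot loop forever
        action = llm(memory)
        if "reply" in action:
            memory.turns.append({"role": "assistant", "content": action["reply"]})
            return action["reply"]
        tool = TOOLS.get(action.get("tool", ""))
        if tool is None:
            break  # unknown tool requested: stop rather than guess
        result = tool(**action.get("args", {}))
        memory.turns.append({"role": "tool", "content": json.dumps(result)})
    return "Let me connect you with a colleague."  # escalation fallback


print(run_turn(Memory(), "What's my deductible?"))
```

The step bound and the unknown-tool exit are the simplest forms of the fallback behavior that this architecture lets you control explicitly.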

Why go through all that pain? Because it gives you granular control over reasoning, error handling, fallback behavior, and even emergent behavior tuning.

It also means:

- Conversation flows are not “pre-authored” but dynamically generated

- The system can adapt to unseen intents without re-authoring flows.

- Business logic can be codified in prompts, or even in an external policy engine.
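
Keeping business rules in an external policy check, rather than only in the prompt, means the model can propose an action but deterministic code decides whether it runs. A minimal sketch, with hypothetical action names and limits; a real deployment might delegate this to a dedicated engine such as Open Policy Agent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProposedAction:
    name: str                     # e.g. "issue_refund", as proposed by the model
    amount: float = 0.0
    caller_verified: bool = False


# Hypothetical rules kept outside the prompt, so they can be versioned,
# reviewed, and unit-tested like any other code.
ALLOWED_ACTIONS = {"issue_refund", "reset_password", "create_ticket"}
REQUIRES_VERIFICATION = {"issue_refund", "reset_password"}
REFUND_LIMIT = 200.0


def check(action: ProposedAction) -> tuple[bool, str]:
    """Return (allowed, reason). The LLM never executes an action that fails here."""
    if action.name not in ALLOWED_ACTIONS:
        return False, "action not allowed"
    if action.name in REQUIRES_VERIFICATION and not action.caller_verified:
        return False, "identity not verified"
    if action.name == "issue_refund" and action.amount > REFUND_LIMIT:
        return False, "refund above automatic limit; escalate"
    return True, "ok"


print(check(ProposedAction("issue_refund", amount=500.0, caller_verified=True)))
# (False, 'refund above automatic limit; escalate')
```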

However (and most vendors don’t like admitting this), the failure modes are drastically different. LLM-based voice agents sometimes hallucinate. They occasionally produce overly verbose answers or strike a tone that doesn’t fit the context. You need guardrails, contingency escalation rules, and continuous reinforcement training. That requires a very different operational mindset: more like running an AI product than maintaining a static IVR.
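
A minimal sketch of output guardrails and a contingency escalation rule, applied to each model reply before text-to-speech. The marker list, the 60-word budget, and the two-strike escalation rule are assumptions chosen for illustration, not recommended values.

```python
import re
from dataclasses import dataclass

MAX_SPOKEN_WORDS = 60  # assumption: long answers work worse on a call than on a screen
# Hypothetical markers of internal-only content that must never be read to a caller.
INTERNAL_MARKERS = re.compile(r"(?i)\b(internal only|runbook|admin password|kb-\d+)\b")
DEFLECTION = "Sorry, I can't share that. Is there something else I can help with?"


@dataclass
class GuardState:
    strikes: int = 0
    max_strikes: int = 2  # escalate to a human after this many blocked replies


def guard_reply(reply: str, state: GuardState) -> tuple[str, bool]:
    """Check a model reply before text-to-speech. Returns (text_to_speak, escalate)."""
    if INTERNAL_MARKERS.search(reply):
        state.strikes += 1
        return DEFLECTION, state.strikes >= state.max_strikes
    if len(reply.split()) > MAX_SPOKEN_WORDS:
        # Keep whole sentences up to the word budget (the first is always kept).
        kept, count = [], 0
        for sentence in re.split(r"(?<=[.!?])\s+", reply):
            words = len(sentence.split())
            if kept and count + words > MAX_SPOKEN_WORDS:
                break
            kept.append(sentence)
            count += words
        reply = " ".join(kept)
    return reply, False
```

Keyword filters like this catch only the obvious leaks; they complement, rather than replace, evaluation of real call transcripts.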

Some uncomfortable but real observations:

- Fine-tuning helps, but doesn’t eliminate unpredictable edge cases

- Agents may “do the right thing, but say it badly” (good action, poor phrasing)

- Prompt stacks grow messy fast unless structured like reusable functions
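
One way to keep prompt stacks from turning into copy-pasted walls of text is to build them from small, named functions that can be reviewed, versioned, and unit-tested like other code. A minimal sketch; the fragment names, wording, brand, and tool names are hypothetical.

```python
# Each prompt fragment is a small, named function instead of one ever-growing
# string pasted between projects. All names and wording are illustrative.


def persona(brand: str) -> str:
    return f"You are the phone assistant for {brand}. Speak in short, plain sentences."


def voice_constraints(max_sentences: int = 2) -> str:
    return (f"Answer in at most {max_sentences} sentences. Never read out URLs, "
            "ticket IDs, or internal procedures.")


def task(goal: str, allowed_tools: list[str]) -> str:
    return f"Goal: {goal}. You may only call these tools: {', '.join(allowed_tools)}."


def compose(*sections: str) -> str:
    # Blank lines between sections keep the final prompt readable in logs and diffs;
    # empty fragments are dropped.
    return "\n\n".join(s.strip() for s in sections if s.strip())


system_prompt = compose(
    persona("Acme Insurance"),
    voice_constraints(),
    task("collect first notice of loss details", ["get_policy", "create_claim"]),
)
print(system_prompt)
```

Changing the voice constraints for every agent then becomes a one-function edit with a diff, rather than a search through prompt copies.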

Many enterprises underestimate that part. Everyone is excited on the day the GPT-based voice agent completes a previously unseen call flow. The excitement quickly fades when the agent misroutes a VIP customer or reveals internal troubleshooting steps verbatim.

Concrete Comparison Chart (Not Just Feature Checkboxes)

| Criterion | VoiceFlow | Twilio | Custom GPT Architecture |
| --- | --- | --- | --- |
| Speed of initial prototype | Very high | Medium | Medium/low |
| Flow design flexibility | Moderate | Full (if engineered) | High (dynamic) |
| LLM integration | Plug-in block | External/custom | Native/core |
| Control over reasoning | Low | High | Very high |
| Compliance/data control | Moderate | High | Very high |
| Maintenance complexity | Low initially, high at scale | High | Very high |
| Required skill set | Conversational UX + light scripting | Backend/DevOps/LLM | AI engineering + DevOps + conversational design |
| Suitable for mission-critical? | Only with heavy customization | Yes | Yes (with safeguards) |

So… Which One and When?

There’s no universally “best” option—it’s more about maturity, appetite, and use case depth.

VoiceFlow is excellent for:

- Early-stage experiments or concept validation with stakeholders

- UX-first teams where design iteration speed matters

- Static or semi-dynamic FAQs, onboarding scripts, or basic IVR upgrades

Twilio-based architectures shine when:

- You need a bulletproof telephony infrastructure

- The conversation logic is bespoke (integrated with existing microservices or workflow engines)

- The enterprise already runs DevOps-driven conversational systems

GPT-centric architectures are justified when:

- The conversation requires actual reasoning, not just routing

- Real-time synthesis of context is needed (across documents, policies, internal data)

- The organization is comfortable adopting an AI product lifecycle (monitoring, reinforcement, prompt governance)

Final Thoughts

One thing often missed in these “VoiceFlow vs Twilio vs Custom GPT” debates is that enterprises tend to evolve through these options. They start on VoiceFlow to show viability. They move to Twilio when they want performance and compliance. And eventually (sometimes reluctantly), they arrive at a custom GPT-based agent architecture simply because no amount of pre-authoring can keep up with business complexity.

Interestingly, some leading teams are combining them: VoiceFlow is used as a visualization layer for non-LLM fallback flows, while GPT-driven decision logic runs underneath via Twilio webhooks. That hybrid approach is starting to show up in places like retail banking and logistics BPOs—not exactly the usual “cutting-edge AI” crowd, but the ones who genuinely need robust and flexible voice automation.
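
A minimal sketch of that hybrid pattern inside a Twilio webhook handler: the LLM gets the first attempt at each turn, and the scripted flow takes over if the model errors, times out, or declines to answer. The flow content, the 1.5-second timeout, and the function names are assumptions; the scripted part is mirrored in code here, not pulled from VoiceFlow through any API.

```python
import concurrent.futures as cf
from typing import Callable, Optional

# Scripted, non-LLM fallback flow: the part a team might design and review
# visually (e.g. in VoiceFlow) and mirror here. Contents are hypothetical.
SCRIPTED_FLOW = {
    "menu": "Please say claims or billing.",
    "claims": "I'll take a few details about your claim. What's your policy number?",
    "billing": "I'll transfer you to our billing team.",
}

_pool = cf.ThreadPoolExecutor(max_workers=4)


def scripted_reply(utterance: str) -> str:
    for keyword in ("claims", "billing"):
        if keyword in utterance.lower():
            return SCRIPTED_FLOW[keyword]
    return SCRIPTED_FLOW["menu"]


def reply_for_turn(utterance: str,
                   llm: Callable[[str], Optional[str]],
                   timeout_s: float = 1.5) -> tuple[str, str]:
    """Return (reply, source). Try the LLM first; fall back to the script if it
    raises, times out, or declines (returns None or an empty string)."""
    future = _pool.submit(llm, utterance)
    try:
        answer = future.result(timeout=timeout_s)
        if answer:
            return answer, "llm"
    except Exception:  # includes the timeout error; log it in a real system
        pass
    return scripted_reply(utterance), "script"


print(reply_for_turn("billing question", lambda u: None))
```

Recording the `source` of every reply also makes it easy to measure how often the fallback flow is carrying the conversation.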

In short, the “right” solution isn’t only about feature breadth. It’s about how much cognitive autonomy you require your voice agent to demonstrate—and how prepared you are operationally to handle that autonomy.

If an organization’s procurement team still manually routes tickets via email and legacy SharePoint lists, jumping directly to a multi-agent GPT architecture is not “innovative”—it’s a recipe for failure. But for companies already investing in observability, DevOps, and knowledge-aware workflows, custom LLM voice agents become less of a moonshot and more of an inevitable extension of their stack.

Should every enterprise build its own GPT-based agent? Probably not. Should they assume that drag-and-drop platforms will be enough for the next five years? Almost certainly not.

The truth sits somewhere in between—and, frankly, it keeps moving.