Key Takeaways

- Supplier risk is no longer an onboarding problem—it’s a runtime problem. Static approvals assume stability. Modern supplier ecosystems are volatile by default, which makes periodic validation structurally insufficient.

- Agentic AI succeeds by distributing responsibility, not centralizing intelligence. Multiple specialized agents—financial, ESG, compliance, behavioral—outperform monolithic risk platforms because they detect change, not just status.

- Continuous validation is about signal detection, not constant escalation. Effective systems don’t monitor harder; they monitor smarter, adjusting scrutiny based on supplier criticality and emerging risk patterns.

- False positives are not system failures—they’re governance decisions. The real failure is designing risk systems that surface issues too late because teams demanded perfect accuracy instead of early warning.

- The biggest impact of Agentic AI is cultural, not technical. Continuous monitoring shifts accountability upstream, changes procurement behavior, and reframes suppliers as ongoing risk partners—not approved records.

Supplier onboarding used to be a front-loaded activity. You collected documents, ran background checks, ticked the compliance boxes, and moved on. Six months later—sometimes six years later—you might look again, usually because something went wrong. A regulatory notice. A missed delivery traced back to a sanctioned subcontractor. An ESG controversy that suddenly became your problem.

That mental model doesn’t survive today’s supplier networks. Not with multi-tier sourcing, volatile geopolitics, evolving ESG standards, and regulators who assume you are monitoring continuously—even if you’re not.

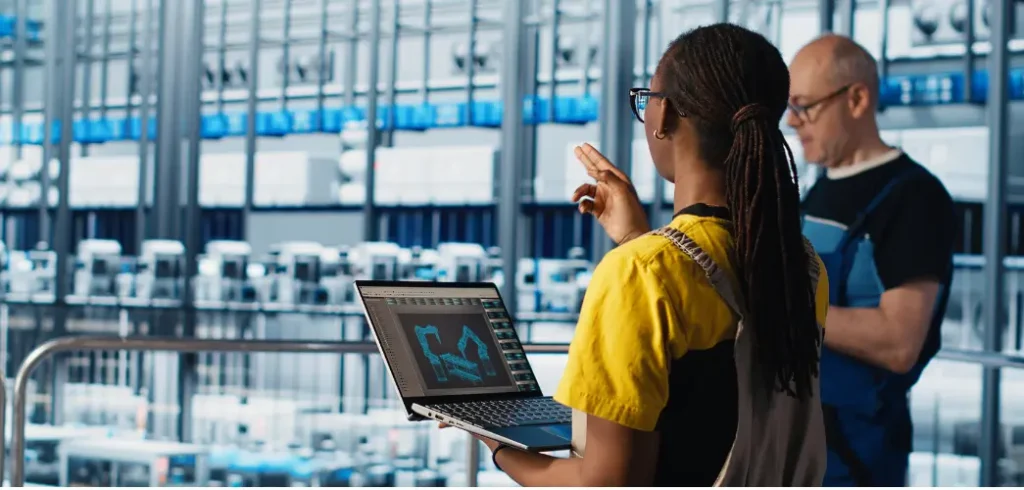

This is where Agentic AI actually earns its keep. Not as another onboarding workflow, but as a system of autonomous, continuously validating agents that treat supplier risk as a living condition, not a one-time clearance.

The problem with “onboarding” as a phase

Most enterprises still think in stages: onboard → approve → operate → review. The tools reflect that thinking. Vendor master creation in ERP. A compliance check during onboarding. Maybe an annual re-certification email that everyone ignores until procurement starts chasing.

But risk doesn’t respect stages.

A supplier that was financially stable in Q1 can show stress in Q3.

An ESG rating can shift after a labor incident two countries away.

A subcontractor can quietly appear on a sanctions list overnight.

And yet, most organizations rely on:

- Static questionnaires

- Periodic audits

- Human-driven exception reviews

If you’ve worked in procurement or compliance long enough, you know how this plays out. Teams are reactive by design. Issues surface late. Everyone asks, “How did we miss this?” The honest answer is: the system wasn’t built to notice.


Agentic AI changes the operating model, not just the tooling

Agentic AI isn’t just “AI plus automation”. It’s a shift in how responsibility is distributed across software entities.

Instead of one monolithic supplier risk platform, you deploy multiple autonomous agents, each with a specific mandate:

- One watches financial signals.

- Another tracks ESG indicators.

- A third monitors regulatory and sanctions exposure.

- Yet another reconciles internal behavior—delivery performance, dispute patterns, invoice anomalies.

They operate continuously. They trigger actions. They escalate when thresholds are crossed. And—this part matters—they learn when escalation was noise versus signal.
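Here is a minimal Python sketch of several narrow agents, each escalating on its own feed and adjusting after reviewers say whether an alert was noise or signal. The class `RiskAgent`, the function `run_cycle`, the 0-to-1 score scale and the supplier names are all hypothetical. The feedback rule is also an assumption: a noisy alert raises the threshold and a confirmed one lowers it. It is not how any particular platform learns.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class RiskAgent:
    """One specialized agent with a single mandate, e.g. "financial" or "sanctions"."""
    mandate: str
    threshold: float       # escalate when a signal score (0..1) reaches this value
    step: float = 0.05     # how far one reviewer verdict moves the threshold (assumed)
    floor: float = 0.10    # never become more sensitive than this
    ceiling: float = 0.95  # never become less sensitive than this

    def evaluate(self, supplier: str, score: float) -> Optional[dict]:
        """Return an escalation event when the score crosses the threshold, else None."""
        if score >= self.threshold:
            return {"agent": self.mandate, "supplier": supplier, "score": score}
        return None

    def record_verdict(self, was_signal: bool) -> None:
        """Learn from review: a confirmed issue lowers the bar, a noisy alert raises it."""
        if was_signal:
            self.threshold = max(self.floor, self.threshold - self.step)
        else:
            self.threshold = min(self.ceiling, self.threshold + self.step)


def run_cycle(agents: List[RiskAgent],
              signals: Dict[str, Dict[str, float]]) -> List[dict]:
    """signals maps mandate -> {supplier: score}; each agent reads only its own feed."""
    events = []
    for agent in agents:
        for supplier, score in signals.get(agent.mandate, {}).items():
            event = agent.evaluate(supplier, score)
            if event is not None:
                events.append(event)
    return events


agents = [RiskAgent("financial", 0.70), RiskAgent("sanctions", 0.50)]
signals = {"financial": {"ACME": 0.82, "Globex": 0.40},
           "sanctions": {"Initech": 0.55}}
for event in run_cycle(agents, signals):
    print(event["agent"], event["supplier"])

agents[0].record_verdict(was_signal=False)  # reviewer marked the ACME alert as noise
```

Because each agent reads only its own feed, the mandates stay separate. A real deployment would replace the simple threshold nudge with a calibration method the team can explain and audit.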

This is not theoretical. Large manufacturers and financial institutions are already running early versions of this model, often quietly, because it touches risk governance and no one likes to oversell that.

Continuous validation: what it looks like in practice

“Continuous validation” sounds neat until you ask what’s being validated, how often, and against what standard. Agentic systems force those questions into the open.

1. Financial health monitoring

- Agents ingest credit bureau updates, payment behavior, trade finance signals, even shipment delays.

- They don’t wait for a quarterly review. They look for change, not absolute scores.

- A sudden tightening of payment terms elsewhere? That’s a signal.

- Repeated invoice corrections? Another one.

Sometimes these indicators conflict. That’s fine. A human analyst would argue about them too.
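One simple way to "look for change, not absolute scores" is to compare each indicator's newest value with its own recent baseline. The sketch below uses a z-score over a rolling window. The indicator names, the six-period window and the 2.0 cut-off are assumptions, and the sample numbers are invented.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List


def change_z(history: List[float], window: int = 6) -> float:
    """z-score of the newest value against the `window` values before it.

    Returns 0.0 when there is not enough history yet. A perfectly flat
    baseline makes any movement infinitely surprising, so it returns +/-inf.
    """
    if len(history) < window + 1:
        return 0.0
    baseline = history[-window - 1:-1]
    delta = history[-1] - mean(baseline)
    spread = pstdev(baseline)
    if spread == 0:
        return 0.0 if delta == 0 else math.copysign(math.inf, delta)
    return delta / spread


def financial_flags(indicators: Dict[str, List[float]],
                    window: int = 6, z_limit: float = 2.0) -> Dict[str, str]:
    """Flag indicators whose latest value moved sharply, in either direction.

    Assumes every series is oriented so that higher values mean more stress.
    Conflicting flags are returned side by side rather than netted out.
    """
    flags = {}
    for name, history in indicators.items():
        z = change_z(history, window)
        if abs(z) >= z_limit:
            flags[name] = "worsening" if z > 0 else "improving"
    return flags


supplier_indicators = {
    "days_past_due":       [5, 6, 5, 4, 6, 5, 19],
    "invoice_corrections": [1, 0, 1, 1, 0, 1, 1],
    "late_shipments":      [3, 4, 3, 3, 4, 3, 0],
}
print(financial_flags(supplier_indicators))
# → {'days_past_due': 'worsening', 'late_shipments': 'improving'}
```

The sketch reports conflicting movements side by side instead of averaging them away. Here, payments are worsening while deliveries improve. Deciding what that conflict means stays with the analyst.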

2. ESG and sustainability checks

- ESG isn’t a single score. It’s labor practices, environmental incidents, governance issues, and sometimes just bad press that later turns out to be real.

- Agents monitor public disclosures, NGO reports, adverse media feeds, and internal audit findings.

- They correlate external signals with supplier criticality. A minor violation from a Tier-3 vendor may be logged. The same issue at a sole-source supplier? Escalated.

Here’s the truth: ESG data is messy. Agentic systems don’t “solve” that. They surface uncertainty earlier, which is usually enough to change outcomes.
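The criticality rule above can be sketched as a severity-times-criticality score: log a minor issue at a low-criticality vendor, but escalate the same issue at a sole-source supplier. The enum members, the cut-offs and the 0.5 confidence line are hypothetical. Low-confidence signals keep their route and are labeled unverified rather than discarded, which is one way to surface uncertainty early.

```python
from enum import IntEnum


class Severity(IntEnum):
    MINOR = 1
    MODERATE = 2
    SEVERE = 3


class Criticality(IntEnum):
    LOW = 1          # e.g. an easily replaced Tier-3 vendor (assumed mapping)
    STRATEGIC = 2
    SOLE_SOURCE = 3


def route_esg_signal(severity: Severity, criticality: Criticality,
                     confidence: float) -> dict:
    """Decide what to do with one ESG signal for one supplier.

    exposure = severity x criticality, so the same minor incident is only
    logged for a LOW-criticality vendor but escalated for a sole-source one.
    Low-confidence signals are never dropped: they keep their route and are
    marked unverified, so uncertainty reaches a human early.
    """
    exposure = int(severity) * int(criticality)  # ranges from 1 to 9
    if exposure >= 3:
        action = "escalate"
    elif exposure == 2:
        action = "review"
    else:
        action = "log"
    return {"action": action, "exposure": exposure, "unverified": confidence < 0.5}


print(route_esg_signal(Severity.MINOR, Criticality.LOW, confidence=0.9))
print(route_esg_signal(Severity.MINOR, Criticality.SOLE_SOURCE, confidence=0.3))
```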

3. Compliance and sanctions screening

- Continuous watchlist screening beats point-in-time checks, but only if alerts are handled intelligently.

- Agents cross-check supplier entities, directors, and beneficial owners against evolving lists.

- They track near-matches over time instead of triggering panic every time a common name appears.

This is where older rule-based systems fall apart. They treat every alert as equally urgent. Agentic models don’t. They build context.
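Below is a rough sketch of context-aware near-match handling. It uses `difflib.SequenceMatcher` similarity as a stand-in for a production name-matching engine, with an invented watchlist. The policy is hypothetical:

- Exact matches always escalate.
- Near-matches escalate only when a second attribute (country) agrees.
- Near-matches a reviewer has already cleared stay quiet on later runs.

```python
import unicodedata
from dataclasses import dataclass, field
from difflib import SequenceMatcher
from typing import List, Set, Tuple


def normalize(name: str) -> str:
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    cleaned = "".join(c if c.isalnum() else " " for c in no_accents.lower())
    return " ".join(cleaned.split())


@dataclass
class SanctionsScreener:
    near_threshold: float = 0.85  # similarity counted as a near-match (assumed value)
    cleared: Set[Tuple[str, str]] = field(default_factory=set)  # reviewer-dismissed pairs

    def screen(self, party: str, party_country: str,
               watchlist: List[Tuple[str, str]]) -> List[Tuple[str, str, str]]:
        """Screen one party (entity, director or beneficial owner) against the
        current list version. Returns (listed_name, action, reason) tuples."""
        results = []
        p = normalize(party)
        for listed_name, listed_country in watchlist:
            entry = normalize(listed_name)
            if p == entry:
                results.append((listed_name, "escalate", "exact name match"))
                continue
            score = SequenceMatcher(None, p, entry).ratio()
            if score < self.near_threshold:
                continue
            if party_country == listed_country:
                # a second attribute agrees, so this is more than a common name
                results.append((listed_name, "escalate", f"near match {score:.2f}, same country"))
            elif (p, entry) not in self.cleared:
                results.append((listed_name, "review", f"near match {score:.2f}"))
            # cleared near-matches stay quiet on later runs instead of re-alerting
        return results

    def clear(self, party: str, listed_name: str) -> None:
        """Record a reviewer's decision that this near-match is not the listed party."""
        self.cleared.add((normalize(party), normalize(listed_name)))


watchlist = [("Orion Shipping Ltd", "AE"), ("Ivan Petrov Trading LLC", "RU")]
screener = SanctionsScreener()
print(screener.screen("Orion Shipping Limited", "SG", watchlist))  # one "review" hit
screener.clear("Orion Shipping Limited", "Orion Shipping Ltd")
print(screener.screen("Orion Shipping Limited", "SG", watchlist))  # []
```

The same `screen` call would run for each supplier entity, director and beneficial owner whenever the list changes.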

Where this approach works—and where it struggles

Let’s be clear: Agentic AI is not a magic risk shield.

It works best when:

- Supplier data is fragmented but accessible

- Risk appetite is defined (even if imperfectly)

- There is governance clarity on who acts on escalations

It struggles when:

- Organizations expect “zero false positives”

- Legal and compliance teams refuse machine-initiated actions

- Supplier master data is a mess no one wants to own
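A toy calculation shows why a "zero false positives" expectation hurts. Pick the most sensitive alert threshold that fits a false-positive budget, then check how many confirmed issues it still catches. The function names and the five-alert history below are invented for illustration, not data from any deployment.

```python
from typing import List, Optional, Tuple

History = List[Tuple[float, bool]]  # (alert score, reviewer confirmed a real issue)


def threshold_for_fp_budget(history: History, max_false_positives: int) -> Optional[float]:
    """Lowest observed score usable as a threshold without exceeding the budget.

    Raising the threshold can only remove alerts, so the false-positive count
    never increases as we scan upward; the first threshold that fits is the
    most sensitive one. Returns None if no observed score fits.
    """
    for t in sorted({score for score, _ in history}):
        false_positives = sum(1 for s, real in history if s >= t and not real)
        if false_positives <= max_false_positives:
            return t
    return None


def recall_at(history: History, threshold: float) -> float:
    """Share of confirmed issues that would still have raised an alert."""
    real = [s for s, is_real in history if is_real]
    if not real:
        return 1.0
    return sum(1 for s in real if s >= threshold) / len(real)


# Toy review history, invented purely for illustration.
past_alerts = [(0.9, True), (0.8, False), (0.7, True), (0.6, False), (0.5, False)]
for budget in (0, 1):
    t = threshold_for_fp_budget(past_alerts, budget)
    print(budget, t, recall_at(past_alerts, t))
```

On this toy history, a budget of zero false positives forces the threshold up to 0.9 and misses half of the real issues. Tolerating one false positive catches both. Real histories will give different numbers, but a tighter budget can never raise recall.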

A real-world example

A consumer goods company with a heavy APAC supplier base implemented Agentic monitoring after a near-miss sanctions incident. They didn’t replace their onboarding system. They layered agents around it.

What changed:

- Financial stress signals were picked up months earlier than before, mostly through indirect indicators.

- ESG alerts initially spiked—too many, too noisy.

- After three months, the system was tuned to focus on suppliers tied to regulated markets.

What didn’t change:

- Human review still mattered.

- Legal still overruled the system occasionally.

- Some alerts were ignored—and later turned out to be irrelevant.

The net effect? Fewer surprises. And in risk management, that’s usually the real KPI.

Why continuous beats periodic, even when it feels excessive

There’s a common pushback: “Do we really need to monitor everyone all the time?”

Short answer: not equally. But continuously, yes.

Periodic reviews assume stability between checkpoints. That assumption no longer holds. Agentic systems don’t monitor harder; they monitor differently:

- Low-risk suppliers get passive observation.

- High-impact suppliers get active scrutiny.

- Risk posture adjusts dynamically.

Think of it less like surveillance and more like vital signs. You don’t run a full diagnostic every hour. You watch for deviations.
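The tiered posture described above might look something like this. The tier names, the 24-hour and 4-hour cadences and the 0.7 promotion point are placeholder assumptions. The structure is what matters: low-impact suppliers are watched passively, and any supplier moves up one tier when its risk score rises.

```python
from typing import Optional, Tuple

# Hours between active re-checks per effective tier (assumed cadences, not benchmarks).
ACTIVE_INTERVAL_HOURS = {"medium": 24.0, "high": 4.0}
PROMOTE = {"low": "medium", "medium": "high", "high": "high"}


def monitoring_plan(impact: str, risk_score: float,
                    promote_at: float = 0.7) -> Tuple[str, Optional[float]]:
    """Return (mode, hours between active checks) for one supplier.

    impact: "low", "medium" or "high" business impact of the supplier.
    risk_score: current 0..1 score from the agents; crossing `promote_at`
    bumps the supplier up one tier until the score falls back.
    """
    tier = PROMOTE[impact] if risk_score >= promote_at else impact
    if tier == "low":
        # passive: no scheduled pulls, only react to pushed events
        # such as watchlist updates or adverse-media hits
        return ("passive", None)
    return ("active", ACTIVE_INTERVAL_HOURS[tier])


print(monitoring_plan("low", 0.1))   # quiet, low-impact supplier
print(monitoring_plan("low", 0.8))   # same supplier after a risk spike
print(monitoring_plan("high", 0.0))  # high-impact supplier, always watched actively
```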

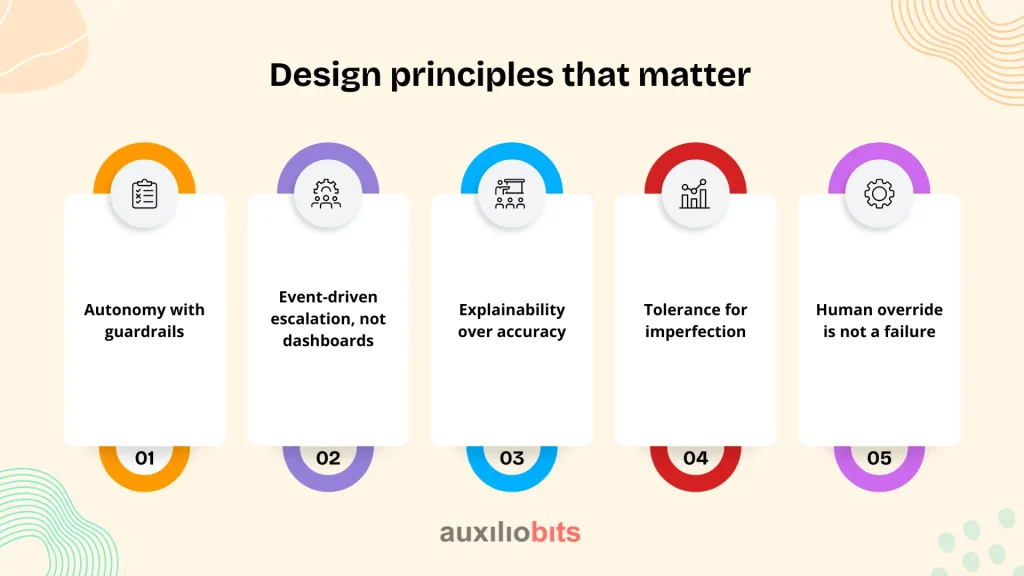

Design principles that matter

If you’re considering Agentic AI for supplier onboarding and risk, here are a few hard-earned lessons:

- Autonomy with guardrails: Let agents recommend and escalate, not terminate suppliers automatically—at least at first.

- Event-driven escalation, not dashboards: Dashboards are for retrospectives. Agents should trigger actions.

- Explainability over accuracy: A slightly less accurate alert that explains itself will be trusted more than a perfect black box.

- Tolerance for imperfection: Some false positives are the price of early detection. Decide upfront how many you can live with.

- Human override is not a failure: It’s part of the system. Pretending otherwise is naïve.
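Several of these principles can be expressed as a small action gate: agents recommend and escalate but don't terminate, every action needs an explanation, and overrides are recorded as data. This is a hypothetical sketch. `ActionGate`, `ProposedAction` and the action names are illustrative, and the split between autonomous and human-only actions is an assumed starting policy.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

AUTONOMOUS = {"log", "recommend", "escalate"}  # agents may do these alone (assumed policy)
NEEDS_APPROVER = {"suspend", "terminate"}      # always require a named human


@dataclass
class ProposedAction:
    supplier: str
    action: str
    reasons: List[str]  # plain-language evidence the reviewer will see


@dataclass
class ActionGate:
    audit_log: List[Tuple[str, str, str]] = field(default_factory=list)

    def submit(self, proposal: ProposedAction, approver: Optional[str] = None) -> str:
        """Apply the guardrails and record the outcome for every proposal."""
        if not proposal.reasons:
            outcome = "rejected: no explanation"  # unexplained alerts are not acted on
        elif proposal.action in AUTONOMOUS:
            outcome = "executed"
        elif proposal.action in NEEDS_APPROVER:
            outcome = f"executed, approved by {approver}" if approver else "queued for approval"
        else:
            outcome = "rejected: unknown action"
        self.audit_log.append((proposal.supplier, proposal.action, outcome))
        return outcome

    def override(self, supplier: str, action: str, reviewer: str, note: str) -> None:
        """A human override is logged as ordinary data, e.g. for later tuning."""
        self.audit_log.append((supplier, action, f"overridden by {reviewer}: {note}"))


gate = ActionGate()
print(gate.submit(ProposedAction("ACME", "escalate", ["payment terms tightened"])))
print(gate.submit(ProposedAction("ACME", "terminate", ["sanctions near match"])))
gate.override("ACME", "escalate", "legal", "known name collision")
```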

Shifts in accountability

One of the quieter impacts of Agentic AI is how accountability shifts.

When risk is monitored continuously, “we didn’t know” stops being a valid excuse. At the same time, teams gain earlier warnings, which changes behavior upstream. Procurement negotiates differently. Compliance engages earlier. Suppliers realize they are being assessed as ongoing partners, not just approved entries.

That cultural shift is often more valuable than the technology itself.

Final Thought

Supplier risk used to be something you checked. Now it’s something you live with. Agentic AI doesn’t remove that burden—but it distributes it across systems that don’t get tired, don’t forget, and don’t wait for calendar reminders.

Continuous validation isn’t about control. It’s about staying oriented in a supply ecosystem that moves whether you’re watching or not.