Key Takeaways

- Static programs break under variability. Traditional robots excel at repeatability but collapse when material properties, environmental conditions, or upstream processes shift even slightly. Adaptive systems treat those shifts as input, not error.

- Operational data is the real enabler. Sensors, vision systems, and contextual enterprise data only create value when they’re connected into closed loops that feed back into robot execution—otherwise, they’re just noise.

- Guardrails are essential. Adaptive agents can overfit to short-term anomalies (like one bad batch or an unusual week of orders). Without boundaries and human oversight, “learning” can degrade efficiency.

- Integration matters more than algorithms. Many projects fail not for lack of AI capability but because plants can’t clean their sensor data, bridge legacy PLCs, or win operator trust. Success depends on plumbing and people.

- The factory, not the robot, is the destination. The future isn’t one adaptive machine in isolation but fleets that coordinate and share insights. That leap is promising but dangerous—failures can spread as fast as improvements.

On paper, factories appear predictable, but the reality on the ground is anything but static. Unexpected variations constantly arise: a bearing overheats, material batches differ, humidity fluctuates, and adhesives suddenly stop bonding. Traditional robots, however, remain oblivious. They rigidly follow pre-programmed scripts, failing to adapt to these shifting conditions. This inflexibility leads to costly downtime, wasted materials, and a constant scramble by technicians to address “unexpected” failures that are, in fact, entirely foreseeable.

Adaptive robotics tries to solve that. These aren’t just mechanical arms repeating programmed motions; they’re systems that listen to operational data, learn from it, and change how they work.


Where Fixed Programs Fall Apart

Anyone who has debugged a production cell knows the limits of rigid code. An arm programmed for perfect geometry welds or picks flawlessly—until reality intervenes. Panels arrive a millimeter off. Boxes sag from moisture. Suddenly, the routine is useless.

How do factories usually respond?

- Hire more inspectors to catch defects.

- Hard-code even more exception handling.

- Tolerate higher scrap rates and write them off as “inevitable.”

All of these add cost without addressing the root problem. Conditions change every day. Treating that variability as “error” is a losing game.

The Shift From Recipes to Learning Agents

The difference is simple but profound. Fixed recipes tell a robot, “Do this, exactly this, every time.” Learning agents watch the outcome and ask, “Did it work? If not, what should I change?”

One warehouse example: suction grippers in pick-and-place systems. Anyone in logistics knows how inconsistent cardboard is—winter moisture swells it, suppliers cheap out on coatings, and boxes flex differently week to week. A hard-coded suction force either works or fails. An adaptive agent, after 100,000 picks, learns patterns—surface roughness, slippage rates, even subtle acoustic cues when suction is imperfect—and adjusts grip strength in real time. Less downtime, fewer drops.
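To make the contrast concrete, here is a minimal sketch of the adaptive side, assuming the only feedback is a per-pick "slipped or not" signal. The agent described above learns from richer cues (surface roughness, acoustics); this stand-in just keeps a smoothed slip rate and nudges the vacuum setpoint proportionally. `SuctionController`, the kPa limits, and the gains are all hypothetical values chosen for illustration.

```python
class SuctionController:
    """Proportional adjustment of a vacuum setpoint from a smoothed slip rate (illustrative)."""

    def __init__(self, setpoint_kpa=60.0, min_kpa=40.0, max_kpa=85.0,
                 target_slip=0.02, gain=5.0, alpha=0.1):
        self.setpoint_kpa = setpoint_kpa  # current vacuum command (hypothetical units/values)
        self.min_kpa = min_kpa            # assumed lower limit: below this, holds fail outright
        self.max_kpa = max_kpa            # assumed upper limit: above this, cartons crush or tear
        self.target_slip = target_slip    # tolerated fraction of picks with detected slip
        self.gain = gain                  # kPa of correction per unit of slip-rate error
        self.alpha = alpha                # smoothing weight given to the newest pick
        self.slip_rate = 0.0              # running estimate of slip frequency

    def update(self, slipped):
        """Feed one pick outcome; returns the setpoint to use for the next pick."""
        observed = 1.0 if slipped else 0.0
        # Exponentially weighted slip rate: one odd carton barely moves it
        self.slip_rate = (1 - self.alpha) * self.slip_rate + self.alpha * observed
        error = self.slip_rate - self.target_slip
        # More slip than tolerated raises vacuum; a clean run slowly backs it off
        self.setpoint_kpa += self.gain * error
        # Never leave the assumed safe operating window
        self.setpoint_kpa = max(self.min_kpa, min(self.max_kpa, self.setpoint_kpa))
        return self.setpoint_kpa
```

A hard-coded gripper is the same class with `gain=0.0`: the setpoint never moves, no matter what the cartons do.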

The Data That Makes It Possible

No magic here. Just data—lots of it.

- Sensor readings: torque spikes, vibration signatures, thermal drift.

- Vision input: micro-misalignments, weld penetration, surface defects.

- Contextual signals: which supplier delivered this batch, ambient humidity, and even operator shift schedules (because human variance matters too).

The power comes when all three are stitched together. A suction failure is no longer random—it’s “Supplier B cartons + humidity over 65% + worn suction cups.” With that, an agent can adapt before the failure repeats.
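One simple way to do that stitching is to key every pick outcome by its context and compare failure rates per context. The sketch below assumes a pick log with hypothetical fields `supplier`, `humidity_pct`, `cup_cycles`, and `failed`; the 65% humidity cut mirrors the example above, while the 50,000-cycle wear threshold and the minimum sample count are assumptions.

```python
from collections import defaultdict


def failure_rates(events, humidity_limit=65.0, worn_after_cycles=50_000, min_samples=30):
    """Failure rate per (supplier, high humidity?, worn cups?) context."""
    counts = defaultdict(lambda: [0, 0])  # context -> [failures, total picks]
    for e in events:
        context = (
            e["supplier"],
            e["humidity_pct"] > humidity_limit,    # hypothetical field names
            e["cup_cycles"] > worn_after_cycles,   # assumed wear threshold
        )
        counts[context][1] += 1
        if e["failed"]:
            counts[context][0] += 1
    # Drop thin contexts: a handful of picks is noise, not a pattern
    return {ctx: f / n for ctx, (f, n) in counts.items() if n >= min_samples}
```

With a real log, `max(rates, key=rates.get)` would surface the worst combination, for instance `("B", True, True)`, which is the kind of signal an agent can act on before the next failure.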

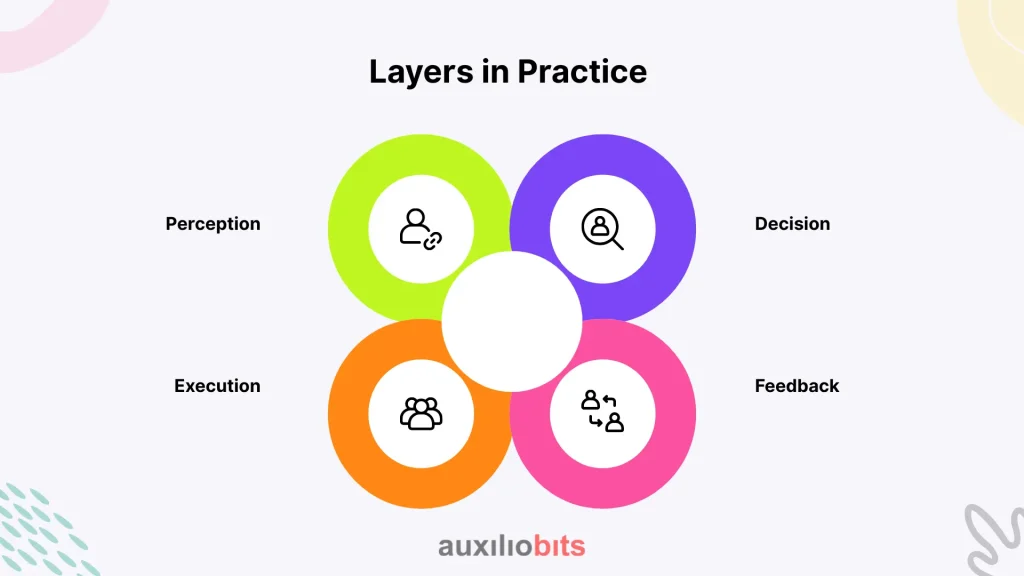

Layers in Practice

It helps to picture adaptive robotics not as one big brain but as a loop:

- Perception – messy data feeds from sensors and cameras.

- Decision – models that find patterns in that mess. Some reinforcement learning, some simple heuristics, depending on the task.

- Execution – actuators responding with micro-adjustments.

- Feedback – results logged back for the next cycle.

None of these elements is groundbreaking on its own. The innovation lies in closing the loop, so that perception directly reshapes execution instead of merely populating a dashboard.
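Here is the loop reduced to its bones, as a sketch rather than any particular vendor's architecture. It assumes panels that consistently arrive 1 mm off, noise-free vision, and a simple proportional update in place of a learned model; `closed_loop` and its parameters are hypothetical.

```python
def closed_loop(cycles, true_offset_mm=1.0, gain=0.5):
    """Perception -> decision -> execution -> feedback, repeated each cycle."""
    correction_mm = 0.0  # decision state: how far the robot currently compensates
    log = []
    for cycle in range(cycles):
        # Execution: the robot places the part, shifted by the current correction
        placed_error_mm = true_offset_mm - correction_mm
        # Perception: vision measures the remaining misalignment (noise-free here)
        measured_mm = placed_error_mm
        # Decision: nudge the correction toward cancelling the measured error
        correction_mm += gain * measured_mm
        # Feedback: record the outcome for the next cycle and for operators
        log.append((cycle, measured_mm, correction_mm))
    return log
```

With these numbers the residual misalignment halves every cycle (1.0, 0.5, 0.25 mm, and so on). Cut the feedback line and the robot repeats the same 1 mm error forever, which is the fixed-recipe behavior described earlier.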

A Real Case: Adaptive Welding

At an auto plant, weld failures kept spiking whenever coil stock came from certain suppliers. The panels weren’t “bad,” just slightly different. Traditional robots didn’t care—they ran with the same torch current regardless.

Engineers added adaptive control: sensors measured arc stability, vision checked bead consistency, and an agent correlated settings with supplier batches. After a few months, the robots adjusted automatically—slightly more current here, slower speed there. Rework dropped by a fifth. That’s a staggering figure when you’re building over a thousand cars a day.

Failures Do Happen

Not every story is a success. One logistics firm tested adaptive palletizers. For a week, oversized cartons dominated orders. The robots over-learned from the anomaly, reshaping their stacking patterns around cartons that are normally rare. When the order mix returned to normal, the system was less efficient than before.

Point being: adaptive systems need guardrails. Left unchecked, they can “chase noise.” Humans still have to supervise and occasionally reset the baseline.
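What might those guardrails look like in code? A minimal sketch, assuming three hypothetical rules: the agent's suggestions are only acted on in batches, each batch moves the parameter a small fraction of the way, and the value can never leave a band around a human-approved baseline. A `reset` method gives supervisors the baseline reset mentioned above. `GuardedParameter` and its defaults are illustrative, not taken from the palletizer case.

```python
class GuardedParameter:
    """A learned setting that drifts slowly, only on enough evidence, and only within a band."""

    def __init__(self, baseline, max_deviation, learning_rate=0.05, min_samples=200):
        self.baseline = baseline            # human-approved reference value
        self.max_deviation = max_deviation  # hard limit on drift from the baseline
        self.learning_rate = learning_rate  # fraction of the suggested change applied per batch
        self.min_samples = min_samples      # suggestions to collect before acting at all
        self.value = baseline
        self._pending = []

    def propose(self, suggested):
        """Agent suggests a new value; it is batched, damped, and clamped before use."""
        self._pending.append(suggested)
        if len(self._pending) < self.min_samples:
            return self.value
        target = sum(self._pending) / len(self._pending)
        self._pending.clear()
        stepped = self.value + self.learning_rate * (target - self.value)
        low = self.baseline - self.max_deviation
        high = self.baseline + self.max_deviation
        self.value = min(high, max(low, stepped))
        return self.value

    def reset(self):
        """Operator action: discard learned drift and return to the approved baseline."""
        self.value = self.baseline
        self._pending.clear()
```

Under rules like these, a week of oversized cartons can push a stacking parameter only partway toward the anomaly, and never past the band, so the damage is bounded when normal orders return.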

Edge vs. Cloud

Where should the learning sit?

- Edge: right on the robot controller. Instant decisions, no network dependence. But onboard compute is limited.

- Cloud: richer models, data from entire fleets. But it adds latency and connectivity risks.

In reality, manufacturers split the difference. Quick adjustments (like grip pressure) stay local. Broader lessons (batch-level insights) sync to the cloud weekly. The hybrid model is less elegant than marketing slides, but it works.
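The split can be sketched as two cooperating pieces, under assumed names: an `EdgeAgent` that reacts to each pick locally and buffers observations, and a `FleetModel` that aggregates those buffers at each sync (weekly, per the pattern above) into supplier-level slip rates. Both classes and the 1 kPa step are hypothetical.

```python
class FleetModel:
    """Cloud side: pools batch-level observations from every robot."""

    def __init__(self):
        self.counts = {}  # supplier -> (slips, picks)

    def ingest(self, records):
        for supplier, slipped in records:
            slips, picks = self.counts.get(supplier, (0, 0))
            self.counts[supplier] = (slips + int(slipped), picks + 1)

    def supplier_slip_rates(self):
        return {s: slips / picks for s, (slips, picks) in self.counts.items()}


class EdgeAgent:
    """Controller side: fast local reactions, slow periodic sync."""

    def __init__(self, grip_kpa=60.0, max_kpa=85.0):
        self.grip_kpa = grip_kpa
        self.max_kpa = max_kpa
        self.outbox = []  # observations waiting for the next sync window

    def on_pick(self, supplier, slipped):
        # Quick adjustment stays local: no network round trip in the pick cycle
        if slipped:
            self.grip_kpa = min(self.max_kpa, self.grip_kpa + 1.0)
        self.outbox.append((supplier, slipped))

    def sync(self, fleet):
        # If ingest raises (say, the link is down), the next line never runs,
        # so the outbox survives for the next attempt
        fleet.ingest(self.outbox)
        self.outbox = []
        return fleet.supplier_slip_rates()
```

The pick cycle never waits on the network; only the slower, batch-level lesson ("Supplier B slips more") travels.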

The Human Side

This is the part vendors gloss over. Operators need to trust the robots. If a machine starts “changing its mind” without explanation, seasoned technicians won’t accept it.

Some ways plants have managed the shift:

- Dashboards that show what was adjusted and why.

- Gradual rollouts where human override remains in play.

- Training sessions that frame adaptation as a tool, not a threat.
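The first two items can share one small mechanism: every automatic change is logged with its reason, and an operator can pin a parameter so the agent's suggestions are ignored until it is released. This is a sketch with hypothetical names (`ExplainedParameters`, `Adjustment`); a real dashboard would read from something like `log`.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Adjustment:
    timestamp: datetime
    parameter: str
    old: float
    new: float
    reason: str  # plain-language explanation shown to operators


class ExplainedParameters:
    def __init__(self, **initial):
        self.values = dict(initial)
        self.locked = set()  # parameters an operator has pinned by hand
        self.log = []        # what changed, when, and why

    def adjust(self, name, new, reason):
        """Agent-initiated change; refused while a human override is active."""
        if name in self.locked:
            return False
        self.log.append(Adjustment(datetime.now(timezone.utc), name,
                                   self.values[name], new, reason))
        self.values[name] = new
        return True

    def override(self, name, value, operator):
        """Operator sets a value and locks it against further automatic changes."""
        self.log.append(Adjustment(datetime.now(timezone.utc), name,
                                   self.values[name], value,
                                   f"manual override by {operator}"))
        self.values[name] = value
        self.locked.add(name)

    def release(self, name):
        self.locked.discard(name)
```

Because overrides land in the same log as automatic changes, technicians can see both sides of the story in one place.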

Without this cultural groundwork, adaptive robots often get sidelined—not because the tech fails, but because people don’t buy in.

Tangible Gains Seen So Far

From field deployments, recurring wins include:

- Shorter downtime: agents anticipate wear before failure.

- Greater input tolerance: robots handle more material variance without reprogramming.

- Quality stability: micro-adjustments reduce hidden drift.

- Energy savings: path optimizations shave idle cycles.
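"Anticipate wear before failure" often starts with something as plain as trend detection on a vibration signal. The sketch below assumes a stream of vibration RMS readings and flags the first point where an exponentially weighted moving average crosses a warning level; the 4.5 threshold and the smoothing weight are assumed values, not recommendations.

```python
def first_wear_alert(vibration_rms, alpha=0.2, warn_level=4.5):
    """Index of the first reading where smoothed vibration exceeds warn_level, or None."""
    smoothed = None
    for i, reading in enumerate(vibration_rms):
        # EWMA: a sustained rise moves it; a one-off spike barely does
        smoothed = reading if smoothed is None else (1 - alpha) * smoothed + alpha * reading
        if smoothed > warn_level:
            return i
    return None
```

For a bearing whose readings step from 2.0 to 6.0 at index 20, this flags index 24, several readings after the shift, while a single 9.0 spike in an otherwise steady 2.0 signal triggers nothing. That trade-off between early warning and false alarms is tuned through `alpha` and `warn_level`.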

Worth noting, though: in ultra-controlled sectors (semiconductors, pharma filling), the payoff is slim. Standardization is already so tight that adaptation adds cost with little benefit.

Hype and Missteps

Sales pitches often promise “self-optimizing factories.” The reality: most projects die not because algorithms fail, but because of groundwork that never happens—messy sensor data, integration with ancient PLCs, staff untrained on adaptive dashboards.

The firms that succeed are methodical. They invest in data plumbing, not just shiny robots. They expect learning to take months, not days. In other words, patience and discipline matter more than vendor promises.

Looking Ahead: Multi-Agent Factories

The next step isn’t single adaptive robots—it’s swarms of them sharing intelligence.

- If one picker struggles with Supplier B cartons, others learn instantly.

- Lines shift workload automatically when one unit slows.

- Plants on different continents share adaptation rules.

That vision is seductive, but risky. Distributed intelligence means distributed mistakes, too. Failures could propagate at the same speed as improvements. Standards for safe agent-to-agent communication are still immature. It’s coming, but it’s not turnkey.
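One common way to keep improvements from spreading as fast as failures is a canary rollout: a learned rule goes to a few robots first and reaches the rest of the fleet only if every canary actually improves. The sketch assumes a hypothetical `evaluate(robot, rule)` callback that returns the change in some agreed metric (positive is better); it is a pattern illustration, not an established agent-to-agent standard.

```python
def propagate_rule(rule, robots, evaluate, canary_size=2, min_gain=0.0):
    """Share a learned rule fleet-wide only if every canary robot improves with it."""
    canary = robots[:canary_size]
    gains = {robot: evaluate(robot, rule) for robot in canary}
    if all(gain > min_gain for gain in gains.values()):
        return list(robots), gains  # canaries improved: adopt everywhere
    return [], gains                # a canary regressed: roll back, spread nothing
```

The cost is speed: good rules reach the fleet a little later, which is the price of keeping a bad one contained.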

Practical Notes for Practitioners

From hard lessons learned:

- Pilot one process, not the whole line.

- Pick success metrics up front. Otherwise, the “improvement” is meaningless.

- Keep operators in the loop. Visibility builds trust.

- Don’t rip out old systems. Bolt adaptation on gradually.

- Audit regularly. Adaptive doesn’t mean infallible.

Closing Reflection

Adaptive robotics isn’t hype, and it isn’t a cure-all. It’s a slow but steady shift toward machines that don’t just repeat—they refine. When done well, the gains compound: lower scrap, higher uptime, more resilient operations.

The irony is that the biggest challenge isn’t technical. It’s organizational. Trust, training, and patience matter as much as reinforcement learning. Ignore those, and all the sensors in the world won’t help.