Key Takeaways

- Cyber insurance has shifted from static attestations to continuous reality checks. Insurers increasingly expect proof that security controls are operating consistently, not just declared during annual renewals.

- Risk posture drift happens faster than most reporting cycles can capture. Cloud churn, SaaS sprawl, and identity exceptions quietly change exposure. Agents make this drift visible before it turns into higher premiums or reduced coverage.

- Agents serve as a translation layer between insurance language and security systems. They continuously map abstract requirements—like MFA enforcement or privileged access—onto live signals across identity, cloud, and endpoint tools.

- The true value of agents shows up during claims, not questionnaires. Time-stamped evidence of control health and remediation history can prevent disputes when insurers scrutinize incidents after the fact.

- Insurer trust increasingly depends on clarity, not tooling sophistication. Organizations that can clearly explain and evidence how risk is managed day to day gain leverage, often more than those with the largest security stacks.

Cyber insurance used to be treated as a mere checkbox. Fill out a questionnaire once a year, attest to a handful of controls, negotiate premiums, and move on. That era is gone—and frankly, it deserved to be.

Today, cyber insurers behave less like passive risk absorbers and more like continuous evaluators. They want evidence. Not PDFs. Not screenshots from six months ago. Evidence that your controls are actually operating, that your attack surface hasn’t drifted, and that the story you tell underwriting still matches reality.

This is where things get uncomfortable for most enterprises. Security teams know their posture changes weekly. Insurance applications assume it’s static. The gap between those two truths is where premiums spike, coverage shrinks, or claims get challenged.

Agent-based systems, when implemented thoughtfully, are starting to close that gap. Not by replacing GRC teams or CISOs, but by doing the tedious, error-prone work that humans struggle to sustain: monitoring, cross-checking, correlating, and explaining.

And yes, there are ways this can go wrong.


The Real Problem Isn’t Insurance. It’s Drift

Most organizations don’t lose cyber insurance because they’re negligent. They lose leverage because their risk profile drifts faster than their reporting mechanisms.

Think about a typical mid-to-large enterprise:

- New SaaS tools adopted across business units

- Cloud environments spun up and torn down weekly

- MFA coverage eroding quietly as exceptions pile up

- EDR agents installed, but not always healthy

- Vendors changing their own security postures without notice

Now contrast that with how cyber insurance underwriting still largely works:

- Annual or semi-annual questionnaires

- Point-in-time attestations

- Broad yes/no answers to nuanced technical realities

The result? The risk narrative loses accuracy every month after it is submitted.

What “Agents” Mean in This Context

Let’s get specific, because “AI agents” has become an overloaded term.

In cyber insurance risk management, agents are autonomous or semi-autonomous systems that:

- Continuously observe security-relevant signals

- Interpret those signals in the context of insurance requirements

- Detect divergence between attested controls and operational reality

- Trigger actions, explanations, or remediation workflows

They’re not just dashboards. They don’t wait for quarterly reviews. And they shouldn’t be confused with SOC automation tools, even though they often integrate with them.

A useful way to think about it: if your GRC team is responsible for telling the story of your cyber risk, agents are the ones quietly fact-checking it every day.
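To make the "fact-checking" idea concrete, here is a minimal Python sketch of the divergence step alone. It assumes someone has already reduced each attested control to a yes/no claim and each telemetry source to an observed yes/no; the control names and the `fact_check` function are hypothetical, and a real agent would carry far richer state than a boolean.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One divergence between an attested control and what was observed."""
    control: str
    attested: bool
    observed: bool


def fact_check(attested: dict[str, bool], observed: dict[str, bool]) -> list[Finding]:
    """Report controls attested as in place that are not observed as in place.

    A control with no observation at all is treated as not in place:
    missing telemetry is a gap in evidence, not proof of compliance.
    """
    findings = []
    for control, claimed in sorted(attested.items()):
        actual = observed.get(control, False)
        if claimed and not actual:
            findings.append(Finding(control, claimed, actual))
    return findings


# Hypothetical example: what underwriting was told vs. what telemetry shows now.
attested = {"mfa_remote_access": True, "edr_all_endpoints": True, "offline_backups": True}
observed = {"mfa_remote_access": True, "edr_all_endpoints": False}
for f in fact_check(attested, observed):
    print(f"{f.control}: attested={f.attested}, observed={f.observed}")
```

Treating a missing observation as a failure is a deliberate choice here: it keeps silent data sources from being counted as healthy ones.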

Mapping Insurance Requirements to Living Systems

One underappreciated challenge in cyber insurance is translation. Insurance language doesn’t map cleanly to security tooling. “Privileged access is restricted” sounds simple until you try to prove it across:

- On-prem AD

- Azure AD / Entra

- AWS IAM

- SaaS admin roles

- Local service accounts no one remembers creating

Agents help by acting as translators.
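A rough sketch of that translation for privileged access, assuming each source's admin assignments have already been exported as (account, role) pairs. The `PRIVILEGED_ROLES` mapping, `normalize`, and `unapproved` are hypothetical. The role names are real built-in AD groups, Entra roles, and an AWS managed policy, but which ones count as "privileged" is a decision your own team has to make.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PrivilegedGrant:
    source: str     # which system the grant came from, e.g. "ad", "entra", "aws"
    principal: str  # account name, lowercased so the same identity matches across sources
    role: str       # privileged group, role, or policy name as the source reports it


# Hypothetical, illustrative mapping of what counts as "privileged" per source.
PRIVILEGED_ROLES: dict[str, set[str]] = {
    "ad": {"Domain Admins", "Enterprise Admins"},
    "entra": {"Global Administrator", "Privileged Role Administrator"},
    "aws": {"AdministratorAccess"},
}


def normalize(source: str, records: list[tuple[str, str]]) -> list[PrivilegedGrant]:
    """Turn raw (principal, role) pairs from one source into privileged grants."""
    roles = PRIVILEGED_ROLES.get(source, set())
    return [PrivilegedGrant(source, p.strip().lower(), r) for p, r in records if r in roles]


def unapproved(grants: list[PrivilegedGrant], approved: list[str]) -> list[PrivilegedGrant]:
    """Return privileged grants held by principals not on the approved list."""
    allowed = {a.strip().lower() for a in approved}
    flagged = [g for g in grants if g.principal not in allowed]
    return sorted(flagged, key=lambda g: (g.source, g.principal, g.role))


grants = normalize("ad", [("Alice", "Domain Admins"), ("svc_backup", "Domain Admins"), ("bob", "Users")])
grants += normalize("aws", [("deploy-bot", "AdministratorAccess")])
for g in unapproved(grants, approved=["alice"]):
    print(g)
```

The forgotten service account and the deployment bot surface together, in one list, even though they live in different systems.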

For example, instead of answering “Yes, MFA is enforced for all remote access,” an agent-driven approach might:

- Monitor identity providers for MFA enforcement policies

- Detect exclusion groups and service accounts

- Correlate VPN logs with authentication methods

- Flag drift when coverage drops below a defined threshold
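The four steps above can be sketched as a single coverage calculation. This assumes policy scope, exclusion groups, and parsed VPN logins are already available as plain Python sets and tuples. The `"mfa"` method label, the 98% threshold, and all names are placeholders, not insurer-defined values.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class MfaCoverage:
    ratio: float        # share of remote-access accounts effectively covered
    uncovered: set[str] # accounts that are excluded or logged in without MFA
    drift: bool         # True when ratio fell below the agreed threshold


def mfa_coverage(remote_users, policy_targets, exclusions, vpn_logins, threshold=0.98):
    """Estimate effective MFA coverage for remote access.

    remote_users:   accounts that have remote access at all
    policy_targets: accounts the identity provider's MFA policy applies to
    exclusions:     members of exclusion groups and known service accounts
    vpn_logins:     (account, auth_method) pairs parsed from VPN logs
    """
    remote = set(remote_users)
    # What the configuration claims: in policy scope and not carved out.
    configured = (set(policy_targets) - set(exclusions)) & remote
    # What the logs show: any VPN login that did not use MFA is a gap.
    observed_gaps = {user for user, method in vpn_logins if method != "mfa"}
    covered = configured - observed_gaps
    ratio = len(covered) / len(remote) if remote else 1.0
    return MfaCoverage(ratio, remote - covered, ratio < threshold)


result = mfa_coverage(
    remote_users={"alice", "bob", "carol", "svc_sync"},
    policy_targets={"alice", "bob", "carol", "svc_sync"},
    exclusions={"svc_sync"},
    vpn_logins=[("alice", "mfa"), ("bob", "password"), ("carol", "mfa")],
)
print(result.ratio, sorted(result.uncovered), result.drift)  # 0.5 ['bob', 'svc_sync'] True
```

The policy alone would suggest three of four accounts are covered; the VPN logs show only two actually are.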

Not to alarm anyone, just to surface reality.

Some insurers are already asking for this level of detail during renewals. Others aren’t—yet. But the direction is obvious.

Where Agents Add Real Value

Used well, agents reduce friction between security teams, risk managers, and insurers. Used poorly, they become just another noisy system everyone ignores.

Places they genuinely help:

- Continuous posture validation

- Pre-renewal readiness without fire drills

- Evidence collection that doesn’t rely on screenshots

- Faster, cleaner responses to insurer follow-ups

Places they often fail:

- When they’re deployed without ownership

- When alerts aren’t tied to business or insurance impact

- When they’re treated as “AI that will figure it out”

Claims Are Where the Truth Comes Out

Underwriting gets attention. Claims are where everything gets scrutinized.

During a cyber incident, insurers don’t just ask what happened. They ask:

- Were the declared controls in place at the time?

- Were they functioning or merely configured?

- Was there material misrepresentation?

Agents don’t prevent incidents. But they help organizations avoid the nightmare scenario where a claim is disputed because the risk profile on paper didn’t match reality.
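The "functioning or merely configured" question is the easiest one to answer badly. As one hedged illustration, assuming EDR heartbeat timestamps can be pulled per host, a sketch like the following separates endpoints that are actually reporting from ones where an agent was installed but has gone quiet. The 24-hour window and the function name are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def classify_endpoints(inventory, heartbeats, now, max_silence=timedelta(hours=24)):
    """Split endpoints by whether their EDR agent is demonstrably working.

    inventory:   hostnames the organization believes it owns
    heartbeats:  hostname -> last time that host's EDR agent checked in (timezone-aware)
    max_silence: how long an agent may stay quiet before it stops counting as functioning
    """
    functioning, silent, missing = set(), set(), set()
    for host in inventory:
        last_seen = heartbeats.get(host)
        if last_seen is None:
            missing.add(host)      # no agent has ever reported from this host
        elif now - last_seen > max_silence:
            silent.add(host)       # agent installed at some point, but quiet for too long
        else:
            functioning.add(host)
    return functioning, silent, missing


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
heartbeats = {
    "web-1": now - timedelta(hours=1),
    "db-1": now - timedelta(days=3),
}
ok, silent, missing = classify_endpoints({"web-1", "db-1", "hr-laptop-7"}, heartbeats, now)
print(sorted(ok), sorted(silent), sorted(missing))  # ['web-1'] ['db-1'] ['hr-laptop-7']
```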

Imagine being able to show:

- Time-stamped evidence of control health

- Logs demonstrating enforcement, not intent

- A history of remediation actions triggered by detected drift

That changes the conversation.
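One way to make that evidence harder to dispute is an append-only, hash-chained log, where each record carries the SHA-256 of the one before it. The sketch below uses only the Python standard library; `EvidenceLog` and its fields are hypothetical. Note the limit: a chain only shows records weren't altered if the latest hash is also kept somewhere the organization can't quietly rewrite, such as with a third party.

```python
import hashlib
import json
from datetime import datetime, timedelta, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class EvidenceLog:
    """Append-only, hash-chained log of control checks and remediation actions."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry):
        # Hash a canonical JSON encoding of every field except the hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def append(self, control, status, detail, at=None):
        at = at or datetime.now(timezone.utc)
        entry = {
            "timestamp": at.isoformat(),
            "control": control,
            "status": status,
            "detail": detail,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return False if any entry was edited, removed, or reordered."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


log = EvidenceLog()
t0 = datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc)
log.append("mfa_remote_access", "drift", "coverage fell to 0.91", at=t0)
log.append("mfa_remote_access", "remediated", "exclusion group emptied", at=t0 + timedelta(hours=4))
print(log.verify())  # True
log.entries[0]["detail"] = "coverage 0.99"  # an after-the-fact edit
print(log.verify())  # False
```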

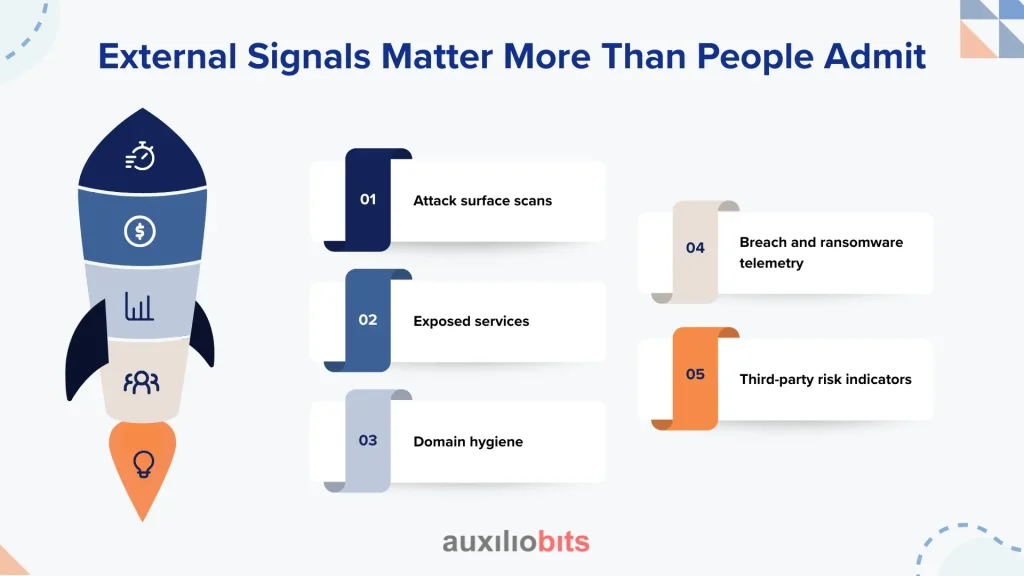

External Signals Matter More Than People Admit

Here’s an uncomfortable truth: insurers increasingly trust external signals more than your internal attestations.

They look at:

- Attack surface scans

- Exposed services

- Domain hygiene

- Breach and ransomware telemetry

- Third-party risk indicators

Agent-based systems can ingest the same signals and compare them with internal views. When there’s a mismatch, that’s a warning sign worth paying attention to.

There have been cases where:

- Internal teams believed assets were decommissioned

- External scans still showed them as exposed

- Insurers noticed before the organization did

That’s not an AI problem. That’s an ownership problem. Agents just make it visible.
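The comparison itself is simple once both views exist. A minimal sketch, assuming internal inventory and external scan results have both been reduced to hostnames; the function name and the "zombie" / "unknown" labels are hypothetical.

```python
def reconcile_assets(internal_active, internal_decommissioned, externally_exposed):
    """Compare the internal asset view with what external scans see.

    Returns hostnames that need an owner's attention:
      "zombie":  marked decommissioned internally but still exposed externally
      "unknown": exposed externally but absent from the internal inventory
    """
    exposed = set(externally_exposed)
    decommissioned = set(internal_decommissioned)
    known = set(internal_active) | decommissioned
    return {
        "zombie": sorted(exposed & decommissioned),
        "unknown": sorted(exposed - known),
    }


gaps = reconcile_assets(
    internal_active=["www.example.com"],
    internal_decommissioned=["legacy-vpn.example.com"],
    externally_exposed=["www.example.com", "legacy-vpn.example.com", "staging.example.com"],
)
print(gaps)  # {'zombie': ['legacy-vpn.example.com'], 'unknown': ['staging.example.com']}
```

Both lists are exactly what an insurer's outside-in scan would also see, which is the point of running the check first.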

Cyber insurance is not a shield. It’s a negotiation based on trust, evidence, and probability.

Agents don’t make you safer by default. They make you more legible to insurers. And in today’s market, legibility matters almost as much as control itself.

The organizations getting better terms aren’t necessarily the ones with the fanciest tools. They’re the ones who can explain, with confidence and proof, how their risk is managed day to day.

That explanation is challenging to sustain manually. Humans get tired. Agents don’t.