Key Takeaways for CEOs

- Agentic AI will expose strategic ambiguity faster than any consultant ever could. If leadership hasn’t clearly articulated trade-offs—speed versus risk, cost versus resilience—agents will operationalize the gaps. Scaling fails not because the AI is wrong, but because intent was never made explicit.

- Autonomy shifts accountability upstream, not away. When agents make decisions, accountability doesn’t disappear—it moves to system design, policy definition, and governance. CEOs must be comfortable owning outcomes that emerge from systems, not individuals.

- The fastest wins come from unglamorous processes with chronic human fatigue. Order-to-cash, claims triage, vendor onboarding—these are where agentic AI earns trust quietly. Flashy use cases create headlines; boring ones create adoption.

- Culture will determine whether agents become leverage or liabilities. Organizations that punish mistakes or demand perfection will suffocate learning systems. Those that reward clarity, challenge, and escalation will see agents improve—and people trust them faster.

- Architectural discipline outlives any AI platform choice. Models will change. Vendors will fade. What endures is clean separation between policy, reasoning, and execution. Enterprises that get this wrong scale quickly—and then stop entirely.

Most CEOs are no longer asking whether to adopt AI. That debate is over. The real question now is more uncomfortable: How do we scale AI without creating chaos, dependency, or a fragile organization that no one fully understands? Agentic AI has brought this question into sharper focus. Unlike copilots or embedded analytics, agentic systems act. They decide, coordinate, retry, escalate, and sometimes surprise. That autonomy is the value, and also the risk. Scaling it is less about technology and more about operating philosophy.

First Principle: Don’t Scale Intelligence Before You Scale Intent

Many AI programs fail for a surprisingly simple reason: the organization never agreed on what it wants agents to optimize for. Speed? Cost? Compliance? Customer experience? All of the above? When humans make the decisions, that ambiguity is tolerable, because people resolve it case by case with judgment and context. When software agents make decisions at machine speed, it becomes critical, because whatever interpretation the agent settles on is applied at scale.

Before scaling agentic AI, CEOs need explicit intent, not aspirational statements. Intent shows up as trade-offs written down in plain language:

- “We will accept slightly higher unit costs to preserve supplier reliability.”

- “Customer resolution speed matters more than call deflection.”

- “Regulatory confidence outranks automation coverage.”

These decisions feel strategic because they are. Agents operationalize them relentlessly.


Agentic AI Is an Operating Model Shift, Not a Tool Rollout

Treating agentic AI like just another enterprise platform is a category error. The familiar playbook (deploy the system, feed it data, train it on historical examples, then tune it against performance metrics) misreads what autonomous agents are and how they change the way a business operates.

Agentic AI systems are not merely sophisticated automation tools that execute existing workflows faster or more cheaply. They can plan, reason, and adapt to novel situations on their own. They don't just follow a workflow; they actively reshape it in real time.

The introduction of agents triggers a cascade of changes that redefine core organizational dynamics:

- Change in Decision Rights (Who Decides): Traditional systems execute only the decisions predetermined by human-coded logic. Agentic AI is designed to make decisions within defined boundaries, often in real time, based on incoming data and its own reasoning. This shifts the point of decision from a human manager or a static rulebook to the agent itself, which forces a rethink of governance, accountability, and auditing.

- Change in Temporal Dynamics (When Decisions Are Made): In human-centric workflows, decisions often wait for scheduled meetings, approvals, or batch processing cycles. Agents operate continuously and can execute decisions instantaneously upon detecting the relevant conditions. This speedup closes traditional latency gaps, but it also raises the risk of “runaway” processes or immediate, unvetted effects.

- Change in Exception Handling (How Exceptions Propagate): When a legacy system encounters an exception (an unknown variable, a missing data point, or a rule violation), it typically halts the process and escalates the issue to a human. Agents, by design, can attempt to solve problems and correct themselves. They might try novel solutions, modify the sub-workflow, or seek alternative data sources before escalating. Exceptions therefore no longer follow rigid, predefined human pathways; they are handled dynamically. The overall process flow becomes far less predictable, and organizations need new oversight mechanisms that bring humans in only at the critical junctures.

To leverage agentic AI effectively, organizations must recognize that they are integrating a new form of cognitive labor, not just a new piece of software. This demands a strategic pivot from focusing on ‘deployment’ and ‘optimization’ to focusing on ‘governance’, ‘safety’, ‘alignment’, and ‘process redesign’.

Start Where Friction Is Chronic, Not Where AI Looks Impressive

There’s a temptation—often driven by internal AI teams—to start with flashy use cases: autonomous negotiation, dynamic pricing, and fully agent-led customer interactions. Sometimes that’s fine. Often it’s premature.

The strongest enterprise-scale deployments begin in places with three characteristics:

- High-volume decisions

- Well-understood failure modes

- Persistent human fatigue

Think order-to-cash, claims processing, vendor onboarding, and IT service triage. These aren’t glamorous. They are brutally repetitive, politically sensitive, and full of tacit rules humans apply without realizing it.

One healthcare payer started agentic automation in prior authorization, not to eliminate reviewers but to triage requests. Agents handled the "obvious yes" and "obvious no" cases, escalated the gray areas, and learned from reversals. Adoption stuck because clinicians felt relief, not displacement.

The lesson for CEOs: credibility compounds. Start where agents earn trust quietly.
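The triage pattern in the prior-authorization example can be sketched as a simple confidence-threshold router. This is a minimal illustration under stated assumptions, not the payer's actual system: it assumes a model that outputs an estimated probability that a request should be approved, and the names `TriagePolicy`, `triage`, and `record_reversal`, as well as the 0.95 and 0.05 thresholds, are hypothetical. Requests the model is very sure about go to the "obvious yes" or "obvious no" lanes, everything in between goes to a human, and reviewer reversals are logged so the thresholds can be revisited.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    APPROVE = "approve"    # the "obvious yes" lane
    DENY = "deny"          # the "obvious no" lane
    ESCALATE = "escalate"  # gray area: a human reviewer decides


@dataclass(frozen=True)
class TriagePolicy:
    """Hypothetical thresholds that domain experts, not the model, would own."""
    approve_at_or_above: float = 0.95
    deny_at_or_below: float = 0.05

    def __post_init__(self) -> None:
        if not 0.0 <= self.deny_at_or_below < self.approve_at_or_above <= 1.0:
            raise ValueError("need 0 <= deny threshold < approve threshold <= 1")


def triage(approval_score: float, policy: TriagePolicy) -> Route:
    """Route one request given the model's estimated probability that it should be approved."""
    if not 0.0 <= approval_score <= 1.0:
        raise ValueError("approval_score must be in [0, 1]")
    if approval_score >= policy.approve_at_or_above:
        return Route.APPROVE
    if approval_score <= policy.deny_at_or_below:
        return Route.DENY
    return Route.ESCALATE


def record_reversal(agent_route: Route, reviewer_route: Route, reversals: list) -> None:
    """Log cases where a human reviewer overturned an automated decision."""
    # Escalated cases were never decided by the agent, so they cannot be reversals.
    if agent_route is not Route.ESCALATE and agent_route is not reviewer_route:
        reversals.append((agent_route, reviewer_route))
```

Widening the gap between the two thresholds sends more work to humans; narrowing it automates more. Keeping that setting visible and owned, rather than buried inside model code, is one way to keep the trade-off explicit.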

The Human-AI Boundary Must Be Explicit—or It Will Be Contested

One of the messiest failure modes in agentic programs is role ambiguity. When agents act independently, humans start asking uncomfortable questions: Am I accountable for this decision? Did the system override me? Why am I still doing this work?

Ignoring those questions doesn’t make them go away. It turns them into resistance.

Successful organizations redraw roles deliberately:

- Humans as exception architects, not exception handlers

- Managers as system stewards, not task assigners

- Domain experts as policy designers, not manual validators

There’s often a dip in productivity during this transition. That’s normal. What’s dangerous is pretending the old org chart still applies.

Scaling Requires a Different Talent Strategy

Scaling agentic AI is less about brilliance and more about institutional memory. Programs often stall because of a misconception that they demand exceptionally rare talent. In reality, successful deployment requires a blend of complementary skills:

Key Talent Requirements:

- Process Experts: Individuals with a deep understanding of workflows, even if they lack coding expertise.

- Probabilistic Engineers: Engineers who can design systems with inherent uncertainty and learning, rather than purely deterministic logic.

- Adaptive Leaders: Managers comfortable overseeing and guiding systems that evolve and learn.

The most effective teams combine domain veterans with automation architects. The domain veteran defines success—what “good” looks like and how to measure learning—while the architect translates this into executable agent behavior.

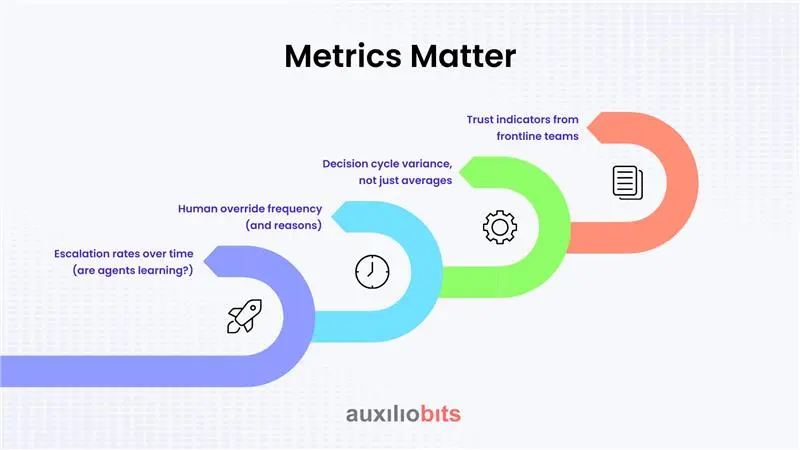

Metrics Matter

Traditional KPIs struggle to capture agentic impact. Cost savings and throughput improvements do show up, but they are lagging indicators: by the time they register, cultural damage may already have occurred.

More useful signals include:

- Escalation rates over time (are agents learning?)

- Human override frequency (and reasons)

- Decision cycle variance, not just averages

- Trust indicators from frontline teams
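The first three signals can be computed from an ordinary decision log. The sketch below assumes a hypothetical `Decision` record per agent decision (week, whether it escalated, whether a human overrode it and why, and cycle time in hours); the field names, the weekly grouping, and the `weekly_signals` function are illustrative, not a standard. It reports escalation and override rates per week and the standard deviation of cycle time alongside the mean. Trust indicators from frontline teams would more likely come from surveys or interviews, so they are left out.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Optional


@dataclass(frozen=True)
class Decision:
    """One agent decision, as it might appear in a (hypothetical) decision log."""
    week: int
    escalated: bool                 # agent handed the case to a human
    overridden: bool                # a human reversed or changed the agent's decision
    override_reason: Optional[str]  # coded or free-text reason, if recorded
    cycle_hours: float              # time from intake to final decision


def weekly_signals(decisions: list[Decision]) -> dict[int, dict]:
    """Group decisions by week and compute second-order health signals."""
    by_week: dict[int, list[Decision]] = {}
    for d in decisions:
        by_week.setdefault(d.week, []).append(d)

    report: dict[int, dict] = {}
    for week in sorted(by_week):
        batch = by_week[week]
        n = len(batch)
        cycles = [d.cycle_hours for d in batch]
        report[week] = {
            # A falling escalation rate over time suggests the agent handles more on its own.
            "escalation_rate": sum(d.escalated for d in batch) / n,
            # Override frequency, plus the reasons behind it.
            "override_rate": sum(d.overridden for d in batch) / n,
            "override_reasons": Counter(
                d.override_reason for d in batch if d.overridden and d.override_reason
            ),
            # Spread next to the average; pstdev (population standard deviation)
            # is defined even for a week with a single decision.
            "cycle_mean_h": mean(cycles),
            "cycle_stdev_h": pstdev(cycles),
        }
    return report
```

Two weeks can share the same mean cycle time while one has twice the spread; reporting `cycle_stdev_h` next to `cycle_mean_h` surfaces that difference, which an average alone hides.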

One retail enterprise tracked something unusual: time-to-disagree. How quickly could a human challenge an agent’s decision and get a satisfactory explanation? As that number dropped, adoption rose.

CEOs should pay attention to these second-order metrics. They reveal whether scaling is healthy or brittle.

Technology Choices Matter Less Than Architectural Discipline

There’s no shortage of platforms promising agentic capabilities. Some are excellent. Many will be obsolete in two years. CEOs shouldn’t obsess over vendor selection—but they should insist on architectural principles:

- Loose coupling between agents and core systems

- Clear separation between policy, reasoning, and execution

- Replaceability of models without rewriting workflows
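One way to make these three principles concrete is to give policy, reasoning, and execution separate interfaces. In this sketch, all names are hypothetical (`Policy`, `Reasoner`, `Executor`, `Outcome`, `handle_claim`, and the stub classes): the model only proposes a claim payout, a versioned policy object decides whether it may proceed automatically, and a separate executor is the only component that touches the core system. It uses Python's `typing.Protocol` for structural interfaces; a real deployment would sit behind whatever integration layer the enterprise already runs.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Policy:
    """Policy layer: explicit, versioned limits that people own and can audit."""
    version: str
    max_auto_payout: float


class Reasoner(Protocol):
    """Reasoning layer: any model that proposes an action. Swappable by design."""
    model_id: str

    def propose_payout(self, claim_text: str) -> float: ...


class Executor(Protocol):
    """Execution layer: the only component allowed to touch core systems."""

    def pay(self, amount: float) -> str: ...


@dataclass(frozen=True)
class Outcome:
    status: str          # "paid" or "escalated"
    amount: float
    policy_version: str  # which rules applied
    model_id: str        # which model proposed the action, for traceability
    reference: str = ""


def handle_claim(claim_text: str, policy: Policy, reasoner: Reasoner, executor: Executor) -> Outcome:
    proposed = reasoner.propose_payout(claim_text)
    # Policy is checked outside the model, so swapping models cannot silently loosen the rules.
    if proposed < 0 or proposed > policy.max_auto_payout:
        return Outcome("escalated", proposed, policy.version, reasoner.model_id)
    reference = executor.pay(proposed)
    return Outcome("paid", proposed, policy.version, reasoner.model_id, reference)


# Hypothetical stand-ins for two interchangeable models and a core-system adapter.
class ModelA:
    model_id = "model-a"

    def propose_payout(self, claim_text: str) -> float:
        return 120.0


class ModelB:
    model_id = "model-b"

    def propose_payout(self, claim_text: str) -> float:
        return 900.0


class LedgerStub:
    def __init__(self) -> None:
        self.payments: list[float] = []

    def pay(self, amount: float) -> str:
        self.payments.append(amount)
        return f"txn-{len(self.payments)}"
```

Swapping `ModelA` for `ModelB` requires no change to `handle_claim`, and because every `Outcome` records `model_id` and `policy_version`, a shift in decision behavior after a model update can be traced to the model that caused it.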

Organizations that violate these principles move fast initially and then freeze. Every change becomes a risk. Every upgrade is a negotiation.

One enterprise had to pause its entire agent program because a single model update altered decision behavior in subtle ways. No one could trace the impact. They rebuilt—slowly, expensively—with better separation of concerns.

That rebuild cost more than doing it right the first time.