Key Takeaways

- Co-creation thrives on tension, not harmony. The most innovative outcomes emerge when human intuition and machine logic challenge each other.

- Agents aren’t tools—they’re creative participants. True LLM agents remember, reason, and debate, bringing dynamic context into design.

- Feedback must be emotional and descriptive. The “why it feels wrong” matters more than numeric ratings or binary approval.

- Governance and memory are non-negotiable. Without structured feedback loops and contextual storage, collaboration collapses.

- Ego is the final barrier. Teams that redefine ownership as stewardship unlock genuine machine-human creativity.

Some teams still think design is a sequence of steps. You research, sketch, test, iterate—like running laps around a track. But that’s not how design actually happens, not anymore. Today, half the creative conversation happens with something that doesn’t even have a face: an LLM agent that talks back, argues, and occasionally outsmarts you.

Designers often dismiss AI critique, feeling it doesn’t align with human thought processes—and sometimes they are correct. However, the AI can also offer valid insights. Co-creation is not about transferring the design process to machines; it’s about including them as collaborators at the decision-making table, allowing them to offer input, even if challenging.

What “Co-Creation” Really Means

Beyond the typical marketing hype, co-creation here isn’t a simple case of “AI assisting designers.” Instead, it’s a continuous, dynamic struggle—a tug-of-war between the cold logic of the machine and the nuanced taste of the human. This process is far more profound than mere tool use; it is a collaborative dialectic where each iteration challenges the assumptions of the last, pushing the boundaries of creative possibility.

This iterative process unfolds as follows:

- The Initial Prompt and Machine Logic: The human designer initiates the process with a core concept, a set of constraints, or a visual mood board. The AI, acting on its vast training data and algorithms, processes this input. Its initial output is characterized by cold logic—solutions that are statistically probable, technically sound, and often highly efficient, yet potentially devoid of emotional resonance or the subtle “flair” the designer seeks.

- Human Critique and Refinement: The designer reviews the AI’s generation. This is where the nuanced taste of the human comes into play. They don’t just accept or reject; they identify the elements that are structurally correct but aesthetically lacking—the uncanny valley of design. They articulate why a particular shade feels wrong, how a composition fails to evoke the desired feeling, or where the AI missed the cultural context.

- The Iterative Struggle (Tug-of-War): The designer feeds their critique back into the system, often by manipulating the AI’s output directly or by refining the prompt with highly specific, qualitative language. The AI then has to adapt its output to accommodate these non-quantifiable, subjective human preferences. This creates the dynamic struggle: the AI strains against its logical bounds, while the human pushes the AI to transcend pure efficiency for the sake of artistry.

- Emergence of Novelty: Genuine co-creation arises from the tension of this “tug-of-war.” The AI, reacting to the human’s abstract feedback, frequently generates unforeseen variations. This synthesis leads to design solutions—neither purely human nor purely machine—that would have been impossible for either party to conceive in isolation. The final design is, therefore, a blend of intelligence types: algorithmically robust yet deeply and emotionally resonant.
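The four stages above can be read as a loop: generate, critique, feed the critique back, repeat until the designer accepts a draft. Below is a minimal sketch of that loop in Python. Everything in it is an assumption made for illustration: `run_loop`, `fake_generate`, and `fake_critique` are hypothetical names, and the two `fake_` functions are stand-ins for a real model call and a real designer review.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Round:
    draft: str
    critique: Optional[str]  # None means the designer accepted the draft


@dataclass
class Session:
    brief: str
    rounds: List[Round] = field(default_factory=list)


def run_loop(
    brief: str,
    generate: Callable[[str, List[str]], str],
    critique: Callable[[str], Optional[str]],
    max_rounds: int = 5,
) -> Session:
    """Alternate machine drafts and human critiques until a draft is accepted."""
    session = Session(brief)
    notes: List[str] = []  # accumulated qualitative feedback
    for _ in range(max_rounds):
        draft = generate(brief, notes)
        note = critique(draft)
        session.rounds.append(Round(draft, note))
        if note is None:  # accepted, stop iterating
            break
        notes.append(note)
    return session


# Hypothetical stand-in for a model call: it only counts the critiques it received.
def fake_generate(brief: str, notes: List[str]) -> str:
    return f"{brief} (v{len(notes) + 1}, addressing {len(notes)} critique(s))"


# Hypothetical stand-in for the designer: accepts the third version.
def fake_critique(draft: str) -> Optional[str]:
    return None if "v3" in draft else "the eye doesn't rest anywhere"


session = run_loop("onboarding screen", fake_generate, fake_critique)
```

The point of the structure is that every critique stays attached to the draft that provoked it, so the history of the tug-of-war can be inspected later rather than lost in a chat scroll.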

The Anatomy of a Human–LLM Design Loop

You can break this new ecosystem into three feedback threads:

- Human ↔ Model: the obvious one. The designer talks, the model replies, critique by critique.

- Model ↔ User Data: where the agent learns directly from real user sentiment and behavior. It doesn’t just simulate personas; it reads what people actually said and extrapolates patterns.

- Human ↔ User: the contextual check. Humans re-interpret what the AI infers, filter out nonsense, and decide whether logic or emotion should win.

When those three loops start feeding each other, the process stops feeling like workflow management and starts feeling more like jamming with a band—you play off each other’s rhythm, not a script.

Stop Calling LLMs “Tools”

We need to rethink the language we use around advanced generative models. Calling them simple “AI tools” fundamentally misrepresents their capability and potential. They are not merely sophisticated implements; they are closer to colleagues—colleagues, perhaps, with selective memory, no desire for promotion, and blessedly, no ego. This subtle yet profound distinction matters.

A traditional tool is entirely subservient. It performs the function it is instructed to do, with no deviation, no argument, and no independent thought. If you tell a hammer to hit a nail, it hits the nail. An intelligent agent, however, is designed to argue back. It might challenge the premise of the instruction, suggest a superior approach, or highlight a constraint you hadn’t considered. It moves the interaction from command-and-control to collaboration and iteration.

For an AI system to ascend from a “tool” to an “agent,” it must overcome the crippling limitation of short-term memory. A model that forgets context every time you refresh the chat window is not an agent; it’s a parrot—capable of mimicking language patterns but incapable of continuous, informed dialogue.

True agency demands robust structure and memory. This memory is not a simple transaction log; it is a rich, persistent context layer:

- Context Embedding: A system for encoding the ongoing conversation, the project’s brief, and accumulated constraints into a dense vector space that the model can query efficiently.

- Retrieval from Past Decisions: The ability to look back at previous iterations, design choices, and rationale. “Why did we rule out the red button last Tuesday?” An agent should know.

- User Sentiment Vectors: Incorporating real-time or accumulated understanding of user satisfaction, frustration, and preference to steer the conversation and output proactively, not just reactively.
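To make the memory idea concrete, here is a rough sketch of a decision store that can answer a question like the red-button one. It is a toy under loud assumptions: `embed` is a word-count stand-in for a real embedding model, and `Decision` and `DecisionMemory` are hypothetical names, not part of any library.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class Decision:
    summary: str
    rationale: str


class DecisionMemory:
    """Stores past decisions and retrieves the ones closest to a question."""

    def __init__(self) -> None:
        self._items: List[Tuple[Decision, Counter]] = []

    def record(self, decision: Decision) -> None:
        vector = embed(decision.summary + " " + decision.rationale)
        self._items.append((decision, vector))

    def recall(self, question: str, k: int = 1) -> List[Decision]:
        q = embed(question)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [decision for decision, _ in ranked[:k]]


memory = DecisionMemory()
memory.record(Decision("Ruled out the red button", "Red read as an error state in user tests"))
memory.record(Decision("Chose a two-step onboarding flow", "Users abandoned the long form"))

best = memory.recall("Why did we rule out the red button?")[0]
print(best.rationale)
```

In a real setup the word counts would be swapped for learned embeddings and the list for a vector index, but the shape stays the same: record the decision together with its rationale, then retrieve by similarity to the question being asked.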

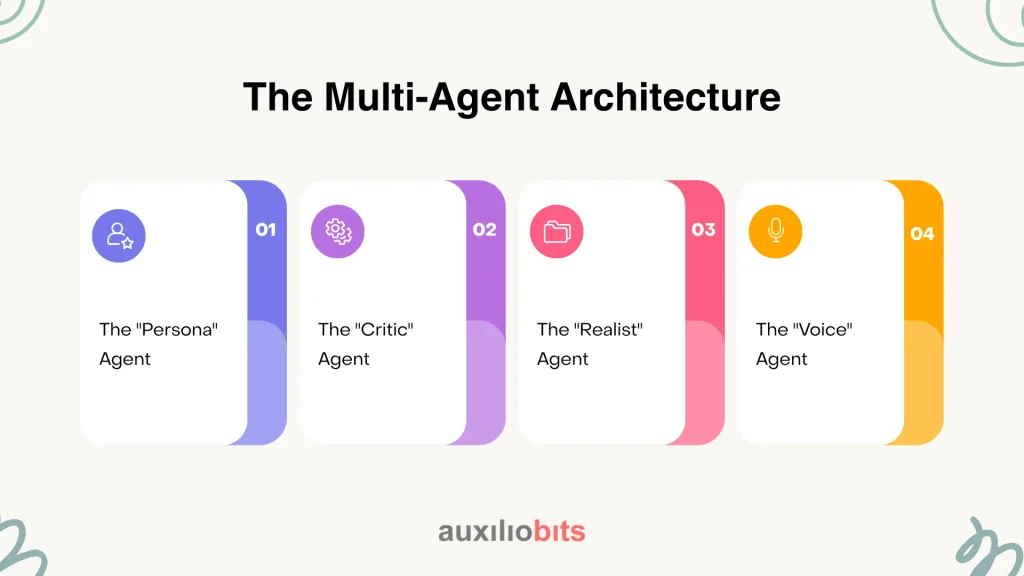

The Multi-Agent Architecture

The most significant leap in the evolution of AI collaboration is the multi-agent layer. Real-world creative and technical teams thrive on productive tension. The friction between competing priorities—a sleek user experience versus rigid technical feasibility, or ambitious aesthetics versus production cost—is what forges a truly balanced and durable product.

This dynamic can now be digitally replicated through a structured architecture of specialized agents, each embodying a core organizational tension:

- The “Persona” Agent: This agent acts as the unyielding voice of the user. It speaks from the perspective of their goals, pain points, and usage context, constantly challenging decisions that compromise usability or accessibility.

- The “Critic” Agent: Focused on quality and standards, this agent cares primarily about heuristics. It guards against common cognitive biases, ensures adherence to established design principles, and checks the output against industry best practices and accessibility standards.

- The “Realist” Agent: Serving as the counterweight to ambition, the Realist keeps things buildable. It injects constraints related to engineering effort, cloud budget, existing infrastructure, and time-to-market, ensuring the suggested solution is feasible and scalable.

- The “Voice” Agent: Charged with maintaining brand identity and integrity, this agent guards the tone. It ensures all generated copy, documentation, or interface text adheres to the specified voice and style guide—be it authoritative, playful, or empathetic—preventing stylistic drift.

By orchestrating this digital tension, the system moves beyond simply generating an answer and begins to refine, debate, and optimize a solution, mirroring the complexity and robustness of a high-functioning human team.
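As a rough illustration of how the four roles might be wired together, the sketch below runs one proposal past each agent and collects the objections. The agents here are simple rule-based stand-ins rather than LLM calls, and the `Proposal` fields, the ten-day budget, and the "no exclamation marks" voice rule are all invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Proposal:
    onboarding_steps: int
    contrast_ratio: float  # text-to-background contrast, e.g. 4.5 means 4.5:1
    engineering_days: int
    copy: str


Agent = Callable[[Proposal], Optional[str]]  # returns an objection, or None


def persona(p: Proposal) -> Optional[str]:
    # Voice of the user: hypothetical rule about onboarding length.
    if p.onboarding_steps > 3:
        return "Too many steps before the user reaches anything useful."
    return None


def critic(p: Proposal) -> Optional[str]:
    # WCAG 2.x level AA asks for at least 4.5:1 contrast on normal-size text.
    if p.contrast_ratio < 4.5:
        return "Text contrast is below 4.5:1."
    return None


def realist(p: Proposal, budget_days: int = 10) -> Optional[str]:
    # Hypothetical engineering budget for the example.
    if p.engineering_days > budget_days:
        return f"Estimated {p.engineering_days} days exceeds the {budget_days}-day budget."
    return None


def voice(p: Proposal) -> Optional[str]:
    # Hypothetical style rule: the brand voice is calm, so no exclamation marks.
    if "!" in p.copy:
        return "Copy is louder than the style guide allows."
    return None


AGENTS: Dict[str, Agent] = {
    "persona": persona,
    "critic": critic,
    "realist": realist,
    "voice": voice,
}


def review(p: Proposal, agents: Dict[str, Agent] = AGENTS) -> Dict[str, str]:
    """Ask every agent for an objection and keep only the ones raised."""
    objections = {}
    for name, agent in agents.items():
        objection = agent(p)
        if objection is not None:
            objections[name] = objection
    return objections


draft = Proposal(onboarding_steps=5, contrast_ratio=3.9, engineering_days=8, copy="Welcome aboard.")
for name, objection in review(draft).items():
    print(f"{name}: {objection}")
```

Keeping each agent as a separate function with a single concern is the design choice that matters: the objections stay attributable to a role, and a human can decide which tension should win.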

When It Works, and When It Really Doesn’t

Truth is, it doesn’t always work. It works when you’re solving ambiguous problems—like deciding how users feel about a new onboarding journey. It works when you have partial but structured data—old design notes, inconsistent feedback, scattered survey results. The AI can stitch threads together.

It falls apart when things depend on touch, space, or timing. You can’t ask an LLM how a phone feels in someone’s hand or how long a micro-interaction should last before it feels sluggish. The model will guess.

Also, never trust an ungrounded model in co-creation. Without context, it’s a very confident liar. It’ll generate a perfectly formatted argument for an idea that has no basis in user need. The language makes it sound credible—that’s the trap.

And if your team starts letting the machine decide because “it seems more objective,” stop. Design is not democracy, and it’s definitely not data absolutism.

The Texture of Human Feedback

Most people think “human feedback” means scoring or choosing between outputs. In design, it’s subtler. You’re not just saying what is wrong; you’re saying why it feels wrong.

Example:

- Instead of “the layout is confusing,” you note, “the eye doesn’t rest anywhere.”

- Instead of “the color palette is dull,” you write, “this tone doesn’t carry the emotional weight of the brand.”

Those nuances teach the model far more than simple labels. Over time, it starts recognizing patterns of taste. That’s how co-creation evolves—from mere iteration to something that approximates shared judgment.

The AI starts anticipating your reasoning—not predicting your taste, but contextualizing it. When that happens, you realize the line between feedback and training has blurred.
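One way to keep the "why" from getting lost is to store it as its own field instead of folding it into a rating. The sketch below is an assumed structure, not a standard one: `Critique` and `to_prompt_notes` are hypothetical names, and the function only formats notes for inclusion in a later prompt; it does not train anything.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Critique:
    element: str  # what the note is about
    verdict: str  # the bare label a rating form would capture
    why: str      # the descriptive reason a rating form would lose


def to_prompt_notes(critiques: List[Critique]) -> str:
    """Render critiques as bullet notes that keep the reason next to the label."""
    return "\n".join(f"- {c.element}: {c.verdict}, because {c.why}" for c in critiques)


notes = to_prompt_notes([
    Critique("layout", "confusing", "the eye doesn't rest anywhere"),
    Critique("color palette", "dull", "this tone doesn't carry the emotional weight of the brand"),
])
print(notes)
```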

The System Behind the Scenes

A functioning co-creation setup isn’t just a chat window with a smart backend. You need a few invisible layers working quietly underneath:

- Interaction Layer: where humans and agents exchange ideas, embedded inside tools like Figma or Notion.

- Feedback Layer: tagging what got used, what didn’t, and why.

- Memory Layer: an evolving database that remembers what decisions were made and how users reacted.

- Governance Layer: attribution, bias checks, and the occasional human sanity review (“did this idea come from us or the bot?”).

Without that scaffolding, co-creation devolves into chaos. With it, it becomes something akin to creative infrastructure.
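The feedback and governance layers can start out very small. The sketch below assumes a flat log of ideas tagged with origin, whether they were used, and why, then answers the "did this idea come from us or the bot?" question in aggregate. The record fields and the `attribution_summary` helper are hypothetical, chosen only to illustrate the idea.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class IdeaRecord:
    idea: str
    origin: str  # "human" or "agent"
    used: bool   # did the idea make it into the design?
    reason: str  # why it was kept or dropped


def attribution_summary(log: List[IdeaRecord]) -> Dict[str, float]:
    """Share of used ideas per origin; empty if nothing was used."""
    used = Counter(record.origin for record in log if record.used)
    total = sum(used.values())
    if total == 0:
        return {}
    return {origin: count / total for origin, count in used.items()}


log = [
    IdeaRecord("Two-step onboarding", "agent", True, "matched survey complaints about length"),
    IdeaRecord("Muted accent color", "human", True, "carries the brand's emotional weight"),
    IdeaRecord("Progress bar on every screen", "agent", False, "felt noisy in review"),
    IdeaRecord("Skip button on step one", "human", True, "requested in user interviews"),
]

summary = attribution_summary(log)
print(summary)
```

Keeping the `reason` field mandatory is the part that earns its keep: the same log serves the feedback layer (what got used and why) and the governance layer (who proposed it).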

The Human Problem: Ego and Ownership

No one likes being told by an algorithm that their design doesn’t work. It bruises the ego. I’ve seen seasoned designers quietly tweak their concepts so the AI “agrees” with them, like arguing with your boss in a polite tone.

The trick is to stop thinking of ownership as authorship. In co-creation, ownership means stewardship: guiding the system toward a shared aesthetic outcome. The ego shift is uncomfortable but necessary.

If teams can handle that, they unlock something remarkable. When a designer and an agent disagree, it’s rarely about right or wrong—it’s about intent vs. pattern. The argument itself refines both.

The Organizational Ripple

Once this co-creative rhythm settles in, it doesn’t stay confined to design. PMs start using it for prioritization, marketing teams use it for campaign tone calibration, and support teams begin to analyze conversational friction using the same framework.

What starts as a design experiment becomes a company-wide behavioral shift—decisions become more conversational, less top-down. Everyone starts thinking in feedback loops.

It’s subtle but transformative. You stop producing and start negotiating with intelligence, human and synthetic alike.

A Few Lessons Worth Keeping

After seeing this play out across different teams and industries, a few takeaways stick:

- Don’t chase alignment too fast. Disagreement is the design.

- Train models on the why, not just the what. Context outlasts correctness.

- Over-personalized models become parrots. Keep a bit of friction alive.

- Measure tone, not just clicks. Emotional resonance is a metric, too.

- Use your flops as feedback fuel—the AI learns more from them than from your polished work.

Conclusion

Sometimes the model gets it completely wrong—tone-deaf, overconfident, bizarrely literal. Other times, it articulates a design principle you didn’t even realize you believed in. Both moments are valuable.

Co-creation isn’t about efficiency. It’s about expanding the boundary of what “creative reasoning” can mean. And if we get it right, design stops being a static process and becomes an ongoing conversation—between intuition and inference, taste and telemetry, us and the systems we’ve built to challenge us.