Key Takeaways

- AI agents turn strategy into a live rehearsal, not a static forecast. Instead of producing one-off models, agents simulate dynamic business behavior—showing how competitors, markets, and customers might respond in real time.

- Executives gain decision velocity through dynamic trade-off exploration. They can test multiple “what-if” narratives quickly, enabling faster and more confident moves in uncertain environments.

- Trust comes from transparency, not complexity. The best agentic systems reveal their reasoning, assumptions, and failure triggers—building credibility with leadership teams.

- Human strategists still define the quality of simulation. AI agents explore scenarios, but it’s human judgment that crafts the right questions and interprets the strategic nuances.

- Agentic simulation redefines the rhythm of strategy. Boardrooms are shifting from reviewing what happened to rehearsing what could happen—turning uncertainty into a source of foresight and competitive advantage.

Almost every senior leader says some version of the same thing: “We don’t need more data. We need to understand what it means when things change.”

The dashboards, KPIs, and weekly analytics reviews are fine for routine steering. But when volatility hits—when a new competitor enters from nowhere or when the regulatory mood flips overnight—data feels like fog. Everyone’s staring at the same numbers, yet no one agrees on what they imply.

That’s the real gap. Scenario planning was meant to fill it, but most companies still treat it like a calendar ritual. Pull together a few analysts, run “best case / worst case” models, and publish a neat deck that ages badly within two months.

Lately, though, something different has been creeping into the conversation: autonomous agents that simulate strategic behavior instead of producing static forecasts. It’s not about prediction accuracy; it’s about decision rehearsal.


The Difference Between Models and Agents

Models are frozen logic. They hold assumptions still, so we can look at them. Agents move inside that logic, push against it, and see what breaks.

A traditional model might tell you, “If inflation rises by 3%, the margin will compress by 1.2%.” An agent system might go further: “If inflation rises 3%, your suppliers raise costs in Q2, logistics are delayed by two weeks, and customers switch to substitutes—what happens to your regional P&L?”

That’s not math; that’s behavior.

The most interesting part? These agents aren’t perfect predictors—they’re mirrors. They show how fragile your current strategy might be when the world stops behaving the way your spreadsheet thinks it should.
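A toy sketch can make the model-versus-agent contrast concrete. Below, a static model applies one fixed elasticity to the inflation shock from the example above, while three hypothetical agents (suppliers, logistics, customers) react in sequence to a shared world state. Every coefficient, and names like `World` and `run_agents`, are invented for illustration; this is not how any particular agent platform works.

```python
from dataclasses import dataclass, field

# All coefficients below are hypothetical, chosen only to illustrate the idea.
STATIC_SENSITIVITY = -0.4  # margin points lost per inflation point (1.2 / 3)


def static_model(inflation_pts: float) -> float:
    """Frozen logic: one fixed elasticity, no reactions from anyone."""
    return STATIC_SENSITIVITY * inflation_pts


@dataclass
class World:
    inflation_pts: float
    price: float = 100.0
    unit_cost: float = 60.0
    volume: float = 1000.0
    delay_weeks: int = 0
    log: list[str] = field(default_factory=list)

    def profit(self) -> float:
        return (self.price - self.unit_cost) * self.volume


def supplier_agent(w: World) -> None:
    # Hypothetical: suppliers pass 80% of inflation through to unit cost.
    w.unit_cost *= 1 + 0.8 * w.inflation_pts / 100
    w.log.append(f"suppliers raise unit cost to {w.unit_cost:.2f}")


def logistics_agent(w: World) -> None:
    # Hypothetical: at a 3-point shock or more, carriers slip two weeks.
    if w.inflation_pts >= 3:
        w.delay_weeks = 2
        w.volume *= 0.97  # sales lost during the delay
        w.log.append("logistics delayed by two weeks")


def customer_agent(w: World) -> None:
    # Customers switch to substitutes; delays make switching worse.
    churn = 0.01 * w.inflation_pts + 0.005 * w.delay_weeks
    w.volume *= 1 - churn
    w.log.append(f"customers churn {churn:.1%}")


def run_agents(inflation_pts: float) -> World:
    w = World(inflation_pts)
    # Each agent reacts to the state the previous agent left behind.
    for agent in (supplier_agent, logistics_agent, customer_agent):
        agent(w)
    return w


baseline = World(0.0).profit()
shocked = run_agents(3.0)
print(f"static model: margin moves {static_model(3.0):+.1f} points")
print(f"agent chain: profit {baseline:,.0f} -> {shocked.profit():,.0f}")
print("\n".join(shocked.log))
```

The point is not the numbers but the shape: in the agent version, customer churn depends on a delay that only exists because the logistics agent reacted first.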

How It’s Showing Up in the Real World

One consumer electronics company started using AI agents to test new market entry plans. Previously, they built linear financial models with 30 assumptions. Now, they run autonomous “market agents” that simulate competitor reactions, ad fatigue, and currency fluctuations.

Instead of producing a single forecast, the agents generate a range of narratives:

- Aggressive competitor response squeezes early margin but expands share after two quarters.

- Price sensitivity is higher in urban clusters; bundling stabilizes the revenue curve.

- Currency slide offsets 60% of the expected gain unless hedged by Q3.

What changed wasn’t the accuracy of prediction—it was the executives’ ability to see their strategy breathe. They could experiment with cause and effect without waiting for quarterly reports.

That’s what decision velocity feels like in practice.

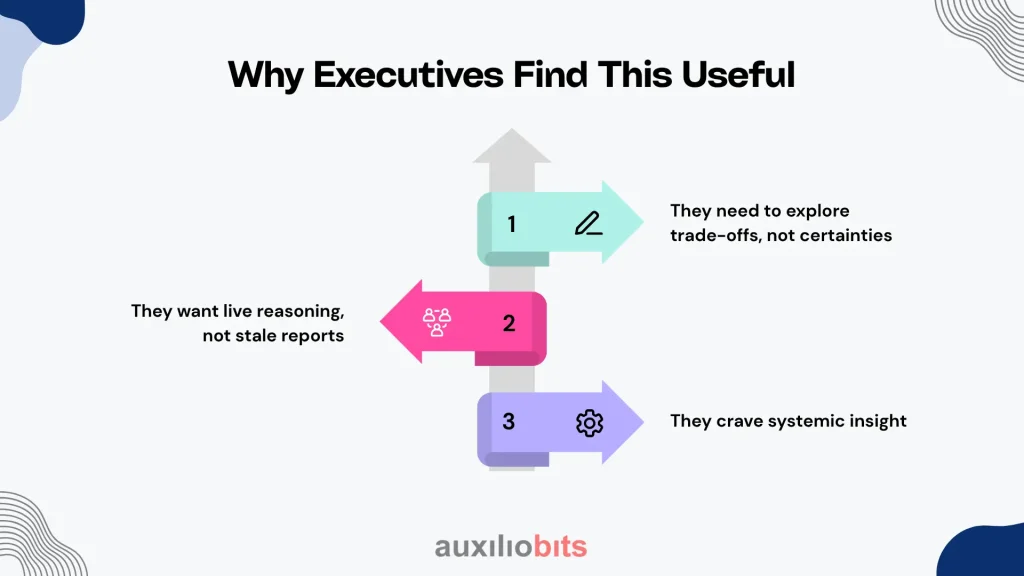

Why Executives Find This Useful

Three reasons come up over and over in private discussions:

- They need to explore trade-offs, not certainties. Business leaders rarely want one “answer.” They want to see how different moves play out before they commit real money.

- They want live reasoning, not stale reports. AI agents can update scenarios when a new data point lands—say, a shipping delay or policy change—without rebuilding the model from scratch.

- They crave systemic insight. Traditional analytics treat problems separately. Agents expose connections—how a logistics delay quietly turns into a revenue problem two quarters later.

Executives finally get something that feels closer to intuition—but backed by machine rigor.

The Mechanics Behind It

Behind the scenes, these systems are less mystical than vendors claim. Think of it as five moving parts working together:

- Data ingestion: structured data from ERP or CRM systems meets unstructured sources such as news, market chatter, and regulatory feeds.

- Knowledge mapping: relationships are drawn between suppliers, currencies, products, and markets so agents can reason in context.

- Simulation sandbox: a virtual environment where multiple agents “act” and their consequences are recorded.

- Collaborating agents: each specializes in risk, market, finance, or operations. They sometimes disagree, and that tension produces useful insight.

- Executive layer: instead of a dashboard, you get narrative feedback such as “If you delay launch by one month, risk exposure drops 15%, but the cost of delay exceeds the savings.”

It’s not science fiction; it’s a digital rehearsal room for strategy.
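The five moving parts can be sketched as a small pipeline. This is a minimal illustration under loud assumptions: the event fields, the toy knowledge map and its weights, the two agents and their thresholds, and every function name are hypothetical, and a real system would use far richer models at each stage.

```python
from dataclasses import dataclass
from typing import Callable


# 1. Data ingestion: ERP rows and news items normalized into one event type.
@dataclass(frozen=True)
class Event:
    source: str        # e.g. "ERP" or "news" (illustrative labels)
    variable: str
    change_pct: float


# 2. Knowledge map: variable -> [(affected variable, weight)]. Toy and acyclic.
KNOWLEDGE_MAP: dict[str, list[tuple[str, float]]] = {
    "fx_rate": [("import_cost", 0.6)],
    "import_cost": [("gross_margin", -0.5)],
    "shipping_delay": [("revenue", -0.3)],
}


# 3. Simulation sandbox: push every shock along the map's edges.
def propagate(events: list[Event]) -> dict[str, float]:
    effects: dict[str, float] = {}
    frontier = [(e.variable, e.change_pct) for e in events]
    while frontier:  # terminates because the toy map has no cycles
        var, delta = frontier.pop()
        effects[var] = effects.get(var, 0.0) + delta
        for target, weight in KNOWLEDGE_MAP.get(var, []):
            frontier.append((target, delta * weight))
    return effects


# 4. Collaborating agents: each reads the same effects through its own lens.
Verdict = tuple[str, str]
Agent = Callable[[dict[str, float]], Verdict]


def finance_agent(effects: dict[str, float]) -> Verdict:
    ok = effects.get("gross_margin", 0.0) > -1.0  # hypothetical tolerance
    return ("finance", "proceed" if ok else "delay")


def risk_agent(effects: dict[str, float]) -> Verdict:
    ok = effects.get("revenue", 0.0) > -2.0  # hypothetical tolerance
    return ("risk", "proceed" if ok else "delay")


# 5. Executive layer: narrative output instead of a dashboard.
def executive_summary(effects: dict[str, float], agents: list[Agent]) -> str:
    verdicts = [agent(effects) for agent in agents]
    lines = [f"{name} agent recommends: {call}" for name, call in verdicts]
    if len({call for _, call in verdicts}) > 1:
        lines.append("Agents disagree; review the assumptions behind each view.")
    return "\n".join(lines)


events = [Event("news", "fx_rate", 5.0), Event("ERP", "shipping_delay", 5.0)]
print(executive_summary(propagate(events), [finance_agent, risk_agent]))
```

With these inputs the finance agent recommends delaying while the risk agent recommends proceeding, so the executive layer flags the disagreement instead of averaging it away.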

When It Works Beautifully

The energy and manufacturing sectors have taken to this faster than most. In one energy firm’s pilot, agents modeled potential carbon-pricing regimes under different political outcomes. At the same time, a financial agent tested the firm’s capital plans against each policy variant. Another agent tracked how consumer sentiment toward renewables could affect market share.

By the end, executives weren’t looking at a single forecast—they were studying families of futures.

The decision they made was messy but informed: invest moderately in transition assets now, while keeping capital flexible for faster shifts later. A single-point forecast would probably not have surfaced that middle ground; the agents found it through the tension between competing scenarios.

The Trust Problem

Let’s be candid: many leaders still hesitate. They’ve been burned before by “AI dashboards” that produced confident nonsense.

The core issue isn’t the math; it’s opacity. When an agent says, “Option A carries 22% less exposure,” the immediate question is, why?

Good systems are starting to expose their reasoning—surfacing assumptions, alternative paths, and uncertainty ranges. Some even show what would flip the recommendation:

“If oil prices rise above $90, this path fails. Here’s what to watch.”

That sort of humility makes all the difference. The moment an AI system starts explaining itself like a seasoned analyst rather than an oracle, executives start listening.
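Surfacing a flip condition like the oil-price warning above can be as simple as searching for the input value at which the recommendation changes. The sketch below assumes two hypothetical options whose values fall linearly with the oil price and at most one crossover in the search range, and finds that crossover by bisection; the option functions and their coefficients are made up for illustration.

```python
from typing import Optional


def option_a_value(oil: float) -> float:
    # Hypothetical: option A is cheap but highly exposed to oil prices.
    return 500.0 - 4.0 * oil


def option_b_value(oil: float) -> float:
    # Hypothetical: option B costs more upfront but is largely hedged.
    return 230.0 - 1.0 * oil


def recommend(oil: float) -> str:
    return "A" if option_a_value(oil) >= option_b_value(oil) else "B"


def flip_point(lo: float, hi: float, tol: float = 0.01) -> Optional[float]:
    """Bisect for the oil price where the recommendation flips.

    Assumes at most one flip in [lo, hi]; returns None if the ends agree.
    """
    base = recommend(lo)
    if recommend(hi) == base:
        return None
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if recommend(mid) == base:
            lo = mid  # flip lies above mid
        else:
            hi = mid  # flip lies at or below mid
    return hi


x = flip_point(40.0, 150.0)
if x is not None:
    print(f"If oil prices rise above ${x:.0f}, option A stops being the better path.")
```

Bisection only works when the recommendation changes once over the range; with several crossovers, a coarse grid scan before bisecting would be the safer choice.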

Where Human Judgment Still Dominates

Even with powerful simulation, there’s an irreducibly human dimension to strategy. Agents don’t sense political tone, internal morale, or reputational nuance. They can’t read the room—or a regulator’s body language.

So, the best companies are turning the process inside out: humans frame the questions, and agents explore the answers.

It’s subtle but crucial. The quality of simulation depends on the sharpness of inquiry. If you ask lazy questions (“What if demand drops?”), you’ll get shallow scenarios. If you ask layered ones (“What if demand drops after the new tariff hits and before our supplier migration finishes?”), the system begins to reveal edge cases worth discussing.

That’s where the human strategist still earns their keep.

A Word of Caution

There’s a temptation to oversimulate. Some early adopters fell into it, running tens of thousands of micro-scenarios until they lost sight of what mattered. One retailer I consulted for ended up with a dashboard that visualized so many potential futures that it paralyzed everyone.

The irony: in trying to reduce uncertainty, they multiplied it.

The fix was surprisingly simple—tie every simulation to a decision boundary. For example, “only run scenarios that materially shift gross margin by more than 2%.” Once that constraint was in place, the noise dropped, and the insights became sharper.

It’s a good reminder: thinking faster isn’t the same as thinking better.
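A decision boundary like the retailer’s works as a filter applied before anyone looks at results. The sketch below generates random price and cost scenarios and keeps only those that move gross margin past the boundary. It assumes a 40% baseline margin and reads “2%” as two percentage points of gross margin; both, along with the scenario ranges and function names, are hypothetical choices.

```python
import random
from dataclasses import dataclass

BASELINE_GROSS_MARGIN = 0.40  # hypothetical 40% baseline
DECISION_BOUNDARY = 0.02      # assumption: "2%" read as 2 percentage points


@dataclass(frozen=True)
class Scenario:
    name: str
    price_change: float  # fractional change in selling price
    cost_change: float   # fractional change in unit cost


def gross_margin(price_change: float, cost_change: float) -> float:
    price = 1.0 + price_change
    cost = (1.0 - BASELINE_GROSS_MARGIN) * (1.0 + cost_change)
    return (price - cost) / price


def material(s: Scenario, boundary: float = DECISION_BOUNDARY) -> bool:
    """Keep a scenario only if it moves gross margin past the boundary."""
    shift = gross_margin(s.price_change, s.cost_change) - BASELINE_GROSS_MARGIN
    return abs(shift) > boundary


def generate(n: int, seed: int = 7) -> list[Scenario]:
    rng = random.Random(seed)  # seeded so runs are reproducible
    return [
        Scenario(f"s{i}", rng.uniform(-0.03, 0.03), rng.uniform(-0.05, 0.05))
        for i in range(n)
    ]


scenarios = generate(10_000)
kept = [s for s in scenarios if material(s)]
print(f"{len(kept)} of {len(scenarios)} scenarios cross the decision boundary")
```

The filter does not make any scenario more accurate; it only decides which ones deserve a leadership conversation.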

A Quiet Redefinition of Strategy

What’s emerging from all this isn’t a new toolset—it’s a new rhythm of decision-making. Strategy used to move at the pace of quarterly reports. Now it can evolve weekly, even daily.

Some organizations are already structuring their leadership meetings differently. Instead of reviewing past KPIs, they review active simulations. The conversation moves from “What happened?” to “What’s changing right now, and what’s the next move?”

That shift—from analysis to rehearsal—sounds small, but it rewires the executive brain. Once you start seeing your business as a dynamic environment with agents probing its limits, you stop fearing uncertainty. You start using it.

Final Reflections

Not every company needs this level of sophistication. For some, well-built dashboards and a smart analyst team are enough. But for firms operating in turbulent markets—finance, logistics, energy, or tech—the ability to simulate consequences before committing real capital is becoming a competitive edge.

The best implementations share a few habits:

- They focus on bounded, high-impact decisions, not general curiosity.

- They enforce transparency of reasoning so leaders trust what they see.

- They keep a human narrative layer—someone to interpret results in context.

In truth, the role of AI agents isn’t to decide. It’s to stretch the imagination responsibly. They let executives ask “what if?” without betting the company every time.

And maybe that’s the quiet revolution: boardrooms becoming rehearsal studios for the future—guided not by static slides, but by intelligent agents constantly testing how far a strategy can bend before it breaks.