Key Takeaways

- Exploratory research demands curiosity at scale — Swarm agents enable distributed, autonomous exploration across diverse data sources, uncovering insights that traditional analytics miss.

- Swarm intelligence thrives on diversity, not consensus — Controlled disagreement between agents surfaces hidden patterns and prevents echo chambers in enterprise research.

- Technology is secondary to coordination — Success depends on designing clear autonomy boundaries, healthy feedback loops, and meaningful human oversight, not just advanced AI frameworks.

- The human role evolves from analysis to interpretation — Analysts act as meta-agents who synthesize and contextualize collective insights rather than manually query data.

- Swarm systems build institutional curiosity — Beyond automation, they transform how organizations approach uncertainty, encouraging continuous discovery and adaptive thinking.

Every organization claims to “do research,” but most of it isn’t exploration—it’s confirmation. Teams run queries to validate assumptions they already believe. They define their metrics, set up dashboards, and track what they can count. But when the business question is vague—when you’re trying to understand a new market behavior, an unexpected customer pattern, or a sudden operational anomaly—those systems stumble.

Exploratory research has always required curiosity. The problem is, curiosity doesn’t scale. A few good analysts can follow threads and find insights, but not across dozens of data sources, thousands of conversations, or millions of transactions. That’s where swarm agents come into play.

No, not the science-fiction kind. In practice, these are small, semi-autonomous AI entities—each built to explore a fragment of a larger question. They don’t replace analysts; they act more like tireless interns who never lose context or sleep. Together, they behave a bit like a hive mind, but one that a business can actually direct and measure.

Rethinking What “Research” Means

Most analytics setups assume you already know what you’re looking for. “Show me the churn rate by region.” “Compare Q3 customer sentiment to Q2.” That’s not research—it’s bookkeeping with fancy charts. True research starts with uncertainty. You don’t know which data matters or what the right question even is.

Traditional systems fail there. They rely on centralized control—a single analyst or model defining queries and interpreting results. Swarm systems take the opposite approach: they distribute curiosity. Each agent explores a small slice of the unknown, running pattern searches, testing hypotheses, or even contradicting its peers.

If this sounds inefficient, it is—by design. Exploration isn’t neat. But it’s precisely this controlled redundancy that helps the swarm surface insights a centralized model would overlook.

What a Swarm of Agents Actually Looks Like

In business settings, a swarm setup might involve:

- A few dozen lightweight agents, each tuned for a different data type—text, transactions, web activity, sensor feeds, or public filings.

- Local reasoning, meaning each agent has context for its assigned domain and can decide whether something looks “interesting.”

- Information exchange, where agents share findings through a coordination layer—like a chat among researchers who occasionally interrupt each other.

- Emergent synthesis, where shared signals start to converge around a pattern.

Think of it like a team of specialists doing fieldwork. One interviews customers, another digs through financial reports, and a third scans competitor patents. None of them sees the full picture, but when their notes overlap, meaning begins to form.
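To make "emergent synthesis" a little more concrete, here is a minimal sketch in Python. It assumes each agent posts findings to a shared board and tags them with a short theme label. A theme counts as converging once enough distinct agents report it independently. The `Finding` class, the `converging_themes` function, the agent names, and the sample notes are all hypothetical, invented for illustration rather than taken from any particular framework.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    agent: str  # which agent reported it (hypothetical names below)
    theme: str  # short label the agent assigned to what it noticed
    note: str   # free-text observation


def converging_themes(findings, min_agents=2):
    """Return themes reported by at least `min_agents` distinct agents."""
    agents_by_theme = defaultdict(set)
    for f in findings:
        agents_by_theme[f.theme].add(f.agent)
    return {
        theme: sorted(agents)
        for theme, agents in agents_by_theme.items()
        if len(agents) >= min_agents
    }


# Made-up sample data standing in for what agents might post.
board = [
    Finding("reviews_agent", "compact_design", "Praise for smaller rivals"),
    Finding("image_agent", "compact_design", "Minimalist devices in photos"),
    Finding("pricing_agent", "price_cut", "Competitor lowered its price"),
    Finding("social_agent", "compact_design", "Casual talk about pocket size"),
]

print(converging_themes(board))
# {'compact_design': ['image_agent', 'reviews_agent', 'social_agent']}
```

Counting distinct agents rather than raw mentions is a deliberate choice here: one noisy agent repeating itself should not look like agreement.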

A Case in Point: Detecting Market Drift

A consumer electronics company noticed something odd. Sales of a mid-range device were slipping, but sentiment reports didn’t show complaints. Marketing suspected pricing; finance suspected supply issues.

The data team decided to try a swarm-based approach. They created:

- An agent to scan product reviews and extract emotion beyond star ratings.

- Another to track competitor pricing changes in real time.

- A third to monitor Reddit and Twitter mentions for casual, untagged discussion.

- One more to analyze product imagery trends on Instagram.

No single agent found the cause. But when their observations were aggregated, a story emerged: customers were slowly gravitating toward smaller, minimalist designs, as signaled by photos and hashtags—not by text reviews. It wasn’t dissatisfaction; it was taste evolution.

That realization led to a redesign, not a discount. A single data model would likely have missed it. The swarm caught it because it explored without being told what to prove.

The Machinery Beneath

People imagine multi-agent systems as chaotic. In reality, they’re orderly chaos—coordinated, but decentralized. The architecture usually includes:

- A coordination layer (sometimes built on LangGraph or Azure’s Agentic Framework) that handles who talks to whom.

- Specialized connectors that let each agent tap into its own domain—CRM, ERP, or social data.

- A shared knowledge layer where findings are stored semantically so that agents can reference each other’s discoveries.

- A feedback system that rewards agents for aligning with validated patterns and discourages repetitive or irrelevant exploration.

A lot of this can be hosted on Azure’s infrastructure—agents as containerized services, talking through Event Grid or Service Bus, and storing results in a shared Cosmos DB or AI Search layer.

What matters most isn’t the tech but the intentional design. You define autonomy boundaries: how far each agent can go, when it should ask for help, and what “success” even means for exploratory work.
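One way to picture an autonomy boundary is as a small, explicit policy object that an agent consults before each step. The sketch below is only an illustration under assumed names: `AutonomyBoundary`, `next_action`, the three fields, and the thresholds are hypothetical, and a real deployment would likely need richer rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutonomyBoundary:
    max_steps: int              # how far the agent may explore on its own
    allowed_sources: frozenset  # data domains the agent may touch
    escalate_below: float       # confidence under which it asks for help


def next_action(boundary, steps_taken, source, confidence):
    """Decide whether an agent continues, escalates, or stops."""
    if source not in boundary.allowed_sources:
        return "stop"       # outside the agent's assigned domain
    if steps_taken >= boundary.max_steps:
        return "stop"       # exploration budget exhausted
    if confidence < boundary.escalate_below:
        return "escalate"   # hand the question to a human or a peer
    return "continue"


# Hypothetical boundary for an agent that only reads CRM data.
crm_scout = AutonomyBoundary(
    max_steps=20,
    allowed_sources=frozenset({"crm"}),
    escalate_below=0.4,
)

print(next_action(crm_scout, steps_taken=3, source="crm", confidence=0.2))
# escalate
```

Writing the boundary down as data, rather than burying it in prompts, makes it something a team can review and adjust as the swarm's behavior becomes clearer.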

Where It Works—and Where It Fails Miserably

Swarm setups shine when the domain is uncertain, data-rich, and cross-disciplinary. Market trend detection, ESG risk discovery, early-stage product research—these are natural fits. The system thrives on fuzziness.

But it’s terrible at tasks that require precision or singular truth. If you already know the metric you need, swarm behavior becomes overkill. It’s like using a dozen detectives to confirm someone’s birthday.

Other common pitfalls:

- Data isolation. Agents can’t collaborate if corporate silos block access.

- Poor feedback loops. Without a mechanism to validate findings, agents drift into noise.

- Over-orchestration. If you centralize control too tightly, you destroy the very adaptability that makes swarms effective.

Many early enterprise pilots failed for exactly these reasons—they built swarms that acted like glorified chatbots, generating lots of chatter but no learning.

The Human’s New Role

Here’s the paradox: the more autonomous your agents get, the more human context you need. Analysts don’t disappear; they become meta-agents—interpreters of the swarm’s collective reasoning.

Instead of asking, “What’s the trend?” they ask, “Why did these six agents converge while the others disagreed?” That meta-analysis is where insight happens.

It’s a very different mindset. You’re no longer dealing in certainties. You’re working with probabilities, contradictions, and partial truths. And that’s uncomfortable for traditional business reporting. But if you’re doing exploratory research, it’s the only honest way to work.

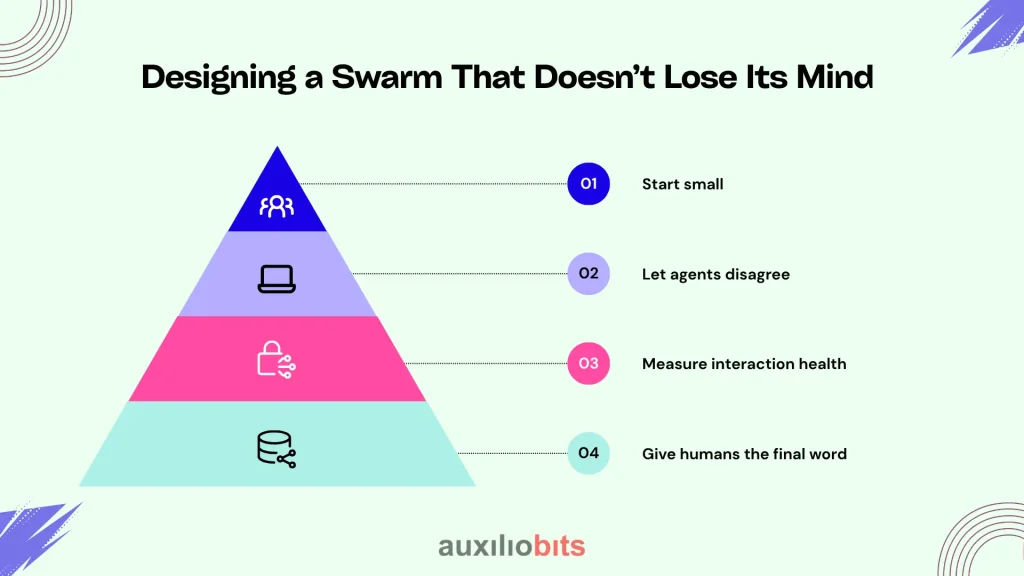

Designing a Swarm That Doesn’t Lose Its Mind

Based on what’s worked (and failed) across early deployments, a few design principles help:

- Start small. Don’t build a 50-agent swarm out of the gate. Begin with four or five roles:

  - Scouts: gather raw data

  - Analysts: find patterns

  - Challengers: question existing hypotheses

  - Integrators: combine findings into a coherent summary

- Let agents disagree. Disagreement isn’t dysfunction—it’s diversity. Consensus too early often means your agents are echoing each other’s blind spots.

- Measure interaction health. Instead of KPIs, track how often agents reference or correct one another. Healthy swarms exhibit tension before alignment.

- Give humans the final word. Insights should always return to a human layer for interpretation and narrative framing.
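As a rough illustration of "interaction health," the sketch below computes how often agents reference or correct one another from a message log. The log format, the `kind` labels, and the `interaction_health` function are assumptions made for this example. What counts as a healthy rate is something each team would have to calibrate for itself.

```python
from collections import Counter


def interaction_health(messages):
    """Share of messages that reference or correct another agent.

    Each message is a dict with an 'agent' name and a 'kind' that is
    one of 'finding', 'reference', or 'correction' (assumed labels).
    """
    total = len(messages)
    if total == 0:
        return {"reference_rate": 0.0, "correction_rate": 0.0}
    kinds = Counter(m["kind"] for m in messages)
    return {
        "reference_rate": kinds["reference"] / total,
        "correction_rate": kinds["correction"] / total,
    }


# Made-up log using the four starter roles.
log = [
    {"agent": "scout", "kind": "finding"},
    {"agent": "analyst", "kind": "reference"},
    {"agent": "challenger", "kind": "correction"},
    {"agent": "integrator", "kind": "reference"},
]

print(interaction_health(log))
# {'reference_rate': 0.5, 'correction_rate': 0.25}
```

A correction rate stuck near zero may be a hint that agents are echoing each other, which is the early-consensus problem described above.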

It’s a surprisingly social system. You’re not managing code; you’re managing behavior.

Why Swarms Outperform “Big Models”

The enterprise AI world still worships scale—larger LLMs, bigger context windows, and endless fine-tuning. But exploratory work rewards diversity, not mass.

A swarm composed of small, narrow agents can outperform one big model because it captures multiple ways of seeing. Big models smooth out contradictions; swarm systems preserve them until they’re resolved or proven irrelevant.

Nature figured this out long ago. A colony of ants outperforms a lion not because ants are smart, but because their collective intelligence adapts faster than any centralized brain could. Business ecosystems aren’t that different.

The Interface: From Dashboards to Dialogue

The most fascinating part of swarm deployments isn’t the computation—it’s the interaction design. Analysts don’t need another static dashboard. What they need is a conversation with the swarm.

Imagine asking:

- “Which early signals point to regulatory risk in Southeast Asia?”

- “Where did agents disagree about supplier sustainability data?”

And instead of a number, the swarm answers like a panel of experts: one summarizing, another countering, and a third adding context. It feels messy—and human.

That’s the point. Research is a dialogue, not a verdict.

Ethics and Governance

Distributed intelligence raises new headaches. If one agent fabricates a pattern, others might amplify it. If they all use similar training data, they’ll mirror the same bias.

Some working practices help keep things sane:

- Traceable provenance. Every agent output should include its data source and timestamp.

- Bias diversification. Train agents on varied corpora, even conflicting ones.

- Human arbitration. Don’t let the system self-confirm; humans must decide when an emergent pattern is worth trusting.
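Traceable provenance can be as simple as refusing to accept an agent output that lacks a source and a timestamp. The sketch below assumes a hypothetical `AgentOutput` record and adds one illustrative check, `is_single_source`, that marks claims resting on a single data source so human reviewers can look at them first. That rule is an assumption for this example, not an established standard, and it does not replace human arbitration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentOutput:
    agent: str
    claim: str
    source: str  # where the underlying data came from
    observed_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def is_single_source(outputs, claim):
    """True when every output backing `claim` cites the same source."""
    sources = {o.source for o in outputs if o.claim == claim}
    return len(sources) < 2


# Made-up outputs: two agents agree, but both lean on the same feed.
outputs = [
    AgentOutput("social_agent", "supplier_risk", "forum_feed"),
    AgentOutput("news_agent", "supplier_risk", "forum_feed"),
]

print(is_single_source(outputs, "supplier_risk"))
# True
```

Two agents agreeing looks like confirmation, but if both read the same feed it is closer to amplification, which is exactly the failure mode described above.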

You can’t remove bias entirely—but you can distribute it, watch it, and learn from it.

What Businesses Actually Gain

Companies that have adopted swarm exploration often describe the benefit in odd terms—not “speed,” but serendipity. They discover things no one thought to ask.

Some real outcomes I’ve seen:

- Early detection of supplier instability through fragmented sentiment signals.

- Identification of unexpected co-branding opportunities by correlating search and image data.

- The discovery of emerging compliance gaps months before formal audits caught them.

The real win isn’t automation—it’s institutional curiosity at scale. A research culture that continuously probes the unknown, without requiring someone to define the question first.

A Quiet Shift in How Organizations Think

If you look closely, what’s changing isn’t technology. It’s epistemology—the way organizations decide what’s true.

Swarm systems invite a kind of humility. You stop pretending your dashboards hold absolute truth. You start engaging with data as conversation—messy, ongoing, and full of partial perspectives.

And that’s perhaps the real promise here: not faster analysis, but a more human way of thinking at machine speed.

Exploratory business research will always be uncertain. But with swarm agents, it doesn’t have to be blind.