Key Takeaways

- Historical risk models are losing reliability as climate volatility makes past data a poor predictor of future events.

- Agentic AI introduces adaptive intelligence, continuously learning from live data and reasoning through changing climate conditions.

- Leading insurers like Munich Re, Swiss Re, and AXA Climate are already experimenting with agentic architectures for dynamic exposure management.

- Governance and transparency mechanisms—from audit trails to human-in-the-loop models—are critical for regulatory confidence and trust.

- The future of underwriting is proactive, not reactive—agentic systems will help insurers prevent losses, not just price them, transforming the business from risk calculation to resilience management.

Every insurer today is wrestling with a quiet panic. The models that once predicted risk so confidently are starting to crumble under new pressures. You can feel it in strategy meetings and quarterly reviews—nobody trusts the numbers like they used to.

The reason isn’t incompetence. It’s physics. The planet itself has rewritten the rules. In some places, a “hundred-year flood” now seems to arrive every few years. Wildfire seasons spill into winter. Entire risk maps that took decades to perfect no longer match reality. The past, which used to be underwriting’s compass, no longer points north.

So what replaces it? That’s where a new kind of system—agentic AI—is starting to reshape how insurers think about climate exposure. Not another dashboard or ML model that spits out loss ratios, but something closer to a thinking layer inside the enterprise.


When History Stops Helping

For more than a century, actuarial science was built on an elegant premise: history repeats itself. The more data you had, the safer your predictions became. But the climate doesn’t repeat anymore—it mutates.

A heatwave doesn’t just scorch crops; it shifts energy markets, insurance portfolios, and even health claims in northern Europe. Those chain reactions break the nice, clean curves actuaries used to draw.

Machine learning tried to bridge that gap. It helped for a while, until everyone realized that training on old data in a changing world is like reading yesterday’s weather report to plan tomorrow’s flight path.

Agentic AI behaves differently. Instead of being frozen in time, it learns as conditions change. It observes. It questions. It rebalances. It argues with its own assumptions.

So, What Makes It “Agentic”?

Think of a junior underwriter who never sleeps, keeps reading the latest climate data, and sends you three possible portfolio adjustments at 2 a.m. That’s roughly what these systems do.

They can:

- Pull live data from weather stations, satellites, and IoT sensors.

- Recognize patterns (say, soil drying trends or wind shifts) that increase exposure.

- Simulate outcomes before an event unfolds.

- Send recommendations—or execute them automatically, depending on policy.

Unlike typical automation, agentic AI doesn’t wait for instruction. It looks for trouble. It reasons through data the way a human analyst might, except it never gets tired, and it doesn’t cling to yesterday’s assumptions.
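The “recognize patterns” step is less mysterious than it sounds. Below is a minimal Python sketch, assuming daily soil-moisture readings arrive as a plain list of numbers: it fits a least-squares slope over the latest seven readings and treats a steep decline as a drying trend. The function names, the window, the threshold, and the `policy` switch (which mirrors the choice between sending a recommendation and executing automatically) are hypothetical illustrations, not any insurer’s actual logic.

```python
from statistics import mean


def slope(values: list[float]) -> float:
    """Ordinary least-squares slope of readings against their position (change per reading)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two readings to estimate a trend")
    x_bar = (n - 1) / 2
    y_bar = mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den


def drying_alert(soil_moisture: list[float], window: int = 7, threshold: float = -0.5) -> bool:
    """Hypothetical rule: alert when moisture over the latest `window` readings falls faster than `threshold`."""
    return slope(soil_moisture[-window:]) < threshold


def next_action(alert: bool, policy: str = "recommend") -> str:
    """Route an alert according to a hypothetical autonomy policy: 'recommend' or 'execute'."""
    if policy not in ("recommend", "execute"):
        raise ValueError(f"unknown policy: {policy}")
    return policy if alert else "none"


readings = [30.0, 29.0, 28.0, 27.0, 26.0, 25.0, 24.0]  # made-up soil moisture, %
print(drying_alert(readings), next_action(drying_alert(readings)))  # prints: True recommend
```

A production system would deal with noisy, irregular data and far richer models; the point is only that a “trend” is something an agent can compute and threshold continuously.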

The Early Movers

A handful of major insurers and reinsurers are already experimenting, quietly. Nobody wants to announce a paradigm shift until it works at scale.

- Munich Re has layered adaptive models over its catastrophe datasets. These models continuously reweight exposure zones as fresh satellite imagery arrives.

- Swiss Re runs autonomous simulations that track economic ripple effects after climate shocks—testing how a storm in Asia affects a portfolio in Europe.

- AXA Climate built a geospatial AI network for agricultural underwriting that adjusts crop risk based on satellite vegetation and rainfall anomalies.

These examples aren’t just pilot projects. They’re the early steps toward self-adjusting underwriting engines—systems that behave more like living organisms than static software.

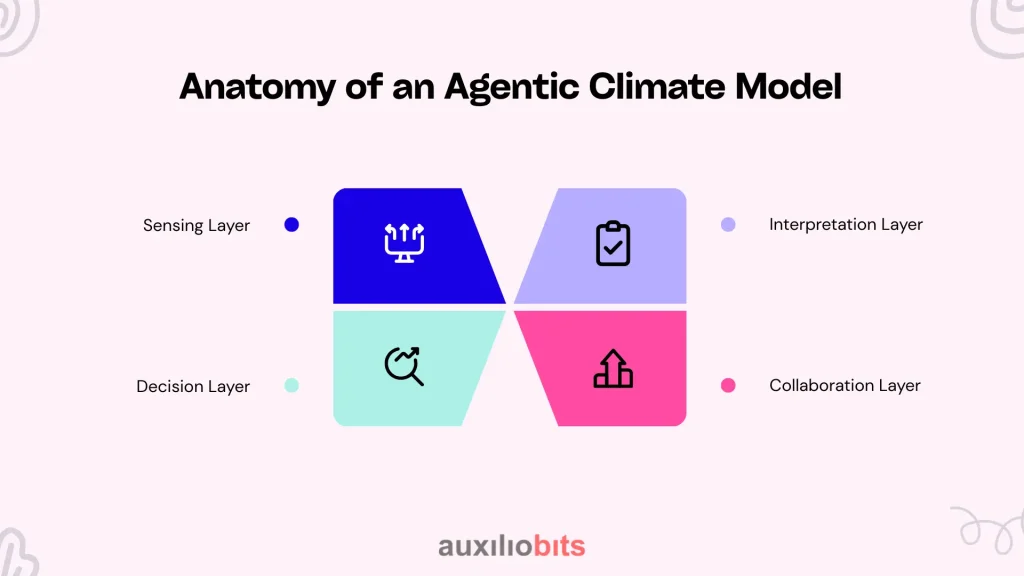

Anatomy of an Agentic Climate Model

To anyone outside the data science department, this stuff sounds mystical. But it’s built on a layered structure that’s surprisingly intuitive:

- Sensing Layer: grabs signals such as temperature, ocean heat, floodplain shifts, soil dryness, and policy data.

- Interpretation Layer: converts that mess into structured insight. What does a three-day wind anomaly mean for wildfire risk next month?

- Decision Layer: runs internal debates between models, tests scenarios, and suggests actions.

- Collaboration Layer: talks to other systems, reinsurers, or humans before taking action.

That last part is the key. Agentic AI doesn’t replace people; it surrounds them with constantly updated context. It tells an underwriter, “Something’s changing here. You might want to look again.”
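The four layers map naturally onto a small pipeline. The Python sketch below is a toy under stated assumptions: the record fields, the 0-to-1 dryness and wind scales, the 0.6/0.4 weights, and the 0.7 threshold are all invented for illustration. What it does show is the shape of the design: each layer has one job, and the collaboration layer hands a proposal to people instead of acting on it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Signal:
    source: str
    metric: str
    value: float


@dataclass
class Insight:
    region: str
    hazard: str
    score: float  # hypothetical normalized risk score in [0, 1]


@dataclass
class Proposal:
    region: str
    action: str
    rationale: str
    status: str = "pending_review"


def sense(raw: list[dict]) -> list[Signal]:
    """Sensing layer: normalize raw feed records into Signal objects."""
    return [Signal(r["source"], r["metric"], float(r["value"])) for r in raw]


def interpret(signals: list[Signal], region: str) -> Insight:
    """Interpretation layer: toy wildfire score from dryness and wind anomaly (both assumed 0..1)."""
    latest = {s.metric: s.value for s in signals}
    score = 0.6 * latest.get("soil_dryness", 0.0) + 0.4 * latest.get("wind_anomaly", 0.0)
    return Insight(region, "wildfire", min(1.0, score))


def decide(insight: Insight, threshold: float = 0.7) -> Optional[Proposal]:
    """Decision layer: propose a re-review only when the score crosses the threshold."""
    if insight.score < threshold:
        return None
    return Proposal(insight.region, "re-review wildfire rating",
                    f"{insight.hazard} score {insight.score:.2f} >= {threshold}")


def collaborate(proposal: Optional[Proposal], review_queue: list[Proposal]) -> None:
    """Collaboration layer: queue the proposal for a human instead of applying it."""
    if proposal is not None:
        review_queue.append(proposal)


queue: list[Proposal] = []
raw = [{"source": "satellite", "metric": "soil_dryness", "value": 0.9},
       {"source": "station", "metric": "wind_anomaly", "value": 0.8}]
collaborate(decide(interpret(sense(raw), "region-A")), queue)
print(queue)
```

Keeping the layers as separate functions makes each one replaceable and testable on its own, which matters once auditors start asking which layer produced a given call.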

A Scene From the Field

Let’s say an insurer covers 12,000 coastal homes. Its models, built on a decade of NOAA data, mark a certain region as low risk.

Then, two small things happen: inland construction blocks old drainage routes, and sea levels rise a few centimeters. A regular model won’t notice the compound effect. An agentic one will.

Within hours, it spots abnormal runoff readings, checks rainfall projections, simulates the new flood path, and flags the affected zip codes as newly vulnerable.

No big announcement, no drama. Just a quiet correction that could save millions before the next storm season. That’s the practical power of these systems: they make sense of the invisible drift between data and reality.
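The compound effect in this scene is easy to miss because each change looks harmless on its own. A minimal Python sketch, using made-up numbers and a deliberately crude additive water-level model (the `ZoneExposure` type, its `freeboard_cm` and `drainage_share` fields, and all values are hypothetical), shows how two sub-threshold changes can together push a zone over the line:

```python
from dataclasses import dataclass


@dataclass
class ZoneExposure:
    zone_id: str
    freeboard_cm: float    # hypothetical: how far ground sits above the modeled flood level
    drainage_share: float  # hypothetical: fraction of storm runoff the blocked routes used to carry away


def added_water_cm(zone: ZoneExposure, sea_level_rise_cm: float,
                   blocked_fraction: float, runoff_cm: float) -> float:
    """Crude additive model: sea-level rise plus runoff that can no longer drain."""
    backed_up = runoff_cm * zone.drainage_share * blocked_fraction
    return sea_level_rise_cm + backed_up


def newly_vulnerable(zones: list[ZoneExposure], sea_level_rise_cm: float,
                     blocked_fraction: float, runoff_cm: float) -> list[str]:
    """Flag zones where the combined extra water exceeds their freeboard."""
    return [z.zone_id for z in zones
            if added_water_cm(z, sea_level_rise_cm, blocked_fraction, runoff_cm) > z.freeboard_cm]


zones = [ZoneExposure("zone-1", freeboard_cm=10.0, drainage_share=0.5),
         ZoneExposure("zone-2", freeboard_cm=30.0, drainage_share=0.2)]

print(newly_vulnerable(zones, 4.0, 0.0, 30.0))  # sea-level rise alone
print(newly_vulnerable(zones, 0.0, 0.5, 30.0))  # blocked drainage alone
print(newly_vulnerable(zones, 4.0, 0.5, 30.0))  # both together
```

Neither change alone flags anything (4 cm and 7.5 cm both stay under zone-1’s 10 cm of freeboard), but together they add 11.5 cm and zone-1 is flagged. A model that checks each factor separately never sees that.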

When Technology Meets Insurance Culture

Of course, this all sounds great until you try to deploy it. The insurance world has deep traditions of governance and control. Every number in a rate filing needs a name beside it. Every decision must be traceable.

Agentic systems don’t exactly fit into that mold. They evolve too fast. A model that changes itself at 3 a.m. makes regulators nervous.

That’s why many insurers are taking a middle path: the AI proposes, and the human approves. A sort of supervised autonomy that balances adaptability with accountability.

It slows things down a bit, but it keeps everyone comfortable. And honestly, in insurance, comfort is half the battle.
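Here is one way “the AI proposes, the human approves” could look in code: a minimal Python sketch in which an agent can only queue rate changes, and nothing takes effect until a named reviewer signs off, echoing the rule that every number needs a name beside it. The `RateChange` and `ApprovalGate` names, the region labels, and the rate values are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class RateChange:
    region: str
    old_rate: float
    new_rate: float
    rationale: str
    status: str = "proposed"
    reviewed_by: Optional[str] = None
    decided_at: Optional[datetime] = None


class ApprovalGate:
    """Agents may propose; only a named human reviewer can approve or reject."""

    def __init__(self) -> None:
        self.pending: list[RateChange] = []
        self.applied: dict[str, float] = {}

    def propose(self, change: RateChange) -> None:
        self.pending.append(change)

    def decide(self, change: RateChange, reviewer: str, approve: bool) -> None:
        if change not in self.pending:
            raise ValueError("change is not awaiting review")
        if not reviewer.strip():
            raise ValueError("a named reviewer is required")
        self.pending.remove(change)
        change.status = "approved" if approve else "rejected"
        change.reviewed_by = reviewer
        change.decided_at = datetime.now(timezone.utc)
        if approve:
            self.applied[change.region] = change.new_rate


gate = ApprovalGate()
proposal = RateChange("coastal-north", 1.00, 1.15, "runoff anomaly raised flood exposure")
gate.propose(proposal)
print(gate.applied)  # nothing applied yet
gate.decide(proposal, reviewer="J. Underwriter", approve=True)
print(gate.applied, proposal.status, proposal.reviewed_by)
```

The gate is the slowdown the text describes: the agent can be as fast as it likes, but rates only move at the speed of review.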

The Hard Part: Data Gaps and Silos

Climate modeling lives or dies on data quality, and right now, data is a mess. Sensors break, regions underreport, and many firms still treat their catastrophe models as trade secrets.

Agentic systems struggle when data is locked away. To solve this, several insurers are forming climate data consortia—secure spaces where anonymized datasets can be shared across organizations.

Once you open those gates, the agents can triangulate the truth, cross-checking public NOAA feeds against proprietary satellite data and even customer-reported losses. It’s messy, but it’s how living systems learn.
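Triangulation can start very simply. The Python sketch below, a minimal illustration rather than a production method, takes the median across at least three independent sources as the consensus and flags any source that strays too far from it. The source names, the rainfall units, and the tolerance are hypothetical. The same check is a cheap guard against a single faulty feed driving a decision on its own.

```python
from statistics import median


def triangulate(readings: dict[str, float], tolerance: float) -> tuple[float, list[str]]:
    """Return the consensus (median) value and the sources that disagree with it by more than `tolerance`."""
    if len(readings) < 3:
        raise ValueError("need at least three independent sources to triangulate")
    consensus = median(readings.values())
    outliers = sorted(src for src, value in readings.items() if abs(value - consensus) > tolerance)
    return consensus, outliers


# Hypothetical 24-hour rainfall estimates (mm) for one grid cell from three kinds of source.
agreeing = {"public_feed": 42.0, "satellite": 44.0, "customer_reports": 43.0}
disagreeing = {"public_feed": 42.0, "satellite": 80.0, "customer_reports": 43.0}

print(triangulate(agreeing, tolerance=5.0))
print(triangulate(disagreeing, tolerance=5.0))
```

The median is used instead of the mean because one wild reading cannot drag it far, which is exactly the property you want when any single sensor might be broken.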

When the System Gets It Wrong

No one likes to admit it, but agentic AI can absolutely misfire. A satellite glitch can make cloud shadows look like smoke. The system overreacts, hiking fire risk ratings. Clients notice. Trust wobbles.

That’s why new governance models are appearing around these systems:

- Counterfactual testing (asking: what if the model hadn’t made that call?).

- Reconstruction logs that show every data point influencing a decision.

- Kill-switch rules so humans can freeze an agent’s autonomy instantly.

You have to let the system think—but you also have to be able to stop it.
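The three safeguards can be sketched together in a few lines of Python. This is a minimal illustration under stated assumptions: the `GovernedAgent` wrapper and the toy rating function are hypothetical; the log keeps every input behind each decision; `freeze()` acts as the kill switch; and the counterfactual is interpreted narrowly here as replaying a logged decision with one input neutralized, to see whether the call depended on it (for example, a suspect smoke signal).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class DecisionRecord:
    inputs: dict[str, float]
    output: float


class GovernedAgent:
    def __init__(self, model: Callable[[dict[str, float]], float]) -> None:
        self.model = model
        self.frozen = False
        self.log: list[DecisionRecord] = []

    def freeze(self) -> None:
        """Kill switch: block any further autonomous decisions."""
        self.frozen = True

    def decide(self, inputs: dict[str, float]) -> float:
        if self.frozen:
            raise RuntimeError("agent autonomy is frozen")
        output = self.model(inputs)
        # Reconstruction log: keep a copy of every input that shaped this decision.
        self.log.append(DecisionRecord(dict(inputs), output))
        return output

    def counterfactual(self, index: int, overrides: dict[str, float]) -> float:
        """Replay a logged decision with some inputs changed; nothing is logged or acted on."""
        record = self.log[index]
        return self.model({**record.inputs, **overrides})


def toy_fire_rating(x: dict[str, float]) -> float:
    """Hypothetical rating: base 1.0, raised by a smoke signal and by dryness (both 0..1)."""
    return 1.0 + 0.5 * x.get("smoke", 0.0) + 0.3 * x.get("dryness", 0.0)


agent = GovernedAgent(toy_fire_rating)
actual = agent.decide({"smoke": 1.0, "dryness": 0.5})
without_smoke = agent.counterfactual(0, {"smoke": 0.0})
print(actual, without_smoke)
agent.freeze()
```

Note that `counterfactual` still works after `freeze()`: stopping the agent should never stop people from inspecting what it already did.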

From Insuring Losses to Preventing Them

Something more interesting is happening beneath all this. Once an insurer can sense environmental shifts fast enough, it stops being just a risk calculator—it becomes a risk partner.

Imagine:

- Property insurers sending early drainage alerts to city councils.

- Crop insurers advising farmers on irrigation patterns weeks before yield loss.

- Health insurers using air-quality sensors to warn clients about asthma risks.

That’s where underwriting stops reacting to disasters and starts helping prevent them. It’s not altruism—it’s economics. Prevention is cheaper than payouts.

Trust, Transparency, and the Human Factor

Insurance is a business of promises. You can’t automate trust. Regulators, brokers, and customers all want to know why a system made a decision, not just what it decided.

To meet that need, insurers are building transparency dashboards where agents explain their reasoning—sometimes in plain language. It’s slow work, but it’s the only way this shift survives the scrutiny of compliance officers and regulators who still remember the pre-digital days.

Some reinsurers are even working directly with regulators to define safe levels of AI autonomy. That might sound bureaucratic, but it’s progress.

What’s Actually at Stake

Ask any veteran underwriter what keeps them up at night, and it’s not automation—it’s uncertainty. The market is shifting faster than the models can adjust. Margins are thinning. Catastrophes hit unpredictably.

Agentic AI isn’t about removing humans; it’s about giving them a fighting chance against volatility. The real risk isn’t overreliance on machines—it’s under-adapting to change.

The profession that once quantified uncertainty now has to partner with it.

The Quiet Revolution Ahead

Give this five to ten years, and underwriting won’t look anything like it does now. Premiums will move dynamically. Risk models will argue with each other. Policies might even adjust automatically when exposure spikes.

It won’t be clean or orderly. But neither is the climate.

The insurers that survive will be the ones who treat intelligence—human and machine alike—as something living and evolving, not static and final. Those who cling to the old math will keep pricing yesterday’s weather while tomorrow’s world burns around them.

In Short

- Historical data is losing predictive value fast.

- Agentic AI provides continuous recalibration and context-aware reasoning.

- Real insurers, including Munich Re, Swiss Re, and AXA Climate, are already experimenting with it.

- Governance and transparency will decide who scales it successfully.

- The industry’s future lies in learning systems that evolve with the environment.

Underwriting has always been about one question: how much uncertainty can you tolerate before the numbers break? Agentic AI doesn’t remove that uncertainty—it just gives us a better grip on it. And right now, that’s worth more than any model built on the comfort of the past.