Key Takeaways

- Agentic systems fail cognitively, not mechanically. Unlike servers or APIs, agents can diverge in reasoning—misjudging goals, looping endlessly, or making confident but incorrect decisions. Designing for this means understanding failure as a thinking error, not a crash.

- Traditional redundancy models don’t protect cognition. You can replicate compute, but not context. True fault tolerance for agents means preserving goal trees, intent states, and reasoning trails—not just keeping replicas alive.

- Redundancy patterns must match the cognitive layer. From shadow agents for reasoning validation to behavioral quorums and intent replay, resilience now spans logic, coordination, and state-sharing—not just hardware or process tiers.

- Detection precedes recovery—and it’s behavioral, not binary. Failures in agentic systems emerge as drift, inconsistency, or overcorrection. Observer meshes and meta-agents are essential for catching and localizing these deviations before cascading impact.

- The end goal is continuity of cognition, not uptime. Restarting an agent wipes its reasoning history. Recovery means learning from failure, replaying intents, and allowing the system to adapt intelligently—because in the agentic era, resilience is a property of understanding, not redundancy.

If there’s one thing that enterprise architects quietly accept but rarely say out loud, it’s this: autonomous systems fail differently. Traditional infrastructure fails predictably—disks crash, APIs time out, and queues overflow. But agents? They fail with intent. They misjudge, miscoordinate, or worse, keep “trying” long after they should have stopped.

When you start orchestrating intelligent agents across workflows—say, in a procurement system or claims processing loop—the idea of “high availability” gets rewritten. You’re not just keeping nodes alive; you’re preserving reasoning continuity. And that, as it turns out, is much harder.

Also read: The Role of Intent Recognition in Multi-Agent Orchestration

The Nature of Agentic Failure

An autonomous agent doesn’t simply crash. It diverges. Where a microservice might stop responding, an agent might respond incorrectly. It may hallucinate context, misinterpret goals, or loop infinitely in pursuit of a misaligned objective.

Failures in these systems fall roughly into four buckets:

- Reasoning failures—The agent selects the wrong plan or prioritizes incorrectly (common in goal-driven systems).

- Coordination breakdowns—Multiple agents overlap, duplicate efforts, or contradict each other in shared environments.

- Communication loss—The agent loses context or fails to receive updates from the event bus or central planner.

- State corruption—The agent’s memory or belief store gets outdated or inconsistent.

In short: not all failures are mechanical. Many are cognitive.

The takeaway? Redundancy isn’t about mirroring components—it’s about mirroring understanding.

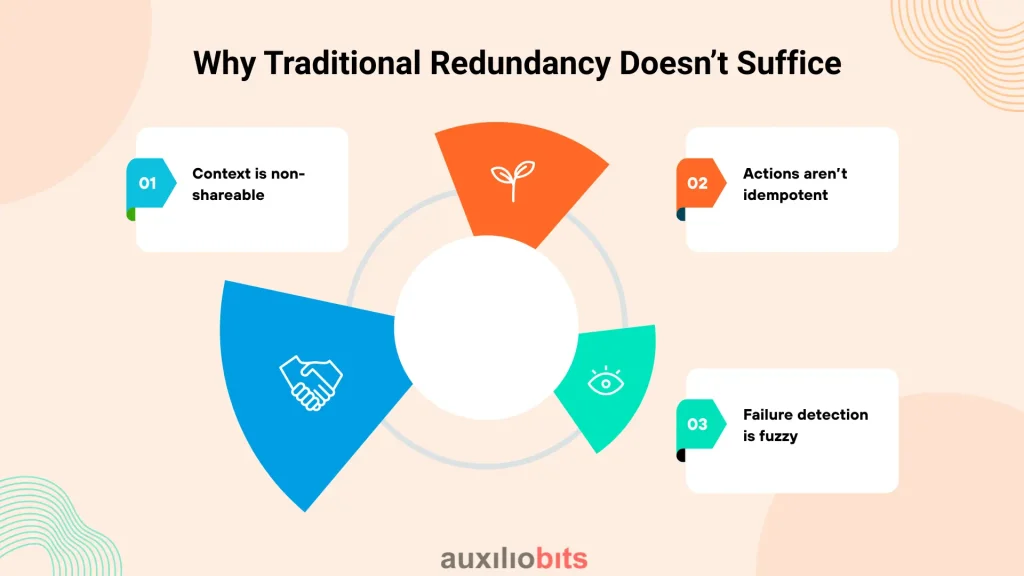

Why Traditional Redundancy Doesn’t Suffice

In a microservice world, redundancy meant clusters, replicas, and failover protocols. You could use health checks, load balancers, and consensus algorithms like Raft or Paxos to achieve fault tolerance.

But agents complicate this model for three reasons:

- Context is non-shareable. You can’t just spin up another copy of an agent mid-task unless it inherits the mental state, goal tree, and dialogue context.

- Actions aren’t idempotent. A repeated invoice submission or approval isn’t harmless; it may trigger real-world outcomes twice.

- Failure detection is fuzzy. An agent might appear active (still reasoning) while actually stuck in a logical cul-de-sac.

That’s why redundancy for agentic systems must move beyond hot replicas and into architectural fault intelligence. It’s not just redundancy in computation—it’s redundancy in cognition.

Patterns of Redundancy in Agent Architectures

Redundancy in this space isn’t monolithic. It operates across layers—behavioral, architectural, and operational. Below are the core patterns emerging from real-world deployments across automation and AI-driven systems.

1. Shadow Agents (The Parallel Thinkers)

One of the most effective patterns for critical workflows is shadow reasoning. Here, a secondary agent runs the same plan in parallel—observing, simulating, or sanity-checking the primary agent’s steps.

Think of it as a cognitive twin, not a backup. It doesn’t execute actions; it validates intent.

Used in:

- Financial compliance automation (e.g., verifying decision paths for audit logs)

- Healthcare agent systems (e.g., treatment recommendation validation)

Advantages:

- Early detection of logical drift

- Transparent explainability (“Why did the main agent choose X over Y?”)

Tradeoff:

- High compute overhead—running reasoning twice isn’t cheap.

- Shadow agents can’t fully catch emergent multi-agent miscoordination.

Still, if you’ve ever watched two pilots in a cockpit independently calculate the same numbers before a landing, you know why this pattern matters.

2. Hierarchical Goal Arbitration

When multiple agents pursue goals that overlap or conflict, redundant goal arbitration ensures recovery without chaos. Here, you introduce a meta-agent—a kind of “referee”—that doesn’t perform tasks itself but resolves deadlocks and reallocates objectives when agents fail.

Pattern insight:

- In manufacturing automation, if a “supply forecasting agent” stalls, the arbitrator reassigns its tasks to a standby unit trained for similar functions.

- In IT operations, a “triage arbitrator” might detect non-responsiveness in diagnostic agents and reroute escalation paths dynamically.

It’s redundancy through meta-coordination rather than replication.

Why it works: goal reassignment preserves continuity without forcing full resets.

When it fails: the arbitrator itself becomes a bottleneck or single point of reasoning authority—something enterprises must design carefully, often by federating arbitration across clusters.

3. State Handoff Meshes

Agents often depend on transient memory—context stored locally, like a task plan, belief graph, or intermediate output. When one fails, that ephemeral state vanishes unless shared.

State handoff meshes solve this by implementing a lightweight publish-subscribe pattern for agent state deltas. Each agent intermittently broadcasts checkpoints to peers or to a central knowledge hub.

Real-world reference: Uber’s Michelangelo (AI platform) employs partial model state caching between training agents to ensure continuity under node interruptions. Similar ideas are now appearing in agent orchestration frameworks.

Benefits:

- Enables graceful resumption mid-task

- Reduces loss from transient reasoning failures

Caveat:

- Requires well-defined serialization of agent cognition (not trivial).

- Sensitive to latency—a state that’s too stale is as useless as none.

This is one of those rare places where engineering maturity and philosophical clarity meet—you have to decide what constitutes the “truth” of an agent’s mind.

4. Behavioral Quorum

Distributed AI agents sometimes produce inconsistent decisions on the same input. Instead of choosing one arbitrarily, behavioral quorum models aggregate results from multiple agents and act on a majority or weighted consensus.

You could call it democratic redundancy.

- Three diagnostic agents analyze a transaction anomaly. Two flag it as suspicious; one doesn’t. The action proceeds with the majority signal.

- In autonomous logistics, multiple route-planning agents may vote on optimal delivery sequences.

This pattern has gained traction in environments where correctness matters more than speed (e.g., compliance, medical reasoning).

It’s slower, yes. But reliability often hides in diversity.

5. Checkpointed Intent Replay

Sometimes you can’t keep reasoning continuously. In that case, the fallback is intent replay—a method where failed agents periodically serialize their current intent tree (goals, subgoals, constraints, progress markers) into a recoverable format.

When the agent crashes or diverges, another instance replays from the last known intent checkpoint, reconstructing the decision tree rather than restarting the entire workflow.

Used effectively in:

- Robotic process orchestration (UiPath + AI planner hybrids)

- AI-driven ERP workflows where transactional integrity is crucial

This is redundancy in time, not space. It’s not about duplication—it’s about reversibility.

6. Hybrid Reflex + Deliberative Layers

If you’ve ever seen a self-driving car switch to “safe stop” when its sensor fusion goes haywire, you’ve seen this pattern in action.

Hybrid reflex-deliberative design introduces a low-latency reflex layer beneath the cognitive reasoning engine. When the higher layer fails (e.g., hangs during deliberation), the reflex subsystem takes control with hard-coded safety routines—rollback, isolation, or retry.

This is the AI version of a circuit breaker. It prevents catastrophic propagation of reasoning failure into real-world actions.

In enterprise terms:

- When an autonomous contract negotiation agent loses connection, the reflex layer triggers “freeze negotiation and alert human operator.”

- When a data classification agent starts producing outliers, reflex logic halts downstream automation pipelines.

This pattern works because redundancy doesn’t always mean “replicate intelligence”—sometimes it means contain stupidity quickly.

Designing for Detection Before Recovery

All these redundancy patterns assume one thing: you can detect failure fast enough to act. But that’s non-trivial.

In traditional systems, failure signals are binary—alive or dead. In agentic systems, failure is behavioral drift.

Signs include:

- Action repetition without progress

- Increasing divergence between expected and observed states

- Contradictory outputs across agents operating on shared data

- Excessive self-correction or looping in reasoning traces

Detection patterns often use meta-observers—agents whose sole purpose is to monitor other agents. Ironically, this creates another layer of possible failure. So you build redundancy there, too.

In mature systems, this becomes an “observer mesh” rather than a single sentinel. Each agent monitors a peer subset, and anomalies trigger localized rollback or reallocation rather than system-wide halts.

This approach borrows from swarm robotics, where the failure of a few drones doesn’t cripple the mission; the collective adjusts.

Recovery Isn’t Always Restart

A subtle but critical point: recovery is not a restart. Restarting an agent resets its cognitive state—losing its reasoning trail and partial insights. That might seem clean, but it erases valuable failure context.

True recovery preserves what went wrong, because that data refines future resilience.That’s why the best-designed systems don’t aim for uptime—they aim for continuity of cognition. Recovery might mean:

- Spawning a watcher agent to analyze the failed state

- Replaying the last known reasoning step under different constraints

- Transferring partial state to a collaborative peer for continuation

Restarting is a band-aid. Recovering is an adaptation.

Practical Lessons from the Field

Across industries, patterns evolve differently:

- In healthcare automation, shadow agents dominate because accountability is paramount. Redundant reasoning equals auditability.

- In manufacturing control systems, reflex-deliberative hybrids rule; safety is non-negotiable.

- In financial workflows, behavioral quorum and goal arbitration are more common—risk tolerance allows slower, collective decisions.

- In cloud orchestration, state handoff meshes align best with elastic workloads.

One lesson that stands across all of them: redundancy is a socio-technical construct. You’re designing for failure not just in machines, but in the logic of delegation itself.

Even the most advanced LLM-powered planner can freeze mid-thought. The question isn’t whether it will—it’s whether your architecture knows what to do next.

Realities: Redundancy and Cost

Here’s the uncomfortable truth: most organizations overestimate the ROI of redundancy.

You can triple your agents and build beautiful fallback logic, yet still fail when systemic misunderstanding propagates faster than any failover can catch it.

So before implementing every pattern on this list, ask:

- Which failures actually matter?

- Is this process tolerant of temporary reasoning inconsistency?

- Can we quantify the cost of cognitive shadowing versus business risk?

Sometimes, a human-in-the-loop checkpoint every few hours does more for system resilience than a full-blown observer mesh. Redundancy, at its best, is strategic, not excessive.

Toward Cognitive Reliability

Agentic systems have pushed fault tolerance into philosophical territory. When your entities “think,” you start confronting questions once reserved for human organizations:

- How much disagreement is acceptable?

- How much autonomy before chaos?

- What counts as recovery if memory itself is fallible?

These are not engineering afterthoughts—they’re architectural principles.

A reliable agentic system doesn’t just survive failure. It understands it, internalizes it, and keeps moving. And perhaps that’s the real redundancy: intelligence itself, distributed enough to stay sane when part of it goes mad.