Key Takeaways

- Modern content creation agents do more than generate text—they plan, fetch data, verify facts, and refine outputs, unlike traditional single-step LLM prompts.

- High-throughput, low-latency content generation relies heavily on GPU inference, with optimizations (TensorRT, DeepSpeed-Inference) improving speed—but cloud GPU costs can balloon quickly.

- Frameworks like LangChain structure workflows, manage memory, and handle external tool calls, but they add complexity and require careful debugging.

- Editors and prompt engineers ensure accuracy, brand consistency, and legal compliance; fully autonomous content pipelines are often impractical.

- Success comes from pragmatic deployment, measured automation, and controlled integration—balancing speed, cost, and reliability, rather than relying on LLMs, GPUs, or orchestration alone.

The hype around AI-generated content often sounds deceptively simple: “just feed a prompt to an LLM and get paragraphs of text.” Anyone who has worked with large models in production knows it isn’t that neat. The difference between a neat demo and a system that reliably produces useful business content is ocean wide. It takes orchestration, infrastructure, and sometimes brute GPU horsepower to make it not only possible but also repeatable.

This piece walks through how content creation agents are being designed today with three intersecting layers: large language models (LLMs), GPU-accelerated inference (NVIDIA has cornered much of this market), and orchestration frameworks like LangChain. The lens here is not the generic “AI will change content” story but the engineering, deployment, and operational tradeoffs that practitioners actually face.

Also read: Smart compliance agents using NVIDIA NLP models for real‑time regulatory scanning

The Evolution of “Content Agents” Beyond Chatbots

If you rewind two or three years, most enterprises treated LLMs as novelty assistants—summarizers, Q&A bots, or SEO keyword machines. But the shift to “agents” has pushed the boundary. An agent is not just a text predictor; it can plan, call external tools, check its own output, and retry when things fail. For content teams, this means generating not just a blog paragraph but an entire draft with citations pulled from a research database, a chart created via a Python script, or a structured outline handed off to an editor.

Real-world example: a financial services firm we worked with uses a content agent to generate daily market briefs. The workflow is multi-step:

- Pull raw data from Bloomberg and Reuters feeds.

- Parse and clean it using a lightweight NLP pipeline.

- Feed summaries into an LLM with a style prompt that matches the firm’s brand tone.

- Have the agent automatically cross-check key numbers (closing prices, indices) against APIs before publishing.

This isn’t “hit GPT-4 with a prompt.” It’s a composition of reasoning, external calls, and verification. Without orchestration, the process collapses under its own complexity.

Why GPU Inference Matters (and Where It Bites)

People outside of ML engineering often underestimate how much infrastructure drives feasibility. Text generation at scale is GPU-bound. Yes, CPUs can run smaller models, but once you want high throughput, low latency, or fine-tuned variants, you’re in NVIDIA territory.

A few concrete realities:

- Batching vs. Latency—If you serve multiple content requests at once, GPUs can batch efficiently. But if a marketing team demands a single article in near real-time, batching is useless—you pay for underutilized GPU cycles.

- Memory Constraints—A 70B parameter model doesn’t just need a beefy card; it often requires model sharding across GPUs. That introduces synchronization overhead, which shows up as jitter in response times.

- Inference Optimization—Libraries like TensorRT, FasterTransformer, and DeepSpeed-Inference can cut latency by 20–40%. Is it worth it? Usually, yes—but integrating them isn’t plug-and-play. Developers burn cycles debugging kernels and handling mixed precision quirks.

This is why some teams run hybrid stacks: CPU inference for lightweight, lower-stakes tasks (metadata generation, draft outlines), and GPU inference for polished client-facing content.

And here’s the uncomfortable truth: NVIDIA pricing dictates much of this ecosystem. Every improvement in agentic content pipelines has to reckon with the economics of GPU cloud costs.

LangChain’s Role: Orchestration, Not Magic

LangChain gets both overhyped and misunderstood. It isn’t an LLM itself, nor a silver bullet. What it does offer is structure. Rather than scattering scripts across several notebooks, teams can easily retrieve pipelines more conveniently.

Key patterns that actually matter in production:

- Retrieval-Augmented Generation (RAG) – Pull in knowledge from an enterprise CMS or a vector database (Pinecone, Weaviate, FAISS) before drafting content. Without this, LLMs hallucinate confidently, which might be tolerable in marketing copy but is fatal in regulated industries.

- Tool Use & Function Calling – A content agent may need to call an API (e.g., generate a chart with Matplotlib, fetch analytics from Google Search Console). LangChain provides structured interfaces so the LLM doesn’t just spit out “Call API here” but actually triggers it.

- Memory Management – Maintaining context across steps. An agent drafting a whitepaper needs to “remember” the outline it generated two steps ago without being re-prompted from scratch.

The nuance: LangChain adds complexity itself. Debugging a misbehaving chain is harder than debugging a prompt. Developers sometimes drown in nested abstractions. So yes, it accelerates structured workflows—but it also locks you into its framework conventions.

Anatomy of a Content Creation Agent

Pulling the three layers together, a typical architecture looks like this:

- Task Planning—The agent receives a request (“Draft a 1,500-word blog on GPU threat detection for CISOs”).

- Knowledge Retrieval – Using RAG to pull internal docs, past publications, or compliance guidelines.

- Drafting with LLM – A large model generates text, with system prompts controlling tone and formatting.

- Fact Verification – A secondary model or rule-based validator cross-checks numbers, names, or citations.

- Refinement Loops—If issues are flagged, the agent re-prompts the LLM or escalates to a human.

- Formatting & Delivery—Output structured as Markdown, HTML, or directly into a CMS via API.

Under the hood:

- Inference runs on GPUs (A100, H100, or cloud equivalents).

- LangChain handles orchestration between steps.

- Custom glue code integrates company-specific data sources, compliance checks, and editorial workflows.

Where It Works and Where It Breaks

No system is flawless. Here’s where content agents shine and where they stumble.

Strengths

- Scale: Generating ten product datasheets overnight is trivial once workflows are stable.

- Consistency: Agents enforce brand tone better than a rotating cast of freelance writers.

- Multi-modality: With GPU acceleration, adding charts, summaries, or even video snippets is feasible.

Weaknesses

- Context Drift: Long chains can lose coherence—an outline step and the final draft sometimes diverge bizarrely.

- GPU Bottlenecks: High concurrency demands crash budgets quickly.

- Human Trust: Editors often spend more time fact-checking AI output than if they had drafted the text themselves, especially in the early months.

One anecdote: a biotech firm tried using content agents for grant proposal drafting. They abandoned it after six months because reviewers kept catching fabricated references. The tech wasn’t useless—it worked well for internal briefing notes—but for externally audited documents, the risk outweighed the savings.

The Hidden Human Layer

Even the most autonomous-sounding agent still needs human scaffolding. Not just in oversight, but in design:

- Prompt Engineering – Subtle changes in phrasing affect tone and structure. One misaligned system prompt can make an agent produce verbose fluff instead of crisp analysis.

- Editorial Integration—Most enterprises already have established CMS workflows. Agents must plug into these, not replace them.

- Feedback Loops—Editors flag recurring issues (say, overuse of passive voice), and those get baked into prompts or post-processing scripts.

This hybrid model—machines draft, humans steer—may persist longer than enthusiasts expect.

The Real Constraint Isn’t Models; It’s Discipline

It’s tempting to think the bottleneck is model performance. In practice, the harder problem is discipline. Discipline in scoping what the agent should and shouldn’t do. Discipline in measuring cost vs. output quality. Discipline in resisting “just let the model handle it” when you actually need hard guardrails.

Some firms get seduced into over-automation: they push for fully autonomous content pipelines and then face backlash when errors slip through. Others under-invest, treating agents as toys, and miss efficiency gains. The sweet spot lies in being ruthlessly pragmatic—deploy agents where consistency and speed matter, and keep humans where judgment and liability live.

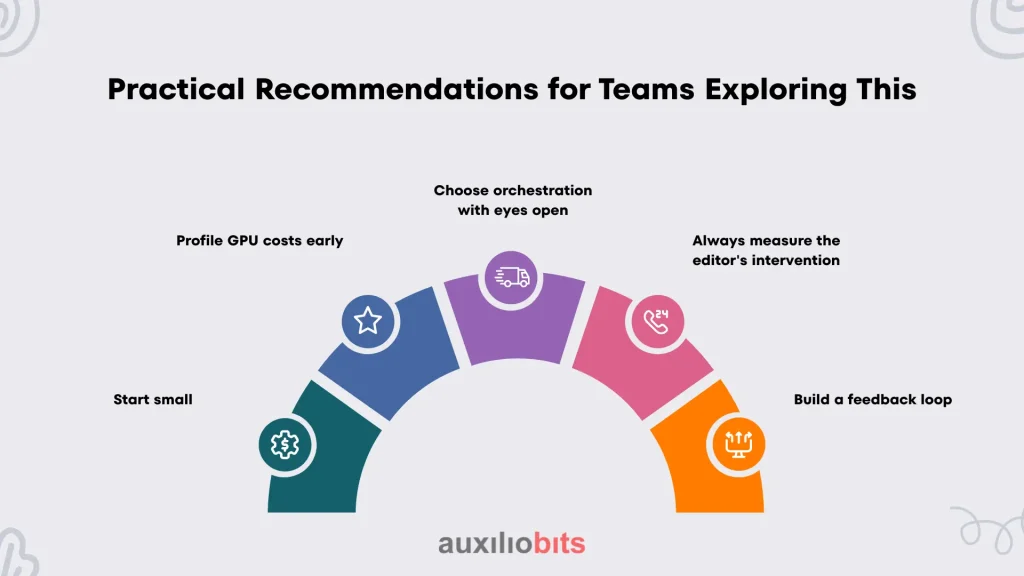

Practical Recommendations for Teams Exploring This

- Start small. Automate one content type (e.g., product FAQs) before trying white papers.

- Profile GPU costs early. Surprise bills from cloud inference can sink projects.

- Choose orchestration with eyes open. LangChain is powerful but not the only option—LlamaIndex, Haystack, or custom Python pipelines may fit better.

- Always measure the editor’s intervention. If humans rewrite 80% of outputs, you haven’t gained efficiency.

- Build a feedback loop. Capture which outputs get accepted vs. rejected and fine-tune accordingly.

Closing Thought

Content tools powered by large language models, GPUs, and management systems are no longer just ideas; they are actively changing how companies create reports, summaries, and marketing materials. However, adopting these tools is not always easy. Balancing engineering choices, costs, and human review decides if these tools help or just add confusion.

The key lesson learned is this: success doesn’t come from the language model alone, or just the GPU power, or the management system by itself. Real value comes from carefully combining, controlling, and fitting them into workflows that real people actually use every day.