Key Takeaways

- GPUs transform threat detection by enabling real-time analysis of high-dimensional, high-volume security data, which CPUs cannot process efficiently.

- Anomaly detection is powerful but noisy—it excels at spotting zero-days and insider threats but struggles with false positives, dynamic baselines, and adversarial evasion.

- Agent-based architectures are essential to make GPU-accelerated detection operationally useful, providing context, prioritization, and adaptive orchestration.

- Costs, complexity, and skills remain barriers—organizations must carefully balance GPU expenses, integration hurdles, and the need for multi-disciplinary teams.

- Successful adoption requires patience and pragmatism—pilot narrow use cases, focus on reducing analyst workload (not just raising detection rates), and build human trust in machine-driven alerts.

Security teams today face a tough challenge. Cyberattacks now happen faster and in greater numbers than before. Traditional security monitoring systems, such as SIEM tools, worked well ten years ago when paired with a dedicated analyst team, but today they struggle to keep up. Data arrives from many sources: endpoints, cloud services, smart devices, and even encrypted network traffic. To manage this flood of information, modern security tools use GPUs, processors originally built for graphics and gaming, to analyze patterns and spot unusual behavior at a scale and speed that CPUs alone struggle to match. This shift is significant and changes how organizations detect cyber threats. It brings big benefits, but also real difficulties.


Why GPUs Became Central to Threat Detection

Most people in security circles understand that GPUs accelerate machine learning. But the way they specifically enable anomaly analysis deserves a closer look.

- Parallelism matters. Threat data comes from many different sources, such as logs, network flows, and events. GPUs can process millions of these data points simultaneously instead of one after another. As a result, a system can compare every user’s behavior against weeks of past activity rather than sampling a small subset, which surfaces unusual actions faster and more accurately even at large data volumes.

- High-dimensional patterns. Detecting cyber threats is not about simple yes-or-no signals. It involves spotting small deviations, like slightly shifted login times, unusual sequences of API calls, or odd pairings of endpoints. Finding these means clustering and scoring hundreds of features at once. Those computations reduce largely to matrix operations, which GPUs execute in parallel far faster than CPUs.

- Training speed directly impacts defense. When a detection model can be retrained in hours instead of days, it can keep pace with attackers who constantly change their tactics. That turnaround is a practical requirement for staying protected, not a theoretical nicety.
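The per-user comparison against past activity can be sketched in a few lines. This is a minimal CPU sketch in plain Python, assuming each user has a history of fixed-length daily feature vectors; the feature names and values in the usage example are hypothetical, and scoring by the largest per-feature z-score is a deliberately simple stand-in for the richer multivariate models production systems use. What it illustrates is structural: every user is scored independently, which is exactly the shape of work a GPU array library (for example CuPy or PyTorch) can spread across thousands of cores.

```python
from statistics import mean, pstdev


def anomaly_score(history, current, min_std=1e-6):
    """Largest absolute per-feature z-score of `current` against `history`.

    history: past feature vectors for one entity (e.g. one per day).
    current: today's feature vector, same length as each history row.
    """
    scores = []
    for i, value in enumerate(current):
        column = [row[i] for row in history]
        sigma = max(pstdev(column), min_std)  # guard against constant features
        scores.append(abs(value - mean(column)) / sigma)
    return max(scores)


def score_all(baselines, today):
    # Each user is scored independently of every other user; that independence
    # is what lets a GPU evaluate all users in parallel.
    return {user: anomaly_score(baselines[user], today[user]) for user in today}


# Hypothetical features: [login hour, API calls, distinct hosts touched]
baselines = {
    "alice": [[9, 120, 3], [10, 130, 3], [9, 125, 4]],
    "bob": [[14, 40, 1], [15, 42, 2], [14, 38, 1]],
}
today = {"alice": [9, 128, 3], "bob": [3, 400, 12]}
scores = score_all(baselines, today)
# alice stays below 1; bob's 3 a.m. burst of API calls scores above 200
print(scores)
```

In a real deployment the inner loop would become a single array operation over a users-by-features matrix, which is where the GPU speedup comes from.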

A good example comes from financial services. A Tier-1 bank running GPU-accelerated behavioral analytics on user sessions cut its mean time to detect insider fraud from 14 days to under 36 hours. Not perfect, but transformative compared with the static rules it used before.

Anomaly Analysis in Practice: What Works, What Doesn’t

The concept sounds elegant: identify deviations from normal behavior and flag them as potential threats. But reality is messier.

Where it shines:

- Unsupervised learning thrives on logs where labeled attack data is scarce.

- Subtle insider threats—an employee gradually exfiltrating documents—emerge as anomalies long before static rules would notice.

- Zero-day exploits, by definition unseen, often create unusual behavior traces detectable by GPU-accelerated models.

Where it falters:

- False positives remain the bane of anomaly detection. Not every deviation is malicious. A VP logging in from a hotel during a board meeting might be legitimate.

- Dynamic baselines drift. Cloud-native workloads spin up and down constantly; what counted as “normal” yesterday may not hold today.

- Attackers can game the system. Low-and-slow data theft that mirrors average patterns may evade even sophisticated anomaly detectors.

That contradiction—high power but high noise—defines much of the current field. In fact, one CISO I spoke with quipped, “We bought GPUs to catch more fish. Now the nets pull up so much kelp, my team spends all day cleaning.” The challenge isn’t only detection; it’s triage.

Agent-Based Architectures: Why Static Models Aren’t Enough

Simply plugging anomaly models into a SIEM doesn’t solve the operational bottleneck. What’s emerging instead is a class of autonomous agents that orchestrate detection tasks. These agents aren’t just models—they’re decision-makers.

- They can prioritize alerts based on contextual signals (was this login tied to a privileged account? Did the anomaly occur during a patch window?).

- They can chain models together, running lightweight heuristics first, then escalating to GPU-heavy analysis when something looks suspicious.

- They can adapt thresholds dynamically, lowering sensitivity during known change events like system migrations.

This agentic behavior is crucial because pure anomaly scores without context drown analysts. By embedding logic into the detection pipeline, agents make GPU acceleration operationally useful rather than just technically impressive.
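The three agent behaviors above (contextual prioritization, model chaining, and adaptive sensitivity) can be combined into a single triage step. The sketch below is hypothetical: `Event`, `triage`, the two stub scoring functions, and every threshold and multiplier are illustrative assumptions, not any product’s API. It runs a cheap heuristic first, calls the expensive model (standing in for GPU-heavy analysis) only when the cheap score crosses a gate, and then adjusts priority using context.

```python
from dataclasses import dataclass, field
from typing import Callable, Tuple


@dataclass
class Event:
    user: str
    privileged: bool       # context: is this a privileged account?
    in_patch_window: bool  # context: did it happen during a known change window?
    features: dict = field(default_factory=dict)


def triage(event: Event,
           cheap_score: Callable[[Event], float],
           deep_score: Callable[[Event], float],
           escalate_at: float = 0.5,
           page_at: float = 0.8) -> Tuple[str, float]:
    """Hypothetical triage agent: cheap heuristic first, deep model only on suspicion."""
    score = cheap_score(event)
    if score < escalate_at:
        return "drop", score          # never reaches the expensive model
    score = deep_score(event)         # stand-in for GPU-heavy analysis
    if event.privileged:
        score *= 1.5                  # privileged accounts get higher priority
    if event.in_patch_window:
        score *= 0.5                  # known change events lower sensitivity
    return ("page_analyst" if score >= page_at else "queue"), score


def failed_login_heuristic(event: Event) -> float:
    # Hypothetical cheap check: fraction of 10 allowed failed logins.
    return event.features.get("failed_logins", 0) / 10


def deep_model_stub(event: Event) -> float:
    # Placeholder for a real model; always returns a moderate anomaly score.
    return 0.7
```

With these stubs, an ordinary account with one failed login is dropped without touching the deep model, a privileged account with eight failed logins is paged to an analyst, and the same activity on a service account during a patch window only lands in the queue.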

Case Example: Cloud Workload Security

Consider a SaaS provider hosting multi-tenant applications. Their pain point: anomalous spikes in east-west traffic inside Kubernetes clusters. Using GPU-accelerated agents, they deployed:

- Lightweight detectors monitoring pod-to-pod communications.

- Agents flagging clusters that exceeded learned thresholds.

- Escalation to GPU-based deep models analyzing encrypted packet metadata for subtle timing irregularities.

- Automatic suppression of alerts tied to maintenance windows.

Result: daily alerts dropped from 20,000+ to under 400, and the ones that remained were higher fidelity. Analysts reported that instead of spending mornings sifting through false alarms, they could actually investigate root causes.

That’s the nuance—GPUs were necessary for precision, but it was the agentic orchestration that made the system usable.
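Two of the case-study steps, learned per-cluster thresholds and maintenance-window suppression, can be sketched as follows. This is a minimal illustration, assuming a threshold of mean plus k standard deviations over past samples; the cluster names, traffic figures, and windows are hypothetical, and the escalation to deep models on packet metadata is deliberately left out.

```python
from datetime import datetime
from statistics import mean, pstdev


def learned_threshold(samples, k=3.0):
    """Threshold learned from history: mean plus k population standard deviations."""
    return mean(samples) + k * pstdev(samples)


def in_window(ts, windows):
    """True if timestamp `ts` falls inside any (start, end) window, end exclusive."""
    return any(start <= ts < end for start, end in windows)


def flag_clusters(history, current, maintenance, now, k=3.0):
    """Return clusters whose current east-west traffic exceeds their learned
    threshold, skipping clusters that are inside a maintenance window."""
    flagged = []
    for cluster, value in current.items():
        if in_window(now, maintenance.get(cluster, [])):
            continue  # suppressed: churn is expected during maintenance
        if value > learned_threshold(history[cluster], k):
            flagged.append(cluster)
    return flagged


# Hypothetical traffic samples (e.g. MB per minute between pods) per cluster.
history = {"payments": [100, 110, 105, 95, 100], "search": [500, 520, 480, 510, 490]}
current = {"payments": 400, "search": 900}
maintenance = {"search": [(datetime(2024, 1, 1, 2), datetime(2024, 1, 1, 4))]}

print(flag_clusters(history, current, maintenance, now=datetime(2024, 1, 1, 3)))
# → ['payments']  (search exceeds its threshold but is suppressed by the window)
```

Learning a separate threshold per cluster is what keeps a naturally busy cluster from drowning out a quiet one whose traffic suddenly quadruples.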

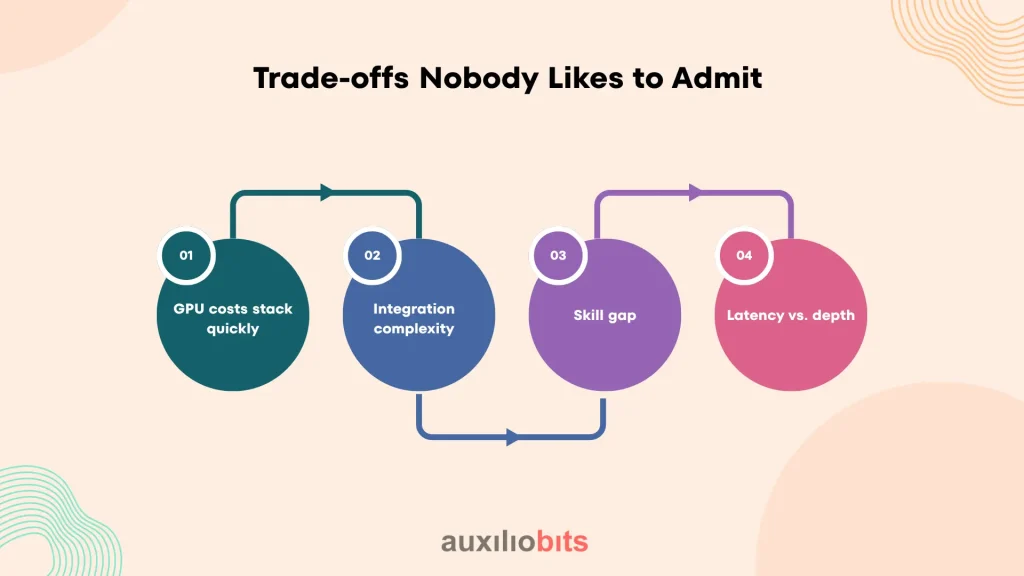

Trade-offs Nobody Likes to Admit

The marketing story often glosses over the rough edges. A few realities worth acknowledging:

- GPU costs add up quickly. Running dense models across every log stream isn’t cheap. Cloud GPU billing can balloon into six-figure monthly spending if left unchecked.

- Integration complexity. Many SOC tools weren’t designed for GPU acceleration. Retrofitting them involves awkward data pipelines, often with brittle connectors.

- Skill gap. Analysts skilled in incident response rarely double as CUDA programmers. Bridging that talent divide requires cross-functional teams, which not every enterprise can staff.

- Latency vs. depth. The more features you analyze, the slower the output. Real-time detection sometimes demands cutting corners in model depth.

It’s no coincidence that early adopters are industries where detection accuracy justifies high costs—banks, defense contractors, and cloud hyperscalers. For mid-market firms, the return on investment isn’t always clear-cut.
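The arithmetic behind six-figure monthly bills is easy to sketch. The instance count and hourly rate below are hypothetical placeholders, not quotes from any cloud provider, and the model assumes cost scales linearly with GPU hours, ignoring reserved-capacity discounts, minimum billing increments, storage, and egress. It also shows why the tiered, escalate-on-suspicion designs described earlier matter: if GPUs only run for the fraction of time spent on escalated data, the bill shrinks roughly in proportion.

```python
HOURS_PER_MONTH = 730  # 8,760 hours per year / 12


def monthly_gpu_cost(instances, hourly_rate, busy_fraction=1.0):
    """Rough monthly bill: instances x hourly rate x hours, scaled by the fraction
    of the month the GPUs actually run (1.0 means always on)."""
    return instances * hourly_rate * HOURS_PER_MONTH * busy_fraction


# Hypothetical inputs: 30 GPU instances at an assumed $5/hour (not a real quote).
always_on = monthly_gpu_cost(30, 5.0)
escalation_only = monthly_gpu_cost(30, 5.0, busy_fraction=0.1)
print(f"always on: ${always_on:,.0f}/month")               # always on: $109,500/month
print(f"escalation only: ${escalation_only:,.0f}/month")   # escalation only: $10,950/month
```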

Emerging Directions

Even with the caveats, progress is undeniable. A few trends are worth watching:

- Hybrid inference. Models increasingly run first-pass checks on CPUs or TPUs, escalating only suspicious slices of data to GPUs. That optimizes cost and speed.

- Self-learning agents. Beyond fixed baselines, some detection agents now self-adjust thresholds based on rolling time windows, reducing false positives in volatile environments.

- Graph-based anomaly detection. Mapping relationships between users, devices, and processes uncovers attack paths invisible to linear models. GPUs excel at graph computations.

- Federated detection. In highly regulated sectors, models trained across multiple organizations without centralizing data are gaining ground—keeping privacy intact while widening detection horizons.
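A self-adjusting threshold based on a rolling time window can be sketched with a bounded queue. This is a hypothetical, minimal version assuming a mean-plus-k-standard-deviations rule over the most recent observations; `RollingThreshold` and its parameters are illustrative, not any vendor’s implementation.

```python
from collections import deque
from statistics import mean, pstdev


class RollingThreshold:
    """Hypothetical self-adjusting detector whose baseline is the most recent
    `window` normal observations, so "normal" follows the workload as it drifts."""

    def __init__(self, window=50, k=3.0, warmup=10):
        self.values = deque(maxlen=window)  # oldest values fall off automatically
        self.k = k
        self.warmup = warmup                # observations needed before judging

    def observe(self, value):
        """Return True if `value` exceeds mean + k*std of the current window.
        Only non-anomalous values are added to the window."""
        anomalous = False
        if len(self.values) >= self.warmup:
            mu, sigma = mean(self.values), pstdev(self.values)
            anomalous = value > mu + self.k * sigma
        if not anomalous:
            self.values.append(value)
        return anomalous


detector = RollingThreshold(window=20, warmup=5)
flags = [detector.observe(v) for v in [10, 11, 9, 10, 12, 10, 11, 50]]
print(flags)  # only the final value (50) is flagged
```

Keeping flagged values out of the window stops a single burst from inflating the baseline. A gradual low-and-slow ramp, however, can still be absorbed step by step, which is exactly the evasion risk noted earlier for anomaly detectors.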

There’s also a cultural angle: SOC teams are slowly shifting from “rule authors” to “model supervisors.” Their job is less about encoding every scenario and more about steering agents, curating training data, and challenging model outputs.

An Important Message

It’s tempting to frame GPU-accelerated anomaly detection as a silver bullet. Vendors often present dazzling benchmarks: 95% detection accuracy, millisecond latencies, and seamless integrations. In practice, it’s closer to messy incrementalism.

- A GPU can spotlight anomalies your team would miss.

- But if workflows aren’t tuned, you’ll drown in more alerts, not fewer.

- And if you don’t invest in human-machine trust—teaching analysts why a model flagged something—the system won’t stick.

The danger isn’t failure. It’s disillusionment. Enterprises that expect instant ROI abandon promising architectures too soon. Those who recognize that GPUs are enablers—not replacements—integrate them thoughtfully, layering operational logic around raw compute.

A Practical Takeaway for B2B Leaders

If you’re evaluating GPU-accelerated detection agents, a sober checklist helps:

- Start with the business case: Is the cost of missed threats higher than the GPU bill?

- Pilot in a narrow domain—say, privileged account monitoring—before scaling across all telemetry.

- Build cross-disciplinary teams: data engineers, SOC analysts, cloud architects. Without that blend, integration efforts stall.

- Pressure-test false positive rates. Ask not just “how many threats did we catch” but “how much analyst time did we waste.”

- Plan for model lifecycle management. A GPU-accelerated model trained six months ago is often obsolete against today’s attacker playbooks.
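The false-positive question in this checklist can be made concrete with two numbers per pilot: precision and analyst hours lost to false alarms. The sketch below assumes a flat average triage time per alert, which is a simplification, and the pilot figures are hypothetical.

```python
def alert_review_cost(true_positives, false_positives, minutes_per_alert):
    """Return (precision, analyst hours spent triaging false positives)."""
    total = true_positives + false_positives
    precision = true_positives / total if total else 0.0
    wasted_hours = false_positives * minutes_per_alert / 60
    return precision, wasted_hours


# Hypothetical pilot week: 50 confirmed threats, 450 false alarms, ~12 minutes each.
precision, wasted = alert_review_cost(50, 450, 12)
print(f"precision {precision:.0%}, {wasted:.0f} analyst hours on false alarms")
# → precision 10%, 90 analyst hours on false alarms
```

Tracking both figures across pilots shows whether a model change actually reduced analyst workload or merely raised detection counts.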

Done right, this isn’t just about speed. It’s about shifting the role of the SOC from reactive log readers to proactive anomaly interpreters. That shift requires patience, but it’s one of the few viable ways to keep pace with adversaries who are themselves automating.

Final Thoughts

Cyber-threat detection agents powered by GPUs are not a panacea, but they are a necessary adaptation to modern attack landscapes. They thrive in high-volume, high-dimensional data contexts, where conventional CPUs struggle to keep up. Yet their value isn’t automatic: it hinges on orchestration, contextualization, and disciplined operational use.

Think of it this way: GPUs give you sharper lenses, but you still need trained eyes behind them.